Ditching 10GbE in favor of InfiniBand: aftermarket buying guide

I was the first among my acquaintances to install a 1 GbE network, and many of them wondered: who even needs 1 GbE? Twenty years later, I am still the first of them to have a 10-gigabit network at home, and although I no longer hope that anyone in my circle will understand me, I finally have the opportunity to go beyond 10GbE inexpensively and get a truly fast network connection at home, in the office or in a test lab.

Cost-effective solutions for deploying a 10 GbE network at home or in a small office are still rare. In data centers and large enterprises, 10-gigabit networks have been around for about 15 years, yet home solutions remain too expensive. Professional 10GBase-T network cards can cost close to $400, and only Broadcom and Aquantia offer solutions for about $100, usually integrated into motherboards (read our comparison of Aquantia AQC107 vs Intel X550-T2). As for switches, a 4-port 10GbE twisted-pair model costs $300-500, and if you need more than 12 ports, be prepared to spend $1500-2000, which is out of the question for most home users. Of course, there are ultra-cheap unmanaged 10GbE switches with SFP+ slots for optics from MikroTik and TP-Link, but with them you lose the main advantage of 10GBase-T, its compatibility with short runs of CAT5e twisted pair, and even that advantage is a dubious achievement (read our article: SFP+ vs 10GBase-T, which standard is better for a 10-gigabit network). So why put up with a limit of roughly 1 GB/s at all?

Let's digress a little and ask ourselves: what speeds do we actually live with today? Five or six years ago, a good SSD delivered 400-500 MB/s when copying backups or large virtual machine files, and it seemed that the 10-gigabit network limit would not be reached any time soon. Today an entirely average NVMe SSD shows sequential speeds of up to 3 GB/s, and there are already drives that reach 4-5 GB/s. If you install such drives as an SSD cache in front of a RAID array, even one built from slow HDDs, array speeds of 3-4 GB/s on cached data are quite realistic. Meanwhile 10GbE is left nervously smoking on the sidelines with its theoretical peak of 1.25 GB/s, which in practice you only approach with jumbo frames (MTU of 9K and above).
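The 1.25 GB/s figure is simply the 10 Gbit/s line rate divided by 8 bits per byte. The minimal Python sketch below makes that arithmetic explicit and compares it with the NVMe speeds quoted above. It deliberately ignores Ethernet, IP and TCP overhead, so real-world numbers will be somewhat lower, and the helper names are purely illustrative.

```python
# Back-of-the-envelope link arithmetic (raw line rates, no protocol overhead).

BITS_PER_BYTE = 8


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a raw link rate in Gbit/s to GB/s."""
    return gbit_per_s / BITS_PER_BYTE


def drive_to_link_ratio(drive_gbyte_per_s: float, link_gbit_per_s: float) -> float:
    """How many times faster a drive is than the link's theoretical ceiling."""
    return drive_gbyte_per_s / gbit_to_gbyte(link_gbit_per_s)


if __name__ == "__main__":
    print(f"10GbE ceiling: {gbit_to_gbyte(10):.2f} GB/s")
    # An average NVMe SSD at ~3 GB/s outruns a 10GbE link 2.4 times over...
    print(f"3 GB/s NVMe vs 10GbE: {drive_to_link_ratio(3, 10):.1f}x")
    # ...but fits within the raw ceiling of a 40 Gbps link (5 GB/s).
    print(f"3 GB/s NVMe vs 40 Gbps: {drive_to_link_ratio(3, 40):.1f}x")
```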

Of course, these are borderline cases in which the hardware runs at full speed and is not held back by software. For example, VMware ESXi deliberately throttles virtual machine migration so as not to disrupt the stability of your infrastructure, and a single VM may sometimes move between two NVMe SSDs at only 20 MB/s. Moreover, with multi-threaded access to data in network shares, be it iSCSI or SMB/NFS, speeds are usually 200-300 MB/s for SSDs and 10-15 MB/s for HDDs. In practice, peak network performance is needed only for backups, for working with large video files, or in HPC setups, which are most likely not our case.

Nevertheless, data centers paid no attention to these limitations over the years, and speeds kept climbing. Companies such as Mellanox (now Nvidia), QLogic, Chelsio and Intel offer solutions at 40, 100 or 200 Gbps. With multi-fiber cabling, 400 and 600 Gbps are already achievable, and in AI and ML servers several interconnect ports together can provide up to 2.4 Tbps of external bandwidth. The fact that small business and home users fell hopelessly behind this high-end network technology was a consequence of the rapid development of Wi-Fi, but I digress. This technological lag actually plays into our hands: what data centers write off as outdated and unnecessary, we can buy cheaply on eBay or Avito, because for us these remnants of a more advanced civilization are not some distant dream but "space" that is already here.

In 1999, the InfiniBand (IB) standard was introduced as an alternative to Ethernet, and Israel's Mellanox, founded that same year, became its leading vendor. Its key feature is remote direct memory access, RDMA. Let me briefly describe the advantages of this technology. Typically, when your computer communicates over an Ethernet network using TCP/IP, the data being sent is copied several times through IP stack buffers on your local computer, the packets travel over the network as frames, and they are then reassembled on the target computer, where the data is again copied several times through IP stack buffers before it reaches the target application. Note that all this manipulation and copying is performed by the CPU on each computer. Network cards offload some of this packet processing in one way or another, but in practice a given offload feature tends to get disabled in the next driver update and then takes years to be fixed, with no guarantee that it will ever work.

RDMA works in a completely different way. An application on the “client” computer initiates a request to the “server” computer running the target application. The data to be sent is already in the client application's memory space, so RDMA copies it directly over the network (InfiniBand) into the server application's memory space. Done! The CPU is not involved: communication is handled entirely by the InfiniBand NIC. Obviously I'm oversimplifying, but that is the whole point of RDMA: it eliminates a lot of unnecessary computation by moving data “from memory to memory” between client and server.
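To make the difference concrete, here is a toy Python model that counts how many times the CPU copies a payload on its way between two applications. It illustrates the idea described above rather than any real kernel or driver: the buffer names are hypothetical, and the assumption of two CPU copies per side on the TCP path is a simplification of "copied several times".

```python
# Toy model of the two data paths; buffer names and copy counts are
# illustrative assumptions, not measurements of a real network stack.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    buffers: dict[str, bytes] = field(default_factory=dict)
    cpu_copies: int = 0

    def cpu_copy(self, src: str, dst: str) -> None:
        """The CPU copies bytes from one buffer into another."""
        self.buffers[dst] = bytes(self.buffers[src])
        self.cpu_copies += 1


def tcp_transfer(payload: bytes) -> tuple[Host, Host]:
    client, server = Host("client"), Host("server")
    client.buffers["app"] = payload
    client.cpu_copy("app", "socket")       # application -> kernel socket buffer
    client.cpu_copy("socket", "nic_ring")  # kernel -> driver/NIC buffer
    # The NIC puts frames on the wire; the receiving NIC fills its ring buffer.
    server.buffers["nic_ring"] = client.buffers["nic_ring"]
    server.cpu_copy("nic_ring", "socket")  # driver -> kernel socket buffer
    server.cpu_copy("socket", "app")       # kernel -> application
    return client, server


def rdma_transfer(payload: bytes) -> tuple[Host, Host]:
    client, server = Host("client"), Host("server")
    client.buffers["app"] = payload
    # The NIC reads the client application's memory and writes it straight
    # into the server application's memory; neither CPU copies the data.
    server.buffers["app"] = client.buffers["app"]
    return client, server


if __name__ == "__main__":
    data = b"x" * 4096
    for transfer in (tcp_transfer, rdma_transfer):
        client, server = transfer(data)
        assert server.buffers["app"] == data
        print(f"{transfer.__name__}: {client.cpu_copies + server.cpu_copies} CPU copies")
```

Both paths deliver the same bytes; the model only shows where the CPU has to touch them.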

InfiniBand was so successful that customers asked Mellanox to implement IP on top of it, which resulted in IP over IB (IPoIB); this work later grew into RoCE (RDMA over Converged Ethernet), which allows RDMA to be used over existing Ethernet connections without recabling.

In short, after 20 years of progress we have a high-speed interconnect that works on the "memory-to-memory" principle over the most common and cheapest fiber. In addition, the secondary market holds a huge stock of 40Gb controllers starting at $20 per card and 18-port switches starting at $100 apiece.

Meanwhile, even used 10GbE equipment costs 3-4 times more than comparable IB gear. Why overpay when, with a little homework, you can cheaply get the fantastic speed of yesterday's most powerful supercomputers?

InfiniBand Glossary:

- VPI - Virtual Protocol Interconnect, a type of connection in which the data transfer protocol can be configured by the user.

- VPI Card is a Mellanox network card that can function as an Ethernet or InfiniBand adapter. On dual-port cards, for example, one port can act as Ethernet and the other as InfiniBand.

- EN Card - Mellanox network card that can only function as an Ethernet adapter.

- RDMA - Remote Direct Memory Access

- IPoIB - IP over InfiniBand, which lets ordinary IP traffic run over an InfiniBand link.

- RoCE - RDMA over Converged Ethernet. Don't be alarmed by the word "converged": it is really just regular Ethernet carrying several kinds of traffic (such as iSCSI or VoIP).

- SFP - Small Form-factor Pluggable, a standard for optical transceivers used in 1 Gigabit Ethernet switches and network cards.

- SFP+ is an enhanced version of SFP, typically used in 10-gigabit networking equipment.

- QSFP, QSFP+ - Quad Small Form-factor Pluggable, a transceiver type for 4-lane links, typically 40 Gbps.

- QSFP14 is an improved version of QSFP with support for speeds up to 56 Gbps.

- QSFP28 is an improved version of QSFP14 with support for speeds up to 100 Gbps.

- DAC is a factory-terminated twinaxial copper cable (twisted pair on steroids) with SFP+ or QSFP connectors on the ends, used to connect equipment up to 5 meters apart.

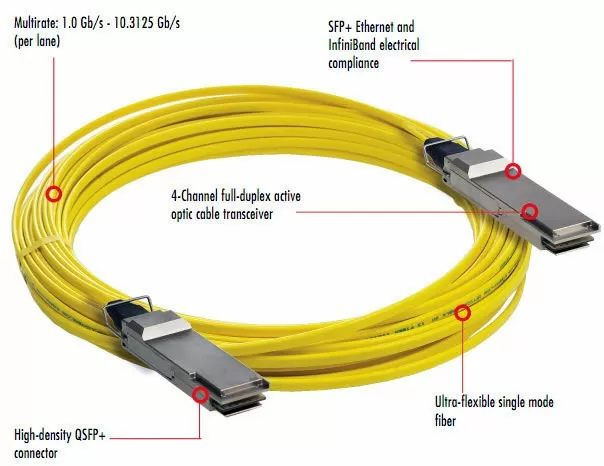

- AOC is an active optical cable with optical transceivers permanently attached at both ends (optical fiber on steroids), for connecting network equipment up to 30 meters apart.

Link speeds by InfiniBand generation (standard 4-lane ports):

- SDR - 10 Gbps

- DDR - 20 Gbps

- QDR - 40 Gbps

- FDR - 56 Gbps

- EDR - 100 Gbps

- HDR - 200 Gbps

All these speeds are available today. The vast majority of InfiniBand (and Ethernet QSFP) cables are 4-lane cables, but 8-lane and 12-lane cables also exist; with them, the maximum theoretical speed is doubled or tripled.
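The gap between nominal link speed and usable bandwidth comes mostly from line encoding: SDR, DDR and QDR use 8b/10b encoding (20% overhead), while FDR and EDR use 64b/66b. The Python sketch below computes rates for any lane count from the commonly published per-lane signaling rates. It leaves out HDR, whose PAM4 signaling and forward error correction don't fit this simple model, and it ignores protocol overhead, so measured throughput will be lower still.

```python
# Effective InfiniBand data rates from per-lane signaling rate and line encoding.
# Per-lane rates are the commonly published ones; HDR is omitted on purpose.
from fractions import Fraction

# generation -> (per-lane signaling rate in Gbit/s, encoding efficiency)
GENERATIONS = {
    "SDR": (Fraction("2.5"), Fraction(8, 10)),
    "DDR": (Fraction(5), Fraction(8, 10)),
    "QDR": (Fraction(10), Fraction(8, 10)),
    "FDR": (Fraction("14.0625"), Fraction(64, 66)),
    "EDR": (Fraction("25.78125"), Fraction(64, 66)),
}


def signaling_rate(gen: str, lanes: int = 4) -> float:
    """Raw bits on the wire per second, in Gbit/s."""
    per_lane, _ = GENERATIONS[gen]
    return float(per_lane * lanes)


def data_rate(gen: str, lanes: int = 4) -> float:
    """Payload bits per second after line encoding, in Gbit/s."""
    per_lane, efficiency = GENERATIONS[gen]
    return float(per_lane * lanes * efficiency)


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"{gen} 4x: {signaling_rate(gen):7.3f} Gbit/s signaling, "
              f"{data_rate(gen):7.3f} Gbit/s data")
    # 8x and 12x links simply multiply the lane count.
    print(f"FDR 12x: {data_rate('FDR', lanes=12):.1f} Gbit/s data")
```

The QDR result of 32 Gbit/s matches the real-world QDR figure mentioned below. Note also that the nominal names in the list above are rounded and mix signaling rates (56 for FDR) with data rates (100 for EDR).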

Today QDR and FDR equipment sells at reasonable prices on the secondary market, while EDR is more expensive. Be sure to distinguish FDR from FDR10: the former provides 54.3 Gbps and the latter 44.8 Gbps. So before buying an FDR card, switch or cable, check the model number and its documentation; a listing that simply says "56 Gb/s" may not be enough. Mellanox has switches that share the same model number, where only the suffix tells you whether they use FDR10 or FDR. Don't hesitate to ask the seller again, and don't be surprised if there is no answer. The real bandwidth of QDR is around 32 Gb/s, so today this standard is far less interesting than FDR.

Which cards are worth buying?

When buying Mellanox NICs on eBay, make sure you buy what you need: VPI cards support both InfiniBand and Ethernet, while EN cards support only Ethernet. Dual-port cards are usually the best choice because they let you connect three PCs directly to each other without a switch. Dual-port cards also allow a PC to sit on an InfiniBand subnet through the first port and on an Ethernet subnet (typically 10 GbE) through the second.
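With three hosts and dual-port cards, the switchless layout is a triangle: each host is cabled to the other two, and each cable forms its own point-to-point subnet. The Python sketch below plans such a layout using the standard `ipaddress` module. The host names and the 10.10.0.0/24 range are hypothetical placeholders, `plan_full_mesh` is a made-up helper, and one /30 per link is just one reasonable addressing choice.

```python
import ipaddress
from itertools import combinations


def plan_full_mesh(hosts, supernet="10.10.0.0/24", ports_per_card=2):
    """Assign a port and a /30 subnet to every host pair (hypothetical helper)."""
    needed = len(hosts) - 1  # a full mesh needs a port towards every other host
    if needed > ports_per_card:
        raise ValueError(f"{len(hosts)} hosts need {needed} ports per card, "
                         f"but each card has only {ports_per_card}")
    subnets = ipaddress.ip_network(supernet).subnets(new_prefix=30)
    next_port = {host: 0 for host in hosts}
    plan = []
    for (a, b), net in zip(combinations(hosts, 2), subnets):
        ip_a, ip_b = net.hosts()  # a /30 has exactly two usable addresses
        plan.append((a, next_port[a], ip_a, b, next_port[b], ip_b))
        next_port[a] += 1
        next_port[b] += 1
    return plan


if __name__ == "__main__":
    for a, port_a, ip_a, b, port_b, ip_b in plan_full_mesh(["nas", "ws1", "ws2"]):
        print(f"{a} port {port_a + 1} ({ip_a}/30) <-> {b} port {port_b + 1} ({ip_b}/30)")
```

Adding a fourth host raises the error, because a full mesh of four would need three ports per card. Keep in mind that an InfiniBand link only comes up when a subnet manager is running, so on a switchless setup one of the hosts on each link has to run one, such as OpenSM.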

Among 56-gigabit adapters, the best choice is the Mellanox ConnectX-3, part number MCX354A-FCBT or MCX354A-FCCT.

Which switch do I need?

Why use these adapters in InfiniBand mode rather than Ethernet? Because you can easily buy a used 18-36 port QDR, FDR10 or FDR InfiniBand switch for $125 to $250, while a comparable 10GbE Ethernet switch will cost 10 times more. Examples include the 40-gigabit 18-port Mellanox IS5023 and the Mellanox SX6015. In other words, running IP over IB through a QDR, FDR10 or FDR switch is cheaper than spending money on 10GbE.

Mellanox switches have very robust, thick steel chassis and noisy 40 mm fans spinning at 15K RPM. Install one at home or in an office, even in a closed server cabinet, and the eerie noise is guaranteed to give you a headache.

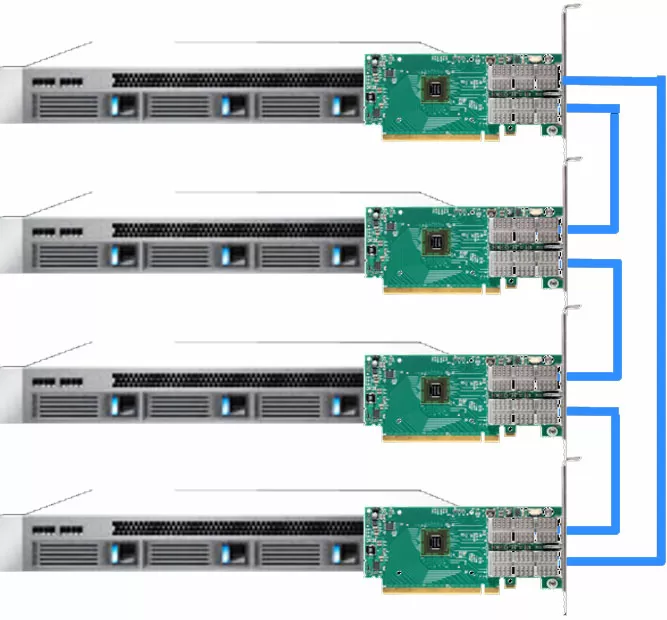

Do I need a switch at all?

The beauty of InfiniBand lies in its daisy-chain networking capability, where each dual-port adapter acts as both transmitter and receiver. This was designed for connecting nodes in supercomputers, and it made it possible to do without switches even in very large server fleets.

Of course, throughput suffers a little, but not so much that it is seriously noticeable in applications.
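Why does throughput suffer at all? In a daisy chain, traffic between hosts that are not direct neighbors has to pass through the hosts in between, which spend link bandwidth on transit traffic. The Python sketch below counts hops for a simple chain and for a ring (closing the chain with each card's second port). It assumes intermediate hosts forward traffic, for example via IP routing over IPoIB; that is an assumption about your setup, not something the text specifies.

```python
from __future__ import annotations

from itertools import combinations


def hops(a: int, b: int, n: int, ring: bool) -> int:
    """Links a packet crosses between host a and host b (hosts numbered 0..n-1)."""
    distance = abs(a - b)
    # In a ring, traffic can go either way around, so take the shorter direction.
    return min(distance, n - distance) if ring else distance


def hop_stats(n: int, ring: bool = False) -> tuple[float, int]:
    """Average and worst-case hop count over all host pairs."""
    counts = [hops(a, b, n, ring) for a, b in combinations(range(n), 2)]
    return sum(counts) / len(counts), max(counts)


if __name__ == "__main__":
    for n in (3, 4, 6, 8):
        chain_avg, chain_max = hop_stats(n)
        ring_avg, ring_max = hop_stats(n, ring=True)
        print(f"{n} hosts: chain avg {chain_avg:.2f} / max {chain_max}, "
              f"ring avg {ring_avg:.2f} / max {ring_max}")
```

With three hosts in a ring every pair is one hop apart, which is why the three-PC triangle is the sweet spot for dual-port cards.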

Which cables should you use?

You won't find anything better for connecting nearby equipment than good old DAC cables. They are sufficient for distances from 1 to 5 meters, and for longer distances, use active optical cables (AOC).

Keep in mind that AOCs are more fragile than regular optical cables: if the cable route has sharp bends, you can easily damage an active optical cable.
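The rules of thumb from this section and the glossary can be condensed into a tiny helper. The distance thresholds come from the text (DAC up to 5 meters, AOC up to about 30 meters); treating tight bends as a reason to prefer transceivers with regular fiber is my reading of the fragility warning above, and the function itself is purely illustrative.

```python
# Illustrative cable chooser based on the distance limits given in the text.

DAC_MAX_M = 5    # twinax copper: up to ~5 m
AOC_MAX_M = 30   # active optical cable: up to ~30 m


def pick_cable(distance_m: float, tight_bends: bool = False) -> str:
    """Suggest a cabling option for a link of the given length in meters."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= DAC_MAX_M:
        return "DAC"
    if distance_m <= AOC_MAX_M and not tight_bends:
        return "AOC"
    # Longer runs, or routes with sharp bends that could damage an AOC.
    return "transceivers + fiber"


if __name__ == "__main__":
    for distance, bends in [(2, False), (12, False), (12, True), (60, False)]:
        print(f"{distance} m, tight bends={bends}: {pick_cable(distance, bends)}")
```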

Which transceivers to use?

If you already have optical cable installed, or are about to install it, transceivers may be the best way to connect hosts via InfiniBand.

QSFP+ LC transceivers for single-mode fiber support speeds up to 40 Gbit/s. This option has its advantages: cheaper cable, relatively wide availability, and the ability to split the signal from one QSFP+ port into 4 SFP+ links if you need to connect four 10 Gbps clients to a single 40-gigabit port.

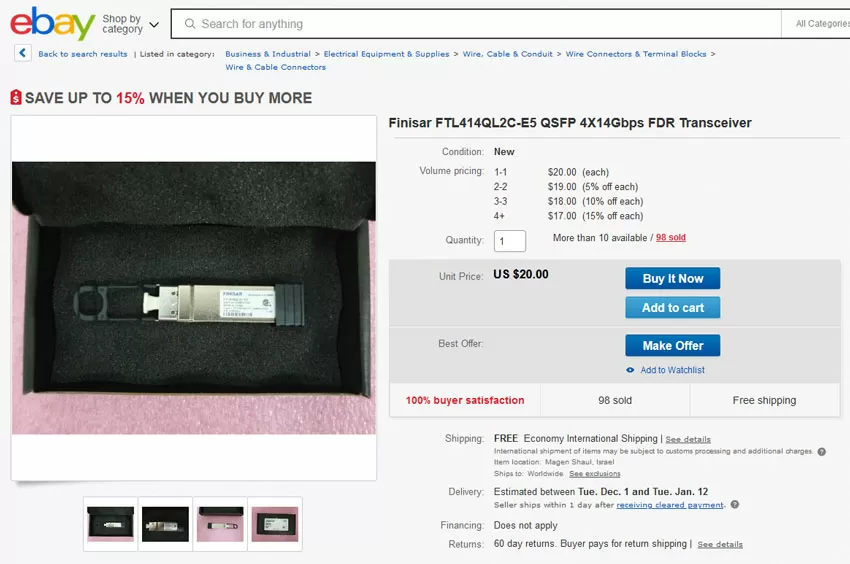

For 56-gigabit connections up to 100 meters, look for QSFP+ MTP/MPO transceivers for multimode fiber. For example, $20 Finisar FTL414QL2C-E5 transceivers let you connect equipment over OM3 fiber at distances up to 30 meters at 56 Gbps.

Conclusion

High speed, low prices on the secondary market, and the ability to do without switches: these are the advantages that make it tempting to abandon 10GbE and focus on InfiniBand.

It's easier and cheaper not to chase 56 Gb/s but to settle for 40 Gb/s and use cheaper switches, transceivers and single-mode cables.

Ron Amadeo

04/11.2020