What to replace Synology with under sanctions. Part 1: Choosing a stable platform

After the events of February 2022, Synology was one of the first companies to "file for divorce" and leave the Russian market. This means no other market is immune either: any vendor can fall under an embargo and leave its users without support, supplies or spare parts. It is better to hedge in advance and reconsider how dependent you are on storage vendors. So what can you do to offset the sanctions risk if Synology is tightly integrated into your infrastructure?

Once our beloved has decided to leave, we will not hang around under her windows begging forgiveness. We will leave too. It is not because she was plain or could not cook; it is simply that our union cannot be saved, and we have to get on with life somehow.

TL;DR: either buy QNAP, or pick a good distribution and configure everything yourself with virtual machines and containers.

Why is it extremely difficult to replace Synology?

Synology's business was built on developing a NAS operating system, which it sold bundled with its own hardware: DiskStation, FlashStation and so on. For this reason, detractors always criticized the company for unreasonably high prices, especially compared with server platforms or self-built computers.

Over two decades, Synology has traveled a path comparable to the development of Windows or macOS: a huge set of services and functions with an emphasis on the graphical shell (WebUI) and simplicity. A sysadmin integrating and maintaining Synology never had to drop into the command line, or indeed think much. Everything is extremely simple and intuitive, and you don't need to read any documentation (incidentally, the documentation was written so that you didn't need to read it). Out of the box you get:

- High Availability;
- a hypervisor with HA support;
- a powerful storage platform built on a modified BTRFS with all its glitches ironed out;
- containers with direct access to storage;
- snapshots with replication;
- cascading video surveillance;
- a powerful backup server;
- fast deployment with easy maintenance through the cloud, even behind a "gray" (non-public, NAT-ed) IP address.

Synology even has dual-controller NAS and iSCSI SAN units for those who need fault tolerance in a single box.

Of course, there are drawbacks too. Synology got so carried away with polishing BTRFS that it missed important storage trends such as object storage. It did not implement file-level parity (as in Snapraid and Unraid) and passed over block virtualization (as in HP 3PAR and Huawei OceanStor). It never built a full-fledged cloud for file collaboration, and it ruined smartphone data synchronization. Its reasoning was that any missing software functions can be installed via Docker or in a virtual machine, and that its own RAID with BTRFS is such a feature that, once you have it, you need not look for anything else. And although I found a way to enable data deduplication in early releases of DSM 6, the function never fully worked.

Looking for a Drop-In Replacement… QNAP, Asustor, Qsan, Terramaster, Seagate

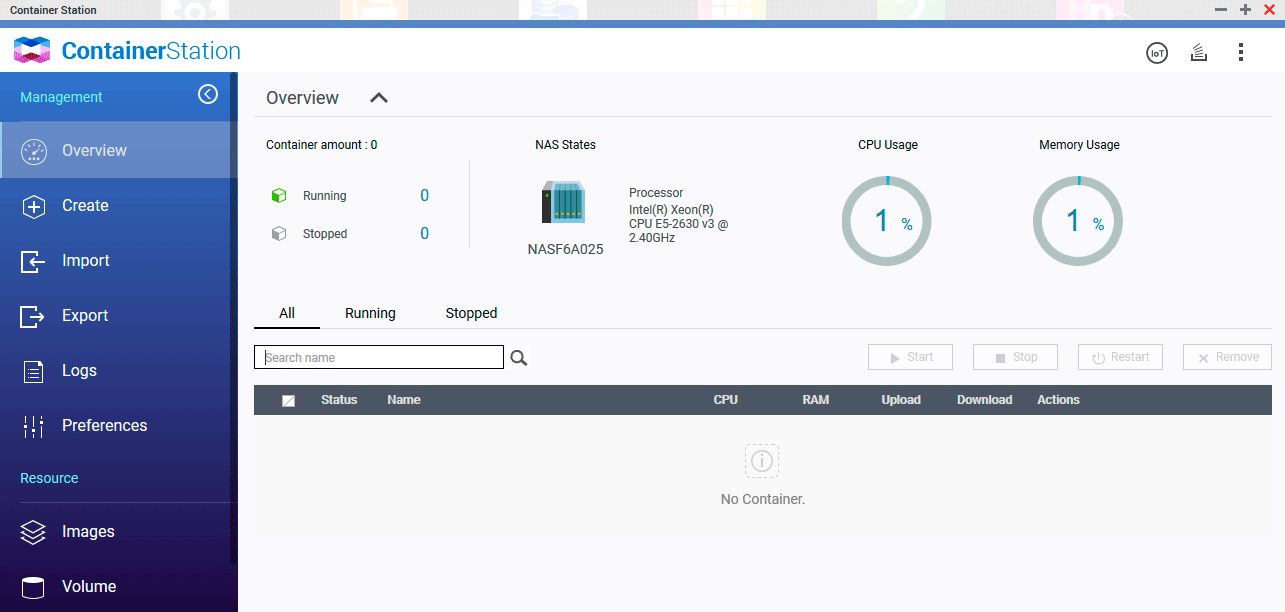

If the goal is simply to swap the box with the Synology logo for a similar one under a different brand, I have good news and bad news. Let's start with the good: of all the business NAS vendors, QNAP is the closest match to Synology. These guys have gone toe-to-toe with Synology. Their software quality slipped a little in 2017-2020, but then they caught up sharply and fixed the shortcomings. Today QNAP can be considered both a drop-in replacement and an equal alternative.

There is container and hardware virtualization, there are built-in snapshot tools, and there is even a separate fork of the OS with ZFS support. Everything is packaged in the same convenient Web UI that never requires the command line. The software is great and keeps evolving and improving. QNAP also offers RAID 50/60 and multi-tier storage (tiering), and on the hardware side it has a wider range of scalable devices for heavy workloads.

The bad news is that QNAP may also "file for divorce" at any moment, wave goodbye and leave our market. Let's hope for the best but prepare for the worst. Besides, under restrictions no one can say whether spare parts will be available tomorrow or how long you will have to wait for them.

Products from companies such as Asustor, Qsan and Terramaster are only sporadically on sale, and they are hopelessly behind Synology and QNAP technologically. They can still be deployed to store backups or for file sharing in a video studio. But as soon as you place any real requirements on the storage layer or file sharing, you will realize you are stuck somewhere around 2008-2009, and there is no point trying to adapt the device to modern processes.

You want to avoid rebuilding your infrastructure ten times, chasing smugglers, or hunting for a box to replace the one that is no longer available. For that, the only right choice is to buy a vendor-neutral server and install and configure free software on it. Later on, this step will also protect you in case of problems with the x86 architecture (processor diversification is discussed in detail in this article). In general, a regular server is always easier to buy: there is a huge used market and dozens of manufacturers, so you have room to maneuver.

While the supply of spare parts is unstable, I recommend, where possible, a tower or 4U/5U server with a standard ATX power supply and a standard ATX/E-ATX/microATX motherboard. Avoid proprietary parts. Among used models, choose HPE ProLiant (Dell as a last resort), because there are plenty of them and spare parts will always be available (read our guide to buying used servers). Among new ones, choose Supermicro or a build from a local vendor. Even choosing SATA over SAS HDDs will make spare parts much easier to find in the future.

Choosing an operating platform for the future storage array

So, the task is set: replace as much of Synology as possible with freely distributed open-source software. The target is a server with:

- a copy-on-write (COW) file system optimized for big data and archives;
- container and hardware virtualization;
- a WebUI;
- and, finally, a torrent client and a convenient file manager.

Let's go.

Option 1. Install a pirated version of Synology

Honestly, this looks like the easiest option: a pirated Synology DSM and installation instructions are easy to find online (search for "Xpenology"). And since our beloved has abandoned us, we have every moral right to treat her the same way. But you should not do this, for the following reasons.

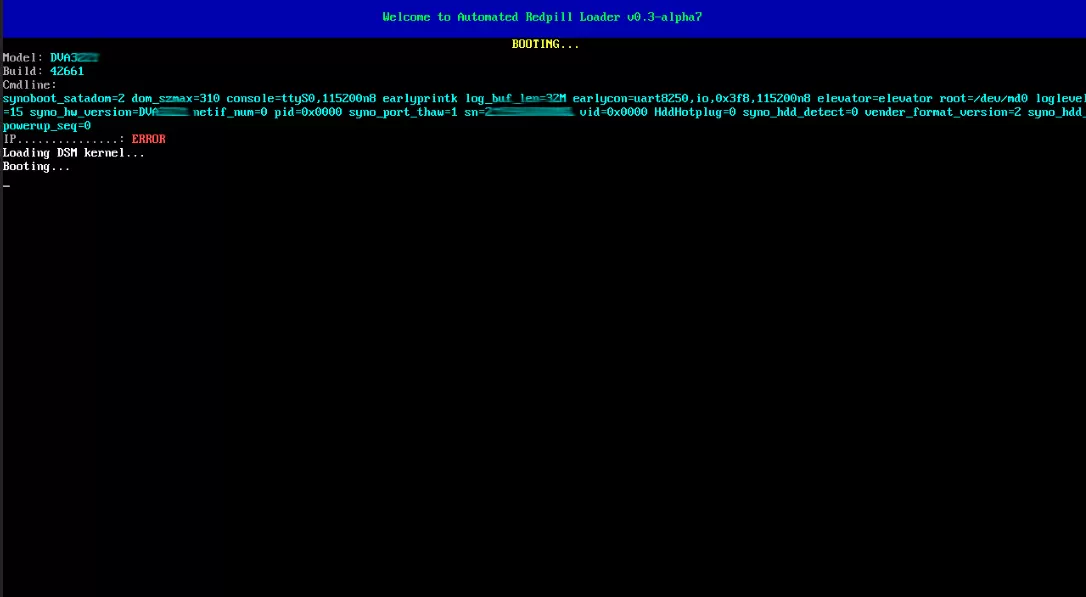

The pirated build was good in the DSM 6.x days. It ran stably for years (as long as you did not update it), and it installed easily both on bare metal and in a virtual machine under VMware ESX (itself often pirated) or Proxmox VE. But time passed, and with the move to DSM 7.1 the idyll collapsed.

- First, Xpenology has problems with AMD processors out of the box: you get neither hardware virtualization nor on-the-fly video decoding.

- Second, DSM 7.1 uses more complex disk numbering than DSM 6, so the pirated build does not work with more than 16 disks. When disks are replaced or moved to another controller, it can get stuck in a reboot loop and never come back.

- Third, starting with DSM 7, Xpenology users began to hit checksum errors on BTRFS volumes. To be fair, owners of licensed devices have also raised this on the Synology forum. But I personally ran into checksum mismatches in DSM 7 installed in a virtual machine with a pirated bootloader, and I had to restore files from backup, which was unpleasant. And that was on server hardware with ECC memory and server-class disks.

- Fourth, the pirated DSM builds available today are limited in the number of CPU cores and the amount of RAM. So, unlike a pirated Windows 10, you cannot use them to the full.

At one time Xpenology was so successful that SMB sysadmins installed it on Supermicro servers and fearlessly put it into production. Since DSM 7.1, that sense of running on anything and running solidly is gone: there is no confidence in data safety and no proper NVMe support. Simply put, the game is not worth the candle, especially since third-party software has not stood still all these years.

Option 2. TrueNAS (ex-FreeNAS) Core or Scale

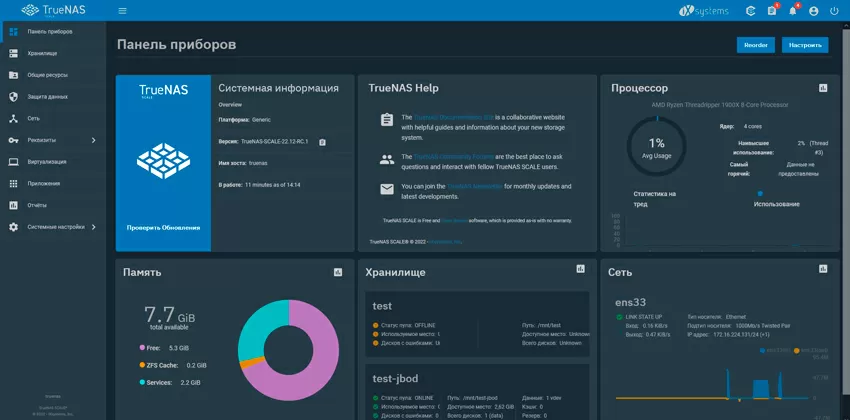

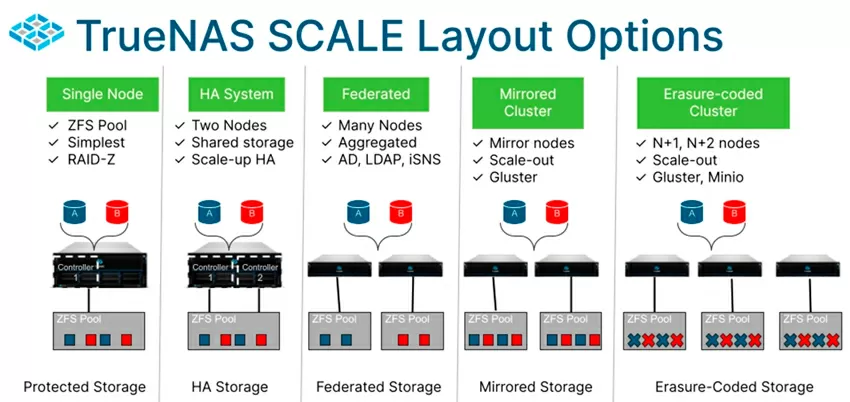

When free storage software comes up, TrueNAS (formerly FreeNAS) is usually the first product mentioned. It is a storage OS built around a single file system, ZFS, and it comes in two branches: TrueNAS Core, based on FreeBSD, and TrueNAS Scale, based on Debian Linux. Since version 11 it has had a more or less modern HTML5 interface, and the Linux version has gained container virtualization.

I have been using TrueNAS for more than 5 years and have tried many times to build a software stack on it. Every time I ran into completely wild problems and abandoned the idea in horror. Let me explain.

TrueNAS Core is FreeBSD, for which hardly anyone writes software. Over the decades of its existence it has not really grown beyond its origins. Even trivial things such as snapshots, replication or redundancy are implemented inconveniently, uninformatively and illogically. Bhyve hardware virtualization is not viable, and there are no Linux containers in any form. TrueNAS Core can only be used as file-sharing storage, ideally run in a virtual machine with an HBA controller passed through to it.

TrueNAS Scale was supposed to fix this, since Debian Linux brings a whole world of free software, years of polished virtualization and a very stable kernel. But the developers bet on delivering their own plugins to TrueNAS Scale: Docker containers tailored for this release. It all went wrong, because they could not build a proper software repository. TrueCharts (that's what it's called) is sometimes unavailable altogether, and some programs will not install at all.

Plain Docker could have saved the situation. Instead, the developers aimed higher, at Kubernetes, implementing native k3s support, and I still have the feeling they went off for a beer somewhere in the middle. The results:

- there is no Docker, and therefore no easy way to install third-party services;
- hardware virtualization is at about the same level as container virtualization;
- the interface is uninformative and confusing.

In the end, TrueNAS Scale is the same storage layer as TrueNAS Core, slightly slower because of ZFS on Linux, with none of the innovations Debian could have provided.

High Availability is implemented through a separate service, TrueCommand, which is a bit inconvenient. Still, compared with the other software here, I have to give credit for fault tolerance being implemented at all. To the developers' credit, several HA algorithms are supported.

You can install TrueNAS Core or Scale in a virtual machine to get a ZFS array on the server, and hand all services and supporting software off to other virtual machines. That setup has three problems:

- VMs and containers will not have direct access to ZFS datasets;
- you will not be able to build different storage layers (RAID, ZFS, an archive layer) on the same HBA;
- you will not be able to manage the NVMe layer properly.

All this can be worked around to some degree by passing individual disks through with RDM/Disk Passthrough and leaving the HBA controller to the hypervisor. But that only makes sense if TrueNAS gives you some truly essential special function, for example something in ZFS that other ZFS-capable distributions lack. You can even manage ZFS on Ubuntu with Cockpit or Webmin as the interface.

I consider TrueNAS a half-dead project that will sooner or later leave the scene, displaced by faster-moving platforms. As SMB/NFS/iSCSI storage this distribution is fine, but nothing more.

Option 3. Openmediavault (OMV)

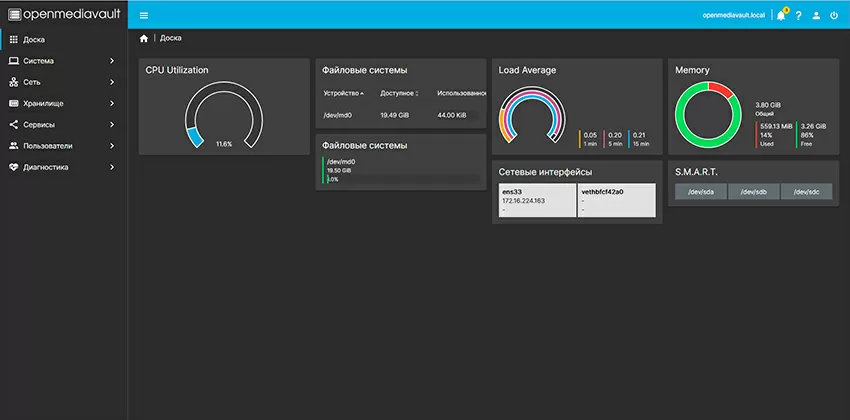

OMV was originally conceived as an alternative to FreeNAS: built on Debian Linux, not tied to ZFS alone, and a bit friendlier to home and SOHO users. The nice thing about OMV is its plugins, including Snapraid support. Suppose you use a RAID controller that can present both logical disks (RAID arrays) and raw disks to the operating system at the same time. Then you can easily build any storage layout:

- RAID on XFS for fast access to large amounts of data;
- software RAID on ZFS, BTRFS or EXT4 for NVMe drives;
- Snapraid for a media library and large archives.

All of this is managed from a web interface that is as old as a dinosaur but more or less alive and working.

The problem with OMV is that almost anything beyond "download a movie and share it over the network" is done via the command line or third-party services. Say you want to run OpenMediaVault in production with the same freedom of action as Synology. You will spend a long, tedious time finishing it: installing third-party software on the server itself and using third-party front ends for virtualization and containers. Eventually you realize you are barely using the OMV interface at all and rely more and more on the CLI and other tools.

I remember OMV because it was the first distribution that started spewing web interface errors right after installation, without any extra settings or packages. That was in 2017... 5 years have passed, and…

Some people suggest installing OMV on top of Proxmox VE to handle everything related to file sharing and storage, but this is nonsense. Take the simplest failover test on OMV: create a software RAID, then disconnect a disk. It ends with either the OS failing to boot or you being unable to recreate the RAID. In general, the programmers of Synology, QNAP, Thecus and even TrueNAS learned many lessons the hard way, and the OMV developers skipped all of them. They simply do not know how a reliable machine should behave.

I've heard of OMV being installed on routers to manage torrents, and I think that's where it belongs. I do not recommend using it on storage devices ever, under any circumstances.

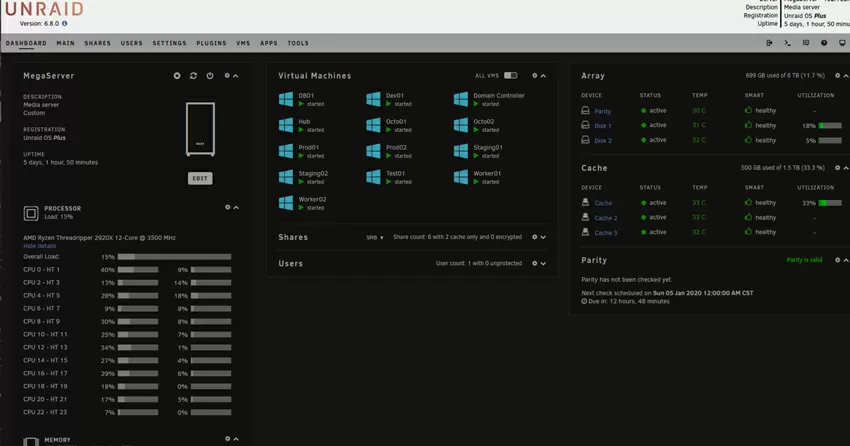

Option 4. Unraid

Unraid is the first and only NAS operating system designed to run third-party applications through Docker. I should say right away that Unraid is a commercial product, but even the top license costs a purely symbolic sum: less than $150. In general I love commercial Linux projects. Once you have had your fill of open source, I think it is better to pay and get a finished product. Unfortunately, that is not the case with Unraid.

Unraid is interesting in many ways, first of all for its array technology, in which parity is calculated not for blocks but for files. What does that mean? The array is built on top of the file systems of individual hard disks formatted with XFS, BTRFS or ReiserFS (oh my...). Each disk is accessible both as part of the array and on its own: you can browse it and read files from it. You can shut down the server, pull an HDD out, connect it to another computer and copy the data off, and it will be readable.

Even if everything collapses and 19 out of 20 hard drives die, the files on the 20th survive. That makes this a truly great technology for large archives, media libraries and "big data". You can add up to 2 parity disks, and then data can be recovered if any 2 drives in the array fail.

For example, say you have 20 disks, 2 of them allocated to parity, and 2 of the 18 data drives die. All the information on the 16 working data disks remains available. You install a couple of new drives in place of the dead ones and rebuild their contents onto them. With hard drives larger than 16 TB, this is a very, very useful technology for "cold" data.

Incidentally, dissimilar disks can be combined in one array: SAS, SATA, SSD, and HDDs of different sizes. For parity, however, you must use the largest HDD you have.
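The recovery arithmetic above can be illustrated with a minimal Python sketch of single-parity protection. It is not Unraid's code. It models each disk as a byte string and pads shorter disks with zeros up to the longest one, which is also why the parity disk has to be at least as large as the biggest data disk. Only the first, XOR-based parity is implemented. Tolerating a second simultaneous failure requires a second, independent parity calculation (typically a Reed-Solomon-style code), which this sketch deliberately leaves out. The function names are hypothetical.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings position by position."""
    return bytes(x ^ y for x, y in zip(a, b))


def pad(disk: bytes, size: int) -> bytes:
    """Model a smaller disk as reading back zeros past its end."""
    return disk + bytes(size - len(disk))


def compute_parity(disks: list[bytes]) -> bytes:
    """Single (XOR) parity across all data disks, sized to the largest one."""
    size = max(len(d) for d in disks)
    return reduce(xor_bytes, (pad(d, size) for d in disks))


def rebuild(disks: list[bytes | None], parity: bytes, lost_size: int) -> bytes:
    """Rebuild the one missing disk (marked None) from parity and the survivors."""
    missing = [i for i, d in enumerate(disks) if d is None]
    if len(missing) != 1:
        raise ValueError("single parity can rebuild exactly one missing disk")
    survivors = (pad(d, len(parity)) for d in disks if d is not None)
    # parity XOR all surviving disks leaves exactly the missing disk's bytes
    return reduce(xor_bytes, survivors, parity)[:lost_size]


if __name__ == "__main__":
    disks = [b"movie-A", b"docs", b"photos!!"]  # three toy "disks"
    parity = compute_parity(disks)
    print(rebuild([disks[0], None, disks[2]], parity, len(disks[1])))  # b'docs'
```

The data disks themselves are never rewritten. Parity lives on its own disk, which is why each surviving data disk stays readable on its own.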

You can put an SSD cache in front of the array by combining one or more SSDs into an LVM pool. You can also create a separate LVM pool for fast access from applications. Alternatively, install a plugin for ZFS support and get L2ARC, deduplication, compression, snapshots and other ZFS perks (in beta).

The Unraid interface is so friendly that you can even pick an icon for your server model. A separate plugin lets you draw a layout plan of the drive cage, so you can easily find which disk has failed. In general, Unraid is recommended by well-known bloggers who like to run a gaming PC on it in a virtual machine with a passed-through graphics card and play over a KVM extender. In their opinion, this shows how cool the platform is and how ready it is for anything.

It would seem like happiness for under $150, but no. Unraid has several fundamental problems that have gone unsolved for years and probably never will be solved.

First, at the storage layer Unraid does not work in real time but via scheduled scripts. When you write data to the array, parity for those files is not calculated, so they remain unprotected. If a disk crashes right after the write, say goodbye to the unsynchronized files. This is because file parity calculations are quite resource-intensive: they run on a schedule, at night, and easily consume 16 or even 24 cores of a server CPU. Even in a task as simple as copying a file to disk over SMB, Unraid consumes more CPU than TrueNAS with deduplication enabled. Here, OS performance problems are solved by installing a more powerful CPU.
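The risk window described above boils down to one question: which files were written after parity was last brought up to date? A hypothetical helper like the one below can answer it. It is not part of Unraid, and it assumes two things: you know the timestamp of the last parity run, and file modification times are trustworthy. Snapraid, used later in this article, has a built-in equivalent, `snapraid diff`, which lists changes since the last `snapraid sync`.

```python
from __future__ import annotations

import os
from pathlib import Path


def unprotected_files(root: str | os.PathLike, last_sync: float) -> list[str]:
    """List files under root modified after the last parity sync.

    last_sync is a POSIX timestamp. Comparing mtimes is a heuristic: tools
    that preserve or reset mtimes can hide changes from this check.
    """
    base = Path(root)
    stale = []
    for dirpath, _dirnames, filenames in os.walk(base):
        for name in filenames:
            path = Path(dirpath) / name
            if path.stat().st_mtime > last_sync:
                # relative POSIX-style path, e.g. "movies/new.mkv"
                stale.append(path.relative_to(base).as_posix())
    return sorted(stale)
```

Everything this function returns is exactly the set of files a disk failure before the next scheduled parity run could take with it.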

Second, the SSD cache also works on a schedule. By default, all data destined for the array is written to the SSD pool and moved to the spinning disks at night. If the caching SSDs run out of space during a write, you get an "out of space" error. And unlike with Snapraid, you cannot write to a disk directly, bypassing the array layer and therefore the configured SSD cache.
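The failure mode described above is easy to model. The toy class below is a simplification, not Unraid's mover. It assumes every write lands on the SSD pool, nothing overflows to the HDDs, and the cache is drained only when the scheduled job runs. Class and method names are hypothetical.

```python
class OutOfSpace(Exception):
    """Raised when a write does not fit into the SSD cache."""


class ScheduledCache:
    """Toy model of an SSD write cache drained only by a nightly job."""

    def __init__(self, capacity_gb: float) -> None:
        self.capacity_gb = capacity_gb
        self.used_gb = 0.0   # data waiting on the SSD pool
        self.array_gb = 0.0  # data already moved to the HDD array

    def write(self, size_gb: float) -> None:
        # Assumption: every new write goes to the SSD pool; nothing spills over.
        if self.used_gb + size_gb > self.capacity_gb:
            raise OutOfSpace(
                f"cache full: {self.used_gb:g}/{self.capacity_gb:g} GB used"
            )
        self.used_gb += size_gb

    def nightly_move(self) -> None:
        # The scheduled job moves everything from the SSDs to the array.
        self.array_gb += self.used_gb
        self.used_gb = 0.0


if __name__ == "__main__":
    cache = ScheduledCache(capacity_gb=1000)
    cache.write(600)
    try:
        cache.write(500)  # same day: 600 + 500 GB does not fit
    except OutOfSpace as err:
        print(err)  # cache full: 600/1000 GB used
    cache.nightly_move()
    cache.write(500)  # succeeds once the mover has emptied the cache
```

The point of the model is that the cache size, not the array size, caps how much you can write between two mover runs.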

Third, the Unraid array itself has two states, started and stopped, which is nonsense for storage in general. In 16 years of testing storage systems, this is the first device I have seen where you can suspend the storage service. Here the button for it sits on the main screen, and you will have to use it constantly. Various settings can only be changed with the array stopped. You can even add a disk to a ZFS or LVM pool outside the main array only while the array is stopped. In that mode the hypervisor and Docker stop automatically, and all the applications running on the machine turn into a pumpkin. If you had pfSense in a virtual machine, congratulations: you are now without Internet. This is especially cruel when you are trying to fix a problem with the array, which is just the moment to be left offline. Virtual machines are also at the "toy" level: there are no snapshots, and replication is out of the question.

Unraid boots from a USB flash drive and ties its license to the drive's ID. The USB drive is read constantly, and this problem has not been solved. If the flash drive dies, the license recovery process awaits you. If it drops off while the server is running (USB drives like to do that), you install a plugin to remount it automatically. There is a good side to this design, though: no matter how much I tortured Unraid, I could never break it so badly that it would not boot. The array failed to come up, more than once, and services failed to start too, but the machine itself always booted.

The most unpleasant thing, though, is that the technology Unraid is built around, its disk array, is unstable. If some client has left a file open on the server, the array will not stop and the server will not reboot. When hard drives drop out or are replaced with new ones, there is a far from zero chance that the array simply will not come up. Your data will remain intact, but getting to it will not be easy. There is also a state in which the array seems to be up and yet isn't: you cannot reconfigure it or do anything with it. So off you go to the support forum looking for a curl command to stop it... oh yes, you have no Internet right now, because you were using a software gateway…

Some basic things, such as folder encryption, are missing entirely, and ACL configuration is troublesome. OS plugins (not to be confused with Docker containers for individual applications) conflict so often that the repository has a dedicated utility for troubleshooting plugin compatibility with the operating system.

Unraid is very interesting and friendly. If the project were only 2-3 years old today, I would bet it would eventually push QNAP and Synology out of the SMB/SOHO multi-disk NAS market, and I would gladly pay for a license. But the project is already 17 years old, and things are still where they were:

- there can be only one array in the system;
- just 2 parity disks per 20 data disks is catastrophically few;
- the array still blocks on start and stop, a problem unsolved for several years;
- services still cannot keep running while the array is stopped.

Unraid is unlikely to grow into something more. With TrueNAS Scale or OpenMediaVault, you can make up for missing functions with third-party software, and Debian glitches get fixed promptly. With closed Unraid, nothing is happening and nothing is expected.

The only Unraid use case I approve of is storing footage in a video studio.

How do we move on?

So one thing is clear: if you used Synology only as a file dump, you are very lucky, because you can switch to almost anything. TrueNAS Core/Scale is, of course, the best choice. In their main function, storage, these distributions are almost flawless: rock-solid, reliable and relatively simple, as long as you ask nothing more of them than storing data. Running TrueNAS in a virtual machine with other software alongside it on the same host is a valid option. It imposes certain restrictions, but you can, in principle, live with them.

Replacing Synology with QNAP treats the symptoms rather than the disease: yes, you get the same level of service, but you also take on the same risks. Everything else, including A-brand products, is a highly niche solution. It is justified only in large enterprise deployments with unoptimized software that places heavy demands on storage. If the time has come to rethink your approach to the storage layer, there is no point running from vendor A to vendor B in the hope that B will last a little longer. It is better to leave proprietary software behind once and for all for the world of freely distributed software. Revise how your solutions are supported, spend time training yourself or your staff, and eliminate sanctions risks completely.

There is no ready-made full-stack software solution comparable to Synology, and none is expected. So we will have to do the work ourselves and build our own software stack, replacing Synology's main services with what we can find in the vast bins of GitHub. We will have to configure things from the command line anyway, launch services via Docker, and bring the web interfaces of different containers together in one place. Given that, let's take a popular Linux hypervisor and build the software we need around it. At least we will have an enterprise-class foundation: a solid track record in business deployments, high-quality code, a huge community and modern technologies.

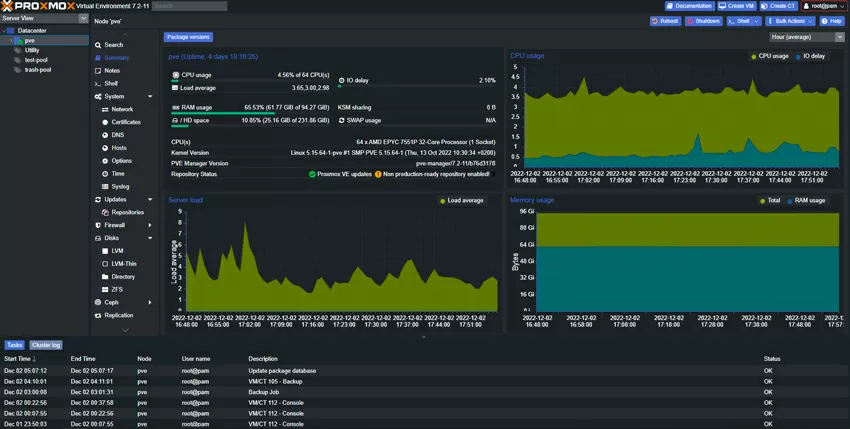

My choice: Proxmox VE

I choose Proxmox VE, and here's why. Although it is a free product, it comes with paid enterprise-level technical support, and that leaves its mark on the whole distribution. Because it is developed by a company rather than a community:

- it is not cobbled together on a shoestring like OMV;
- it is free of the grand ambitions of TrueNAS Scale;
- unlike Unraid, it is designed for 24x7 operation in enterprise environments, where it is actively used.

At the same time, it is distributed free of charge with open source code audited by the community, which sets it apart from QNAP QTS and Synology DSM. The core of Proxmox VE is Debian Linux, which gives access to any software and to any modern hardware installed in the server (unlike VMware ESXi).

The Proxmox VE community often stresses that this distribution is not a NAS solution. In my view, though, even out of the box it has enough to serve as a storage platform. It is a hypervisor with its own storage layer, with the modern ZFS file system front and center, and you can also create LVM volumes and use BTRFS RAID, EXT4 and XFS. There is also:

- SMART disk monitoring;
- Graphite and InfluxDB support for exporting metrics;
- built-in High Availability for fault tolerance;
- Ceph for distributed storage;
- two-factor authentication and API tokens;
- its own backup server;
- even a firewall.

In other words, as a web-managed platform, Proxmox looks mature and functional. Let me put it plainly: after 5 years of working with VMware ESX, I don't understand why I didn't switch to Proxmox VE sooner.

What Proxmox VE lacks out of the box is anything related to file sharing, so you will have to configure all of that yourself. There are no plugins or app stores, so containers will be used instead. Out of the box there is trouble with those too: Proxmox VE does not support Docker. That leaves out a whole world of millions of programs installable with a single command. Instead of Docker, the developers focused on LXC container virtualization. In my view, LXC never really took off anywhere except low-cost VPS hosting, because it has few advantages over hardware virtualization. Docker in SMB/SOHO and Kubernetes in the enterprise have become the de facto container standards, leaving LXC overboard.

A decent free Proxmox-like solution with Docker support does not exist, and without Docker, good luck building your Synology equivalent... You could try to recreate the platform yourself on Ubuntu with the Cockpit control panel, but it lacks Proxmox's level of network and storage management. You could try Webmin, but it lacks the same level of VM and container management. So, however much I dislike multiplying entities, I will have to install Docker manually on Proxmox VE, with Portainer as the management interface. Why put Docker on the host itself rather than in a virtual machine or an LXC container? Installing it in LXC is not recommended due to a performance drop. A virtual machine adds an extra layer of isolation that in my case would simply get in the way.

| | Synology DSM 7.1 | TrueNAS Core 13.0 | TrueNAS Scale 22.12-RC1 | OMV 6.0.46 | Unraid 6.11 | Proxmox VE 7.2 |
|---|---|---|---|---|---|---|
| File system | EXT4, BTRFS | ZFS | ZFS | EXT3, EXT4, BTRFS, F2FS, JFS, XFS; ZFS via plugins | XFS, BTRFS, ReiserFS | XFS, EXT4, BTRFS, ZFS |
| Block-level RAID | Yes | No | No | No | Only for LVM pools (cache and VMs) | No |
| File-level RAID | No | No | No | Yes, with the Snapraid plugin | Yes | No |
| Encryption | Volume-level | Volume-level | Volume-level | No | Disk-level | Via CLI for datasets, volumes and disks |
| Plugin quality | Excellent | Nightmare | Nightmare | Average | Good | N/A |
| Virtualization quality | Excellent | Nightmare | Good | N/A | Average | Excellent |
| Native Docker support | Excellent | N/A | Kubernetes | Damn bad! | Good | N/A |
| Native SMB/NFS/iSCSI/Rsync support | Excellent | Excellent | Excellent | Good | Average | N/A |
| HA support | Excellent | Good | Good | N/A | N/A | Excellent |
| Native distributed FS support | N/A | N/A | N/A | N/A | N/A | Ceph |
| OS customization | N/A | N/A | Good | Good | Damn bad! | Excellent |
| Widely used in business for 24x7 services | Yes | Yes | Yes | No | No | Oh, yes! |

With Synology you could not really do storage planning, and there was little point: create a few pools, carve them into volumes, and that's it… With Proxmox VE we have real freedom of choice.

Storage planning

At the hardware level, I found nothing better than installing Proxmox on a RAID-1 (ZFS mirror) of two 128 GB SSDs. These can be NVMe or SAS/SATA drives, not necessarily high-end. They will hold only the OS itself plus storage for VM ISO images and container images. The workload here is mostly reads, so both TLC and QLC drives will do, even without extra cooling.

For the high-performance layer used by virtual machines and containers, you have two options:

- U.2 PCI Express drives, if the chassis has a suitable drive cage;
- M.2 SSDs, which are convenient to install on bifurcation cards that connect 4 drives to a single PCI Express x16 slot. The motherboard must support splitting the slot into x4/x4/x4/x4.

Such cards are quite cheap since they carry no controller chips, and the NVMe RAID can be built with ZFS, which is native to Proxmox. This layer is also the place for encrypted volumes holding users' personal data.
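The bandwidth each drive gets on such a card is simple arithmetic. The sketch below assumes the motherboard splits the x16 slot into four x4 links. It uses the standard PCIe 3.0 and 4.0 line rates (8 and 16 GT/s per lane) with 128b/130b encoding. The results are theoretical per-direction maxima; real NVMe throughput will be lower because of protocol overhead. The function names are illustrative.

```python
LINE_RATE_GT_S = {3: 8.0, 4: 16.0}  # PCIe 3.0 and 4.0 transfer rates per lane
ENCODING_EFFICIENCY = 128 / 130      # 128b/130b line encoding


def lane_gbytes_per_s(gen: int) -> float:
    """Theoretical per-lane, per-direction bandwidth in GB/s."""
    return LINE_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def per_drive_gbytes_per_s(gen: int, slot_lanes: int = 16, drives: int = 4) -> float:
    """Bandwidth each drive gets when a slot is bifurcated evenly."""
    if slot_lanes % drives:
        raise ValueError("the slot's lanes must split evenly between drives")
    return lane_gbytes_per_s(gen) * (slot_lanes // drives)


if __name__ == "__main__":
    for gen in (3, 4):
        print(f"PCIe {gen}.0 x4 per drive: {per_drive_gbytes_per_s(gen):.2f} GB/s")
```

This prints about 3.94 GB/s per drive for PCIe 3.0 and 7.88 GB/s for PCIe 4.0. Either way, each M.2 drive keeps a full x4 link, so a passive bifurcation card does not throttle it the way a shared switch chip might.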

Proxmox has nothing against RAID controllers. With a modern adapter such as an Adaptec Series 7 or Series 8, you can enable the "RAID (Expose RAW)" mode. You then combine some drives into a hardware RAID 5 with caching and hot swap, which gives very high linear write speed on HDD volumes of practically any size. The remaining drives are exposed to the operating system as-is (so-called RAW mode) and managed in software. To format the hardware RAID's logical disks, you can use XFS, the fastest option on top of multi-disk arrays. To monitor the controller, install arcconf, megacli or another native utility for your RAID controller on the host. I am not a ZFS zealot. I believe there is room today for both software-defined and hardware storage, especially since modern RAID controllers offer flexibility in choosing and expanding disk configurations.
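When sizing the hardware RAID 5 layer (and the RAID-1 boot mirror mentioned above), usable capacity follows the standard formulas. A mirror gives you the capacity of its smallest member. RAID 5 gives (n - 1) times the smallest member, since one drive's worth of space holds parity. Below is a minimal sketch with a hypothetical function name. It ignores controller metadata and file system overhead, so real numbers will be slightly lower.

```python
from __future__ import annotations


def usable_capacity_tb(level: str, drives_tb: list[float]) -> float:
    """Usable capacity of a simple RAID set, limited by its smallest member."""
    n = len(drives_tb)
    smallest = min(drives_tb) if drives_tb else 0.0
    if level == "raid1":
        if n < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return smallest  # every drive holds a full copy
    if level == "raid5":
        if n < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (n - 1) * smallest  # one drive's worth of space goes to parity
    raise ValueError(f"unsupported RAID level: {level}")
```

For example, eight 16 TB HDDs in RAID 5 yield 112 TB, and mixing a 4 TB drive into a set of 8 TB drives drags every member down to 4 TB. That is one more argument for buying identical disks for the hardware array.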

For the archive layer holding documents and media files, I choose the Snapraid + MergerFS combination. This is a fairly common pairing that makes an array fault-tolerant through file-level RAID with dedicated parity drives, similar to Unraid. It suits only rarely modified data. It lets you work with a large number of disks as a single volume. In an accident, it can survive the loss of up to 6 disks (with 6 parity disks) with full data recovery. It can even recover onto fewer drives, that is, shrink the array.
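The two halves of this combination do different jobs. Snapraid keeps parity on dedicated disks. MergerFS presents the data disks as one pool and decides which disk each new file lands on. The hypothetical sketch below is not code from either project. It checks a proposed layout against the commonly cited Snapraid rule that every parity disk must be at least as large as the largest data disk. It also mimics MergerFS's `mfs` ("most free space") create policy when choosing a disk for a new file. Real MergerFS filters disks with its `minfreespace` option rather than by file size, so treat the placement logic as an approximation.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_PARITY_DISKS = 6  # Snapraid supports up to six parity levels


@dataclass
class Disk:
    name: str
    size_tb: float
    free_tb: float = 0.0


def check_layout(data: list[Disk], parity: list[Disk]) -> list[str]:
    """Return problems found in a proposed Snapraid layout (empty list if none)."""
    problems = []
    if not 1 <= len(parity) <= MAX_PARITY_DISKS:
        problems.append(f"need 1-{MAX_PARITY_DISKS} parity disks, got {len(parity)}")
    largest = max(d.size_tb for d in data)
    for p in parity:
        if p.size_tb < largest:
            problems.append(f"{p.name} is smaller than the largest data disk")
    return problems


def pick_disk_mfs(data: list[Disk], file_tb: float) -> Disk:
    """Pick the data disk with the most free space that can hold the file."""
    fits = [d for d in data if d.free_tb >= file_tb]
    if not fits:
        raise OSError("no disk in the pool has enough free space")
    return max(fits, key=lambda d: d.free_tb)


if __name__ == "__main__":
    data = [Disk("d1", 8, 1.5), Disk("d2", 12, 4.0), Disk("d3", 16, 2.0)]
    parity = [Disk("p1", 16), Disk("p2", 16)]  # two parity disks: survives 2 failures
    print(check_layout(data, parity))  # []
    print(sum(d.size_tb for d in data))  # 36 (TB usable in the pool)
    print(pick_disk_mfs(data, 0.05).name)  # d2
```

Because parity sits on its own disks, the usable pool capacity is simply the sum of the data disks. The number of parity disks directly equals the number of simultaneous disk failures the archive can survive.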

In total, by choosing Proxmox VE as the platform, I get:

- support for hardware RAID controllers

- different file systems for different tasks

- a fault-tolerant boot volume

- an NVMe array

- deduplication, snapshots and compression (with a choice of algorithms) at the ZFS file system level

- the ability to create encrypted volumes within ZFS

- support for snapshots and replication

- enterprise-class hardware virtualization

- Docker + LXC container virtualization with direct access to the storage layer and hardware

- support for a wide range of modern and outdated (!) hardware

- a completely free software stack

- the ability to grow the storage subsystem almost indefinitely

- a modern web interface for platform management

This is incomparably more than I could get even from Synology's heavy business-class servers. What I don't get at this stage:

- SSD caching for any array type other than ZFS;
- cloud management for reaching a server behind the provider's NAT;
- a pretty desktop with changeable wallpapers;
- smartphone apps.

No matter, we'll fix all of that in the next part.

Michael Degtjarev (aka LIKE OFF)

02/12.2022