What can replace Synology under sanctions? Part 2: Choosing the software

Installing applications, configuring services

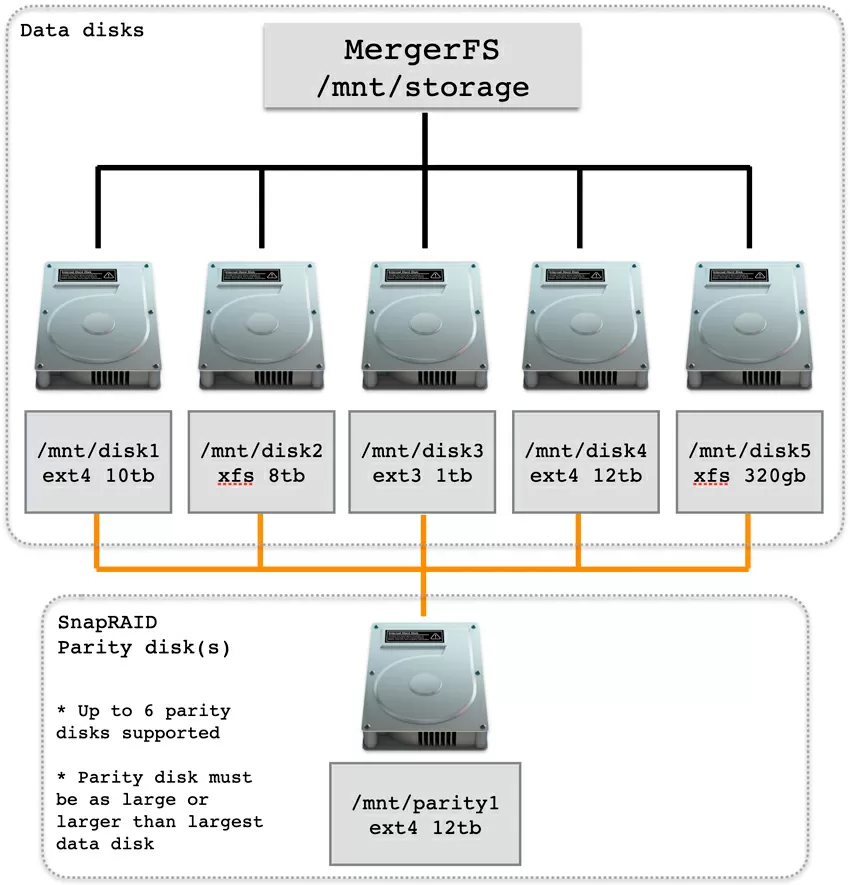

Having planned the storage, I move on to installing the software. Docker is already installed, so now I will add MergerFS and SnapRAID support for the archive data array. As an example, let's take 8 HDDs that will hold the files. In the Proxmox web UI I format them as ext4 but do not add them as storage for the hypervisor. At this stage I choose mount points under the separate directory /mnt/pve: 7 of the hard drives get the matching names media-disk-1, media-disk-2 ... media-disk-7, and the 8th drive is reserved for SnapRAID parity and mounted in the same directory as disk-parity-1. Note that Proxmox VE does not write these mount points to /etc/fstab, so if you pull one of the disks out while the server is powered off, the machine will still boot and keep working, the archive volume will be available, and the data on the remaining disks will be preserved.
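As a rough intuition for what the dedicated parity disk buys us, here is a minimal Python sketch of single parity using plain XOR. It is a conceptual illustration only, not SnapRAID's actual algorithm or on-disk format; the short byte strings stand in for whole disks, and the function names are made up for this example.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover the single missing data block from the survivors plus parity."""
    return xor_blocks(list(surviving_blocks) + [parity])


# Three toy "data disks" of equal size (hypothetical contents).
data_disks = [b"movie-01", b"photo-02", b"music-03"]
parity_disk = xor_blocks(data_disks)

# Pretend the second disk died and rebuild it from the remaining two plus parity.
survivors = [data_disks[0], data_disks[2]]
recovered = rebuild_missing(survivors, parity_disk)
print(recovered)
```

With one parity disk, any single data disk can be rebuilt this way; losing two disks at once would need a second parity level.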

I install the MergerFS package not from the repository but from the developer's page on GitHub, since that version works better under Debian. Yes, unlike Synology, where everything was done for us, here we have to fuss with package versions.

MergerFS has several policies for distributing newly written files across the disks' directories. For example, it can spread files randomly across the disks, or fill one disk first and then move on to the next. It does not split the files themselves into parts, so if the array is assembled from 1 TB hard drives, you will not be able to write a 1.5 TB file to it even if there are 10 of them. All the parameters with which MergerFS combines physical disks into a logical volume are set at mount time in /etc/fstab, so I run the following command from the command line:

nano /etc/fstab

and add the following line to the opened file:

/mnt/pve/media-disk* /storage/media-merger fuse.mergerfs defaults,nonempty,allow_other,use_ino,cache.files=partial,moveonenospc=true,cache.statfs=60,cache.readdir=true,category.create=msplfs,dropcacheonclose=true,minfreespace=5G,fsname=mergerfs 0 0

As you can see, the /mnt/pve/media-disk* parameter at the very beginning means that all available disks whose names start with media-disk are merged into the shared file system. It does not matter how many of them are present at boot, 5, 6 or 10: if any of them fails, the system will still boot and the shared volume will be mounted at /storage/media-merger. Note that disk-parity-1 is not included here. Run mount -a and check that the free space in /storage/media-merger equals the sum of all the disks included in the array.
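To make the two capacity rules concrete (the pool's free space is the sum of the disks, while a single file must fit on one disk), here is a small Python sketch. The helper names are hypothetical, and treating minfreespace as a simple "skip disks below this threshold" filter is my simplification of how MergerFS picks a disk for new files.

```python
import glob
import shutil

GIB = 1024 ** 3
TB = 10 ** 12


def pool_summary(free_per_branch, min_free_space=5 * GIB):
    """Return (total free bytes, upper bound for a single new file).

    Files are never split, so one file can be at most as large as the free
    space on the emptiest disk that is still eligible for new files.
    """
    total_free = sum(free_per_branch)
    eligible = [free for free in free_per_branch if free >= min_free_space]
    largest_file = max(eligible, default=0)
    return total_free, largest_file


def read_branch_free_space(pattern="/mnt/pve/media-disk*"):
    """Read the free space of every mounted disk matching the glob pattern."""
    return [shutil.disk_usage(path).free for path in sorted(glob.glob(pattern))]


# Toy numbers from the text: ten empty 1 TB disks.
total, largest = pool_summary([1 * TB] * 10)
print(f"pool free space: {total / TB:.1f} TB")
print(f"largest single file: {largest / TB:.1f} TB")
print("1.5 TB file fits:", largest >= 1.5 * TB)
```

On the real server, `pool_summary(read_branch_free_space())` should report a total close to what df shows for /storage/media-merger.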

Recommendations for setting up the MergerFS + SnapRAID bundle, along with a detailed description of how everything works, can be found here.

Containers: LXC and Docker, what's really the difference?

Proxmox, unlike Synology DSM, supports LXC container virtualization but has no native Docker support. We already dealt with the second issue a couple of paragraphs above, so now let me say a few words about LXC in case you have not yet encountered this type of virtualization. There are many articles about LXC on the Internet, and most of them leave it unclear why LXC is needed when Docker exists. For our case, it is all quite simple.

Docker gives you a container as a ready-made application, with a ready-made set of presets and packages. Not every useful program is packaged for Docker or can work fully inside it; for some, Docker virtualization is contraindicated, and others come with installation instructions only for the Debian and Red Hat families. Any changes made inside a container are lost when it is recreated from its image, so changing the installed packages is not impossible, but it is a chore.

It is much easier to launch an LXC container and, in 10 seconds, get the equivalent of a virtual machine, log in via SSH and install the software you need by hand. The container has its own IP address, so you do not have to bother with exposing external ports or setting up a reverse proxy. LXC containers can be snapshotted and backed up, preserving their state and allowing a quick rollback to earlier versions. In general, a program can be quickly tested in LXC and then deleted, repackaged for Docker, or left in LXC as it is. Shared folders from the host file systems can be passed into an LXC container just as in Docker (at the cost of losing the snapshot function).

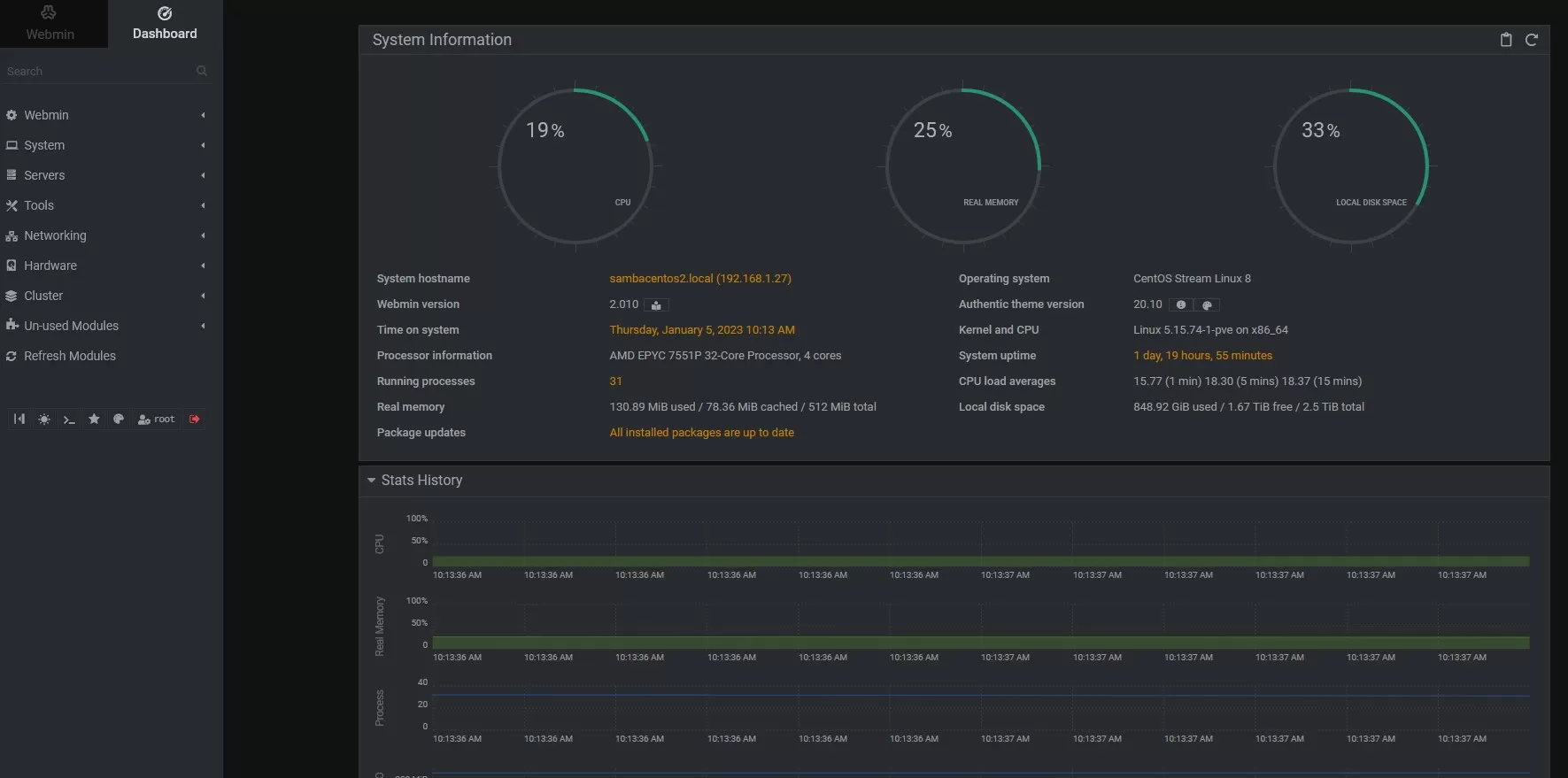

For example, if you need to install Samba, NFS and iSCSI servers, then of all the possible ways the easiest for me was an LXC container with Debian + Webmin. The container settings in Proxmox even have Samba and NFS options that need to be ticked when creating the container.

During the setup I tried a couple of hundred programs as replacements for the built-in Synology DSM services. I will not describe the installation process, only what I chose and why.

Why not ownCloud/Nextcloud?

Overall, Nextcloud is perhaps the closest thing to an all-in-one replacement for Synology services, and if your focus is a space for collaborating on documents, you should start with it. You will get very cluttered software, but with single sign-on for users, good support and a pleasant interface. However, like any other "Swiss Army knife", Nextcloud is riddled with flaws and lacks functions that should be there by default. For example, Nextcloud keeps user notes in plain text in a .txt file, without any protection, and there is no native mobile client for them at all. This happens at every step, so I am of the opinion that it is better not to slide into an all-in-one solution but to pick services granularly for each task.

What do I choose to replace Synology services, and why?

Samba/NFS/iSCSI: without further ado, the easiest way is to set up a CentOS LXC container and configure the file services there, passing the disk volumes into the container and configuring user access rights inside it.

To manage via the Web UI, you can use Webmin.

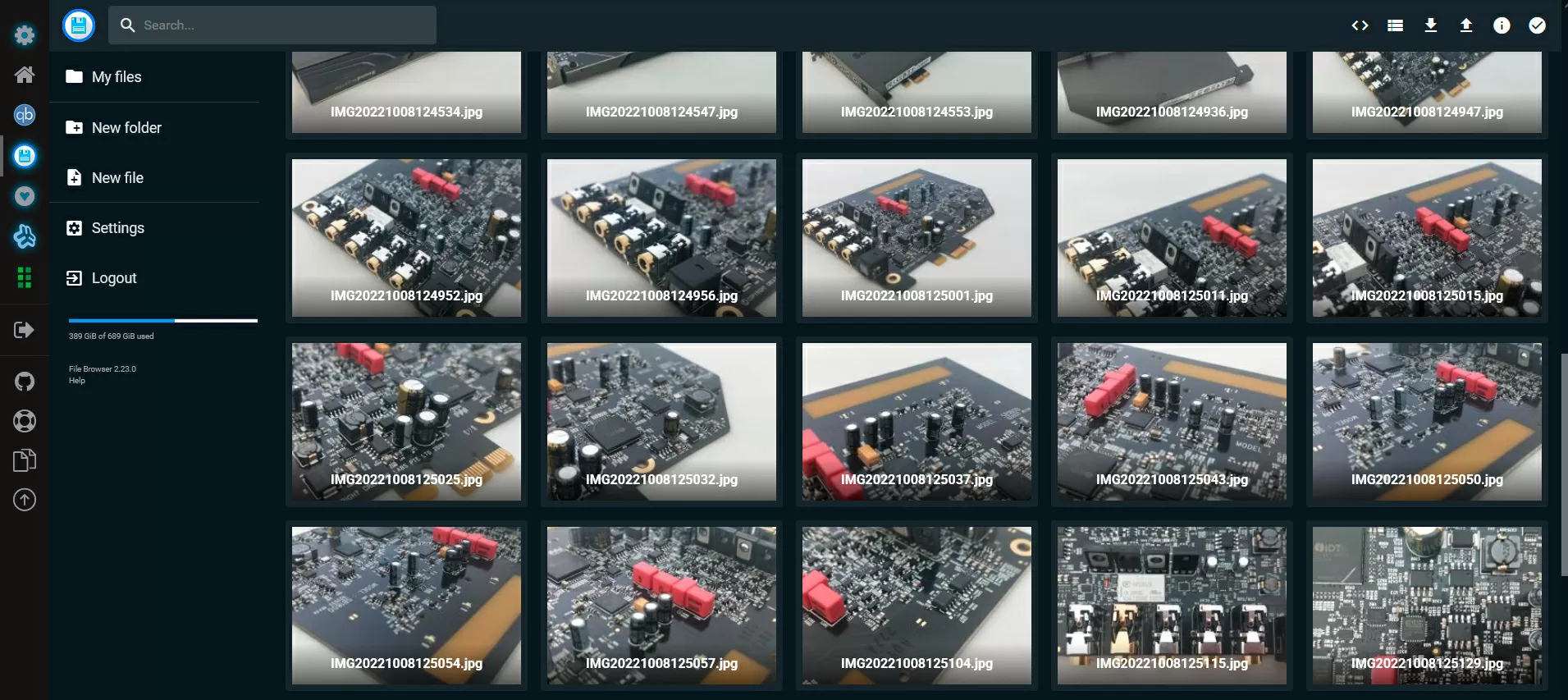

File browser: this is crucial for me because I need direct access to the contents of shared folders. Filebrowser deserves attention for its simplicity, and FileRun for its sophistication (it even supports integration with Elasticsearch for file search). The latter has licensing complications and a trash bin that cannot be disabled, so removing unneeded junk takes two steps: first you delete the file itself, then you empty the trash.

This can cause the volume to overflow, so I abandoned FileRun. Filebrowser is installed via Docker, and it is better to pass shared folders into it through symbolic links (symlinks). In that case, if a folder is unavailable when the server boots (for example, because it is encrypted with a password), you will not need to restart the Filebrowser container after unlocking it.
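The reason symlinks help can be shown with a small Python sketch: a link created while its target is still missing simply starts working once the target appears, with nothing to restart. The directory and file names are hypothetical, and the sketch only shows the symlink behaviour itself; how the link's target path is made visible inside the container is a separate matter that it does not cover.

```python
import os
import tempfile


def dangling_link_demo():
    """Show that a symlink created before its target exists works later."""
    with tempfile.TemporaryDirectory() as root:
        target = os.path.join(root, "locked-share")       # not unlocked yet
        link = os.path.join(root, "browser-root", "share")
        os.makedirs(os.path.dirname(link))
        os.symlink(target, link)                          # link to a missing target

        visible_before = os.path.exists(link)             # follows the link
        os.makedirs(target)                               # the share "appears"
        with open(os.path.join(target, "movie.mkv"), "w") as handle:
            handle.write("demo")
        visible_after = os.listdir(link)                  # read through the link
        return visible_before, visible_after


print(dangling_link_demo())
```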

Streaming a media library to a TV: this is a problem, since mainstream media servers are built around catalogs by genre, rating and so on. If you just need to show the contents of folders with movies on the TV, you have to choose between a buggy Gerbera and a resource-hungry Serviio. The latter comes in paid and free versions, and the free version is quite capable of replacing Synology Media Server. For cataloging your media library, Emby is the better choice. Serviio and Emby can run side by side in Docker, giving you two views of the same home media library: a simpler one and a more elaborate one. As for Plex, I never took to it, however many times I tried to start using it.

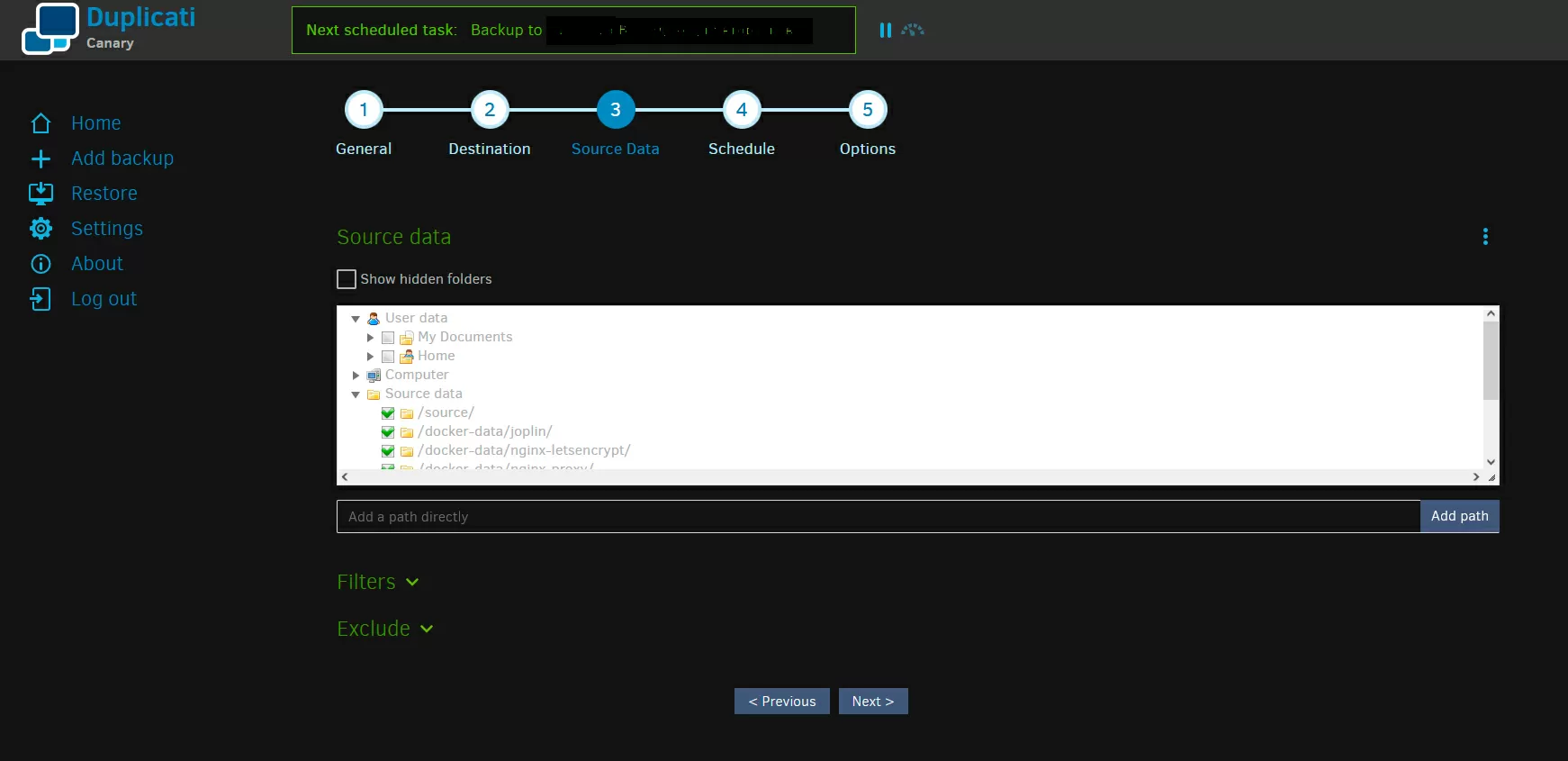

Backups of the server itself to the cloud or to a third-party server. You may be surprised, but something as simple as backups is a pain in the modern world of free software. The closest thing to Synology Hyper Backup is Duplicati, which is in perpetual beta. Written in C# for .NET, on Linux it runs through the Mono runtime, which is why it devours CPU resources; to avoid this you need to install the Canary builds, which are not even billed as alpha but are rawer still.

On the plus side, the program has a huge list of supported back ends, a more or less decent web interface and support for every conceivable protocol, including FTP for backing up to routers with an external HDD and S3 for distributed file storage such as Storj. Like Hyper Backup, it cannot run two backups at the same time.

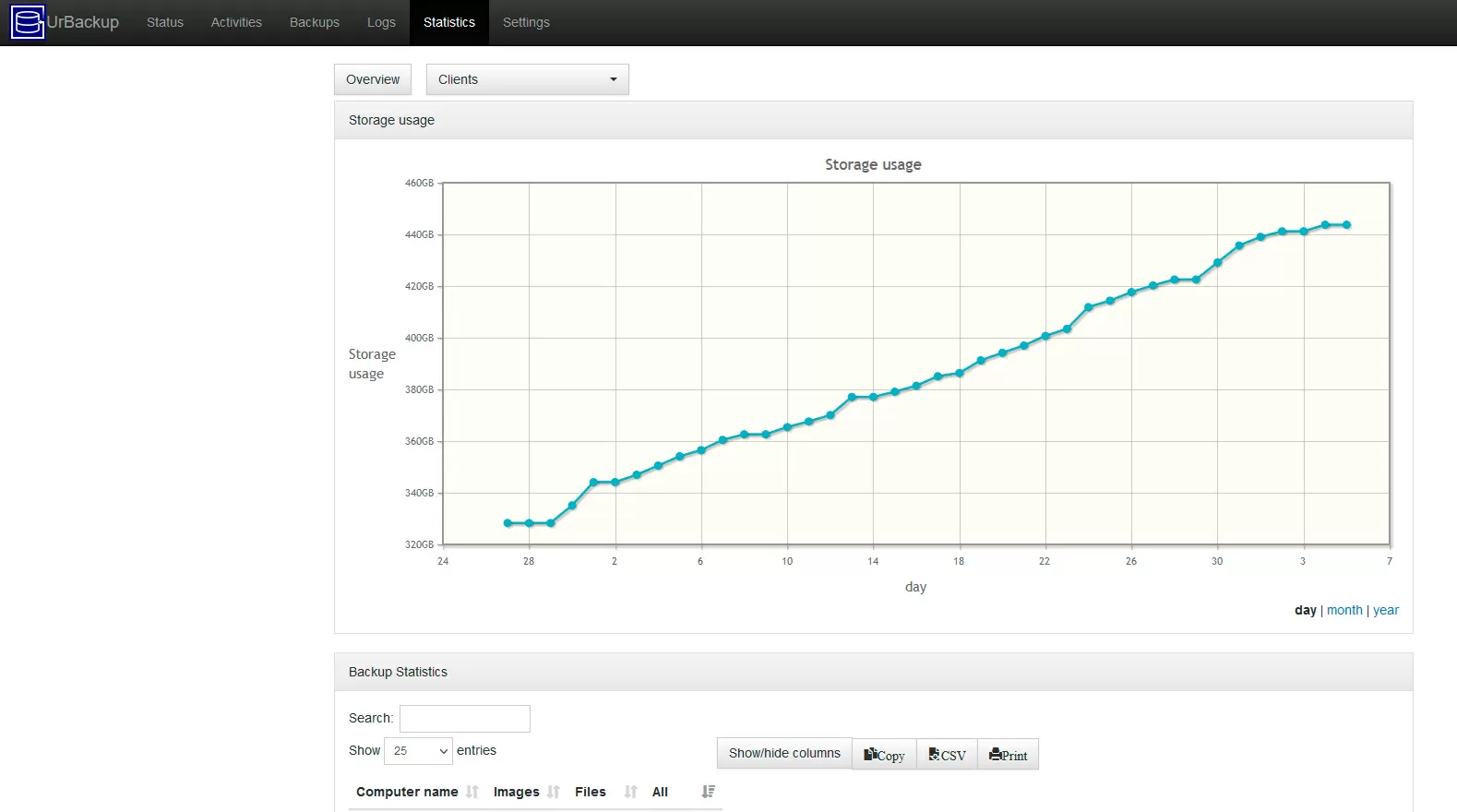

Backups of networked computers to the NAS. The problem here is even bigger than in the previous case. There is no analogue of Active Backup for Business, and UrBackup, a little-known but actively developed program, more or less covers backing up Windows machines, including over the Internet. The program itself cannot do deduplication or compression, but if the server runs ZFS this is not a problem: we can choose the most modern compression type, ZSTD, and enable deduplication on the volume that we pass into the Docker container with UrBackup.

This volume needs to be encrypted with ZFS, because UrBackup stores data unencrypted. The program supports backing up volumes, files and folders, and has a data browser and a rescue disk for restoring clients.
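As a sketch of what such a dataset could look like, the Python snippet below only builds and prints a `zfs create` command with ZSTD compression, deduplication and native encryption; it does not run anything. The dataset name tank/urbackup is hypothetical, passphrase-based keys are my assumption rather than something the setup above prescribes, and the compression=zstd value needs a reasonably recent OpenZFS.

```python
import shlex


def zfs_create_command(dataset, compression="zstd", dedup=True, encrypted=True):
    """Build the argument list for `zfs create` with the properties discussed."""
    args = ["zfs", "create", "-o", f"compression={compression}"]
    args += ["-o", f"dedup={'on' if dedup else 'off'}"]
    if encrypted:
        # Native ZFS encryption with a passphrase; zfs asks for it when run.
        args += ["-o", "encryption=on", "-o", "keyformat=passphrase"]
    args.append(dataset)
    return args


# "tank/urbackup" is a made-up pool/dataset name for illustration.
command = zfs_create_command("tank/urbackup")
print(shlex.join(command))
```

Printing the command first instead of executing it leaves room to review the properties, since encryption can only be chosen when the dataset is created.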

Backups of host virtual machines: everything is very good here. Proxmox has its own Backup Server product with deduplication and incremental copies of VMs and containers. Both entire virtual machines and individual files from them can be restored through the web interface. It is installed directly on the host or in a virtual machine.

Backups of VMware, Hyper-V and QEMU Linux virtual machines: everything is very bad here. Everyone wants money for VMware backups, and only Vinchin Backup in its free version comes close to Active Backup for Business, but only for three virtual machines. On top of that, the free version requires a license key, which has a habit of getting lost. Overall, though, this software looks very promising: it is not as overloaded with functionality as Bacula and not as primitive as Borg. Its problem is that it supports neither backup from Proxmox nor restore to it, so it is currently impossible to move virtual machines from ESXi to Proxmox via backup. It is installed in a separate virtual machine.

Shared workspace: everything is very good here. Synology has not developed this area, and Pydio can completely cover shared file handling and data exchange between workgroups. It is installed via Docker.

Notes service: I was shocked that such a mature field has not produced a definitive solution that could replace Synology Notes. Standard Notes comes more or less close, but the free version is so limited that it does not even let you upload photos, and the installation is very complicated and requires a reverse proxy with SSL, that is, a domain reachable from the Internet. The remaining services either do not focus on security and encryption or cannot be installed on a local server… Joplin looks very good, but it has no web interface, and the local client stores encryption passwords in plain text in an SQLite database. Trilium looks great for web access, but it is single-user and has no mobile client. In the end I settled on Bitwarden, in the Vaultwarden version optimized for Docker installation, which I use for secure notes and passwords. Simple notes that contain no important data were left to Joplin.

VPN and firewall: everything is fine here. I have been working with pfSense for several years, and this operating system runs in a virtual machine on Proxmox without problems. If the server has a single Ethernet port, traffic can be separated using VLANs. Installing pfSense lets you do without the built-in Proxmox firewall, which, by the way, can be configured individually for each virtual machine or container; you can also use both.

Access via the cloud (an analogue of QuickConnect). Today you can rent a 1-core virtual machine in the cloud with a dedicated IP address for less than $1 per month, put pfSense on it, and connect through it via VPN or proxy traffic through NGINX. At such prices I will not even bother looking for and configuring a QuickConnect analogue with the glitches inherent in free Linux software.

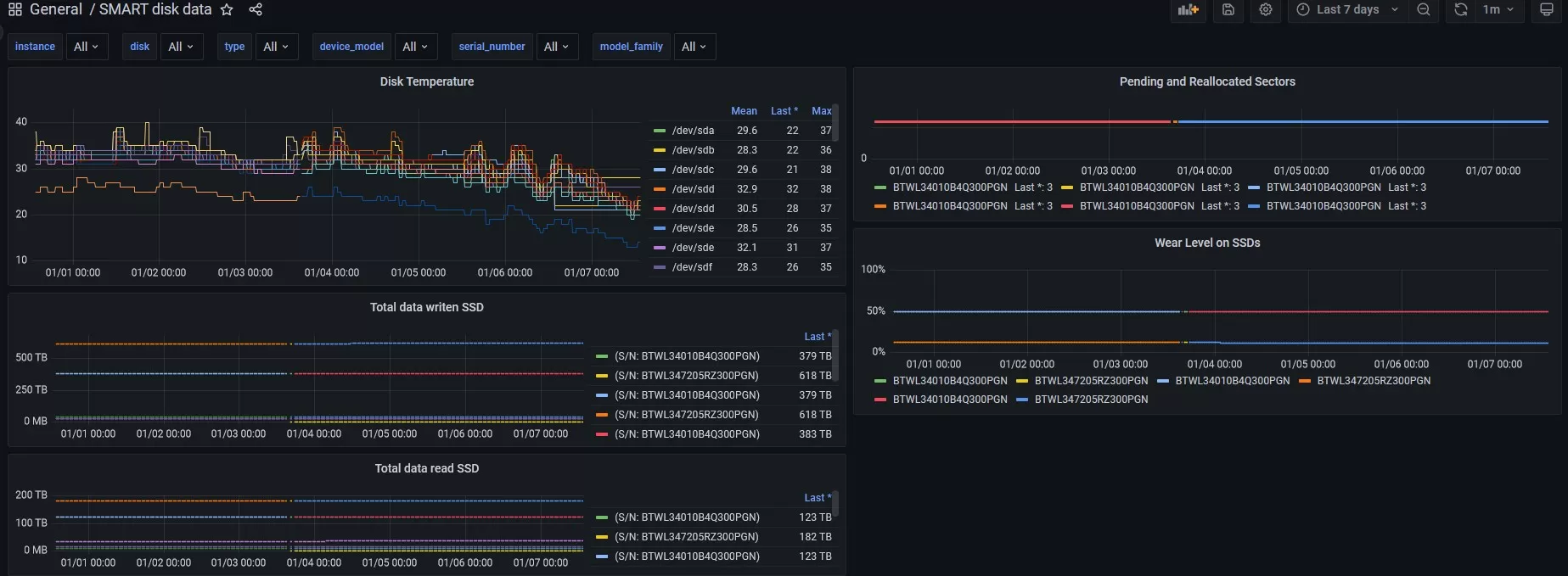

Monitoring of S.M.A.R.T., temperatures, fans and various other metrics. Everything is fine here. First, Proxmox has a built-in exporter to InfluxDB and Graphite. Second, metric and log collection is very well developed on the Prometheus + Grafana + Loki + Promtail stack, and you can easily get impressive graphs, fast log search and alerting. You can also integrate InfluxDB + Telegraf, the fast syslog-ng, an SNMP metrics collector and IPMI. Everything is natively available through Docker with tons of configuration and installation documentation, and the result is an exhaustive analysis of system performance with granularity down to each container, each volume on disk and each S.M.A.R.T. parameter, which you can analyze in both built-in and third-party programs.

Synology has nothing like this, and there is nothing to compare it with.

Video surveillance is a failure. I could not find a decent replacement for Surveillance Station among freely distributed software.

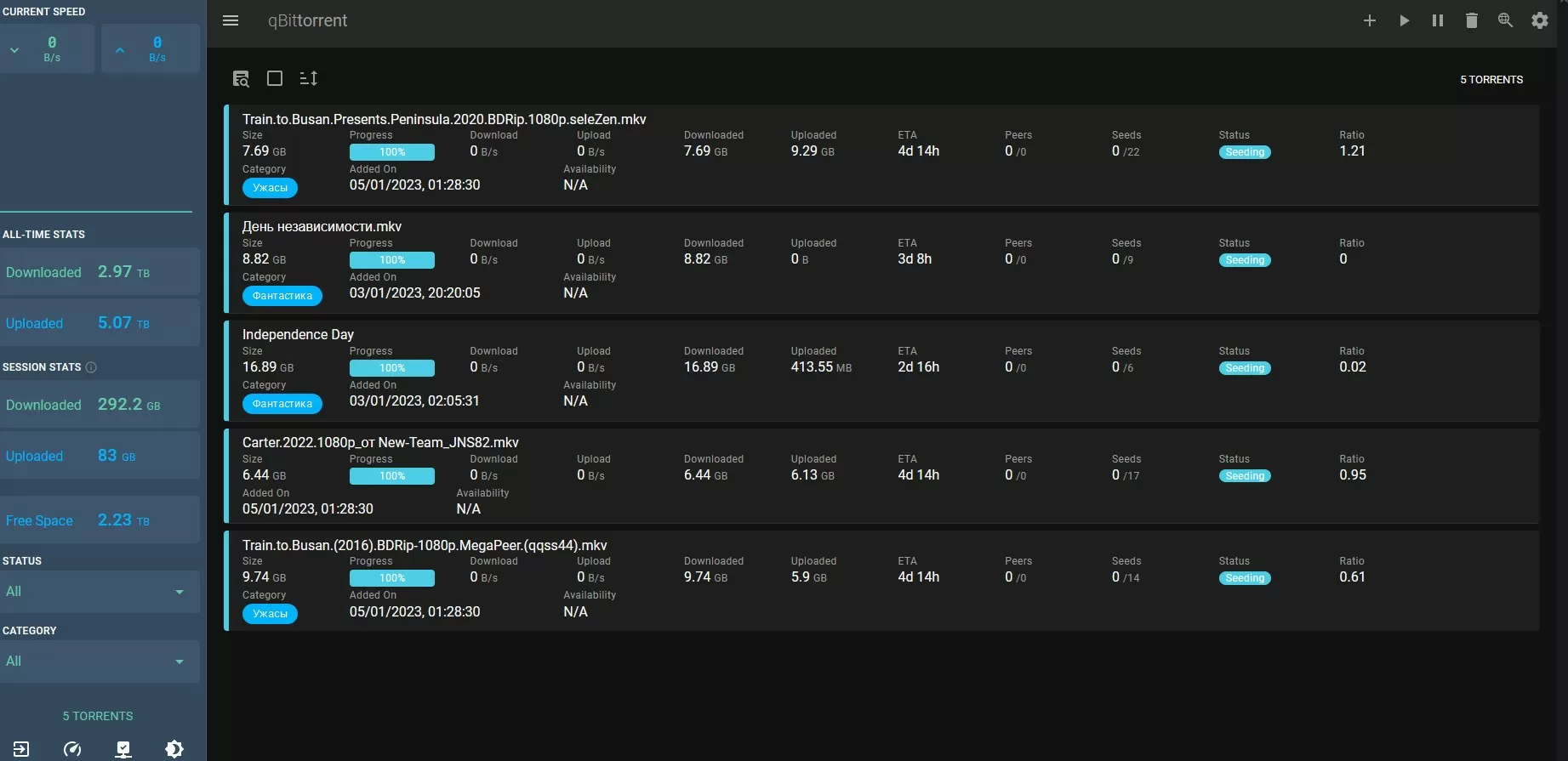

Torrent client: well, where would we be without our favorite torrents. Here I chose qBittorrent with a Vue-based web interface: it looks very modern, is available in Docker, and is actively developed and supported by the community. It has a very convenient system of tags and categories, so you do not have to dig through directories every time to decide where to download a movie or TV series. The project has one significant problem: it consumes about 2 GB of RAM and can easily eat 7 or even 8 GB. Memory compaction at the Proxmox level helps a lot but does not solve the problem completely. Next to Transmission, which consumes 200-250 MB of memory, this is of course a terrible trait, and one the developers are not going to fix.

The so-called "arr" stacks are fashionable now, combining automatic search for TV series, movies, music and other content with torrent downloads. I tried them and they did not click for me, but everything installs from Docker and is very easy to configure.

Snapshots: not everything is as smooth as we would like. For virtual machines, snapshots are well integrated at the Proxmox level, but for everything that concerns the file system you have to rely on the capabilities of ZFS, and it is best to use third-party tools configured through the CLI, such as Sanoid and Syncoid.

Making it pretty

I am writing this part a few months after I started running this NAS myself. At first it seemed important to me to have a single entry point to all the web services, each of which is a separate program. Organizr is best suited for this: it can handle authorization in third-party services, so once you have entered the password for the file browser you no longer need to enter it again. In addition, Organizr supports embedding HTML blocks, which makes it possible to fill its interface with the graphs that Grafana draws for us from the monitoring stack.

It did not work out that way. First, there is an immediate problem with HTTPS, since security-obsessed browsers like Firefox do not allow such sites to be opened inside an iframe. Second, you need to understand that the web front end here is mainly for simple tasks such as copying files or adding a torrent, and as long as the lion's share of storage configuration is done through the CLI, the command line remains your best friend. In the end I did manage to achieve a more or less modern look through Organizr, but I never got into the habit of using it.

Once again I draw the reader's attention to the fact that I make absolutely all of these settings without much regard for security. Steps such as using HTTPS or two-factor authentication significantly complicate integration in single-window mode.

My own impressions after several months of work

Technologically, the resulting machine is significantly ahead of Synology's NAS and offers unlimited room for growth and expansion, which is nice. In everything related to data storage, snapshots, and running services and virtual machines we have gone far beyond DSM, but we have overlooked two points that are critical for any modern hardware and software system.

The first, as mentioned above, is security. By choosing a hodgepodge assembled from third-party packages, we disproportionately increase the attack surface through vulnerabilities in unvetted distributions. In principle, though, Docker, LXC and KVM all give us additional layers of isolation that we can use to lock our services into specially created networks and disk volumes. As a simple example, you can put all management on a separate network and the services on an open network. Doing this on Synology is problematic.

The second point is the potential instability of the installed packages. The simplest example: I ran an SMB server in an Ubuntu LXC container for three months, and in the fourth month I ran into a memory leak that got the process killed by the OOM killer. I had to rebuild the container on CentOS 8, where there are no memory leaks, but the service discovery feature for SMB is disabled by default and has to be enabled manually. Such things crop up at every step: the more you do, the more attention the machine demands. Some services may start eating up memory or disk, for others you have to set up log rotation by hand ... configuring ACLs is a pain, and as for limiting the size of a newly created folder ... honestly, it is easier to split the disk into separate partitions and limit those instead.

As for the disk subsystem, ZFS offers amazing customization options, but like any other file system, such as Btrfs on Synology, under certain conditions it can "go silent" for a couple of minutes, cutting off access to all ZFS resources for every service on the host at once. Both TrueNAS and Synology have this problem, and there is only one remedy: using a hardware RAID controller wherever the disk load is capable of bringing the file system to its knees.

Since we are not bothering to optimize resources, we need to be prepared for the fact that it is easier to give some services more memory and CPU cores than to look for ways to curb their appetite, and where Synology gets by with 4-core processors and 8 GB of RAM, we will need 8-core ones with 16 or 32 GB of memory, or even more.

Conclusions

From a company's point of view, replacing Synology will require a specialist with deep knowledge of Linux, virtualization and file systems. An ordinary sysadmin of the "clean the mouse, reboot the printer, configure the domain" level will not cope. The greatest economic effect comes from replacing top-end multi-disk solutions and then consolidating resources on the new node. Here it makes sense to build large storage systems on tens or hundreds of hard drives, mixing ZFS and hardware RAID, and you will never run into the limitations of the hardware platform. The more complex and demanding your infrastructure, the more you gain by abandoning the branded solution in favor of Proxmox. Later on, you can plan to decommission VMware, Veeam and Hyper-V, reducing annual license costs.

However, it will not be possible to replace Synology with 100% free software; something will most likely have to be bought or pirated. With the growing risk of being left tomorrow without a warranty, without package updates and without the ability to buy and import a new device, you have to decide for yourself whether it is necessary…

Michael Degtjarev (aka LIKE OFF)

07/01.2023