5 performance indicators for corporate backup

Finding out whether your backup and recovery systems are working well takes much more than knowing how long a backup run takes. Establishing baseline requirements is a prerequisite for properly evaluating your system and deciding whether it is performing well or needs a redesign.

Here are five metrics every company should measure to make sure its backup systems perform well enough to meet business needs.

1. Storage capacity

Let's start simple: does your backup system have enough free space to meet your current and future backup needs? Whether it is a tape library or a storage array, your storage has finite capacity, and you need to track both that capacity and the percentage of it you are using over time. Failing to do so can force you into decisions that go against company policy: for example, if you cannot buy more capacity, the only way to free up space is to delete old backups. It would be a shame if losing track of your storage capacity left you unable to meet the retention requirements set by your company.
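Tracking capacity over time can be as simple as recording how much space backups occupy and projecting when the target will fill up. The sketch below assumes linear growth between the first and last readings; the `StorageSample` type, the function names and the sample figures are all hypothetical, and real growth is rarely perfectly linear.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StorageSample:
    day: int        # days since monitoring started
    used_tb: float  # capacity occupied by backups, in TB


def utilization(used_tb: float, total_tb: float) -> float:
    """Percentage of the backup target's capacity that is in use."""
    return 100.0 * used_tb / total_tb


def days_until_full(samples: List[StorageSample], total_tb: float) -> Optional[float]:
    """Linear projection from the first and last samples.

    Returns None when usage is flat or shrinking, i.e. no exhaustion is projected.
    """
    first, last = samples[0], samples[-1]
    growth_per_day = (last.used_tb - first.used_tb) / (last.day - first.day)
    if growth_per_day <= 0:
        return None
    return (total_tb - last.used_tb) / growth_per_day


if __name__ == "__main__":
    # Hypothetical readings: 60 TB used at day 0, 72 TB at day 60, on a 100 TB target.
    history = [StorageSample(0, 60.0), StorageSample(30, 66.0), StorageSample(60, 72.0)]
    print(f"utilization: {utilization(history[-1].used_tb, 100.0):.0f}%")  # 72%
    print(f"days until full: {days_until_full(history, 100.0):.0f}")       # 140
```

Run regularly, a projection like this tells you months in advance when you will face the choice between buying capacity and deleting old backups.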

Cloud storage may be the solution, as some services offer virtually unlimited storage capacity for archived data.

2. Bandwidth

Every backup system can ingest only a certain amount of data per unit of time; this throughput is usually measured in megabytes per second or terabytes per hour. You should know this figure and monitor how much of it your backups actually use. Otherwise, backups may take longer than expected and spill over into the business day.
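Converting between the two common units and estimating how long a job will run is simple arithmetic. This sketch assumes decimal units (1 TB = 1,000,000 MB) and a constant sustained rate; the job size and rate are hypothetical.

```python
MB_PER_TB = 1_000_000   # decimal units (1 TB = 10^6 MB), as vendors usually quote them
SECONDS_PER_HOUR = 3600


def mb_s_to_tb_h(mb_per_s: float) -> float:
    """Convert throughput from MB/s to TB/h."""
    return mb_per_s * SECONDS_PER_HOUR / MB_PER_TB


def backup_hours(data_tb: float, mb_per_s: float) -> float:
    """Hours needed to move data_tb at a sustained rate of mb_per_s."""
    return data_tb / mb_s_to_tb_h(mb_per_s)


if __name__ == "__main__":
    # Hypothetical job: 10 TB at a sustained 200 MB/s.
    print(f"{mb_s_to_tb_h(200):.2f} TB/h")       # 0.72 TB/h
    print(f"{backup_hours(10, 200):.1f} hours")  # 13.9 hours
```

At that rate, a nightly job starting at 22:00 would still be running well after 09:00 the next morning.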

Bandwidth control is especially important when using tape. The throughput of your backups must match the capabilities of your tape drive; in particular, the data rate fed to the drive should not fall below its minimum speed. Below that speed the drive cannot stream continuously and has to stop and reposition the tape, which slows the backup further and wears the drive and media. The drive's documentation usually lists the maximum and minimum speeds, but you can always search online for its "minimum streaming speed". It is unlikely that you will come close to the maximum speed of a tape drive, but you should watch out for that as well.

Tape drive speed ranges (minimum - maximum, MB/s):

- LTO 8: 360 - 750

- LTO 7: 120 - 300

- LTO 6: 54 - 160

- LTO 5: 47 - 140
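A monitoring script can compare the measured feed rate with the drive's streaming range. In this sketch the ranges are copied from the list above; the function name and messages are hypothetical, and you should substitute the figures from your own drive's documentation.

```python
# Streaming ranges in MB/s (minimum, maximum), copied from the list above.
TAPE_SPEED_MB_S = {
    "LTO 8": (360, 750),
    "LTO 7": (120, 300),
    "LTO 6": (54, 160),
    "LTO 5": (47, 140),
}


def check_tape_feed(drive: str, feed_mb_s: float) -> str:
    """Classify a measured feed rate against the drive's streaming range."""
    low, high = TAPE_SPEED_MB_S[drive]
    if feed_mb_s < low:
        # The drive cannot keep streaming and must stop and reposition the tape.
        return "too slow: below the minimum streaming speed"
    if feed_mb_s > high:
        # Not harmful in itself, but the drive is now the bottleneck.
        return "above maximum: the drive is the bottleneck"
    return "ok"


if __name__ == "__main__":
    print(check_tape_feed("LTO 7", 100))  # too slow: below the minimum streaming speed
    print(check_tape_feed("LTO 6", 100))  # ok
```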


Disk-based backup systems have no minimum speed threshold; their backup speed is usually limited by the interfaces and by the capabilities of the backup software.

3. Computing power

The capabilities of your backup system also depend on the computing resources behind it. If the backup servers or the backup system's underlying database cannot keep up, backups slow down and may spill over into the business day. Monitor the load on these components as well to see to what extent this is happening.
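One simple way to quantify "to what extent" is to take CPU utilization samples from the backup server during a job and count how often it was saturated. This is a minimal sketch: it assumes the samples come from whatever monitoring tool you already use, and the 90% threshold and sample values are hypothetical.

```python
from typing import Sequence


def saturated_fraction(cpu_percent: Sequence[float], threshold: float = 90.0) -> float:
    """Share of samples in which CPU utilization was at or above `threshold` percent."""
    if not cpu_percent:
        return 0.0
    busy = sum(1 for sample in cpu_percent if sample >= threshold)
    return busy / len(cpu_percent)


if __name__ == "__main__":
    # Hypothetical per-minute CPU readings from a backup server during a job.
    samples = [35, 60, 92, 97, 95, 88, 91, 40]
    print(f"CPU-bound for {saturated_fraction(samples):.0%} of the job")  # 50%
```

The same counting approach works for database wait times or memory pressure; a steadily rising fraction suggests the servers, not the storage, are limiting backup speed.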

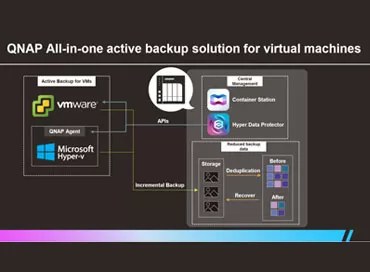

This matters most when the backup software itself performs resource-intensive operations such as data deduplication. Deduplication can deliver huge savings, but only if the original data is analyzed before it is packed into archives. In our reviews of ZFS-based NAS devices (from QSAN and QNAP), we examined inline deduplication performed by the file system and found that for such devices it is better to transfer data to the NAS in its original, uncompressed form using rsync.
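To see why deduplication needs the original data, consider a minimal fixed-size, hash-based chunk store. This is a sketch under stated assumptions (4 KiB chunks, SHA-256 fingerprints, a hypothetical `DedupStore` class), not how any particular product works; many products use variable-size chunking. Two nightly copies that differ by one byte share all but one chunk. If each copy were first compressed into an archive, a small change would typically alter most of the compressed bytes, and far fewer chunks would match.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size; many products use variable-size chunks


class DedupStore:
    """Toy chunk store: each unique chunk is kept once, keyed by its SHA-256."""

    def __init__(self) -> None:
        self.chunks: dict = {}
        self.logical_bytes = 0  # bytes the backup software was asked to store

    def add(self, data: bytes) -> None:
        self.logical_bytes += len(data)
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            self.chunks.setdefault(hashlib.sha256(chunk).digest(), chunk)

    @property
    def physical_bytes(self) -> int:
        """Bytes actually stored after deduplication."""
        return sum(len(c) for c in self.chunks.values())


def sample_data() -> bytes:
    # 16 KiB of deterministic, non-repeating bytes standing in for a file.
    return b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(512))


if __name__ == "__main__":
    monday = sample_data()
    tuesday = bytearray(monday)
    tuesday[100] ^= 0xFF  # a one-byte change in the first chunk
    store = DedupStore()
    store.add(monday)
    store.add(bytes(tuesday))
    print(store.logical_bytes, store.physical_bytes)  # 32768 20480
```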

The situation is completely different when backups are managed centrally by the NAS with its own software, such as Synology Active Backup for Business, which we have reviewed in detail. Here, resource-intensive operations such as deduplication are performed only on the backup files and are recalculated each time they are copied, while the file system handles compression. Put simply, if you are concerned about the processing power of your backup system, the best option is a modern NAS.

4. Backup window

The previous two metrics matter because they determine how much of the backup window your backups consume: the period of time during which backups are allowed to run. If you use a traditional backup system that significantly affects the performance of your core services while it runs, you must agree on a backup window in advance. If your backups come close to filling the entire window, it is time to either revisit the window or redesign your backup system.
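Checking how full the window is only requires the job's start and end times and the window boundaries. This sketch assumes a daily window that may cross midnight; the function names, dates and the 80% alert threshold are hypothetical.

```python
from datetime import datetime, time, timedelta


def window_length(start: time, end: time) -> timedelta:
    """Length of a daily backup window; windows may cross midnight."""
    s = timedelta(hours=start.hour, minutes=start.minute)
    e = timedelta(hours=end.hour, minutes=end.minute)
    if e <= s:  # e.g. 22:00-06:00 ends the next day
        e += timedelta(days=1)
    return e - s


def window_utilization(job_start: datetime, job_end: datetime,
                       win_start: time, win_end: time) -> float:
    """Share of the backup window consumed by one backup run."""
    return (job_end - job_start) / window_length(win_start, win_end)


if __name__ == "__main__":
    used = window_utilization(datetime(2020, 4, 3, 22, 0), datetime(2020, 4, 4, 4, 30),
                              time(22, 0), time(6, 0))
    print(f"{used:.0%} of the window used")  # 81% of the window used
    if used > 0.8:  # hypothetical alert threshold
        print("backups are close to filling the window")
```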

Put simply, the backup window is a period during which all of the following hold at the same time:

- Reduced performance or a complete shutdown of services is acceptable

- Increased network load is acceptable

- Increased storage load is acceptable

- The data being copied is as up to date as possible

Companies whose backup methods fall into the continuous incremental category, such as continuous data protection (CDP) or "near-CDP", usually do not need to worry about the backup window. Each backup takes very little time and transfers only a small amount of data, which usually has very little impact on the performance of the underlying systems. This is why customers using these systems typically back up throughout the day, once an hour or even every five minutes. A true CDP system runs continuously, sending each new byte to backup storage as it is written.
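Because true CDP captures every write, you can restore to any moment rather than only to the last backup. The toy journal below illustrates that idea under simplifying assumptions: writes are whole-object values keyed by name and arrive in timestamp order. The `CdpJournal` class is hypothetical; real CDP products typically capture changes at the block or I/O level.

```python
import bisect
from typing import Dict, List, Tuple


class CdpJournal:
    """Toy CDP journal: every write is kept together with its timestamp."""

    def __init__(self) -> None:
        self.times: List[float] = []
        self.writes: List[Tuple[str, bytes]] = []

    def record(self, ts: float, key: str, value: bytes) -> None:
        # Assumes writes arrive in timestamp order, so `times` stays sorted.
        self.times.append(ts)
        self.writes.append((key, value))

    def restore(self, as_of: float) -> Dict[str, bytes]:
        """Rebuild the state as it was at `as_of` by replaying earlier writes."""
        n = bisect.bisect_right(self.times, as_of)
        state: Dict[str, bytes] = {}
        for key, value in self.writes[:n]:
            state[key] = value
        return state


if __name__ == "__main__":
    journal = CdpJournal()
    journal.record(1.0, "orders.db", b"v1")
    journal.record(2.0, "orders.db", b"v2")
    journal.record(3.0, "users.db", b"u1")
    print(journal.restore(2.5))  # {'orders.db': b'v2'}
```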

5.1 Recovery time

Nobody really cares how long your backups take; everyone cares how long it takes to recover. The Recovery Time Objective (RTO) is the amount of time, agreed by all parties, that recovery from any incident requiring it should take. An acceptable RTO for a given company is usually determined by how much money it loses while the service is down. A company that would lose millions of dollars per hour of downtime usually wants its RTO as low as possible; financial trading companies, for example, aim to keep their RTOs as close to zero as possible. Companies for which downtime is not critical can measure their RTO in weeks. What matters is that the RTO meets the company's business needs, not whether it is shorter or longer than someone else's.

There is no need for a single RTO across the entire company: it is perfectly normal and reasonable to have a tighter RTO for mission-critical applications and a looser one for the rest of the data center.
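Tiered RTOs are easier to justify when each tier is tied to the cost of downtime. This sketch multiplies each service's agreed RTO by an estimated hourly loss to get the worst-case loss if recovery takes exactly as long as allowed. The services, RTOs and dollar figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    rto_hours: float      # agreed recovery time objective
    loss_per_hour: float  # estimated money lost while the service is down

    def worst_case_loss(self) -> float:
        """Loss if recovery takes exactly as long as the RTO allows."""
        return self.rto_hours * self.loss_per_hour


services = [
    Service("trading platform", rto_hours=0.25, loss_per_hour=2_000_000),
    Service("e-mail", rto_hours=8, loss_per_hour=5_000),
    Service("file archive", rto_hours=72, loss_per_hour=100),
]

for s in services:
    print(f"{s.name}: RTO {s.rto_hours} h, worst-case loss ${s.worst_case_loss():,.0f}")
```

If a tier's worst-case loss is out of proportion to the others, that is a sign its RTO (or the recovery system behind it) needs another look.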

5.2 Recovery point

The Recovery Point Objective (RPO) is the amount of data loss, measured in time, that can be tolerated after a major incident. For example, if we agree that we could lose one hour of data, we have agreed to a one-hour RPO. Most companies, however, settle for much longer values, such as 24 hours or more. This is mainly because the shorter your RPO, the more often you have to run backups. Many companies would like a minimal RPO, but their existing backup systems simply cannot deliver it. As with RTOs, it is perfectly normal to have several RPOs across the company, depending on how critical different datasets are.
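With periodic backups, the worst-case data loss is roughly the largest gap between consecutive backups, so you can check a schedule against an RPO before an incident ever happens. This sketch treats each backup as a point-in-time copy taken at its completion time, a simplifying assumption; the function names and timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def worst_case_data_loss(backup_times: List[datetime]) -> timedelta:
    """Largest gap between consecutive completed backups (needs at least two).

    An incident just before a backup completes loses everything written since
    the previous one, so this gap is the worst-case data loss the schedule allows.
    """
    ordered = sorted(backup_times)
    return max(later - earlier for earlier, later in zip(ordered, ordered[1:]))


def meets_rpo(backup_times: List[datetime], rpo: timedelta) -> bool:
    return worst_case_data_loss(backup_times) <= rpo


if __name__ == "__main__":
    nightly = [datetime(2020, 4, d, 1, 0) for d in (1, 2, 3)]
    hourly = [datetime(2020, 4, 3, h, 0) for h in range(24)]
    print(meets_rpo(nightly, timedelta(hours=1)))  # False
    print(meets_rpo(hourly, timedelta(hours=1)))   # True
```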

Actual recovery point and recovery time can be measured only when a recovery takes place, whether during a real incident or a test. RTO and RPO are goals; the RTR and RPR parameters measure how well you actually met those goals in a recovery. It is important to measure them and compare them with the RTO and RPO targets to see whether you need to consider redesigning your backup and recovery system.
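After a restore test, the actual values fall out of three timestamps: when the incident occurred, which point in time the data was restored to, and when the service was back online. This sketch assumes recovery time is counted from the moment of the incident; the `RecoveryResult` type, function names and test figures are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple


class RecoveryResult(NamedTuple):
    rpr: timedelta  # data actually lost: incident time minus the point restored to
    rtr: timedelta  # time actually taken: service back online minus incident time


def measure(incident: datetime, restored_point: datetime, back_online: datetime) -> RecoveryResult:
    return RecoveryResult(rpr=incident - restored_point, rtr=back_online - incident)


def missed_objectives(result: RecoveryResult, rpo: timedelta, rto: timedelta) -> List[str]:
    """Names of the objectives this recovery failed to meet."""
    missed = []
    if result.rpr > rpo:
        missed.append("RPO")
    if result.rtr > rto:
        missed.append("RTO")
    return missed


if __name__ == "__main__":
    # Hypothetical restore test: incident at 14:00, last backup from 01:00,
    # service back online at 20:00; targets are RPO 24 h and RTO 4 h.
    result = measure(datetime(2020, 4, 3, 14), datetime(2020, 4, 3, 1), datetime(2020, 4, 3, 20))
    print(result.rpr, result.rtr)  # 13:00:00 6:00:00
    print(missed_objectives(result, rpo=timedelta(hours=24), rto=timedelta(hours=4)))  # ['RTO']
```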

The reality is that at most companies the RPR and RTR are far from the agreed RPO and RTO, and what matters is to bring that reality to light and acknowledge it. Either we adjust the RTO and RPO, or we rework the backup system. There is no point in having a strict RTO or RPO if the RTR and RPR fall short of them.

What to do with these metrics

One way to build confidence in your backup system is to document and publish all of the metrics described here. Let management know how well your backup system is performing against its design goals. Tell them, based on current growth rates, how long it will be before an upgrade is needed. Above all, make sure they know whether your backup and recovery system can actually meet the agreed RTO and RPO. Hiding this helps no one when a failure occurs.

Ron Amadeo

03 / 04.2020