QNAP ES1640dc v2 review - testing a dual-controller NAS with inline deduplication

In our previous review we looked at the QNAP TDS-16489U, which can serve both as a compute node and as a virtualization platform, with support for discrete graphics cards and machine learning applications. Today we are testing a higher-end NAS whose purpose fits in one phrase: "work with data, and nothing else." It is an enterprise-class device with a sophisticated dual-controller Active-Active architecture, OpenStack support, the FreeBSD-based QNAP QES operating system and the OpenZFS file system with inline deduplication and compression, which yields serious savings on hard drives. The manufacturer positions the QNAP ES1640dc v2 for VDI (Virtual Desktop Infrastructure) and for cloud providers offering VPS services, where deduplication brings large cost savings and snapshots are already replacing traditional backups.

For testing, we used a test bench with the following configuration:

- Server 1
  - IBM System x3550
  - 2 x Xeon X5355
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Intel X520
- Server 2
  - IBM System x3550
  - 2 x Xeon X5450
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Intel X550-T2
- Storage system
  - QNAP ES1640dc v2:
    - 2 x 48 GB DDR3 (48 GB per controller)
    - 10 x Seagate Savvio 10K6 600 GB
- Software
  - VMware ESXi 6.0 U1
  - Debian 9 Stretch without Meltdown/Spectre patches
  - Iometer (pseudo-random data pattern, 412 GB test file)
  - iSCSI LUN = 1.2 TB
  - Test folder 1: Consultant Plus Federal database (3250 files, 278 GB)
  - Test folder 2: 3 virtual machines: Windows 10 x64, Windows Server 2016, Debian 9 x64 (111 GB total)

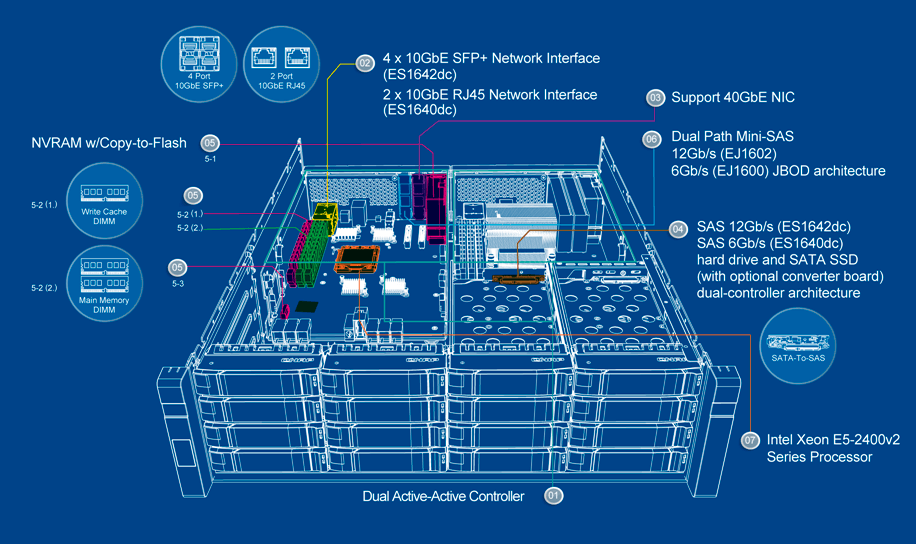

Dual-controller NAS

Unlike SAN devices, where controllers are duplicated even in the cheapest storage systems, a file-access device without a single point of failure is a rare beast even in the enterprise class. The manufacturer of such a system has to fit two full-fledged NAS units into one chassis, each able to work independently of the other, yet keeping their caches synchronized and, at the slightest hiccup (even a damaged Ethernet cable), quickly taking over its partner's functions, including the IP addresses on the high-speed interfaces. Compared with fault-tolerant setups built from single-controller NAS units in High Availability mode, this saves a great deal on hard drives and expansion shelves, because these components do not need to be duplicated to protect against failures, no matter how large the storage system grows. Of course, if it were that simple, everyone would be building dual-controller file storage, so let's look at the design of the QNAP ES1640dc v2.

Here we have the FreeBSD operating system, which supports ZFS natively; ZFS is one of the few enterprise file systems that both compress and deduplicate data on the fly. Btrfs (Linux) and ReFS/NTFS (Windows Server 2016) do not deduplicate inline, and to get immediate savings when writing data to the NAS, every data block has to be processed in real time: deduplicated and compressed so that files take up less space right now, not a week later when scheduled optimization kicks in and the files may no longer be there. This takes a lot of CPU power, so each QNAP ES1640dc v2 controller has a 6-core Intel Xeon E5-2420 v2 (2.2 GHz, 12 threads, 15 MB cache). Here the processors work only for the NAS itself: there is no built-in virtualization and no extra applications.

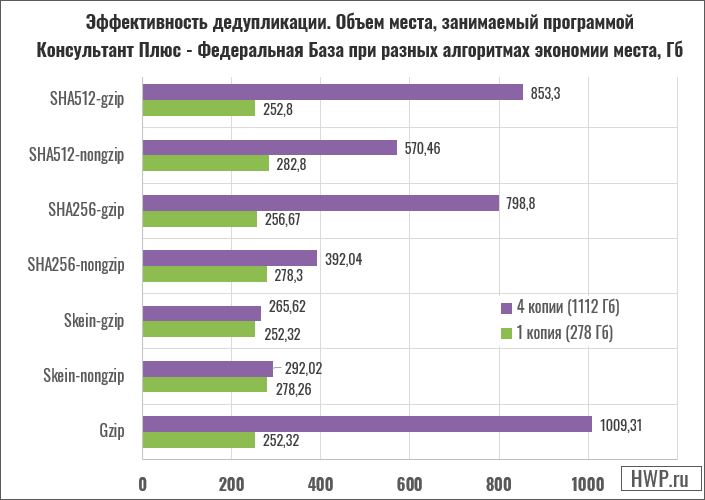

Deduplication can only be configured on root shared folders, and QNAP QES offers three hash algorithms to choose from: Skein, SHA256 and SHA512. We compared how much space each of them saves.

The test shows that the default Skein algorithm gives the best results. Of course, the biggest payoff from ZFS comes when storing virtual machine images for web hosting and VDI. In both cases, all the operating system files inside the virtual machine images are deduplicated, and you can save space tenfold: one terabyte of disk space can hold tens of terabytes of repetitive virtual machine images!
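
To make inline deduplication concrete, here is a minimal Python sketch of the write path described above. It assumes fixed 128 KiB blocks, uses SHA-256 as the block fingerprint (one of the three algorithms QES offers; Skein is not in the Python standard library) and zlib as a stand-in compressor. The `DedupStore` class is hypothetical and is not QNAP's or OpenZFS's implementation: each block is hashed as it arrives, and only blocks with a previously unseen hash are compressed and stored.

```python
import hashlib
import zlib

BLOCK_SIZE = 128 * 1024  # assumed fixed block size; real ZFS records are variable up to recordsize


class DedupStore:
    """Toy inline-dedup block store (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.blocks: dict[bytes, bytes] = {}  # SHA-256 digest -> compressed block
        self.refcount: dict[bytes, int] = {}  # how many logical blocks point at each digest
        self.logical_bytes = 0                # bytes written by clients
        self.physical_bytes = 0               # bytes stored after dedup + compression

    def write(self, data: bytes) -> list[bytes]:
        """Store data block by block; return the digests that reference it."""
        refs = []
        for off in range(0, len(data), BLOCK_SIZE):
            block = data[off:off + BLOCK_SIZE]
            digest = hashlib.sha256(block).digest()  # fingerprint computed at write time
            self.logical_bytes += len(block)
            if digest not in self.blocks:
                compressed = zlib.compress(block)    # only unique blocks are compressed and stored
                self.blocks[digest] = compressed
                self.physical_bytes += len(compressed)
            self.refcount[digest] = self.refcount.get(digest, 0) + 1
            refs.append(digest)
        return refs

    def read(self, refs: list[bytes]) -> bytes:
        """Reassemble data from its block digests, decompressing on the fly."""
        return b"".join(zlib.decompress(self.blocks[d]) for d in refs)


# Write the same 1 MiB sample "VM image" 40 times, like 40 copies of a VDI folder.
store = DedupStore()
vm_image = b"".join(i.to_bytes(4, "little") for i in range(256 * 1024))
for _ in range(40):
    refs = store.write(vm_image)
print(len(store.blocks), "unique blocks for", store.logical_bytes, "logical bytes")
```

Repeated writes only add references, never new blocks, which is why identical VM images shrink so dramatically.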

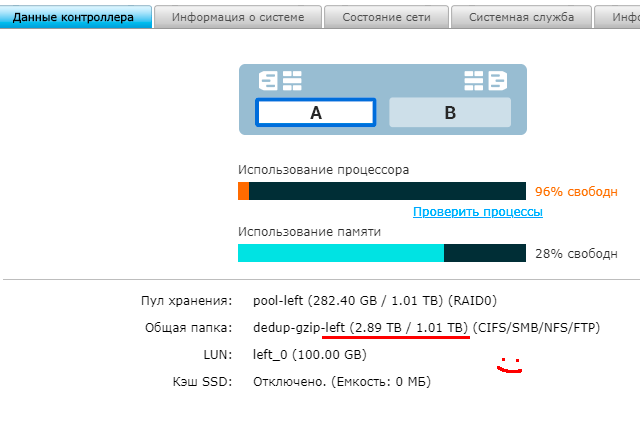

By enabling Over Provisioning, you present more disk space to the connected client than you physically have. This avoids write errors when the client operating system, unaware that compression is enabled on the storage system, decides that its data will not fit into the pool. Let's see how much space this saves in a VDI scenario.

In our example, we created a small pool of 1.01 TB, took 3 virtual machine images (Windows 10, Windows Server 2016 and Debian 9) with a total size of 80 GB, and wrote them to a shared folder connected via NFS. We then made 40 (!) copies of this folder, which corresponds to 120 VDI clients, and the total amount of data on disk came to 282 GB. Of course, this figure will change as the virtual machines run, but the point is clear: after writing roughly 3 TB to a 1.01 TB pool, we still have 720 GB of free space.
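
The figures from this test can be checked with a few lines of arithmetic. This sketch assumes decimal units (1 TB = 1000 GB) and uses the 80 GB image set, the 40 copies and the 282 GB on disk stated above.

```python
copies = 40
vms_per_copy = 3
image_set_gb = 80       # Windows 10 + Windows Server 2016 + Debian 9
pool_gb = 1.01 * 1000   # 1.01 TB pool, decimal units (assumption)
on_disk_gb = 282        # space actually consumed after dedup + compression

vdi_clients = copies * vms_per_copy      # one client per VM copy
written_gb = copies * image_set_gb       # logical data written by clients
savings_ratio = written_gb / on_disk_gb  # combined dedup + compression ratio
free_gb = pool_gb - on_disk_gb           # free space left in the pool

print(f"{vdi_clients} clients, {written_gb} GB written, "
      f"{savings_ratio:.1f}x reduction, ~{free_gb:.0f} GB free")
```

That works out to about an 11x reduction and roughly 728 GB left, consistent with the ~720 GB reported by the NAS.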

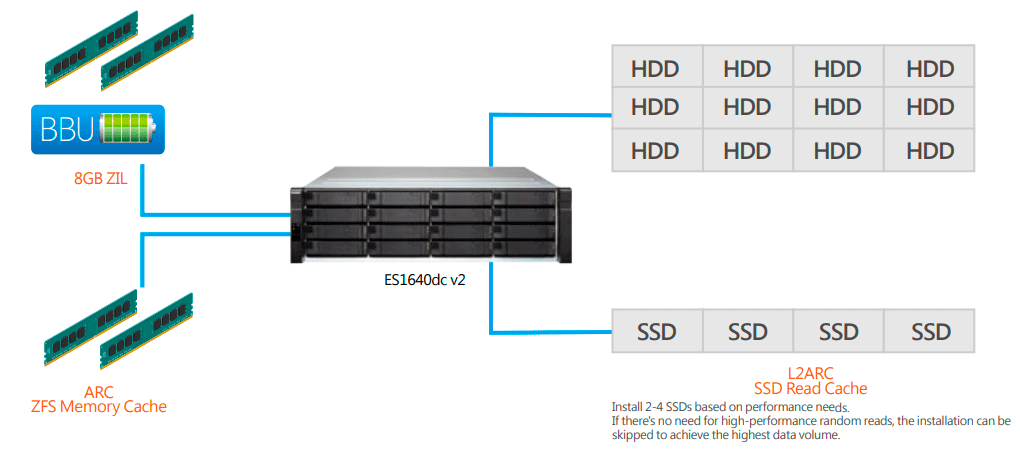

Caching - ARC, L2ARC and ZIL

At the kernel level, the ZFS file system supports two levels of read caching: ARC (in RAM) and L2ARC (on SSD), and it also maintains a write log (ZIL), which QNAP caches in RAM and synchronizes with the disk system. Each controller has 48 GB of DDR3 ECC memory (three 16 GB modules), of which 32 GB is reserved for the operating system and 16 GB for write caching. The QES operating system itself is not demanding on RAM, but with deduplication enabled, tables mapping repeated data blocks are kept in memory, and they grow with the amount of data. Oracle, which acquired ZFS together with Sun Microsystems, recommends 1 GB of RAM for every 1 TB of deduplicated data, but nothing breaks when memory fills up: the tables are simply partially flushed to disk.
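
Why the dedup table grows with the data can be sketched as simple arithmetic: one table entry per unique block, multiplied by the in-memory cost of an entry. The ~320 bytes per entry used below is a commonly quoted figure for ZFS, treated here as an assumption rather than a QNAP specification, and the block sizes are illustrative. The point is that the result depends heavily on the average block size, so any GB-per-TB rule is only a starting estimate.

```python
TiB = 1024 ** 4
GiB = 1024 ** 3


def ddt_ram_bytes(unique_bytes: int, avg_block_bytes: int, bytes_per_entry: int = 320) -> int:
    """Rough in-memory size of a ZFS dedup table (DDT).

    One entry per unique block; bytes_per_entry=320 is a commonly quoted
    estimate for ZFS, used here as an assumption rather than a spec.
    """
    entries = -(-unique_bytes // avg_block_bytes)  # ceiling division
    return entries * bytes_per_entry


for block_kib in (128, 64, 8):
    size_gib = ddt_ram_bytes(TiB, block_kib * 1024) / GiB
    print(f"{block_kib:>3} KiB average block: ~{size_gib:.1f} GiB of DDT per TiB of unique data")
```

With large 128 KiB records the estimate stays in the low single digits of GiB per TiB, but small blocks (for example, small volume block sizes on iSCSI LUNs) multiply it, so memory sizing for deduplication deserves a real calculation.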

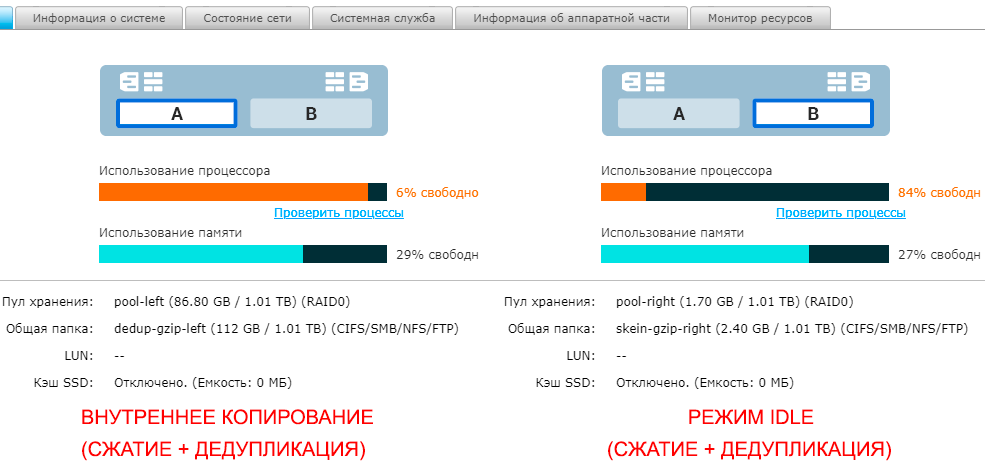

Monitoring shows that QNAP QES gives file operations priority when allocating resources: by default, all free memory goes to the read cache and deduplication tables, while a third of the memory is set aside for power-protected write caching and almost always remains free. The processors are loaded most heavily by read/write operations inside the NAS, when data has to be compressed and decompressed on the fly; the external interfaces are not the bottleneck.

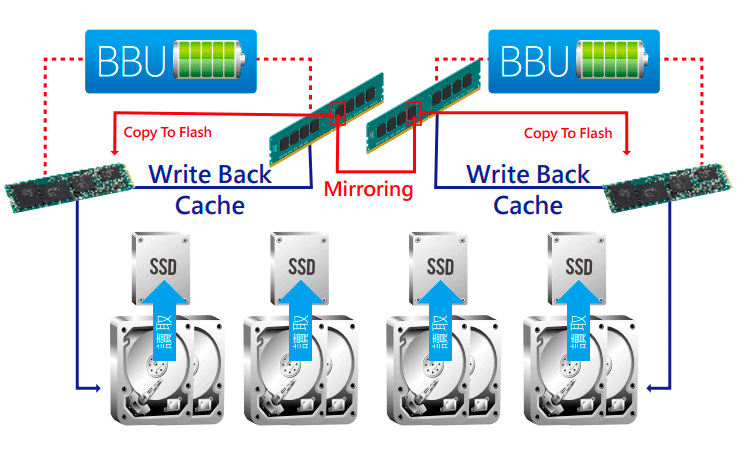

Looking at the controller resource usage screenshot, we see that the amount of used disk space and the intensity of disk operations have practically no effect on RAM usage. Even with intensive internal copying on the left controller, only about 960 MB of the write cache was in use; this cache is protected by the fault-tolerant Copy to Flash (C2F) scheme. Each controller has a 2800 mAh battery, which holds enough charge to write the contents of the memory used for ARC and ZIL to two pre-installed M.2 SSDs. Moreover, the built-in battery powers the entire controller, fans included, so if you unplug the power cables from a running ES1640dc v2, it will keep humming and blinking for a couple of minutes while it saves data to the built-in drives.

Note that the cache contents are duplicated: if after a blackout one controller turns out to have burned out, the file system remains consistent, because the cache is also saved on the partner's SSD, whose capacity is twice the size of the write cache.

The QNAP ES1640dc v2 has no dedicated slots for an SSD L2ARC cache, PCI Express SSDs are not supported, and only SAS drives can be installed, since every drive must be connected to both controllers at once (SAS drives are dual-ported). The ZFS developers themselves say that adding an SSD cache should be a last resort, when memory cannot be expanded and is clearly insufficient. Our tests will show that thanks to deduplication and compression, read speed on the network interface can be 10 times higher than the read speed of the hard drives.

Paranoid disk and pool checking is the key to data integrity

In traditional NAS units, disks are usually combined into RAID arrays with the Linux mdadm utility, but in ZFS the file system itself creates disk pools and arrays and monitors the state of the hard drives. ZFS provides protection against silent data corruption and scheduled volume checks (scrub) with automatic repair of detected errors, and QES adds scheduled S.M.A.R.T. tests. Interestingly, in the QNAP ES1640dc v2 interface you will not find vdev, zpool or other ZFS terminology. Instead, there are clearer, simpler labels for familiar RAID levels such as RAID 1 and RAID 5, which is great, but even more interesting is that the care for HDD health here borders on paranoia.
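
The self-healing idea behind scrub can be illustrated with a toy two-way mirror. This is a sketch under simplifying assumptions: checksums are SHA-256 (OpenZFS defaults to fletcher4) and are stored apart from the data, as ZFS keeps them in the parent block pointer; the `Mirror` class and its methods are hypothetical. On every read, a copy that fails its checksum is overwritten with the good copy from the other disk, and a scrub simply reads and verifies every block.

```python
import hashlib


def checksum(block: bytes) -> bytes:
    return hashlib.sha256(block).digest()


class Mirror:
    """Toy two-way mirror with end-to-end checksums (hypothetical illustration)."""

    def __init__(self) -> None:
        self.disks: list[dict[int, bytes]] = [{}, {}]  # block number -> data, per disk
        self.sums: dict[int, bytes] = {}               # checksums kept apart from the data

    def write(self, lba: int, block: bytes) -> None:
        self.sums[lba] = checksum(block)
        for disk in self.disks:
            disk[lba] = block

    def read(self, lba: int) -> bytes:
        """Return a verified copy and repair any copy that fails its checksum."""
        expected = self.sums[lba]
        copies = [disk[lba] for disk in self.disks]
        good = next((c for c in copies if checksum(c) == expected), None)
        if good is None:
            raise OSError(f"unrecoverable checksum error in block {lba}")
        for disk in self.disks:
            if checksum(disk[lba]) != expected:
                disk[lba] = good  # self-heal the silently corrupted copy
        return good

    def scrub(self) -> int:
        """Verify every block on every disk; return the number of repaired copies."""
        repaired = 0
        for lba, expected in self.sums.items():
            repaired += sum(checksum(disk[lba]) != expected for disk in self.disks)
            self.read(lba)  # read() performs the actual repair
        return repaired


mirror = Mirror()
mirror.write(0, b"customer database page")
mirror.disks[1][0] = b"customer databasE page"  # simulate silent corruption on the second disk
print(mirror.scrub())  # prints 1
```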

We installed 12 Seagate Savvio 10K6 hard drives, each of which had first served for 3 years in data centers and was then repeatedly tested under ZFS (FreeNAS, NAS4Free), under various Linux distributions and under Windows Server 2016, and none of these systems complained about the drives. Yet the QNAP ES1640dc v2 rejected two of them, flatly refusing to add them to the pool. S.M.A.R.T. showed that the drives were healthy, but their write performance in IOPS was half that of the rest. Unless you benchmark your drives every day, you would never notice, but the QNAP ES1640dc v2 said: "No, such jokes don't work here. If you want your data safe, find other disks."

ZFS enthusiasts will welcome such a scrupulous attitude towards hard drives, since data safety is this file system's undisputed strength, but check with your supplier whether it will accept under warranty drives that the storage system rejected but that otherwise look healthy.
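
QNAP does not document how the ES1640dc v2 decides that a drive is too slow, so the following is only a hypothetical illustration of such a check: flag any drive whose measured IOPS falls below a fraction of the median across all drives. The function name, the 0.6 threshold and the sample IOPS values are assumptions chosen to mirror our case of two drives running at about half speed.

```python
from statistics import median


def flag_slow_drives(iops_by_drive: dict[str, float], threshold: float = 0.6) -> list[str]:
    """Return drives slower than `threshold` x the median IOPS of all drives.

    Hypothetical acceptance check for illustration; not QNAP's documented logic.
    """
    baseline = median(iops_by_drive.values())
    return sorted(name for name, iops in iops_by_drive.items() if iops < threshold * baseline)


# Illustrative numbers: ten healthy drives and two running at about half speed
measured = {f"disk{i:02d}": 300.0 for i in range(10)}
measured.update({"disk10": 150.0, "disk11": 148.0})
print(flag_slow_drives(measured))
```

Comparing against the median rather than the mean keeps the slow outliers from dragging the baseline down.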

External Interface Support

The first version, the QNAP ES1640dc, had only two 10-gigabit network ports for data, and for some reason the manufacturer chose 10GBASE-T over twisted pair. Customers used to optical networks were apparently convincing enough that in the second version QNAP added four 10 Gb/s SFP+ slots. In total, each controller in the ES1640dc v2 has two copper 10 Gigabit RJ45 ports (on a discrete Intel X550-T2 network card), four 10G SFP+ slots (an Intel XL710 chip on the motherboard), a dedicated 1 Gigabit RJ45 port for management via the web interface, and a console port.

Since each QNAP ES1640dc v2 controller can work independently, out of the box and without any upgrades you get 120 Gb/s of raw network bandwidth in total, and if that somehow seems too little, you can install one more expansion card in each controller:

- QNAP LAN-10G2T-X550 (Intel X550 controller)
- QNAP LAN-40G2SF-MLX (QSFP+)
- QNAP LAN-10G2SF-MLX (SFP+)

In practice, though, 120 Gb/s should be enough for any needs, even allowing for the growth of a large enterprise, so the main value of this variety of ports lies in redundancy and fault tolerance.
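
The 120 Gb/s figure is simply the sum of the built-in data ports on both controllers; the sketch below reproduces it, leaving out the 1 GbE management port. Keep in mind that this is aggregate port speed, not throughput the disks and CPUs can actually sustain.

```python
# Built-in data ports per controller, as described in the text: (count, Gb/s each)
PORTS_PER_CONTROLLER = {
    "10GBASE-T, Intel X550-T2": (2, 10),
    "SFP+, Intel XL710": (4, 10),
}
CONTROLLERS = 2

per_controller_gbps = sum(count * speed for count, speed in PORTS_PER_CONTROLLER.values())
total_gbps = CONTROLLERS * per_controller_gbps
print(f"{per_controller_gbps} Gb/s per controller, {total_gbps} Gb/s in total")
```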

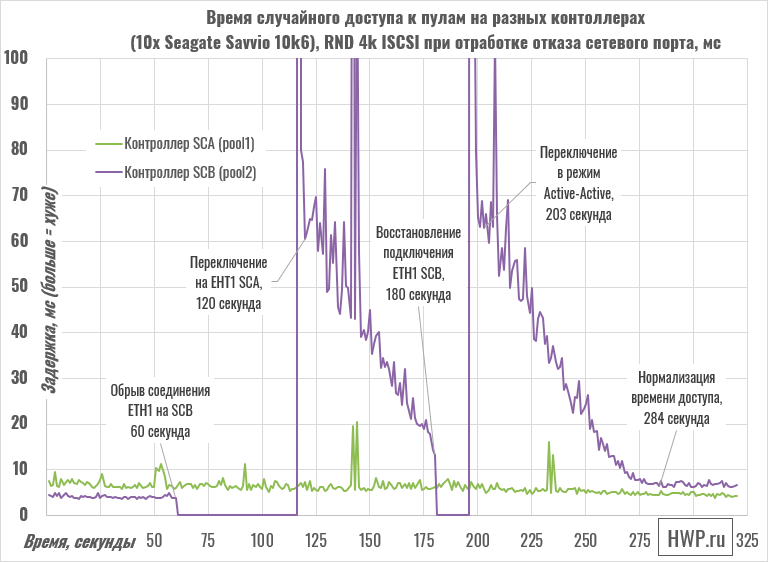

Active-Active Controller Redundancy

The first thing you do on a modern NAS is create a disk pool, and on the QNAP ES1640dc v2 you also have to specify which controller (SCA or SCB) it is bound to. For example, you can put a pool for hundreds of VDI clients on the first controller and a pool for database servers on the second. Traffic can then go through different network ports, to different hard drives, via different processors. Great, isn't it?

There is one catch, however: logically, each network interface is tied to the controller it is installed in. That is, if Ethernet 1 on the SCA controller has the address 192.168.2.1 and Ethernet 1 on SCB has 192.168.3.1, then only the pools bound to SCB will be visible at the second address, and there is no way to change this binding.

If a network port on SCB loses its link, or the controller hangs or crashes, SCA takes over both its disk pool and the IP addresses of its network interfaces. Ethernet 1 on the first controller then carries two IP addresses, 192.168.2.1 and 192.168.3.1, so the resources of both controllers are available on the same physical interface, but at different IP addresses. All other network ports are mirrored to the surviving controller in the same way. Let's see how fast this process is.
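
The takeover behavior described above can be modeled in a few lines. This is a hypothetical sketch of the logic only (the `Controller` class, the `fail_over` function and the pool names are illustrative, not part of QES): the surviving controller adopts the failed partner's pools and adds its IP addresses to the ports with the same names.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    pools: set[str] = field(default_factory=set)
    ips: dict[str, set[str]] = field(default_factory=dict)  # port name -> IPs served on it
    alive: bool = True


def fail_over(failed: Controller, survivor: Controller) -> None:
    """Move the failed controller's pools and per-port IPs to its partner."""
    failed.alive = False
    survivor.pools |= failed.pools
    for port, addrs in failed.ips.items():
        # The same-named physical port on the survivor picks up the extra addresses.
        survivor.ips.setdefault(port, set()).update(addrs)
    failed.pools, failed.ips = set(), {}


sca = Controller("SCA", pools={"vdi-pool"}, ips={"Ethernet 1": {"192.168.2.1"}})
scb = Controller("SCB", pools={"db-pool"}, ips={"Ethernet 1": {"192.168.3.1"}})
fail_over(scb, sca)
print(sorted(sca.ips["Ethernet 1"]), sorted(sca.pools))
```

Clients keep using their original addresses; only the physical port answering them changes.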

Average response time while a controller is down

60 seconds for failover and about 20 seconds for failback is not a record, of course, but for this price range it is more than good. And even such a fault-tolerant design is no reason to give up backups.

Replication and data backup technologies

We have already said that QNAP QES does not support third-party applications, and unfortunately this also applies to cloud service clients. There is no native support for Amazon S3, Microsoft Azure or Google Cloud, which looks a bit odd given how persistently leading storage experts now recommend backing up data to the cloud.

Two technologies are available for replication between NAS units: traditional Rsync and block-level SnapSync. The advantage of the latter is that before sending data to the target, the NAS can compress and deduplicate it on its own and transfer only changed blocks. This allows incremental backups of virtual machine images and databases that occupy tens of gigabytes in a single file: when only part of the data inside the file changes, only that part is transferred to the target device, and the region of the virtual machine image that holds the operating system is not backed up over and over, eating time and clogging communication channels.
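
The block-level principle can be sketched as follows, assuming 4 KiB blocks and SHA-256 block fingerprints. This illustrates the idea, not SnapSync's actual protocol: ZFS itself can find changed blocks from snapshot metadata (each block records the transaction group in which it was written) without rehashing, whereas this self-contained sketch compares per-block hashes of two versions of an image and ships only the blocks that differ. All function names are hypothetical.

```python
import hashlib

BLOCK = 4096  # illustrative block size (assumption)


def block_hashes(image: bytes) -> list[bytes]:
    return [hashlib.sha256(image[i:i + BLOCK]).digest() for i in range(0, len(image), BLOCK)]


def changed_blocks(old: bytes, new: bytes) -> dict[int, bytes]:
    """Return {block index: new contents} for blocks that differ from the previous version."""
    old_h = block_hashes(old)
    delta = {}
    for idx, h in enumerate(block_hashes(new)):
        if idx >= len(old_h) or old_h[idx] != h:
            delta[idx] = new[idx * BLOCK:(idx + 1) * BLOCK]
    return delta


def apply_delta(replica: bytes, delta: dict[int, bytes], new_len: int) -> bytes:
    """Patch the target's copy with the received blocks instead of a full transfer."""
    buf = bytearray(replica[:new_len].ljust(new_len, b"\0"))
    for idx, block in delta.items():
        buf[idx * BLOCK:idx * BLOCK + len(block)] = block
    return bytes(buf)


# A 40 KiB "VM image" in which the guest changes a single byte
base = bytes(10 * BLOCK)
updated = bytearray(base)
updated[5 * BLOCK + 3] = 0xFF
updated = bytes(updated)

delta = changed_blocks(base, updated)
print(sorted(delta), sum(len(b) for b in delta.values()), "bytes to send")
```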

Let's check how SnapSync works: we take the same VDI folder with 3 virtual machine images, sync it to a remote folder, then start Windows Server 2016, shut it down and sync again. The first backup required transferring 111 GB of data, the second only 550 MB: only the changed part was synchronized, namely the operating system updates downloaded during the test.

And, of course, we must mention snapshots, which ZFS creates in a matter of seconds thanks to its copy-on-write design. For each resource, be it a root shared folder or an iSCSI LUN, you can create up to 65,536 snapshots, which means that if you take one automatically every hour and never delete them, you can keep the complete hourly history of your files for roughly the last 7.5 years!
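
A quick check of the retention arithmetic, assuming one snapshot per hour and an average year of 365.25 days:

```python
max_snapshots = 65_536
hours_per_year = 24 * 365.25

years_of_hourly_history = max_snapshots / hours_per_year
print(f"{years_of_hourly_history:.2f} years")  # about 7.48
```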

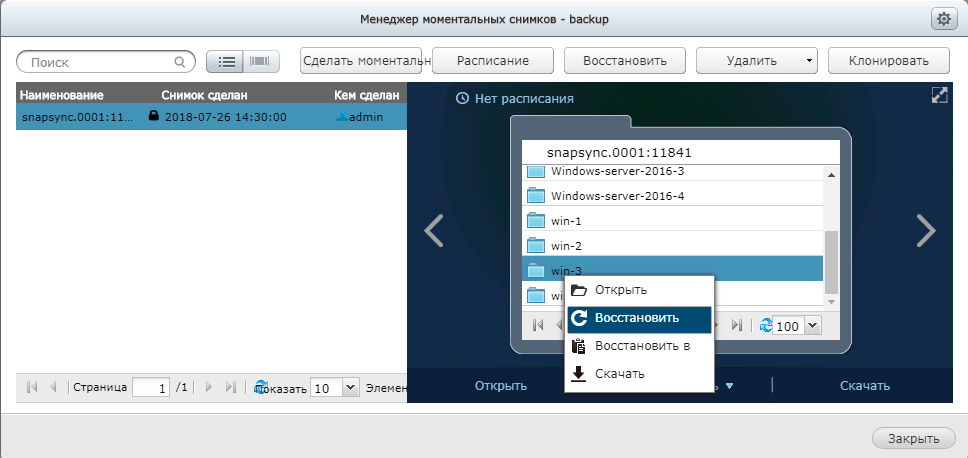

By the way, the snapshot browser is a model of how it should be done! It looks like Apple Time Machine: you open a snapshot, browse all its files in the file browser and restore the one you need. It is especially pleasant that every action in the snapshot browser is processed very quickly: instant response to a mouse click, no waiting.

As you can see, the QNAP ES1640dc v2 is designed to withstand virtually any failure in an enterprise digital ecosystem, all in one enclosure with one set of hard drives. A modular design with full redundancy, hot-swappable components and fast switching between controllers guarantees high availability, which customers traditionally pay double for; with the QNAP ES1640dc v2, however, nobody overpays, and on the contrary, you save through compression and fewer disks and shelves.

Speed test results

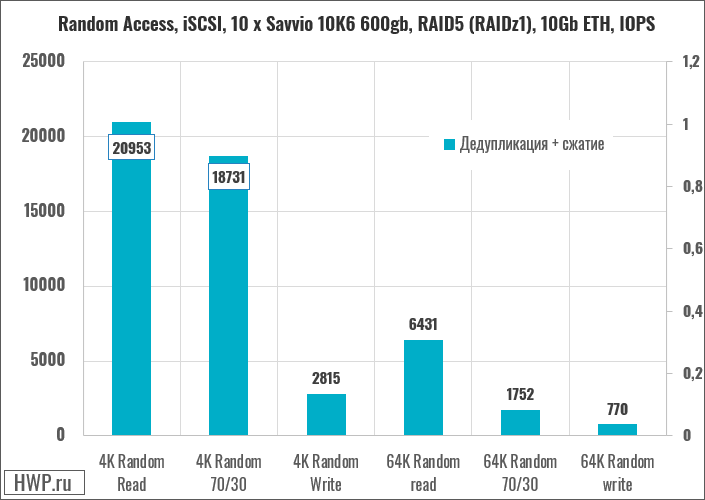

Since the QNAP ES1640dc v2 supports only file-level iSCSI LUNs, we used Iometer to create a 412 GB test file filled with a pseudo-random pattern to reduce the impact of compression on the results. We had not tested enterprise storage systems with the ZFS file system before, so we did not tune either the storage or the test bench: we measured typical values with default settings.

The results are surprising, to put it mildly: given that a single hard drive delivers about 300 IOPS in the 4K random read test, ten drives in an array cannot exceed 3000 IOPS no matter how hard they try, yet here we get 21 thousand! This is exactly what I was talking about: deduplication, plus compression, plus competent caching let you break the laws of physics in synthetic tests.

Inspired by these numbers, we ran an intermediate test: we combined two HDDs into a Stripe pool, ran a standard 4K random test and looked at the speed in the storage system's internal performance monitor. As you can see in the screenshot below, ZFS delivers a fantastic 6K IOPS even with this minimal two-drive array.
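
The gap between these numbers and what the spindles can physically deliver is easy to quantify. Assuming, as above, about 300 IOPS per drive for 4K random reads, taking the 6K figure as 6,000 IOPS, and using the 10 drives of the main array, the sketch computes the raw ceiling and a lower bound on the share of requests that must have been served without a separate disk access (from the ARC or from records already read into memory).

```python
IOPS_PER_DRIVE = 300  # 4K random read estimate for one 10K RPM SAS drive (from the text)


def min_share_without_disk_io(drives: int, measured_iops: float) -> float:
    """Lower bound on the fraction of I/Os that cannot have come from the disks."""
    ceiling = drives * IOPS_PER_DRIVE
    return max(0.0, 1 - ceiling / measured_iops)


for label, drives, measured in (("10-drive array", 10, 21_000), ("2-drive stripe", 2, 6_000)):
    share = min_share_without_disk_io(drives, measured)
    print(f"{label}: ceiling {drives * IOPS_PER_DRIVE} IOPS, measured {measured}, "
          f">= {share:.0%} served without disk I/O")
```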

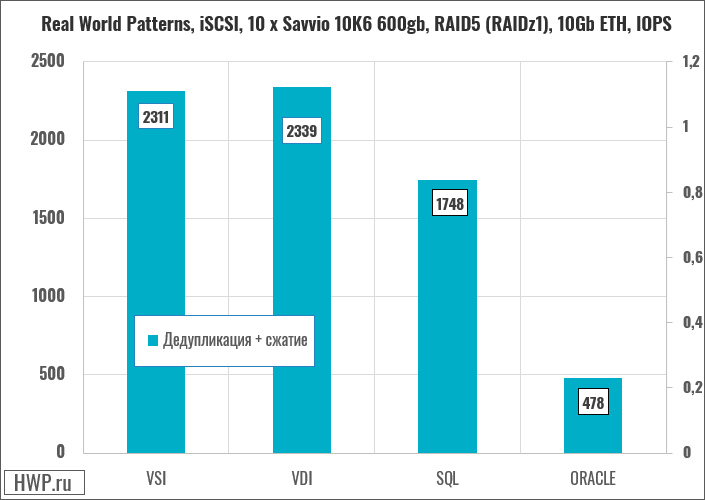

But let's go back to our main configuration and see the performance in real patterns.

In complex patterns the optimization is not visible, and the speed matches what you would expect from a storage system. The only puzzling result is a sharp drop in the Oracle pattern: in this test, storage systems usually perform comparably to their VSI results.

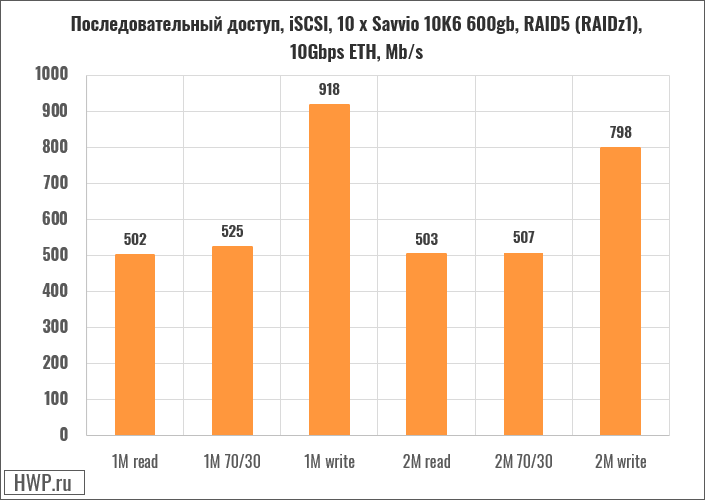

Sequential access shows exactly what you would expect: good, consistent speed with a peak at 1 MB blocks.

Scaling

Up to 7 EJ1600 (v2) disk shelves with 16 drives each can be connected to one head unit. Together with the 16 bays of the head unit itself, that makes 128 drives, which with 12 TB hard drives gives a total raw capacity of 1536 TB.
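
The capacity figure follows directly from the bay count; note that this is raw capacity, before RAID parity, hot spares, deduplication or compression.

```python
HEAD_UNIT_BAYS = 16
MAX_SHELVES = 7       # EJ1600 v2 expansion enclosures
BAYS_PER_SHELF = 16
DRIVE_TB = 12

drives = HEAD_UNIT_BAYS + MAX_SHELVES * BAYS_PER_SHELF
raw_tb = drives * DRIVE_TB
print(f"{drives} drives x {DRIVE_TB} TB = {raw_tb} TB raw")
```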

Each controller is connected to each disk enclosure with two cables (dual-path), forming a fault-tolerant loop with a total bandwidth of 48 Gb/s in which losing any single link has no consequences. But if you disconnect an entire disk enclosure that is part of an array, the head unit will block access to the data stored on it, and as soon as the enclosure is back online, access to the data will be restored.

Purchase and Warranty

QNAP ES1640dc v2 comes with a 3-year warranty, the exact price was not disclosed.

Additional licenses

All the functionality specified in the review is included in the device price and is available without additional licenses. QNAP does not have any additional licenses for this storage system at all.

Conclusions

The QNAP ES1640dc v2 is file-access storage for fast-growing customers: it lets you invest in a head unit once and then save at every step, installing fewer disk shelves, buying fewer hard drives and SSDs, renting less rack space and ultimately using less energy.

What didn't we like? We keep talking about the trend of merging storage systems and compute nodes into a single whole, and in this respect I see the lack of virtualization in QES as a step against the trend. QNAP has an object storage server, a good Container Station and a hypervisor with USB and PCIe device passthrough, but all of this lives in the QTS line, while the functionality of QES is comparable to that of SANs. Of course, you could say that the ES1640dc v2 is a machine for archival storage and cold data, but is that a reason to ignore Amazon S3 and Azure cloud services?

Yes, of course, if you are replacing an old SAN with a powerful new NAS and expect advanced features from it, you should look at the QNAP TDS-16489U. But if the project is still on the drawing board, the ES1640dc v2 is the case where you deploy a smart NAS instead of a SAN today, cut operating and expansion costs several-fold in the future, and wait for new features to arrive while the company grows.

What we liked:

- Very convenient work with snapshots
- Built-in File Station, something that expensive enterprise software and pricier NAS units lack
- The ZFS pool logic has been ripped out and replaced with RAID logic: there is no zvol, vdev or RAIDZ1 here, and that's great!
- SnapSync block replication
- Complete absence of additional licenses for anything

It's no secret that HPE, Dell/EMC and Lenovo train their sales managers to resist any comparison of their storage systems with QNAP products, building one simple image in the customer's mind: "QNAP is a home device, and a rack-mounted QNAP is an overgrown torrent downloader." We are convinced that the QNAP ES1640dc v2 tears this stereotype to pieces, because from the smallest bolt to the most powerful snapshot manager, this is true enterprise equipment for serious buyers and large projects with thousands of physical terabytes, for those who think with their heads and choose with their wallets rather than by pre-crisis stereotypes. So let them look for new arguments, or try to build something similar in the same price niche.

Mikhail Degtyarev (aka LIKE OFF)

14.08.2018