QNAP TDS-16489U - testing tiering, SSD caching and virtualization in enterprise storage

Modern storage systems are, first and foremost, software: their job is to store, protect and distribute information. One would like to say that hardware comes second, but no: for small companies, what now matters is which services a storage system integrates with, whether it can back up or even replace a "cloud", and how many paid services a single NAS replaces today and will replace tomorrow. Network storage vendors have gone further and proposed moving the entire virtual infrastructure onto one device: both the data and the applications themselves. And when a manufacturer says, "Inside our NAS you can run virtual machines and containers and work with CAD applications on a video card, and on the same device you can store hundreds of terabytes that are available to your programs without delay and protected every minute by snapshots, replication and cloud backups," we start wondering how many devices in the rack one such NAS could replace, and that is when we remember the hardware.

QNAP needs no introduction. Having started with SOHO-class desktop NASes, today it produces powerful virtualization nodes such as the TDS-16489U series, built on two Intel Xeon E5-26xx v3 processors (8 cores, 16 threads each) with 256 GB of memory and PCI Express slots for expansion cards, and, most importantly, with software that combines data storage and hypervisor functions in one web interface managed from a common window.

QNAP resolves the well-known dilemma of the last five years, "which drives should I install, fast SSDs or large HDDs?", simply: "Install both; our SSD cache does not take up hard drive bays. Our flagship TDS-16489U, designed for Big Data, has 16 bays for 3.5-inch HDDs (SAS/SATA), plus 4 bays for 15 mm SSDs (SAS/SATA) and 4 PCI Express slots, all in a 3U chassis." Different types of SSD media are needed to take advantage of both SSD acceleration technologies available today: tiering and SSD caching.

Tiering: moving hot data to SSD

The term "tiering" has no established equivalent in Russian; it means distributing data across media types, like the layers of a cake. The idea is very simple. Say we store a large amount of information on slow 7200 RPM hard drives. From time to time we return to some of these files and work with them, reading them and saving them back to disk. This is typical when preparing a report or analyzing a large archive. The storage controller notices that this data has suddenly become interesting, that applications or users are accessing it, and automatically moves it to faster media, where both the access speed and the cost per terabyte are significantly higher.

QNAP has three storage levels in Qtier technology:

- 7200 RPM SATA/NL-SAS hard drives (Capacity layer)

- 10K/15K RPM SAS hard drives

- SSD (Performance layer)

Tiering builds a separate RAID array from the SSDs and adds its capacity to the existing disk pool. This is a major advantage over simple SSD caching, where the solid-state drive's capacity does not count toward the disk array. It might seem that you could instead buy relatively inexpensive high-speed SAS hard drives and fill an additional disk shelf with them to gain speed. In today's reality, however, 10K/15K RPM drives have become so uneconomical that manufacturers are discontinuing them: solid-state drives have completely displaced them in this segment. So in practice, once multilayer storage is enabled, the most popular files will always live on solid-state drives. Because the hottest data then depends entirely on the SSD array, it is highly desirable to use RAID 6 for this fastest layer.
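The capacity argument can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes decimal terabytes, ignores filesystem and metadata overhead, and uses a hypothetical layout of 16 x 4 TB HDDs and 4 x 400 GB SSDs, both in RAID 6. The helper functions are ours, not part of QTS.

```python
def raid_usable_tb(drives: int, size_tb: float, level: int) -> float:
    """Usable capacity of one RAID group, ignoring filesystem overhead."""
    parity = {0: 0, 5: 1, 6: 2}[level]   # drives' worth of capacity spent on parity
    if drives <= parity:
        raise ValueError("not enough drives for this RAID level")
    return (drives - parity) * size_tb

def pool_capacity_tb(hdd_tb: float, ssd_tb: float, mode: str) -> float:
    """Tiering adds the SSD group to the pool; an SSD cache does not."""
    if mode == "tiering":
        return hdd_tb + ssd_tb
    if mode == "cache":
        return hdd_tb
    raise ValueError(f"unknown mode: {mode}")

# Hypothetical layout: 16 x 4 TB HDDs in RAID 6 plus 4 x 0.4 TB SSDs in RAID 6
hdd = raid_usable_tb(16, 4.0, 6)   # 56.0 TB
ssd = raid_usable_tb(4, 0.4, 6)    # 0.8 TB
print("tiering:", pool_capacity_tb(hdd, ssd, "tiering"))
print("cache:  ", pool_capacity_tb(hdd, ssd, "cache"))
```

Switching the SSD group to RAID 5 (`raid_usable_tb(4, 0.4, 5)`) gains one drive of capacity but tolerates only a single SSD failure, which is the trade-off behind preferring RAID 6 for the Performance layer.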

Specifically, the QNAP TDS-16489U has 4 additional hot-swappable bays for SSDs, so solid-state drives are installed not at the expense of the Capacity layer but in addition to it. Given that at the time of writing 7.68 TB SSDs in the 2.5-inch format were on sale (HGST Ultrastar SS200 and SS300), these four bays are enough for roughly 30 TB (4 x 7.68 TB of raw capacity) of blazingly fast storage.

The Qtier process is fully automated: a self-learning algorithm tracks how often data blocks are accessed and moves the most popular ones to a higher layer. This happens either on a schedule (at night, when everyone is asleep) or when the storage load is low, depending on configuration. You can force a move to start right away, but you cannot influence what the system puts on the SSDs and what stays on the HDDs: you can only choose the directories and LUNs for which Qtier is enabled.
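QNAP does not document how its self-learning algorithm works, so the following is only a simplified, hypothetical model of the idea: count accesses per block during an observation window and, at the scheduled relocation time, keep the most frequently accessed blocks on the SSD tier up to its capacity. Block numbers, the tier size and the `TieringPlanner` class are illustrative assumptions.

```python
from collections import Counter

class TieringPlanner:
    """Toy frequency-based tiering planner (not QNAP's actual Qtier logic)."""

    def __init__(self, ssd_capacity_blocks: int):
        self.ssd_capacity = ssd_capacity_blocks
        self.hits = Counter()            # access count per block in the current window
        self.on_ssd: set[int] = set()    # blocks currently placed on the SSD tier

    def record_access(self, block: int) -> None:
        self.hits[block] += 1

    def relocate(self) -> tuple[set[int], set[int]]:
        """Run at the scheduled time; return (promoted, demoted) block sets."""
        hottest = {b for b, _ in self.hits.most_common(self.ssd_capacity)}
        promoted = hottest - self.on_ssd
        demoted = self.on_ssd - hottest
        self.on_ssd = hottest
        self.hits.clear()                # start a fresh observation window
        return promoted, demoted

planner = TieringPlanner(ssd_capacity_blocks=2)
for block in [7, 7, 7, 3, 3, 9]:
    planner.record_access(block)
promoted, demoted = planner.relocate()
print(sorted(promoted), sorted(demoted))   # blocks 3 and 7 promoted, nothing demoted
```

The "schedule or low load" choice described above corresponds to deciding when `relocate()` is called; the access counting itself runs continuously.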

One of the most useful features of multilayer storage is writing new files directly to the Performance layer. Here is why: if you use large LUNs stored on the HDDs, be prepared for the storage system to analyze their usage for a very long time before moving them to the SSDs. With this option, you can copy an existing LUN or create a new one, and it will land on the higher layer immediately; as long as it stays under heavy load, it will remain there. Keep in mind that once you upgrade an existing disk pool to Qtier (the manufacturer's term for enabling multilayer storage), you cannot disable it.
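The "new data goes to the Performance layer first" option can be modelled as a placement rule for fresh allocations. This is a hypothetical sketch: the function name and free-space figures are ours, and it treats an allocation as a whole, whereas a real tiering engine works with blocks and may split a LUN across tiers.

```python
def place_new_allocation(size_gb: float, ssd_free_gb: float, hdd_free_gb: float) -> str:
    """Choose a tier for newly written data under a 'new data to SSD first' policy."""
    if size_gb <= ssd_free_gb:
        return "performance"   # new LUN or file lands on the SSD tier immediately
    if size_gb <= hdd_free_gb:
        return "capacity"      # SSD tier has no room, so write to the HDD tier
    raise RuntimeError("pool is out of space")

print(place_new_allocation(500, ssd_free_gb=1200, hdd_free_gb=50000))   # performance
print(place_new_allocation(2000, ssd_free_gb=1200, hdd_free_gb=50000))  # capacity
```

After placement, the frequency-based relocation takes over: data that stays busy keeps its place on the SSDs, and data that cools down is eventually demoted.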

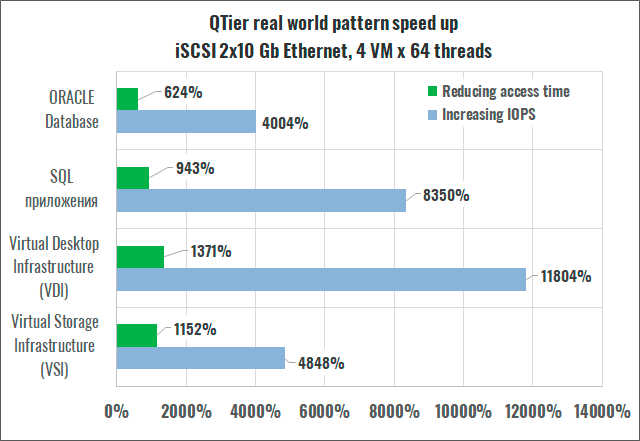

To show this technology in action, we took four 400 GB Hitachi (HGST) Ultrastar SS200 enterprise SSDs and "upgraded the disk pool to Qtier" by building a RAID 5 from them, adding about 1 TB of SAS SSD capacity to the existing disk terabytes. The upgrade took about 30 minutes, after which we ran real-world workload patterns captured by Pure Storage specialists to see how much the performance of our storage system changed.

Of course, we are all used to SSDs being tens of times faster than HDDs, but note that we did not sacrifice any hard drives: all 16 Capacity-layer bays remain at our disposal. And since Qtier is part of the QTS operating system and requires no licenses, we pay only for the solid-state drives. Each HGST Ultrastar SS200 used in our test costs about $440; is that a lot or a little for what Qtier delivers?
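For a rough answer, here is the arithmetic for our configuration. It assumes decimal gigabytes, RAID 5 losing one drive's worth of capacity to parity, and the approximate $440 unit price quoted above; filesystem overhead is ignored.

```python
DRIVES = 4
DRIVE_GB = 400
PRICE_USD = 440          # approximate unit price quoted in the article

usable_gb = (DRIVES - 1) * DRIVE_GB          # RAID 5: one drive's worth goes to parity
total_usd = DRIVES * PRICE_USD
usd_per_usable_tb = total_usd / (usable_gb / 1000)

print(f"usable: {usable_gb} GB, total: ${total_usd}, per usable TB: ${usd_per_usable_tb:.0f}")
```

This gives 1,200 GB of usable Performance-layer capacity (the "about 1 TB" mentioned above) for $1,760, or roughly $1,467 per usable terabyte.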

And given that SSD performance grows with capacity (as does the price, admittedly), terabyte-class SSDs could deliver even more impressive speedups.

If users could control what is moved between layers, how and where, there would be no need for a traditional SSD cache at all.

SSD Caching

SSD caching works at the block level. As soon as you configure it, you are asked which access type the fast media should serve, random or sequential, and whether it should cache reads only or writes as well.

In general, the SSD cache is designed as an intermediate layer between the client and the hard drives, and data does not stay in it for long: it is evicted by LRU or FIFO algorithms. This is why, when you select an SSD for read/write mode, the QNAP operating system warns you twice that if the solid-state drive fails or is disconnected, you may lose data in the disk pool. Before a scheduled replacement of the caching SSD, you must first disable the cache in the operating system settings, wait until its contents are synchronized with the hard drives, and only then remove the drive from the machine. Our tests showed that in some situations flushing the SSD cache to the disk pool can take up to 10 hours (!).
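Why a read/write cache cannot simply be pulled out becomes clearer with a toy model. The sketch below is a generic write-back LRU cache, not QNAP's implementation: writes are acknowledged as soon as they reach the cache and are marked dirty, and dirty blocks reach the backing store only on eviction or during an explicit flush. The backing store is a plain dict standing in for the HDD pool; all names are illustrative.

```python
from collections import OrderedDict

class WriteBackLRUCache:
    """Toy write-back cache with LRU eviction in front of a slower backing store."""

    def __init__(self, capacity: int, backing: dict):
        self.capacity = capacity
        self.backing = backing             # stands in for the HDD pool
        self.data = OrderedDict()          # block -> value, least recently used first
        self.dirty: set = set()            # blocks not yet written to the backing store

    def read(self, block):
        if block in self.data:
            self.data.move_to_end(block)   # hit: mark as most recently used
            return self.data[block]
        value = self.backing[block]        # miss: fetch from the slow tier
        self._insert(block, value)
        return value

    def write(self, block, value) -> None:
        self._insert(block, value)
        self.dirty.add(block)              # acknowledged, but only the cache holds it

    def _insert(self, block, value) -> None:
        self.data[block] = value
        self.data.move_to_end(block)
        if len(self.data) > self.capacity:
            old, old_value = self.data.popitem(last=False)   # evict least recently used
            if old in self.dirty:
                self.backing[old] = old_value                # write back on eviction
                self.dirty.discard(old)

    def flush(self) -> int:
        """Write all dirty blocks back; must finish before the cache SSD is removed."""
        for block in self.dirty:
            self.backing[block] = self.data[block]
        flushed = len(self.dirty)
        self.dirty.clear()
        return flushed

hdd = {1: "a", 2: "b"}
cache = WriteBackLRUCache(capacity=2, backing=hdd)
cache.write(1, "A")
print(hdd[1])    # a: the new value exists only in the cache
cache.flush()
print(hdd[1])    # A: safe to remove the cache now
```

Losing the cache device between `write` and `flush` loses the dirty data, and the flush time grows with the amount of dirty data divided by what the hard drives can absorb, which is why it can stretch to hours.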

To use the SSD more efficiently, there is a mechanism that "cuts off" large blocks of data in random-access mode, so that the cache is not filled with data the hard drives can read or write quickly on their own (for example, large files that are read and written in full, such as video recordings).
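The cutoff works as an admission filter in front of the cache, similar in spirit to the `sequential_cutoff` setting of Linux bcache. A minimal single-stream sketch, assuming the 4 MB cutoff we used in our tests; the `SequentialCutoff` class is hypothetical, and QNAP does not document exactly how its filter detects long transfers.

```python
CUTOFF_BYTES = 4 * 1024 * 1024   # assumed 4 MB cutoff

class SequentialCutoff:
    """Bypass the cache once a contiguous stream grows past the cutoff."""

    def __init__(self, cutoff: int = CUTOFF_BYTES):
        self.cutoff = cutoff
        self.next_offset = None   # offset at which the current stream would continue
        self.run_bytes = 0        # length of the current contiguous run

    def should_cache(self, offset: int, length: int) -> bool:
        if offset == self.next_offset:
            self.run_bytes += length          # request continues the stream
        else:
            self.run_bytes = length           # jump elsewhere: start a new run
        self.next_offset = offset + length
        return self.run_bytes <= self.cutoff  # long sequential runs go straight to disk

f = SequentialCutoff()
chunk = 1024 * 1024
decisions = [f.should_cache(i * chunk, chunk) for i in range(6)]
print(decisions)   # first four 1 MB chunks are cached, the rest bypass the SSD
```

A real implementation would track several streams at once; with a single stream, any interleaved random request resets the run.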

The SSD cache is completely independent of tiering: you can use either technology on its own or both at once, dedicating the fastest and most expensive NVMe media to caching and cheaper SAS/SATA SSDs to tiering.

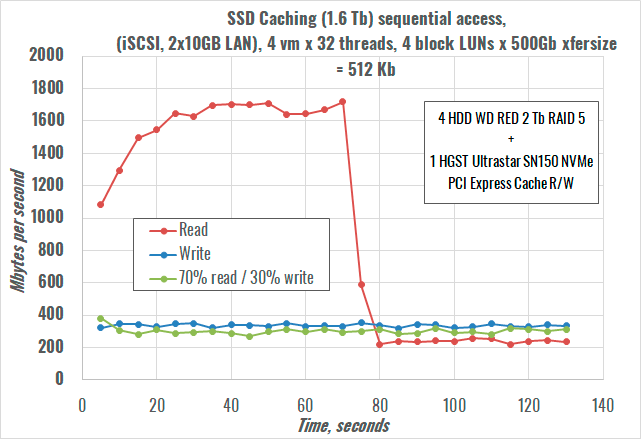

In our testing we used a 1.6 TB HGST SN150 card rated at 740,000 IOPS for 4K random reads. Note that this card is not on QNAP's recommended list, but we tried it anyway because we had no alternative at hand. Unfortunately, random-access acceleration did not work with it, although the card was detected. We tried LUNs of different sizes and switched the caching mode between random and sequential access, but in our tests there was no difference from the bare disk array. So in practice we recommend choosing components from the compatibility list on the manufacturer's website.

Sequential access acceleration, however, worked fine regardless of the operating system settings. With four 900 GB LUNs, you can see that at the start of the test the storage system fills the cache by reading data from the hard drives, after which the stream is served entirely from the cache until it is exhausted. Incidentally, the graph was obtained in "random access read/write acceleration" mode with a 4 MB cutoff.

Bottom line: to speed up the QNAP TDS-16489U disk array, use tiering and skip the SSD cache altogether.

Virtualization with video card and USB forwarding

QNAP's QTS operating system (version 4.3 at the time of writing) supports two virtualization environments: traditional and container-based. The first uses QNAP's own hypervisor, Virtualization Station 3, which is based on KVM. Containers are handled by the Container Station plugin, which supports both Docker and LXC formats; the QNAP repository also offers QNAP's own object storage server, which gives containers and third-party servers access over the OpenStack and S3 protocols.

The built-in hypervisor lets you overcommit hardware resources, that is, present all physical CPU cores and all memory to each guest, but a guest may be refused at startup if the virtual resources in use exceed what is physically available. The ability to pass a video card through to a virtual machine looks especially interesting. Why would a NAS need that?
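The behaviour described above (configure generously, but a start may be refused) can be expressed as an admission check at power-on. This is a hypothetical model: the article does not say which rule Virtualization Station applies, so the sketch assumes that a single guest may not exceed the host, that vCPUs may be overcommitted across guests, and that a start is refused when running guests plus the new one would exceed physical RAM. The 256 GB and 32-thread figures match our test unit; the `HOST_RESERVED_GB` value for QTS itself is an assumption.

```python
from dataclasses import dataclass

@dataclass
class VM:
    name: str
    vcpus: int
    mem_gb: int

HOST_THREADS = 32        # 2 x 8-core Xeon with Hyper-Threading
HOST_MEM_GB = 256
HOST_RESERVED_GB = 16    # hypothetical reserve for QTS itself

def can_start(vm: VM, running: list[VM]) -> bool:
    """Hypothetical admission rule: per-guest limits plus total RAM at start time."""
    if vm.vcpus > HOST_THREADS or vm.mem_gb > HOST_MEM_GB:
        return False                       # a single guest cannot exceed the host
    used = sum(v.mem_gb for v in running)  # vCPUs are not summed: they may be overcommitted
    return used + vm.mem_gb <= HOST_MEM_GB - HOST_RESERVED_GB

running = [VM("win10-cad", 8, 16), VM("db", 32, 192)]
print(can_start(VM("web", 32, 16), running))   # True: vCPUs overcommitted, RAM fits
print(can_start(VM("big", 4, 64), running))    # False: not enough free RAM
```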

Among QNAP's new SOHO/SMB/Enterprise servers, many support GPU installation and provide additional power for high-end video cards intended for artificial intelligence and machine learning applications. Entry-level models are perfectly suitable for experimentation and development, and top-end models can be used in production. GPUs in NAS devices are clearly a new trend, and a welcome one: besides AI and ML applications, you can now build a full-fledged virtual workstation with 3D graphics and sound on a file server, which is what we will do now.

Our test QNAP TDS-16489U was released two years ago and has no auxiliary power cables for a video card, so GPU power draw is limited to the 75 watts that a PCI-E x16 slot can deliver. NVIDIA's Quadro professional series includes the P2000, which needs no additional power and has 4 monitor outputs and drivers optimized for professional applications, including work in virtual environments. Being able to install a video card in a NAS and drive a video wall of 4 monitors is very tempting, especially when you realize that neither performance nor file access speed will hold you back: on the left, 32 threads on 16 physical Xeon cores; on the right, 180 TB of two-tier storage with SSD cache; on top, 256 GB of RAM... all that remains is to configure it all.

Experts working with 3D graphics and VDI repeatedly tried to talk me out of setting up a CAD workstation over RDP. They all agreed it would be glitchy and slow regardless of the network, and that if you use a video card for such work, you should output the picture directly via DisplayPort to a local screen or through an IP extender. We didn't have a quality Video-over-IP extender on hand, but we did have a flagship KVM-over-IP switch, the Aten KN2124VA, designed for monitoring and maintaining a large server fleet in a data center. This IP-KVM provides direct console access from a browser window or through its own software, connects to video cards with VGA, DVI, HDMI and DisplayPort outputs, and works over both gigabit and 100-megabit networks, for which it uses special media stream compression algorithms. Console modules connect to the IP-KVM directly with CAT5 cable, require no software on the server side and are powered over USB. Assuming that Video-over-IP compression would kill picture quality in 3D, I thought we would not need this device, but I was wrong...

Everything went smoothly: in one click we create a virtual machine, select the pool for its files, attach the Windows 10 x64 ISO image and install the operating system in 5 minutes, allocating 8 CPU cores and 16 GB of memory to it. We start Windows 10 in the built-in VNC console, install the guest tools package, a demo version of 3D Studio Max 19 and the latest version of SketchUp, and check that the machine is reachable over RDP. Everything works except SketchUp, which complains about the lack of a 3D accelerator, so now we will give it an entire Quadro P2000.

In QNAP QTS, GPU assignment is configured in the HD Station application. You can dedicate the video card to the operating system's built-in programs, for example for transcoding video streams, or select Passthrough mode for Virtualization Station. We choose the latter and, in the guest OS settings, add our Quadro P2000 on the GPU tab, following the one-card-per-VM rule. We start the VM, and it doesn't boot. The hypervisor's built-in VNC console does not work with a hardware GPU, and remote access via RDP does not connect at all, so it's time to connect through the Aten IP-KVM, and lo and behold, it worked! Windows 10 booted, the drivers installed and the NVIDIA logo spun in the remote desktop window, which means 3D support is working. Perhaps the video card needed to detect a connected monitor in order to initialize at boot.

The SketchUp editor started almost instantly, having detected a hardware 3D core, but 3D Studio Max, which had worked perfectly with the virtual VGA driver, refused to start. After loading a large SketchUp project and playing with objects, I came to the following conclusions: a virtual graphics workstation on a NAS is not science fiction, QNAP QTS 4.3 supports the full range of NVIDIA professional and gaming video cards, and 3D acceleration works both over a direct connection and via remote access protocols. Still, the 3D Studio Max case shows that not everything is as smooth as marketers describe, and QNAP itself points out that video cards in its NAS are installed primarily for GPU computing.

And here, incidentally, there is complete freedom. First, templates for artificial intelligence frameworks are built into the container hypervisor. Second, there is a separate QuAI application for managing machine learning models on the GPU (Caffe, CNTK, MXNet and TensorFlow are supported), which runs on top of the container hypervisor and makes it easy to monitor GPU load. However, this application was not available for our TDS-16489U.

Interestingly, snapshots are enabled automatically for every new virtual machine, and the hypervisor takes a snapshot on its own when certain configuration changes are made. Snapshots can be scheduled and browsed in a convenient catalog.

Virtual switches let you not only define the network topology by binding a physical network port to a guest machine, but also aggregate ports before passing them through and enable the Spanning Tree Protocol (STP) for loop protection. Note that QNAP Virtual Switch has no dedicated management port, so if you get carried away handing out internal subnet IP addresses to virtual machines, you may well cut off access to the web interface of the entire NAS. The only thing missing from this abundance of virtualization features is full migration of virtual machines from one storage system to another. This function is not available at all; you can move a guest OS only by cloning a powered-off virtual machine and then importing the copy.
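Before handing out addresses on a virtual switch, it is worth checking that the VM subnets do not collide with the network through which the NAS web interface is reached. A minimal sketch using Python's standard `ipaddress` module; all subnets below are hypothetical examples.

```python
import ipaddress

def conflicts(mgmt_cidr: str, vm_cidrs: list[str]) -> list[str]:
    """Return the VM subnets that overlap the NAS management subnet."""
    mgmt = ipaddress.ip_network(mgmt_cidr)
    return [c for c in vm_cidrs if ipaddress.ip_network(c).overlaps(mgmt)]

management = "192.168.1.0/24"   # hypothetical LAN used to reach the web interface
vm_subnets = ["10.10.0.0/24", "192.168.1.128/25", "172.16.5.0/24"]
print(conflicts(management, vm_subnets))   # ['192.168.1.128/25']
```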

Importing foreign VMs, on the other hand, is more than pleasant: VMX, OVA, OVF and QNAP's own QVM formats are supported. We tested porting Ubuntu 18.04, Debian 9 LTS and Windows Server 2016 from vSphere 6.0 to QNAP Virtualization Station, and everything went smoothly: each copy is deployed to a new directory, so you can import the same VM multiple times.

Of course, you cannot cover all the software features of QNAP servers in one article, so let's move on to the NAS itself.

QNAP TDS-16489U design

The TDS-16489U series consists of 4 models with the suffixes SA1, SA2, SB1 and SB2, which differ in processor model and RAM size. Our top-end TDS-16489U-SB3 has two Xeon E5-2630 processors (2.4 GHz, 8 cores, 16 threads each) and 256 GB of DDR4 ECC memory. Structurally, the NAS uses a single-controller design typical of Intel Xeon servers and is housed in a 3U chassis, which leaves room on the front panel for 16 bays for 3.5-inch hard drives with SATA 6 Gb/s or SAS 12 Gb/s interfaces.

The storage system has no fewer than three LSI SAS 12 Gb/s controllers on a separate board, so no expanders are used: every drive connects directly to a controller and runs at full speed.

As mentioned at the beginning of the article, the TDS-16489U has dedicated 2.5-inch SSD bays, accessible from the back of the server. You can install drives up to 15 mm thick here, but with hot-running SSDs such as the Hitachi Ultrastar SS200 it is worth setting the NAS fans to maximum: at room temperature these SSDs easily exceed 55 °C at idle, and overheating warnings start firing at 66 °C.

The QNAP TDS-16489U motherboard can be pulled out completely without removing the storage system from the rack, but servicing the fans requires removing the top cover. The motherboard has two M.2 slots (PCIe Gen2 x4) which, together with a battery module, can be used to preserve the write cache in the event of a power failure. The TDS-16489U has so much RAM that during a sudden power outage a single M.2 module might not have time to absorb the entire cache, so for this purpose the modules must be installed in pairs. The battery module is not available commercially, so these slots are empty.
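The reasoning about paired modules is a bandwidth-versus-time question: the dirty cache has to be written out before the battery runs down. A back-of-the-envelope sketch; the dirty-data size, M.2 write speed and battery hold-up time below are hypothetical placeholders, not QNAP specifications.

```python
def flush_seconds(dirty_gb: float, write_mb_s: float, modules: int) -> float:
    """Time to dump dirty cache when writes are striped across M.2 modules."""
    return dirty_gb * 1000 / (write_mb_s * modules)

DIRTY_GB = 64        # hypothetical amount of dirty write cache in RAM
WRITE_MB_S = 800     # hypothetical sustained write speed of one M.2 module
HOLD_UP_S = 60       # hypothetical battery hold-up time

for n in (1, 2):
    t = flush_seconds(DIRTY_GB, WRITE_MB_S, n)
    print(f"{n} module(s): {t:.0f} s, fits in hold-up window: {t <= HOLD_UP_S}")
```

With these placeholder numbers, one module needs 80 s and misses the window, while two modules finish in 40 s.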

The storage system has a pair of RJ45 Gigabit Ethernet ports and four SFP+ 10 Gbps Ethernet ports. The latter are implemented on an Intel XL710 controller, which does not support iWARP/RDMA, so if you were considering a 40-Gigabit interface, that is one more reason to install it, especially since the system natively supports third-generation Mellanox ConnectX-3 network cards with speeds up to 56 Gbps.

Keep in mind, though, that the machine has only one PCI Express x16 slot; the other three are PCI Express x8. 10/40G Ethernet cards usually use PCI-E x8, NVMe cards x4 or x8, and only rare cards with four NVMe Ultra M.2 slots need a PCI-E x16 interface. So, in plain terms, there is more than enough room for expansion. The NAS has a built-in IPMI manager with a separate network port. With an excellent web interface and open SSH access, it is hard to imagine a situation that would require the IPMI console. But if you do run into such situations, you may also want QRM+, QNAP's own application for monitoring Supermicro servers on your network.
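A quick way to check whether a card is comfortable in an x8 slot is to compare the slot's bandwidth with the card's line rate. This sketch assumes PCIe 3.0 slots (8 GT/s per lane with 128b/130b encoding; the article does not state the slot generation), counts one direction only, and ignores protocol overhead beyond line encoding.

```python
GT_PER_S = 8.0          # PCIe 3.0 raw rate per lane (assumed slot generation)
ENCODING = 128 / 130    # PCIe 3.0 line encoding efficiency

def slot_gbytes_per_s(lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe 3.0 slot in GB/s."""
    return lanes * GT_PER_S * ENCODING / 8

def nic_gbytes_per_s(ports: int, gbit_per_port: float) -> float:
    """Aggregate line rate of a NIC in GB/s, all ports running flat out."""
    return ports * gbit_per_port / 8

for name, ports, speed in [("2x 10GbE", 2, 10), ("1x 40GbE", 1, 40), ("2x 40GbE", 2, 40)]:
    need = nic_gbytes_per_s(ports, speed)
    print(f"{name}: needs {need:.1f} GB/s, x8 slot gives {slot_gbytes_per_s(8):.2f} GB/s")
```

Under these assumptions an x8 slot provides about 7.9 GB/s per direction: plenty for 10GbE or a single 40GbE port, but not enough to run both ports of a dual 40GbE card at full line rate simultaneously.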

The motherboard has 16 DDR4 ECC DIMM slots, 8 of which are already populated with 32 GB modules, and the maximum RAM capacity is 1 TB. Don't assume this much memory is needed only for Big Data: the server constantly caches data from the disk pools in RAM for faster access.

Power and environment

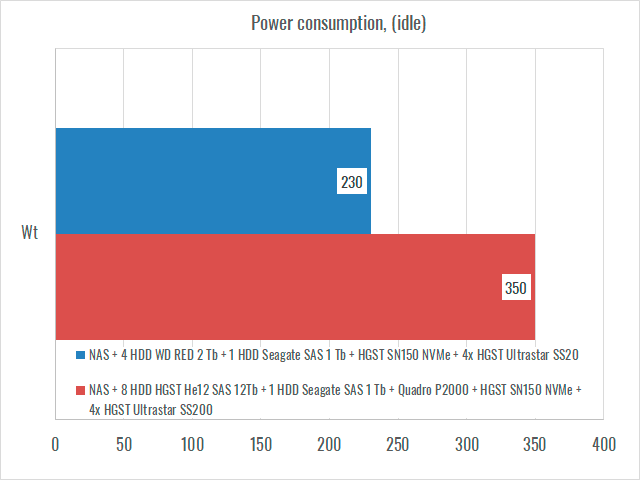

A 650 W redundant power supply with two hot-swappable modules powers the storage system. Typical power consumption with hard drives installed should not exceed 360 W, but with expansion cards it can reach 400 W.

The NAS has a very deep chassis, a full 530 mm, which is deeper than many industrial servers. The noise level reaches 68 dB, so the server is best installed in a proper data center with a cold aisle and powerful ventilation. This machine is not meant to be installed in the same room as staff.

Replication and Failover

For fault tolerance, Double-Take Availability is used, which performs real-time incremental copying of data from the primary server to the backup one. Not only files in shared folders are replicated, but also virtual machines with all their settings, as well as volume and LUN snapshots. One interesting feature of this technology is backing up a physical server into QNAP's own virtual environment, so if an old Dell or HP machine breaks down, your server keeps running in the QNAP hypervisor while you look for spare parts.

There are plenty of backup options: third-party backup software (Acronis True Image, Archiware P5), clients for cloud storage services (Azure Storage, Google Cloud Storage, Hicloud S3), synchronization between several NASes and more, such as backup to an external JBOD that can be loaded into a car trunk and driven to another city when you need to move tens of terabytes quickly. There is no point in listing every backup option: the "Backup" section of QNAP's app marketplace contains more than 30 plugins, so choose whatever suits you.

Horizontal and vertical scaling

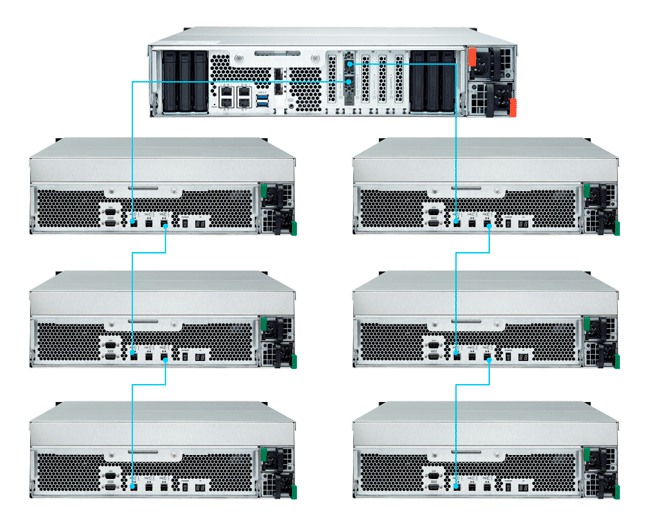

The QNAP TDS-16489U supports up to 8 disk expansion shelves with 12 or 16 bays for 3.5-inch drives (up to 144 HDDs in total, with a combined capacity of 1152 TB). Shelves connect via SAS 6 Gbps or SAS 12 Gbps, which requires installing a QNAP SAS-12G2E dual-port interface card in the head unit.
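The totals follow from simple arithmetic with the 16-bay shelf option. Note that 1152 TB across 144 bays implies 8 TB drives; that drive size is our inference, not a figure stated by the manufacturer, and the result is raw capacity before RAID.

```python
HEAD_BAYS = 16
SHELVES = 8
BAYS_PER_SHELF = 16      # the larger of the two shelf options
DRIVE_TB = 8             # implied by 1152 TB / 144 drives (our inference)

total_drives = HEAD_BAYS + SHELVES * BAYS_PER_SHELF
raw_tb = total_drives * DRIVE_TB
print(total_drives, raw_tb)   # 144 1152
```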

Accessories also include 2-port 10 Gigabit NICs with SFP+ or RJ45 ports, a 2-port 40 Gigabit NIC with QSFP+ ports, and memory modules of up to 64 GB. The manufacturer does not offer processor upgrades.

The manufacturer does not advertise load balancing between head units or horizontal scaling.

Warranty & Support

The standard warranty for the QNAP TDS-16489U is 3 years and is serviced by authorized service centers throughout Russia. The manufacturer does not currently offer extended warranty packages with guaranteed response times.

Licenses and add-on packages

All the storage functionality discussed here is provided by built-in features of the QTS 4.3 operating system and plugins from the official QNAP repository, with no licenses to buy or activate. Tiering and SSD caching, which some manufacturers charge for, are available here completely free of charge.

Third-party hardware compatibility

QNAP maintains two lists for hard drives and SSDs: a whitelist and a blacklist. We recommend studying them carefully before buying the device and drives. Our testing was supposed to use 12 TB HGST He12 hard drives with a SAS interface. QNAP's compatibility list marks the SATA versions of these drives as compatible, while the 6 TB and 10 TB SAS models from the same series are blacklisted. Ours were on neither list, and they did not work properly, causing delays of up to 20 seconds in RAID 5. I had to fall back to 2 TB WD Red drives, which is a shame, of course.

Also keep in mind that starting with QTS 4.3, QNAP storage no longer supports third-party PCI Express NVMe cards as regular storage: they can be used for SSD cache, but new volumes cannot be created on them. It is a strange policy: what used to be compatible suddenly stops working.

Testing

For testing, we used a test bench with the following configuration:

- Server 1
  - IBM System x3550
  - 2 x Xeon X5355
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Intel X520

- Server 2
  - IBM System x3550
  - 2 x Xeon X5450
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Mellanox ConnectX-2

- Storage system
  - QNAP TDS-16489U-SB3:
    - 256 GB RAM
    - PNY Quadro P2000
    - Aten KN2124VA + KA7169
    - HGST SN150 NVMe
    - 4 x SSD HGST SS200 SAS 400 GB
    - 4 x HDD SATA WD RE4 7200 RPM 2 TB

- Software
  - VMware ESXi 6.0 U1
  - Debian 9 Stretch without Intel Meltdown/Spectre patches
  - VDBench 5.04.6

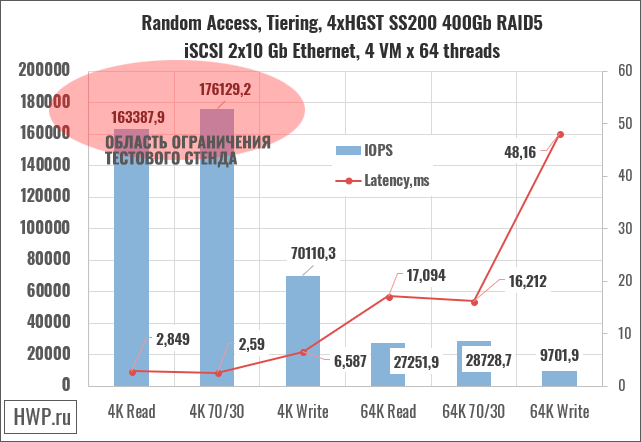

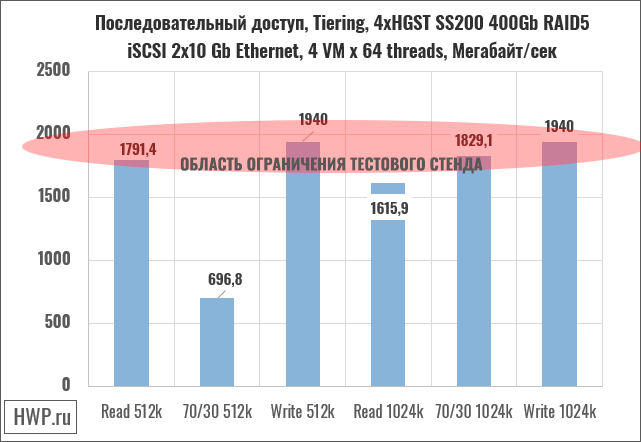

The servers were connected directly to two of the storage system's host adapters using Intel XDACBL3M direct-attach cables. On the test servers running VMware ESXi, 4 virtual machines with Debian 9 x64 guests were deployed, and 4 identical LUNs were allocated for them on the storage system. During preparation these LUNs were moved to the Qtier Performance layer, made up of the 4 HGST SS200 SSDs combined in RAID 5. Each virtual machine was connected via iSCSI to its own LUN, using a 10-gigabit network port as the uplink of the VMkernel virtual switch. Each virtual machine ran the VDBench benchmark, controlled from a dedicated machine.

Before the main test, the LUN space was pre-filled for 120 minutes to exclude the effect of new, empty HDDs and data fragmentation on speed. The diagrams show latency at maximum test bench load.
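For readers who want to reproduce a similar setup, here is a sketch that generates a VDBench parameter file with a 120-minute sequential prefill followed by a 4K random-read run. It is not our exact configuration: the device path, transfer sizes and measurement run length are assumptions, and the parameter names should be checked against the VDBench user guide for your version.

```python
def vdbench_params(device: str, threads: int = 16,
                   prefill_s: int = 7200, run_s: int = 600) -> str:
    """Build a VDBench parameter file: sequential prefill, then 4K random reads."""
    lines = [
        f"sd=sd1,lun={device},openflags=o_direct",
        # sequential 1 MB writes to fill the LUN before measuring
        "wd=wd_fill,sd=sd1,xfersize=1m,rdpct=0,seekpct=0",
        # 100% random 4 KB reads
        "wd=wd_rand4k,sd=sd1,xfersize=4k,rdpct=100,seekpct=100",
        f"rd=rd_fill,wd=wd_fill,iorate=max,elapsed={prefill_s},interval=10,threads={threads}",
        f"rd=rd_rand4k,wd=wd_rand4k,iorate=max,elapsed={run_s},interval=1,threads={threads}",
    ]
    return "\n".join(lines) + "\n"

# /dev/sdb is a hypothetical iSCSI LUN as seen inside the Debian guest
print(vdbench_params("/dev/sdb"))
```

Saved as, say, `params.txt`, such a file would be passed to VDBench with `./vdbench -f params.txt`.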

From a machine rated at 2.6 million IOPS for 4K random reads, you generally expect only that it will hit the limits of the test bench, but on some write operations that does not happen. We suspect this is due to the TDS-16489U's SAS controllers, which are relatively old by today's standards. Let's see how this affects real-world workload patterns.

Real-World Workload Patterns

From synthetic tests, let's move on to emulating real workloads. VDBench can replay patterns captured by I/O tracing tools from real applications. Roughly speaking, special software records how an application, be it a database or something else, works with the file system: the ratio of reads to writes, the mix of random and sequential operations, and the distribution of read and write block sizes.

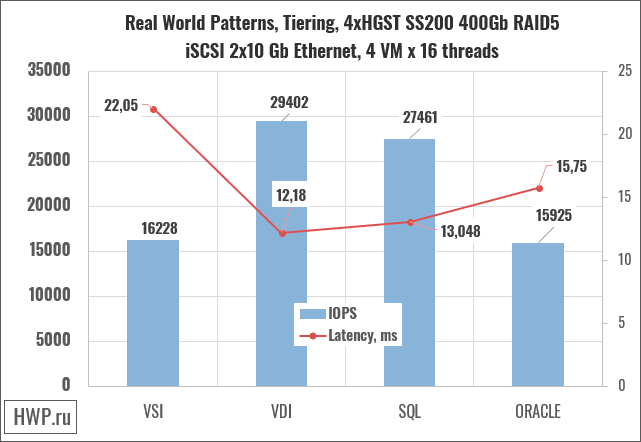

We used patterns captured by Pure Storage for four cases: VSI (Virtual Server Infrastructure), VDI (Virtual Desktop Infrastructure), SQL and Oracle Database. The test was run with 16 threads per virtual machine, giving a total queue depth of about 64.

Surprisingly, the storage system shows high latency in these patterns, yet its throughput figures remain very high.
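Little's law explains how high latency and high throughput coexist: with a fixed number of outstanding requests, average latency equals queue depth divided by throughput. A sketch using the queue depth of our setup (4 VMs x 16 threads); the IOPS values are hypothetical examples, not our measured results.

```python
def avg_latency_ms(queue_depth: int, iops: float) -> float:
    """Little's law: average latency = outstanding I/Os / completion rate."""
    return queue_depth / iops * 1000

QUEUE_DEPTH = 4 * 16                        # 4 VMs x 16 VDBench threads
for iops in (50_000, 100_000, 200_000):     # hypothetical throughput levels
    print(f"{iops} IOPS -> {avg_latency_ms(QUEUE_DEPTH, iops):.2f} ms")
```

At a constant queue depth of 64, any operation the array completes more slowly shows up directly as higher latency, even while total IOPS stay high.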

Purchase price and class

The QNAP TDS-16489U is sold to order, with pricing quoted for each end customer. According to our information, the price of the device without disks is in the range of 20 to 25 thousand dollars. Since we do not know the exact cost, we cannot calculate economic metrics.

Conclusions

The QNAP TDS-16489U no longer belongs to the class of pure data storage systems: it is a ready-made "server + storage" appliance that you can put into a Big Data processing environment and configure to run the main applications locally. This not only saves significantly on data center network infrastructure but also simplifies software management and updates.

Qtier, which the customer gets free of charge, really is the feature to base a purchase decision on. If possible, choose SSDs large enough to hold the most in-demand LUNs entirely, because the algorithm works best when you create new logical volumes on a Qtier array and they are placed on the Performance layer immediately. Testing with modern Hitachi SSDs showed that the TDS-16489U's disk controllers do not unlock the full potential of today's solid-state drives, so when configuring to order, prioritize SSD capacity over SSD performance.

In virtualization, the focus on machine learning applications and GPU support deserves every praise, as does QNAP's work on its own object storage server, which in principle already lets you abandon third-party clouds entirely; as practice shows, access to them can be cut off at any time.

Michael Degtjarev (aka LIKE OFF)