Threadripper vs. EPYC: testing three AMD 32-core processors in server applications

After VMware's recent decision to change the licensing policy for its ESXi hypervisor, introducing a limit of 32 physical cores per processor socket license, the 32-core mark has become the front line where AMD reigns supreme. If you are choosing a 32-core platform for virtualization, containers or applications today, you can pick from three AMD server architectures at once:

- the good old first-generation EPYC, code-named “Naples”, which can now be bought at a big discount;

- the second-generation EPYC, code-named “Rome”, recently refreshed with models whose L3 cache has been increased to 256 MB;

- the gaming “monster”, the second-generation Ryzen Threadripper, which gets special attention in this article.

As is often the case, AMD offers a large selection of 32-core processors for different tasks. We took the EPYC 7551P, EPYC 7532 and Threadripper 2990WX to test and compare them in popular server applications:

- MySQL (MariaDB)

- Redis

- NGINX

- TensorFlow

- ElasticSearch 7.6.0

We will find out when it makes sense to choose the good old first-generation EPYC, when the newest EPYC Rome with its enlarged cache, and when to drop everything and put every gamer's dream, the Threadripper 2990WX, into a server.

Why does Threadripper get special attention?

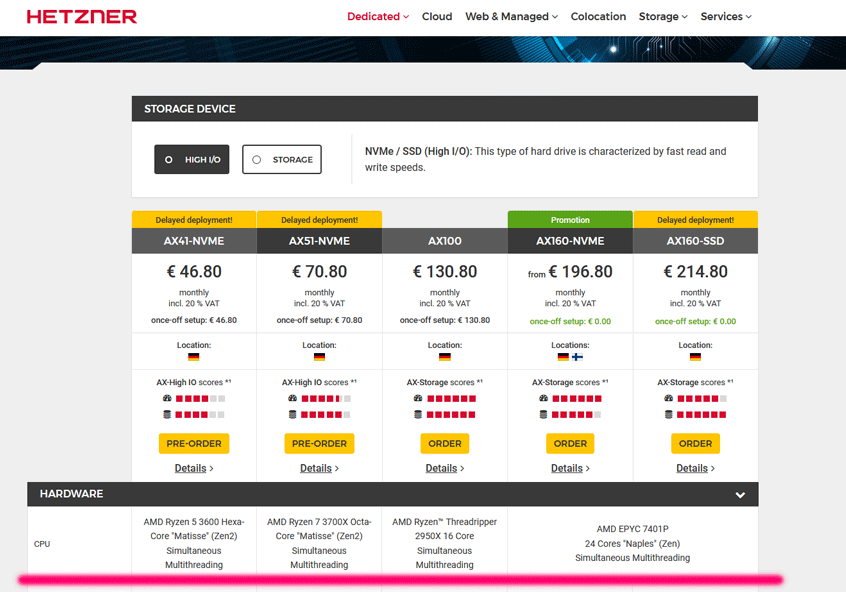

Because Hetzner recently introduced dedicated servers based on Ryzen Threadripper processors. This is probably not the first use of gaming CPUs in cloud hosting, but as far as I remember, it is the first time a world-famous company has proudly offered a HEDT server for rent. I would have ignored it if this were some small local hosting provider, but Hetzner is one of the ten largest cloud providers in the world and has an excellent reputation.

Although this company has long been known for using desktop components, Hetzner is a large, thriving business, and if it takes such a step, we should follow its example and figure out whether there is an opportunity to save money here and why one would buy a Threadripper instead of an EPYC: although the two processors use almost the same socket, they require different motherboards.

Server platform for Threadripper?

There is only one dedicated server motherboard in the world for first- and second-generation Threadripper processors, and it is made by ASRock Rack. This manufacturer likes to experiment: for example, it makes a Mini-ITX board for LGA3647 and server boards for Socket AM4. Hetzner's Ryzen servers run on ASRock Rack boards. Unfortunately, the deal between ASRock Rack and Hetzner is under NDA, but piecing together the information I collected, I assume that this particular motherboard was used, with the minimal changes typical for a large customer.

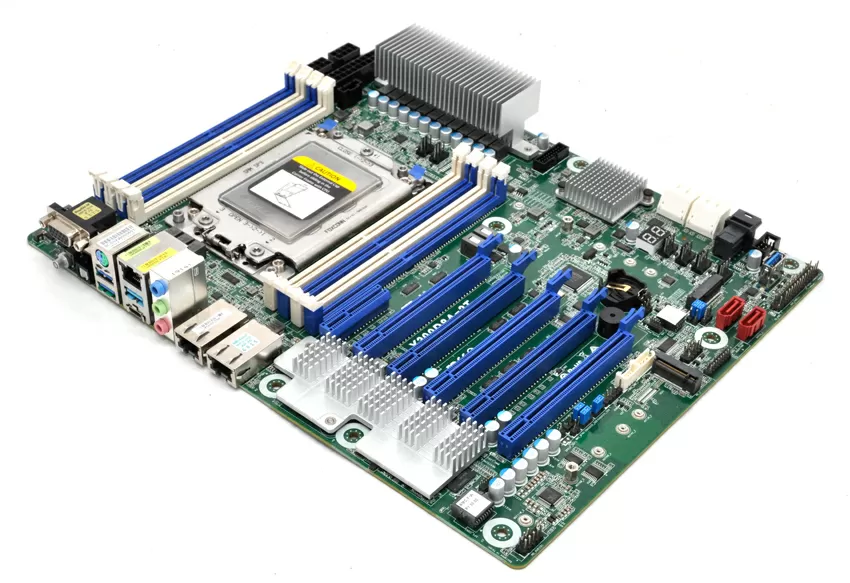

The ASRock Rack X399D8A-2T is an ATX platform with two 10-Gigabit ports on Intel's most modern 10GBase-T controller, the X550-T2. The board has IPMI monitoring based on the ASPEED AST2500 chip with a dedicated 1-Gigabit LAN port, 8 DIMM slots with ECC support, two M.2 slots for NVMe/SATA, 8 SATA ports and 5 PCIe 3.0 x16 slots (3 running at x16 and 2 at x8). In many ways the board repeats the ASRock Rack EPYCD8-2T for EPYC 7000, which is understandable: the arrangement of memory slots, PCIe slots and rear-panel connectors is the same.

Cooling options are limited too: the lowest-profile Threadripper coolers available on the open market are the 2U-high Supermicro SNK-P0063AP4 and Dynatron A26, and a somewhat better and taller option is the Noctua NH-U9 TR4-SP3 for 4U chassis. Considering that the Threadripper 2990WX has a TDP of 250 W, it makes sense to look for a liquid-cooled platform, and these are not empty words: to keep the Threadripper 2990WX in our test bench from overheating and let it reach its maximum frequencies, I had to use not only a liquid cooling system with a 360 mm radiator but also place the bench in a room with an air temperature of +10 °C.

As for RAM, Ryzen Threadripper processors support only unbuffered memory. They have a 4-channel DDR4 controller, and on the ASRock Rack X399D8A-2T server motherboard memory speed is limited to 2666 MHz. Since these processors do not support registered (buffered) modules, large amounts of RAM are not available in Threadripper servers. The compatibility list contains 8 GB and 16 GB modules, and up to 256 GB of RAM can be installed on the motherboard in total. That is very little for virtualization but sufficient for containers or standalone applications that need a lot of processing power; as confirmation, CloudFlare uses servers with 256 GB of RAM.
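The gap between four and eight memory channels is easiest to see as theoretical peak bandwidth: channels × transfer rate × 8 bytes per 64-bit DDR4 channel. A minimal sketch, assuming every channel is populated and runs at the maximum speed quoted in this article (2666 MT/s for the Threadripper 2990WX on this board and for the EPYC 7551P, 3200 MT/s for the EPYC 7532); sustained bandwidth in real workloads is always lower.

```python
# Theoretical peak DRAM bandwidth: channels x MT/s x 8 bytes (64-bit DDR4 channel).
# Assumes all channels are populated at the maximum supported speed;
# real sustained bandwidth is always lower than this figure.

BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel moves 8 bytes per transfer

PLATFORMS = {
    # name: (memory channels, max transfer rate in MT/s)
    "EPYC 7551P": (8, 2666),
    "Threadripper 2990WX": (4, 2666),
    "EPYC 7532": (8, 3200),
}


def peak_bandwidth_gbs(channels: int, mts: int) -> float:
    """Return theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    return channels * mts * 1_000_000 * BYTES_PER_TRANSFER / 1e9


if __name__ == "__main__":
    for name, (channels, mts) in PLATFORMS.items():
        print(f"{name:20s} {peak_bandwidth_gbs(channels, mts):6.1f} GB/s")
```

This gives about 170.6 GB/s for the EPYC 7551P, 85.3 GB/s for the Threadripper 2990WX and 204.8 GB/s for the EPYC 7532, so on paper the Threadripper has half the memory bandwidth of even the first-generation EPYC.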

Otherwise, you can take a Threadripper, write "I'M EPYC!" on its lid with a felt-tip pen and treat it as an EPYC. Let's figure out the differences.

Differences between an SoC and a traditional CPU

Close your eyes, hold a Threadripper 2990WX in your left hand and an EPYC in your right, and you will not feel any difference: these processors have almost the same socket, size and weight. The only difference is that EPYC is a full-fledged SoC that does not need a south bridge on the motherboard, while Threadripper is a CPU that still needs a chipset. The south bridge handles the PCI Express lanes wired to some of the slots, the SATA ports and other peripheral I/O. Let's compare the topology of a typical EPYC motherboard with that of a Threadripper motherboard.

The ASRock Rack X399D8A-2T uses the same chipset as gaming motherboards, the AMD X399, which advanced gamers have already written off; it still has some service left in servers. Compared with the EPYCD8-2T, the peripheral functionality has no drawbacks: 2 SATA ports with DOM support for booting the hypervisor, plus 8 more SATA ports, 4 of which are routed to a Mini-SAS connector for a drive cage or backplane; 2 M-Key slots, one of which can work as OCuLink; and even a USB port soldered onto the board for a USB flash drive with VMware ESXi, placed so as not to interfere with graphics cards. Yes, one of the M.2 slots uses a lane from the south bridge in SATA mode, but let's be objective: there is no point in occupying this slot with a SATA drive. It calls for a 2280 NVMe drive, and in that case the drive exchanges data directly with the processor. By the way, you can combine two M.2 drives into RAID 0/1 from the motherboard BIOS.

Naturally, the ASRock Rack X399D8A-2T has fewer PCI Express x16 slots than EPYC motherboards, where all slots are x16 (PCI Express 3.0 for first-generation EPYC and PCI Express 4.0 for the second generation). In practical terms, a Threadripper platform is clearly not designed for three or more GPUs such as the Nvidia Tesla V100 running at PCIe 3.0 x16, but you can use network cards and HBAs that need a PCI Express x8 link.

Memory slots are oriented along the airflow for optimal cooling in the rack. If the rack is short on network switch ports, you can reach the IPMI interface of the ASPEED AST2500 chip through either of the 10-Gigabit ports in out-of-band mode. In general, this motherboard has everything you are used to in a traditional server, plus a USB Type-C port that EPYC boards lack, to which you can connect a whole disk enclosure such as the [QNAP TR-004U](https://www.qnap.com/en/product/tr-004u). The board also has a Realtek ALC892 audio codec, which is suitable for audio redirection on an RDP server, but nothing more.

Threadripper vs. EPYC: Differences in Memory Controllers

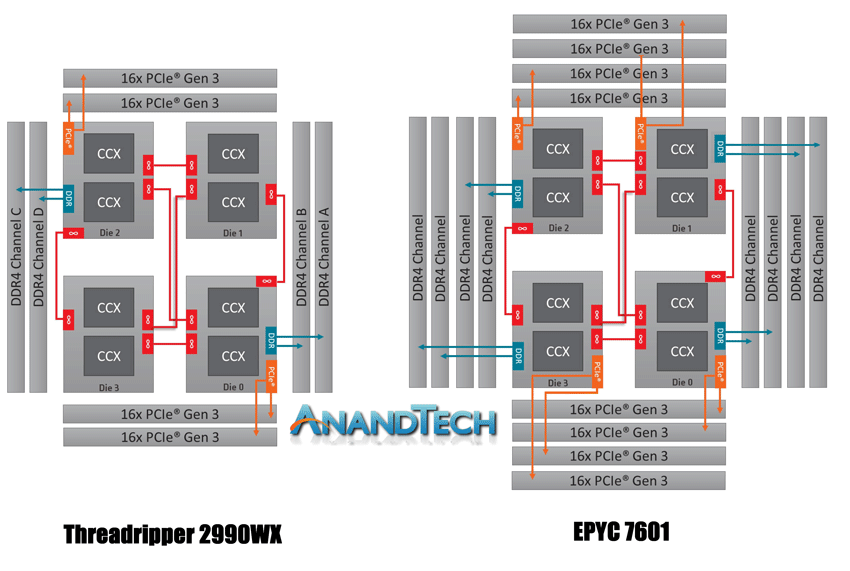

The three processors differ greatly in how they work with RAM. Second-generation Threadripper processors use a 4-channel controller, while first-generation EPYC has an 8-channel controller. In both, the memory controllers are physically located on the dies that carry the cores and cache (each of the four dies contains two CCXs, or Core Complexes, of four cores each). As a result, each group of 8 cores in a first-generation EPYC has direct access to no more than two memory channels, and the hypervisor's job is to allocate a virtual machine's memory so that its virtual CPUs and virtual memory are served by the same die.

Things are much worse with the Threadripper 2990WX: two of its dies have no memory controllers of their own and access memory through neighboring dies, which adds latency. The diagram also shows that if you plan to use all 32 cores, you should install all 8 memory modules in a first-generation EPYC, whereas four are enough for the Threadripper 2990WX. Naturally, the more your application accesses RAM, the more noticeable the difference between AMD's desktop and server processors will be, but in practice not every workload is that memory-bound.
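The die-to-channel layout described above can be expressed as a small model. This is a simplified sketch, not AMD documentation: it assumes four dies of 8 cores each, two memory channels per die on the EPYC 7551P, and memory controllers on only two dies of the Threadripper 2990WX (which two dies carry them is an arbitrary labeling here). It also counts the minimum number of DIMMs needed to populate every channel.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Die:
    die_id: int
    cores: range                # physical core indices on this die (SMT ignored)
    channels: Tuple[int, ...]   # memory channels wired directly to this die


def naples_epyc() -> List[Die]:
    # Four dies x 8 cores; every die owns two of the eight DDR4 channels.
    return [Die(d, range(d * 8, d * 8 + 8), (2 * d, 2 * d + 1)) for d in range(4)]


def threadripper_2990wx() -> List[Die]:
    # Four dies x 8 cores, but only dies 0 and 1 have memory controllers (4 channels).
    return [
        Die(d, range(d * 8, d * 8 + 8), (2 * d, 2 * d + 1) if d < 2 else ())
        for d in range(4)
    ]


def local_channels(topology: List[Die], core: int) -> Tuple[int, ...]:
    """Channels a core reaches without crossing to another die (empty = always remote)."""
    for die in topology:
        if core in die.cores:
            return die.channels
    raise ValueError(f"core {core} is not in this topology")


def min_dimms_for_all_channels(topology: List[Die]) -> int:
    """One DIMM per channel is the minimum that populates every controller."""
    return sum(len(die.channels) for die in topology)


if __name__ == "__main__":
    for name, topo in (("EPYC 7551P", naples_epyc()), ("TR 2990WX", threadripper_2990wx())):
        print(name, "core 20 local channels:", local_channels(topo, 20),
              "| min DIMMs:", min_dimms_for_all_channels(topo))
```

In this model core 20 of the 2990WX sits on a die with no local channels, so every memory access from it goes through a neighboring die, which is the extra latency described above.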

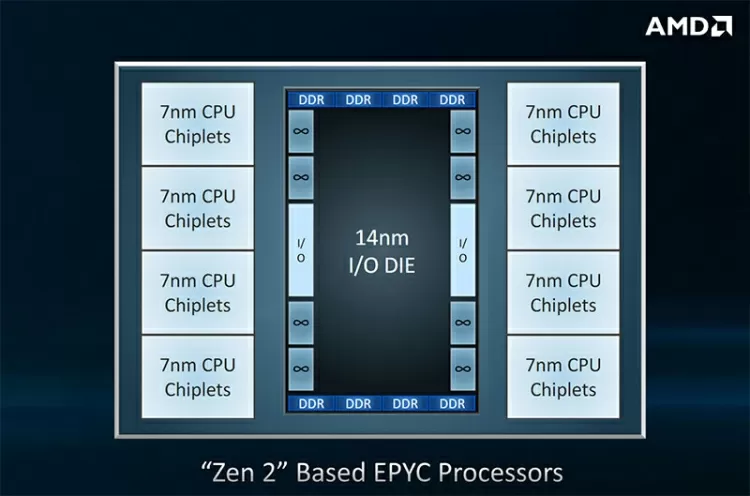

The fundamental change in the new EPYC Rome processors is that the inter-processor switch, the PCI Express controller, the memory controllers and all the other I/O have been moved off the core dies onto a separate central chip, the so-called I/O die. As a result, every core has access to all 8 memory channels, which both simplifies the hypervisor's job and improves performance. In theory, this step alone should be enough for EPYC Rome to outperform Zen 1 CPUs with the same number of cores in every test, but let's not rush (spoiler: a lot depends on the architecture of the application under test and the amount of data).

What EPYC does not have!

Interestingly, the ASRock Rack X399D8A-2T uses a traditional blue-and-gray text BIOS that (I can't resist mentioning it) supports CPU and memory overclocking and can save overclocking settings in profiles. The built-in watchdog timer will reboot the server if it hangs because of overclocking. Of course, some will say that overclocking has no place in a server, but don't rush to judgment: there is a whole class of servers for which CPU frequency is the decisive selection criterion. They only need to work about 8 hours a day, during trading hours, and can rest the remainder. These are HFT (high-frequency trading) servers, which make money on the exchange by placing orders in the short window while the exchange server is requesting a retransmitted network packet from the client who submitted a buy/sell order. Such machines are usually installed in the same data centers as the exchange's servers, and the hardware goal is the minimum possible delay in placing buy/sell orders. Overclocking, liquid cooling, processors running at 5 GHz and above, and even FPGA boards are common in such servers.

There are also non-critical tasks, such as rendering or training neural network models, where after a failure you can resume from the last completed step. And let's not forget that gamers run their processors overclocked for years without freezes, and some cannot imagine a computer without overclocking at all. The ASRock Rack X399D8A-2T is ready for all these cases, but if you don't want to overclock, you don't have to!

As for EPYC, the second-generation processors differ from the first in that you can configure NUMA, mapping memory either to individual groups of cores or to the entire socket at once. You may also get access to memory timing (latency) settings, but that depends on the motherboard manufacturer and is extremely rare.
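On EPYC Rome platforms this option is usually called NPS (NUMA nodes per socket), with settings such as NPS1, NPS2 and NPS4; the exact menu names and which values are offered depend on the CPU model and the motherboard BIOS. A simplified sketch of how each setting would partition a 32-core, 8-channel socket, assuming an even split of cores and channels between nodes:

```python
from typing import Dict, List


def nps_layout(nps: int, cores: int = 32, channels: int = 8) -> List[Dict[str, range]]:
    """Split one socket into `nps` NUMA nodes with equal cores and memory channels.

    Simplified model: real platforms may restrict which NPS values are
    available depending on the CPU model and BIOS.
    """
    if nps not in (1, 2, 4):
        raise ValueError("NPS must be 1, 2 or 4 in this model")
    cores_per_node = cores // nps
    channels_per_node = channels // nps
    return [
        {
            "cores": range(n * cores_per_node, (n + 1) * cores_per_node),
            "channels": range(n * channels_per_node, (n + 1) * channels_per_node),
        }
        for n in range(nps)
    ]


if __name__ == "__main__":
    for nps in (1, 2, 4):
        nodes = nps_layout(nps)
        print(f"NPS{nps}: {len(nodes)} node(s), "
              f"{len(nodes[0]['cores'])} cores and {len(nodes[0]['channels'])} channels per node")
```

NPS1 presents the whole socket as one node with all 8 channels interleaved, which suits a hypervisor that cannot place VMs carefully; NPS4 gives four nodes of 8 cores and 2 channels each, closer to the first-generation EPYC layout but without its cross-die penalties.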

What Threadripper doesn't have

Remember that EPYC is the most secure of these processors. This has been the case since the first-generation Naples core, which offers memory encryption options, and EPYC Rome added register encryption (!), significantly improving virtual machine isolation. These features are practically a mini-revolution with a single but significant drawback: the most attractive ones, like AMD SEV, work only under the Linux KVM hypervisor. VMware is expected to support them starting with version 7, and it is unknown when to expect support in Windows Server with Hyper-V.

As for mundane things like SSE/AVX support, all three processors are the same.

| | EPYC 7551 | TR 2990WX | EPYC 7532 |
|---|---|---|---|
| Year of release | 2017 | 2018 | 2020 |
| Cores | 32 | 32 | 32 |
| Threads | 64 | 64 | 64 |
| L3 cache, MB | 64 | 64 | 256 |
| AMD SEV | Yes | No | Yes |
| Memory channels | 8 | 4 | 8 |
| Memory type | ECC RDIMM | UDIMM, ECC UDIMM | ECC RDIMM |
| Maximum memory frequency, MHz | 2666 | 2666 | 3200 |
| Base - boost frequency, GHz | 2.0 - 3.0 | 3.0 - 4.2 | 2.4 - 3.3 |
| TDP, W | 180 | 250 | 200 |

However, if you are a reputable company with a serious approach to security, it is better to choose the new EPYC, if only for its additional memory and register encryption features.
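On a Linux host you can check which of these features the kernel reports by reading the `flags` line of `/proc/cpuinfo`. A minimal sketch, assuming a kernel recent enough to expose AMD memory encryption as the `sme`, `sev` and `sev_es` flags (SEV-ES is the encrypted register state added with Rome); a flag only shows that the CPU and kernel know about the feature, while actually using SEV for guests also depends on BIOS settings and KVM configuration.

```python
import os
from typing import Dict, Iterable, Set

# Instruction-set and memory-encryption flags discussed in this article.
FEATURES = ("sse4_2", "avx", "avx2", "sme", "sev", "sev_es")


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Return the flag set from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Line format: "flags\t\t: fpu vme de pse ..."
            return set(line.split(":", 1)[1].split())
    return set()


def feature_report(flags: Set[str], features: Iterable[str] = FEATURES) -> Dict[str, bool]:
    """Map each feature name to whether the kernel lists it."""
    return {name: name in flags for name in features}


def main(path: str = "/proc/cpuinfo") -> None:
    if not os.path.exists(path):  # not a Linux host
        print(f"{path} not found")
        return
    with open(path, encoding="utf-8") as f:
        report = feature_report(cpu_flags(f.read()))
    for name, present in report.items():
        print(f"{name:8s} {'yes' if present else 'no'}")


if __name__ == "__main__":
    main()
```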

VMware support

Threadripper processors are not officially supported by the ESXi hypervisor at all and hardly ever will be, since VMware has not even certified the server-grade EPYC 3000. That does not mean vSphere will not run on the Threadripper 2990WX: for now, it does. I tested it, and even live migration of virtual machines between ESXi hosts with EPYC and Threadripper worked without problems. The ASRock Rack X399D8A-2T platform supports SR-IOV and PCIe passthrough, so you can, for example, pass a SATA controller through to a guest operating system for virtual storage.

Everything works and there is nothing to complain about; turbo boost works inside guest systems with default settings. But the knowledge that nobody guarantees anything for an uncertified configuration stays lodged in the back of your mind.

Frequencies

The first EPYC's frequency situation is sad: when it was developed, 180 W was considered too much for the server market, and by design all of its cores could not boost simultaneously. The maximum frequency of 2998 MHz is reached only with 4 loaded cores; with 8 loaded cores only 2 of them can still reach it, while the rest run at 2543 MHz, or lower if an active power-saving mode is selected. With 16 active threads the maximum boost frequency is available on only 1 core, and with all 32 cores loaded they all settle at 2543 MHz with no boost at all. Our motherboard's BIOS offers no option to raise the power limit for these processors, and installing liquid cooling will not help: the limit is not temperature but the processor itself. Today it is possible to put a 250 W processor in a 1U chassis, giving a third of its useful volume to the cooling system, but three years ago that was considered extremely unfashionable, even vulgar.

The beauty of the Threadripper 2990WX lies in its frequencies and its practically unlimited appetite for power from the wall socket: the base frequency is 3000 MHz, rising to 4200 MHz in turbo mode. It was made for computers that cost as much as a three-year-old foreign car, where the HEDT buyer will choose a top-end 360 mm RGB liquid cooling system, a massive Noctua air cooler, or a custom water loop for another $1,000. How you dissipate the heat is not just your concern; it is part of your hobby, your lifestyle and the interests you share on forums. AMD simply gives you a devourer of any program code and leaves it to you to use it properly, even if that means disabling half the cores in the BIOS to reach the maximum frequency. Why, you ask? The top frequency of 4174 MHz can be reached only when 2 cores are loaded; with 8 loaded cores the frequency drops to 3800 MHz. With 16 cores fully loaded, each runs at 3600-3700 MHz, and all 32 cores together sustain 3394 MHz. Some enthusiasts have found that disabling half of the cores in the Threadripper 2990WX helps it hold its maximum clocks consistently and perform better in games.

| | EPYC 7551P | Threadripper 2990WX | EPYC 7532 |
|---|---|---|---|
| Maximum number of cores running simultaneously at maximum frequency | 4 | 2 | 32 |
| Frequency of each core with 32 threads loaded, MHz | 2543 | 3394 | 3296 |
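One rough way to read the table is to multiply the all-core frequency by the number of cores, which gives the aggregate clock each chip sustains with 32 threads loaded. This is only a proxy built from the measured frequencies above: it assumes equal work per clock, which does not hold across Zen, Zen+ and Zen 2, and ignores cache and memory effects.

```python
# All-core frequencies measured with 32 threads loaded (MHz), from the table above.
ALL_CORE_MHZ = {
    "EPYC 7551P": 2543,
    "Threadripper 2990WX": 3394,
    "EPYC 7532": 3296,
}
CORES = 32


def aggregate_ghz(mhz: int, cores: int = CORES) -> float:
    """Sum of clock across all cores, in GHz."""
    return mhz * cores / 1000


def relative_to(baseline: str, table: dict = ALL_CORE_MHZ) -> dict:
    """All-core clock of each CPU as a percentage of the baseline CPU."""
    base = table[baseline]
    return {name: round(100 * mhz / base, 1) for name, mhz in table.items()}


if __name__ == "__main__":
    for name, mhz in ALL_CORE_MHZ.items():
        print(f"{name:20s} {aggregate_ghz(mhz):6.1f} GHz aggregate")
    print(relative_to("EPYC 7551P"))
```

With these figures the Threadripper 2990WX sustains about 33% more aggregate clock than the EPYC 7551P, and the EPYC 7532 about 30% more.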

The recently announced EPYC 7532 is an attempt to get maximum performance out of 32 cores. In fact, it is a variant of the 64-core EPYC 76x2 with half of its cores disabled, while the L3 cache of all chiplets is kept and shared among the remaining cores, amounting to 256 MB per processor (8 MB per core). The price for this is higher power consumption: up to 200 W. I want to draw your attention to the fact that one step below, AMD has the EPYC 7452, which also has 32 cores and similar frequencies (2.35-3.35 GHz) but a strikingly lower TDP of only 155 W. This spread in power consumption is explained by the hardware configuration: 4 chiplets with 8 cores each in the EPYC 7452 versus 8 chiplets with 4 cores each in the EPYC 7532. One step above sits the higher-frequency EPYC 7542 with a base frequency of 2.9 GHz and a TDP of 225 W. Interestingly, EPYC frequencies are not restricted by the number of active threads or by AVX/AVX2 instructions: the frequencies of all 32 cores move independently of each other between 1496 and 3296 MHz, which is the best turbo boost scheme one could wish for. This is broadly true of the entire modern EPYC Rome 7xx2 series: the turbo frequency scheme in these processors has been completely redesigned, and the whole new line is able to keep all cores above 3000 MHz.
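The cache arithmetic behind the two 32-core Rome variants follows from the chiplet layout: each Zen 2 core chiplet (CCD) carries 32 MB of L3 (two CCXs with 16 MB each) regardless of how many of its cores are enabled, so spreading 32 cores over more chiplets brings more cache. A short sketch using the chiplet counts for the EPYC 7452 and EPYC 7532:

```python
L3_PER_CCD_MB = 32  # each Zen 2 CCD: two CCXs with 16 MB of L3 each


def rome_config(ccds: int, cores_per_ccd: int) -> dict:
    """Cores and L3 for an EPYC Rome part built from `ccds` chiplets."""
    cores = ccds * cores_per_ccd
    l3_mb = ccds * L3_PER_CCD_MB  # L3 stays with the chiplet even if some cores are disabled
    return {"cores": cores, "l3_mb": l3_mb, "l3_per_core_mb": l3_mb / cores}


if __name__ == "__main__":
    print("EPYC 7452:", rome_config(ccds=4, cores_per_ccd=8))
    print("EPYC 7532:", rome_config(ccds=8, cores_per_ccd=4))
```

This reproduces the 256 MB (8 MB per core) figure for the EPYC 7532 and gives 128 MB (4 MB per core) for the EPYC 7452; twice as many active chiplets is also consistent with the higher TDP described above.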

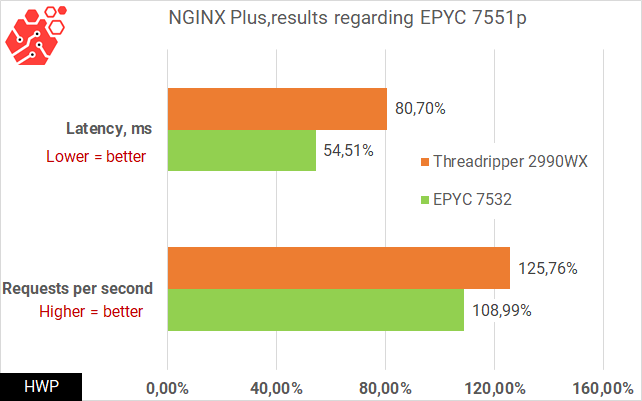

NGINX Plus

NGINX is not limited to serving as a front-end server for web applications. Using it as a reverse proxy, for databases for example, already puts a significant load on the host and requires high parallelism. That is why (because it can spread the load across all cores) we use the commercial NGINX Plus rather than the free NGINX. To saturate one of the fastest and lightest software products, the load-generating machine must be many times more powerful than the configuration under test, which is impossible in our editorial lab. The NGINX team published the results of its own tests with default settings on its blog, and when I repeated their methodology, I could not even come close to their numbers. After discussing the situation with colleagues, I decided that our setup is sufficient to compare the processors with each other, so we use relative results, taking the EPYC 7551P figures as 100%.

| Parameter | Value |
|---|---|
| Hypervisor | VMware ESXi 6.7U3 |
| Client platform | VM, 32 vCPU, 12 GB RAM, Ubuntu Linux 18.04 LTS |
| Server platform | VM, 16 vCPU, 16 GB RAM, Ubuntu Linux 18.04 LTS, NGINX Plus R20 (1.17.6) |

Load command: `wrk -t 256 -c 1000 -d 120s http://server-ip/0kb.bin`
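If you want to repeat the load step from a script, the wrk command can be assembled programmatically. A small sketch that only builds the argument list (it does not run wrk); `server-ip` is the placeholder from the original command. wrk refuses to start with fewer connections than threads, and, assuming it splits connections per thread with integer division as in its source code, `-c 1000` with `-t 256` actually opens 3 connections per thread, i.e. 768 in total.

```python
from typing import List


def build_wrk_cmd(url: str, threads: int = 256, connections: int = 1000,
                  duration_s: int = 120) -> List[str]:
    """Build a wrk argument list equivalent to the command used in this test."""
    if threads < 1 or connections < threads:
        # wrk exits with an error when there are fewer connections than threads
        raise ValueError("connections must be >= threads >= 1")
    return ["wrk", "-t", str(threads), "-c", str(connections),
            "-d", f"{duration_s}s", url]


def effective_connections(threads: int, connections: int) -> int:
    """Connections opened if wrk gives each thread connections // threads (assumption)."""
    return (connections // threads) * threads


if __name__ == "__main__":
    cmd = build_wrk_cmd("http://server-ip/0kb.bin")
    print(" ".join(cmd))
    print("effective connections:", effective_connections(256, 1000))
```

On the client VM the list can be passed to `subprocess.run(cmd, check=True)`; choosing a connection count that is a multiple of the thread count avoids the rounding.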

In terms of minimum latency, the EPYC 7532 has almost a 2x advantage over the previous-generation processors, which is very important if you need fast application response times. Returning to AMD's large corporate customers, I want to note that CloudFlare chose the record-fast EPYC Rome, not Threadripper. I don't like to talk about hardware that has not gone through our testing, but if the low latency is due to the Zen 2 architecture, the high-frequency 8-core EPYC 7252/7232P/7262 could be the best budget solution for WAF/proxy/UTM applications on this metric.
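Expressing results relative to a baseline is simple, but the direction matters: for throughput (requests per second) higher is better, while for latency lower is better, so the ratio has to be inverted if "more than 100%" should always mean "better". A minimal sketch of that normalization; the processor keys are illustrative and no measured values are included.

```python
from typing import Dict


def relative_percent(results: Dict[str, float], baseline: str,
                     higher_is_better: bool = True) -> Dict[str, float]:
    """Scale results so that the baseline processor equals 100%.

    Throughput (higher is better): value / baseline.
    Latency (lower is better): baseline / value, so half the baseline
    latency scores 200%.
    """
    base = results[baseline]
    if higher_is_better:
        return {name: 100.0 * value / base for name, value in results.items()}
    return {name: 100.0 * base / value for name, value in results.items()}
```

For example, `relative_percent(latencies, "EPYC 7551P", higher_is_better=False)` would turn a dictionary of latencies into scores where the EPYC 7551P is 100%.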

Redis

In previous tests, I found that the fastest versions of Redis and MariaDB are shipped in the Oracle Linux repositories, so we will use that OS for databases.

| Parameter | Value |
|---|---|
| Hypervisor | VMware ESXi 6.7U3 |
| Test virtual machine | VM, 32 vCPU, 16 GB RAM, Oracle Linux Server 7.6, Redis server 3.2.12, MariaDB 5.5.64 |

Over the years, non-relational databases have been optimized for executing the simplest queries quickly, so it is not surprising that processor frequency plays a decisive role here. In single-threaded mode we see the same thing as in the NGINX test: minimum latency brings the second-generation EPYC to victory.

The tests so far make it clear that for simple applications running in one, two or a few threads, EPYC Rome is the best solution, and I really want to believe this holds for models with fewer cores too. As the load on the same applications increases, the picture becomes ambiguous. But let's move on to more complex services.
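Why single-thread latency matters so much here follows from Little's law: requests in flight = throughput × latency. A client that sends one request at a time can never exceed 1/latency requests per second, so lower per-request latency translates directly into more operations; with many parallel clients, the number of requests the server keeps in flight (cores and all-core frequency) takes over. The latencies in the sketch are illustrative, not measurements from this test.

```python
def max_ops_per_second(latency_ms: float, in_flight: int = 1) -> float:
    """Little's law: throughput = concurrency / latency."""
    return in_flight / (latency_ms / 1000.0)


if __name__ == "__main__":
    # Illustrative latencies only, not results from this review.
    for latency in (0.1, 0.05):
        print(f"{latency} ms -> {max_ops_per_second(latency):,.0f} ops/s with one client")
```

Halving latency doubles single-client throughput, which is why the latency advantage shows up so clearly in single-threaded runs and fades once many connections keep all cores busy.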

MariaDB 10.3

Using MariaDB 10.3, the MySQL fork included in CentOS/Debian distributions, we traditionally create an InnoDB database with 1 million rows, of which we use only 100,000, so that SELECT queries against a pool held in RAM load the processor. We use a very fast NVMe drive that smooths out logging latency, so storage performance does not affect the results.

We see the same situation as with the previous applications: a huge single-thread latency advantage for the EPYC Rome 7532, which melts away as the load increases, and the Threadripper 2990WX pulls ahead.
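The shape of this workload (a large table where queries touch only a small hot subset, so it stays in the InnoDB buffer pool) can be sketched as a query generator. This is a hypothetical illustration: the table `bench_table` and column `c` are invented names, not the exact tool or schema used in this test.

```python
import random
from typing import Iterator

TOTAL_ROWS = 1_000_000   # rows created in the InnoDB table
HOT_ROWS = 100_000       # subset actually queried, small enough to stay in RAM


def point_selects(n: int, seed: int = 42) -> Iterator[str]:
    """Yield n primary-key SELECTs restricted to the hot subset (hypothetical schema)."""
    rng = random.Random(seed)
    for _ in range(n):
        row_id = rng.randint(1, HOT_ROWS)  # inclusive bounds, ids start at 1
        # Real client code should pass row_id as a bound parameter instead.
        yield f"SELECT c FROM bench_table WHERE id = {row_id}"
```

Because every query hits the same 10% of rows, the data is served from memory after warm-up and the test measures the CPU and memory subsystem rather than the NVMe drive.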

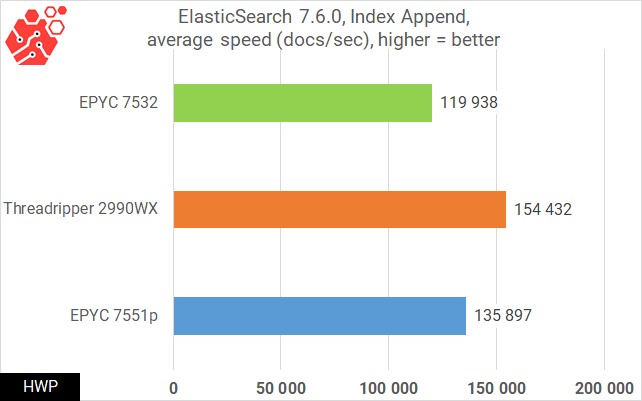

ElasticSearch 7.6.0

If any workload needs 32 cores to process big data, Elastic is the best example. Written in Java, this stack for working with statistics and application logs is one of the most in-demand tools among DevOps engineers and data scientists.

| Parameter | Value |
|---|---|
| Hypervisor | VMware ESXi 6.7U3 |
| Test virtual machine | VM, 64 vCPU, 30 GB RAM, Ubuntu Linux 18.04 LTS, Java Runtime 11, ElasticSearch 7.6.0 |

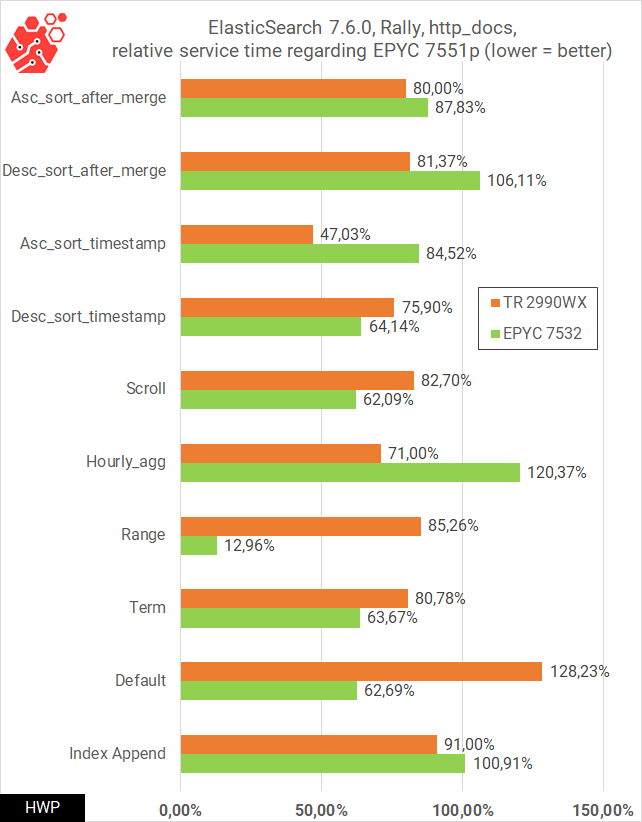

From the built-in tracks of the Rally benchmark, I chose http_logs because it is quite large: 32 GB of uncompressed data, and the test results take up about the same amount. We base our measurements on two metrics, the first of which is indexing documents (adding them to the index).

When testing real applications, some results that fall outside the general trend simply defy logical explanation. This is partly because the developers set out to write an application, not a precise benchmark, and partly because of delays accumulating in the software stack. If you look at latencies normalized to the results of one processor, the spread becomes simply colossal.

AMD constantly emphasizes that the new EPYC Rome architecture delivers up to 40% more performance in Java applications than first-generation EPYC, and the Rally test gives them something to be pleased about: the advantage is almost 10x. However, there is no clear winner in the battle between the new server processor and the older gaming processor.

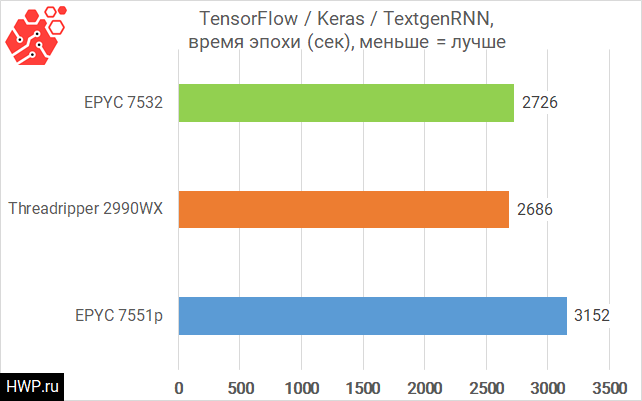

TensorFlow/Keras

The task is to build a text generation model from existing news stories. Using the textgenrnn project, I feed in a 16 MB text file and start training the model, choosing the smallest batch size that still loads the CPU as fully as possible. Yes, I know GPU computing is faster, cheaper and more practical, but neural networks are not always built on GPUs alone: where computational precision matters or several models must be trained simultaneously, CPUs are still used.

Recall that with all 32 cores loaded, the Threadripper 2990WX and EPYC 7532 run at almost the same frequencies, and the other optimizations of the Zen 2 architecture do not come into play in long mathematical computations.
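Choosing "the smallest batch size that still loads the CPU fully" is a search over a monotone criterion, so it can be automated with a binary search. A sketch under assumptions: `utilization_at` is a hypothetical callback you would implement yourself (for example, by training for a few steps and sampling CPU usage), utilization is assumed not to decrease as the batch size grows, and nothing here is part of the textgenrnn API.

```python
from typing import Callable


def smallest_saturating_batch(utilization_at: Callable[[int], float],
                              lo: int = 1, hi: int = 4096,
                              target: float = 0.95) -> int:
    """Return the smallest batch size in [lo, hi] whose CPU utilization >= target.

    Assumes utilization is non-decreasing in batch size; raises if even
    `hi` does not reach the target.
    """
    if utilization_at(hi) < target:
        raise ValueError("target utilization not reached within range")
    while lo < hi:
        mid = (lo + hi) // 2
        if utilization_at(mid) >= target:
            hi = mid          # mid saturates the CPU, try smaller batches
        else:
            lo = mid + 1      # mid is too small, search upward
    return lo
```

Each probe costs a short training run, so covering the range 1-4096 takes about a dozen probes instead of trying every value.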

Price

At the time of this review, the ASRock Rack X399D8A-2T motherboard cost about the same as the ASRock Rack EPYCD8-2T, around $550, so you cannot save money on the platform by choosing AMD Threadripper. The situation with the processors themselves is much more interesting:

- EPYC 7551P - $1300

- Threadripper 2990WX - $1525

- EPYC 7532 - $3250

Overall, at the time of this review AMD had 9 (!) first- and second-generation 32-core processors on sale: for single-socket and dual-socket servers, on the first-generation core, and on the second-generation core with 128 MB or 256 MB of L3 cache. It is a real paradise for those who love choice, with prices ranging from $1300 for the EPYC 7551P to $3400 for the EPYC 7542, while the most affordable EPYC Rome, the 7452, costs only $2025.

Remember that EPYC supports registered memory, while Threadripper does not.

- 16 GB ECC Registered DDR4 2666 MHz - $80

- 16 GB ECC Unbuffered DDR4 2666 MHz - $110

- 16 GB Non-ECC Unbuffered DDR4 2666 MHz - $70

The simplest configuration of a processor, motherboard, and 256 GB of RAM is as follows:

- EPYC 7551P + ASRock Rack EPYCD8-2T + 256 GB ECC RDIMM DDR4 = $2490

- Threadripper 2990WX + ASRock Rack X399D8A-2T + 256 GB ECC UDIMM DDR4 = $2955

- Threadripper 2990WX + ASRock Rack X399D8A-2T + 256 GB non-ECC UDIMM DDR4 = $2660

- EPYC 7532 + ASRock Rack EPYCD8-2T + 256 GB ECC RDIMM DDR4 = $4440

Also keep in mind that for the EPYC 7532 it makes sense to buy the fastest DDR4-3200 memory, which will add even more to the cost of the machine.
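The build costs are simple sums of the prices listed above. A sketch of the arithmetic, assuming all eight DIMM slots are filled with the 16 GB modules priced above (128 GB in total): this reproduces the $2490, $2955 and $4440 totals and gives $2635 for the non-ECC Threadripper build. Replace the module prices and count to cost out other capacities or DDR4-3200 for the EPYC 7532.

```python
# Component prices in USD as quoted in this review (2020).
CPU_PRICE = {"EPYC 7551P": 1300, "Threadripper 2990WX": 1525, "EPYC 7532": 3250}
BOARD_PRICE = 550  # ASRock Rack EPYCD8-2T and X399D8A-2T cost about the same
DIMM_16GB_PRICE = {"ECC RDIMM": 80, "ECC UDIMM": 110, "non-ECC UDIMM": 70}
DIMM_SLOTS = 8     # both boards have 8 DIMM slots


def build_cost(cpu: str, dimm: str, modules: int = DIMM_SLOTS) -> int:
    """CPU + motherboard + `modules` x 16 GB DIMMs, in USD."""
    return CPU_PRICE[cpu] + BOARD_PRICE + DIMM_16GB_PRICE[dimm] * modules


if __name__ == "__main__":
    builds = [
        ("EPYC 7551P", "ECC RDIMM"),
        ("Threadripper 2990WX", "ECC UDIMM"),
        ("Threadripper 2990WX", "non-ECC UDIMM"),
        ("EPYC 7532", "ECC RDIMM"),
    ]
    for cpu, dimm in builds:
        print(f"{cpu} + board + 8 x 16 GB {dimm}: ${build_cost(cpu, dimm)}")
```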

Conclusions

Applications of different kinds show completely different results, and "faster/more expensive" does not always turn out to be better. We found that:

- for the simple, lightly threaded applications we tested, the EPYC Rome 7532 is the best choice, and it is also the best solution for renting out VPSs for 1C. The trend will most likely hold for other software with the same load pattern;

- the same can be said about 1C:Enterprise working with a MySQL server in an average company with a hundred users;

- at the same time, in a multi-threaded 1C + MSSQL setup, the first-generation EPYC 7551P shows the same speed as the EPYC Rome 7532, so you can save a lot here.

For databases with a large number of connections, the Threadripper 2990WX with its high frequencies is a better fit, and it is also faster in big data and machine learning applications. The new EPYCs with 256 MB of L3 cache fit the same motherboards as the first EPYC with the Naples core; all you need to do is flash the latest BIOS. If you already have a server running a first-generation EPYC and want to upgrade to EPYC Rome, first make sure that a BIOS with Zen 2 support can be flashed to your motherboard: early motherboards had a 16 MB ROM chip, while the new processors need a 32 MB one. If you buy a Threadripper server platform, you get a motherboard made on the same production line as mainstream server boards, with ECC memory and NVMe RAID. For such builds the ASRock Rack X399D8A-2T is the only option, and therefore the best buy.

As for psychological barriers and prejudices, I would be glad to tell you what you lose by choosing a gaming CPU instead of a server one, but apart from official VMware support I can't think of anything. Anticipating questions like "what about the next generation of Threadripper?", I'll just say that there are no server motherboards for it yet. As soon as they appear, we will definitely test them.

Mikhail Degtyarev (aka LIKE OFF)

16.03.2020