What CPU does Cloudflare use? Intel not inside!

We designed and built the Cloudflare network so that we could increase bandwidth quickly and inexpensively, so that any of our servers in any city could run any of our services, and so that we could efficiently shift customers and traffic across our network, a Cloudflare representative writes on the company blog. We use commodity, commercially available hardware, and our software automatically manages the deployment and execution of our developers' code and our customers' code across the network, so that they never have to think about which servers it runs on. Because we control the execution and prioritization of code running on our network, we can optimize performance for our highest-tier customers and make efficient use of idle capacity.

An alternative approach would be to run multiple fragmented networks of specialized servers, each designed for a particular function such as the firewall, DDoS protection, or Workers. However, we believe that approach would leave resources sitting idle and reduce our flexibility to build new software or adopt the latest available hardware.

Once a data center has been selected, we use Unimog, Cloudflare's custom load-balancing system, to dynamically balance requests across different server generations. We distribute load at many levels: between cities, between physical clusters within a city, between external Internet ports, between internal interconnects, between servers, and even between logical CPU threads within a server.
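Unimog's internals are not described here, so the following is only an illustrative sketch of one way to spread requests across server generations of different capacity. It uses weighted rendezvous (highest-random-weight) hashing, a swapped-in technique that is not necessarily what Unimog does; the function names, server names, and weights are hypothetical. Every server scores each request key, higher-capacity servers get larger weights, and the highest score wins.

```python
import hashlib
import math


def _unit_hash(key: str, server: str) -> float:
    """Deterministically map (key, server) to a float strictly inside (0, 1)."""
    digest = hashlib.sha256(f"{key}|{server}".encode()).digest()
    n = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits
    return (n + 0.5) / 2.0**52  # exact in float; never 0.0 or 1.0


def pick_server(key: str, weights: dict[str, float]) -> str:
    """Weighted rendezvous hashing: the server with the highest score wins.

    Score = -w / ln(u) with u uniform in (0, 1). Each server then wins with
    probability w / sum(weights), and removing a server only remaps the keys
    that were previously assigned to it.
    """
    best, best_score = None, -math.inf
    for server, weight in weights.items():
        if weight <= 0:
            continue  # zero weight: the server is drained and receives nothing
        score = -weight / math.log(_unit_hash(key, server))
        if score > best_score:
            best, best_score = server, score
    if best is None:
        raise ValueError("no server has a positive weight")
    return best
```

With hypothetical weights of 1.0 per Gen 9 server and 1.36 per Gen X server, each server receives a share of request keys roughly proportional to its weight, and taking one server out of the map only moves the keys it was serving.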

As demand grows, we scale simply by adding new servers, points of presence, or cities to the global pool of available resources. If a server component fails, the server is given low priority or removed from the pool entirely until it can be repaired. Thanks to this architecture, we do not need dedicated Cloudflare staff in any of our 200 cities; instead, we rely on specialists at the data centers that host our equipment.
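The pool behaviour described above (add capacity, demote a server with a failed component, or pull it out for repair) can be sketched as a small data structure. This is a hypothetical illustration, not Cloudflare's code: the `ServerPool` class, the two priority levels, and the modulo-hash routing are assumptions chosen for brevity.

```python
import zlib
from dataclasses import dataclass, field
from enum import IntEnum


class Priority(IntEnum):
    """Lower value = preferred. A hypothetical two-level scheme."""
    NORMAL = 0
    LOW = 1  # e.g. a server with a failed component, kept only as a fallback


@dataclass
class ServerPool:
    servers: dict[str, Priority] = field(default_factory=dict)

    def add(self, name: str) -> None:
        """Scale out: a new server joins the pool at normal priority."""
        self.servers[name] = Priority.NORMAL

    def demote(self, name: str) -> None:
        """A component failed: keep the server, but prefer all others."""
        self.servers[name] = Priority.LOW

    def remove(self, name: str) -> None:
        """Pull the server out of the pool entirely, e.g. for repair."""
        self.servers.pop(name, None)

    def candidates(self) -> list[str]:
        """Servers in the best (lowest-valued) priority tier, sorted for determinism."""
        if not self.servers:
            return []
        best = min(self.servers.values())
        return sorted(name for name, prio in self.servers.items() if prio == best)

    def route(self, key: str) -> str:
        """Map a request key to one server in the best available tier."""
        tier = self.candidates()
        if not tier:
            raise RuntimeError("server pool is empty")
        # Simple modulo hashing for brevity; not consistent across tier changes.
        return tier[zlib.crc32(key.encode()) % len(tier)]
```

Modulo hashing keeps the sketch short but reshuffles many keys whenever the active tier changes; a production balancer would more likely use a consistent scheme.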

Gen X servers

We recently began deploying our tenth generation of servers, "Gen X", which is already running in major US cities and is being shipped worldwide. Compared with our previous server (Gen 9), it handles 36% more requests while costing significantly less. It also cuts the processor's L3 cache miss rate by about 50% and reduces the 99th-percentile (p99) latency of our NGINX service by up to 50%. At the same time, its CPU has a 25% lower thermal design power (TDP) per physical core.

We were particularly impressed with 2nd-generation AMD EPYC processors because they proved far more efficient for our customers' workloads. As the pendulum of technology leadership swings back and forth between vendors, we would not be surprised if our choices change again over time.

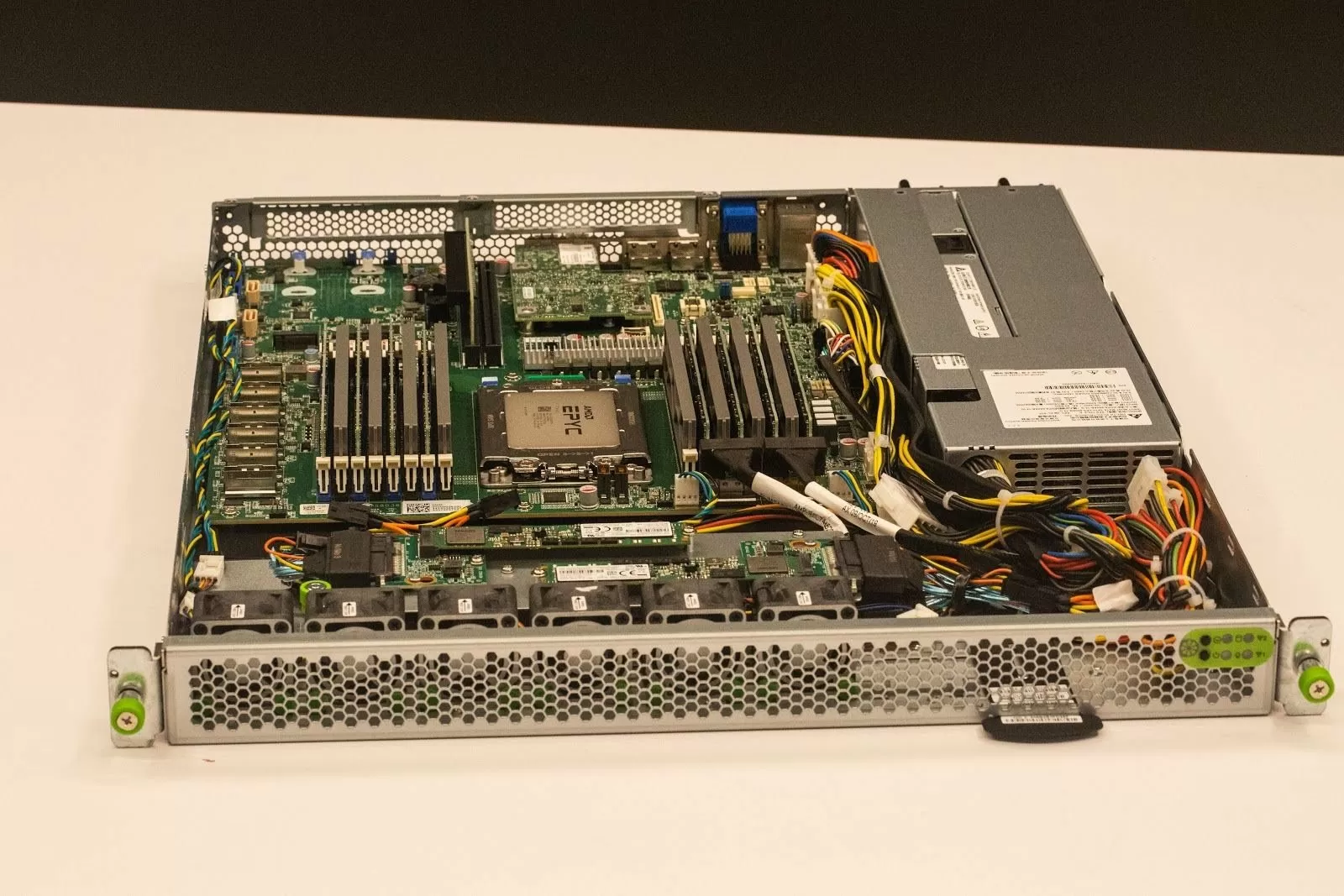

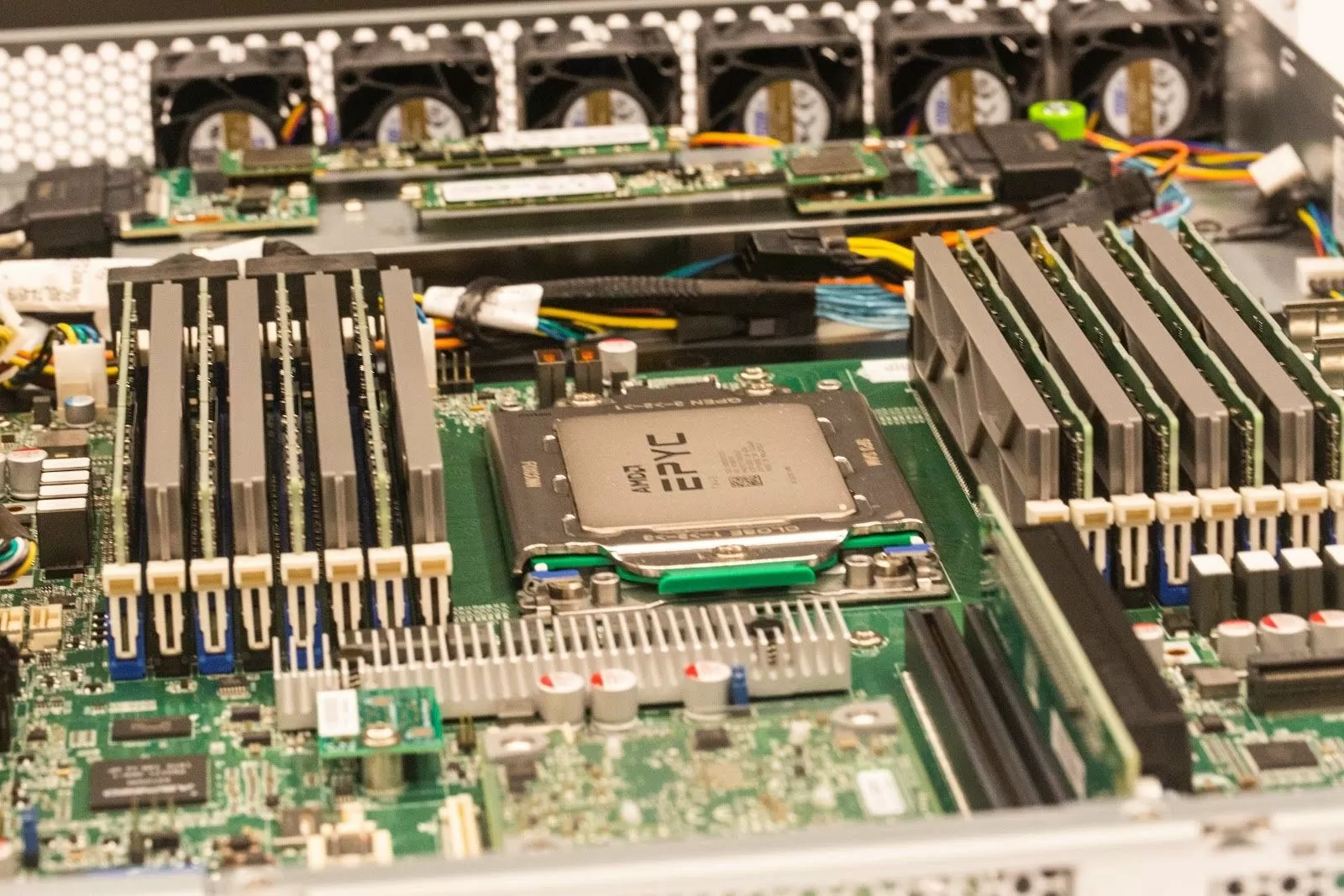

For the tenth generation of our servers, we chose a single-socket configuration based on the AMD EPYC 7642. This processor has 48 cores (96 threads), a base clock of 2.4 GHz, and 256 MB of L3 cache. Although its rated TDP of 225 W may seem high, it is lower than the combined TDP of our Gen 9 servers (two customized, commercially unavailable 24-core Intel processors clocked at 1.9 GHz and rated at 150 W each, 300 W in total; editor's note). AMD also offers a 64-core option, but the performance gain for our software stack was not large enough to justify the extra cost.
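The TDP comparison is easy to check with the figures above. This back-of-the-envelope calculation uses rated TDP only (not measured power draw) and assumes the Gen 9 figure is the sum of its two 150 W processors; under those assumptions it lands on the same 25% per-core reduction quoted for Gen X.

```python
# Rated figures from the text (TDP, not measured power).
# Gen X: one AMD EPYC 7642, 48 cores, 225 W TDP.
GEN_X_TDP_W, GEN_X_CORES = 225, 48
# Gen 9: assumed two 24-core Intel processors at 150 W each.
GEN9_TDP_W, GEN9_CORES = 2 * 150, 2 * 24

genx_per_core = GEN_X_TDP_W / GEN_X_CORES  # watts of rated TDP per core
gen9_per_core = GEN9_TDP_W / GEN9_CORES
reduction = 1 - genx_per_core / gen9_per_core  # fractional per-core saving

print(f"Gen X: {genx_per_core:.2f} W/core, Gen 9: {gen9_per_core:.2f} W/core")
print(f"Per-core TDP reduction: {reduction:.0%}")
# prints:
# Gen X: 4.69 W/core, Gen 9: 6.25 W/core
# Per-core TDP reduction: 25%
```

Both generations have 48 physical cores per server, so the per-core saving here is simply the ratio of total rated TDP, 225 W versus 300 W.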

We have deployed AMD EPYC 7642-based servers in six Cloudflare data centers, and this single processor is significantly more powerful than the dual-socket pairs of high-performance Intel processors (Skylake and Cascade Lake) that we used in the previous generation.

Readers of the Cloudflare blog may remember our experiments with ARM processors. We even ported our entire software stack to ARM and have maintained that port ever since, although it takes a bit more work from our engineers. We did this for our pilot project with servers based on Qualcomm Centriq, which was later discontinued. While none of the ARM processors currently available meet our needs, we look forward to rising core counts in x86 processors in 2020, and to the day when our servers are a mix of x86 (Intel and AMD) and ARM.

Compute is the most expensive item in our server budget. Our approach to server design is very different from that of traditional content delivery networks built to serve large video libraries. Those networks usually rely on servers optimized for storing large amounts of data, and reworking such machines to offer serverless services is prohibitively capital-intensive. Our heaviest workloads are precisely serverless applications and the firewall, which is why the network packets on our network are very small.

Our new Gen X server leaves one PCIe slot free for an add-in card, which would be useful if such a card can perform certain functions more efficiently than the main processor. It might be a GPU, FPGA, SmartNIC, ASIC, TPU, or something else; time will tell.

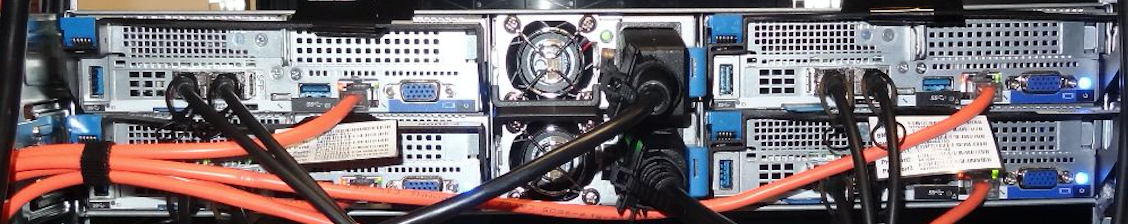

4-node Generation 9 Intel-based server

We moved away from our multi-node chassis to a traditional 1U form factor, which is easier to service in the data center.

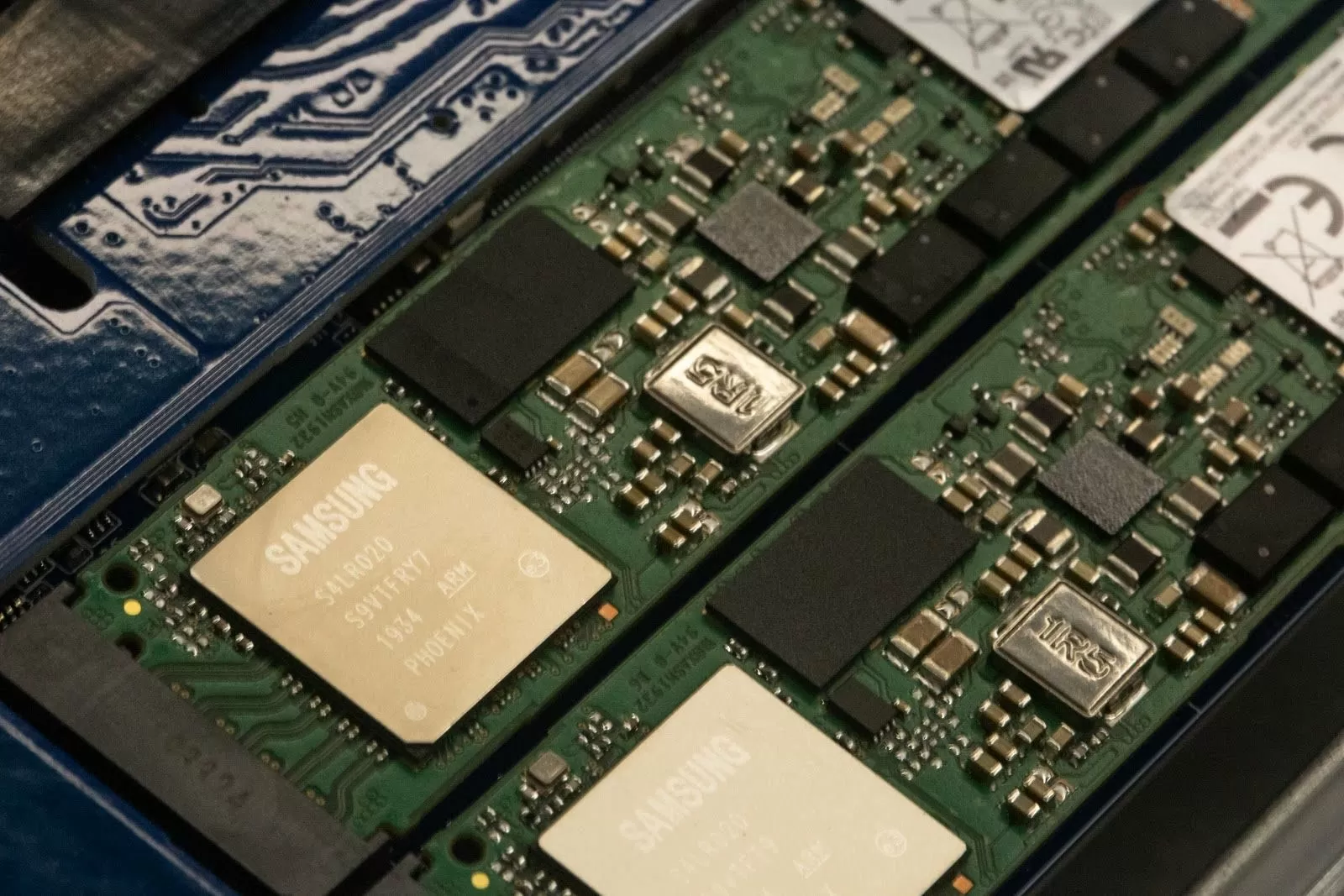

RAM, Network, SSD

Because the performance of our servers has traditionally been CPU-bound, components such as RAM and SSDs have changed little in Gen X. We still use 256 GB of RAM, as in the previous generation, but at a higher speed of 2933 MT/s. For storage, we still use about 3 TB per node, now spread across three NVMe drives instead of SATA drives.

NVMe delivers higher IOPS, which allows us to use full-disk encryption with LUKS. For networking, we continue to use dual-port 25G Mellanox adapters.

Ron Amadeo

25.02.2020