Qualcomm Cloud AI 100 AI accelerators for data centers

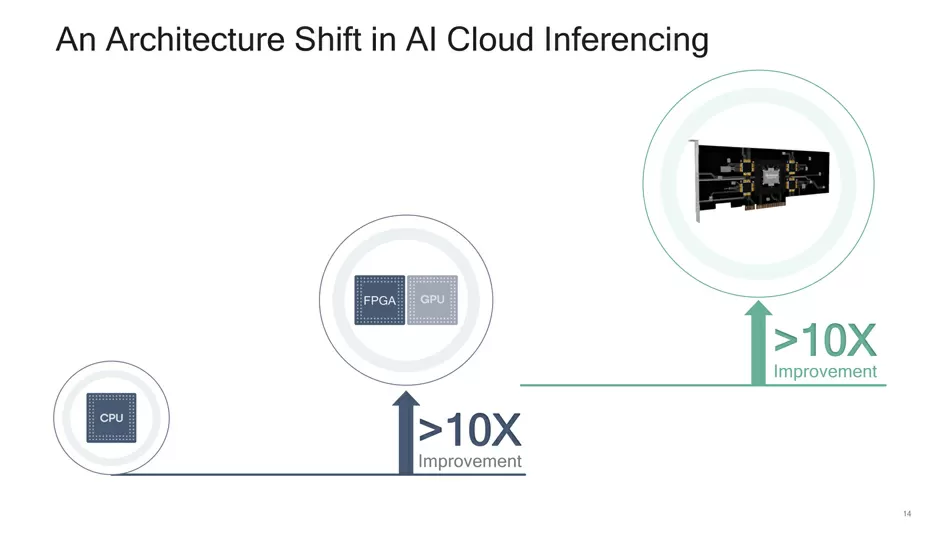

A market that did not exist at the beginning of this decade has, over the past few years, become the center around which modern scientific achievements and huge revenues revolve. Yet this era of artificial intelligence is still in its infancy, and the market has not found its ceiling: data centers continue to buy AI accelerators in bulk, and the technology is spreading ever faster into consumer processors. In a market that many believe is still booming, processor manufacturers around the world are trying to work out how to grab a bigger share of one of the greatest new markets. Simply put, the AI gold rush is in full swing, and right now everyone is lining up to sell their tools.

In terms of the underlying technology and the manufacturers behind it, the AI gold rush has attracted interest from all corners of the tech world. From large GPU and CPU manufacturers to small FPGA and ASIC makers, everyone has rushed to produce machine learning solutions for personal and industrial applications.

Within this landscape, data centers are the most attractive market for any processor manufacturer: fast-growing, expensive, with huge budgets, and still running on old technology, virtually untouched. And now Qualcomm is entering this market.

Qualcomm Cloud AI 100 Family

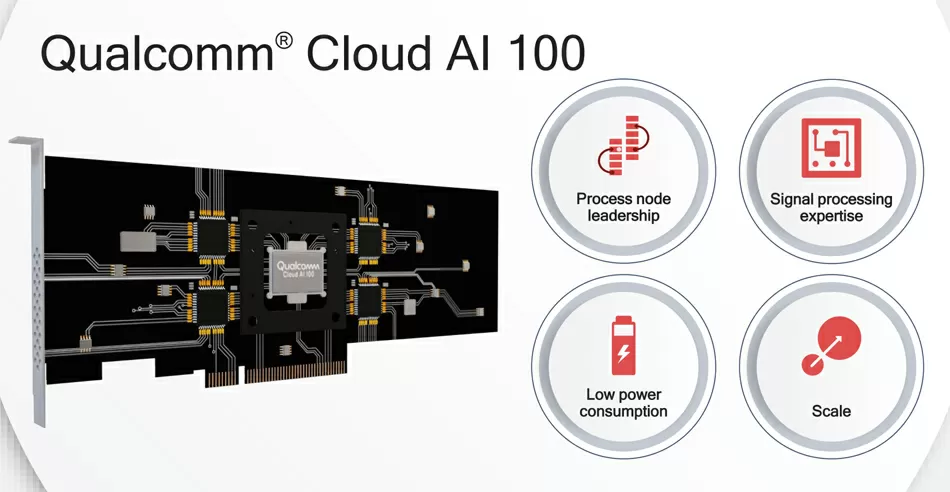

Today, Qualcomm announced that they are entering the AI accelerator market with the Qualcomm Cloud AI 100 family of chips. Designed from the ground up for the artificial intelligence market, this chip should be released as early as 2020. At the same time, Qualcomm emphasizes that the new chip will be compatible with existing software for artificial intelligence applications.

Of course, 2020 is still a long way off, and what Qualcomm has announced is more a statement of intent, a teaser, than a real product. The first samples will be available only at the end of this year, and commercial deliveries will begin in 2020. Simply put, there is at least a year to wait, but in the meantime the major customers of the server world have heard that a new star has appeared in a market dominated by Nvidia.

Qualcomm Cloud AI 100 architecture: an ASIC for data centers

As usual, there is very little information, but the chips are known to be manufactured on TSMC's 7 nm process. The company will offer various expansion cards, but it is currently unclear whether it is actually developing more than one processor, or whether several processors will be combined on a single board. Qualcomm has, however, made it quite clear that the new accelerators will be used for computations on already trained AI models; that is, they are AI processing (inference) devices.

This is a very important clarification, because it means Qualcomm's chips can only be used for inference, similar to Google's TPU. Of course, Qualcomm is not the first company to come up with the idea of using ASICs for artificial intelligence, but most of the other ASICs available today are designed for the low-end segment of the market. These are mainly USB devices for laptops, external boards for experimenters, or parts meant for embedding in other devices. In any case, they are not data center solutions like the Qualcomm Cloud AI 100.
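To make the distinction concrete, here is a minimal sketch of what "calculations based on an already trained model" means. The weights, bias, and input values are hypothetical stand-ins for a model trained elsewhere, and nothing here reflects Qualcomm's actual hardware or software. The point is only that inference is a forward pass over fixed parameters, with no learning step.

```python
import math

# Hypothetical parameters, standing in for a model that was trained elsewhere.
# An inference accelerator only reads values like these; it never updates them.
WEIGHTS = [0.8, -0.4, 0.3]
BIAS = -0.1


def predict(features):
    """Forward pass only: a weighted sum followed by a sigmoid."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical input features for a single request.
score = predict([1.0, 2.0, 0.5])
print(f"score = {score:.4f}")
```

Training would additionally require computing gradients and rewriting `WEIGHTS` and `BIAS` many times over, which is the part an inference-only chip leaves out.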

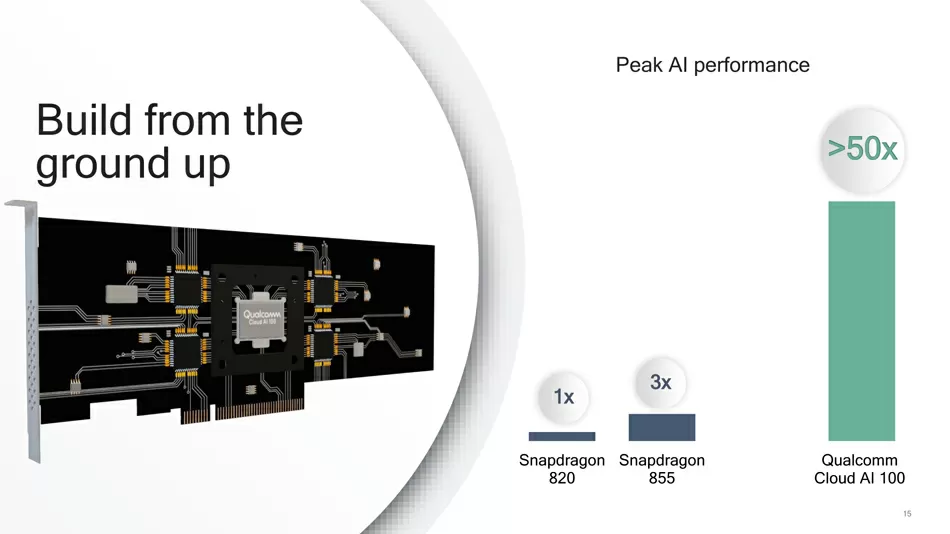

Qualcomm already has solid experience with AI cores in its Snapdragon 820 and Snapdragon 855 mobile processors, but until now the manufacturer has not had a full-fledged AI chip, let alone one designed for the data center market.

In addition, given Qualcomm's size, production volumes should not be a problem. Of course, this factor will not decide the competition with Intel and Nvidia, but it will certainly set Qualcomm apart from the hundreds of small startups developing their own ASICs for artificial intelligence.

AI-processing market

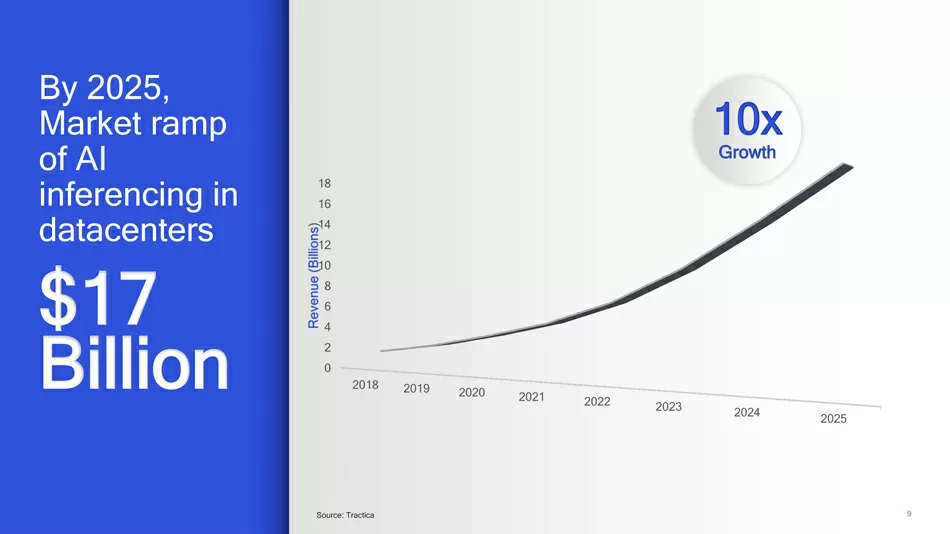

Interestingly, GPUs can be used universally, both for training models and for running them, but in general these are completely different tasks and completely different markets. AI processing (inference) covers tasks such as gesture recognition, video and audio processing, and any other work based on pre-trained models. The simplest example is a smartphone automatically processing your selfies. We are used to AI processing happening on the end device, such as a smartphone, but experts predict that AI processing in data centers will grow tenfold, and that the AI accelerator market will reach $17 billion by 2025.

Training a neural network requires a large amount of resources, but they are spent only once. The resulting model can then be deployed to entire farms of inference accelerators. That is, typical business users of AI will buy more inference accelerators than GPUs for training.
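A back-of-the-envelope sketch of this argument follows. Every number in it is a hypothetical assumption chosen for illustration, not a figure from Qualcomm or from the market forecasts: the training cluster size is fixed, while the number of inference cards scales with the request load.

```python
import math


def accelerators_needed(requests_per_second, per_card_throughput):
    """Cards required to serve a given inference load, rounded up."""
    return math.ceil(requests_per_second / per_card_throughput)


# All values below are hypothetical assumptions for illustration only.
TRAINING_GPUS = 64                   # one-off training cluster
PEAK_REQUESTS_PER_SECOND = 200_000   # production inference load
CARD_THROUGHPUT = 1_000              # inferences per second per card

inference_cards = accelerators_needed(PEAK_REQUESTS_PER_SECOND, CARD_THROUGHPUT)
ratio = inference_cards / TRAINING_GPUS

print(f"inference cards: {inference_cards}, ratio to training GPUs: {ratio}")
```

Under these assumed numbers the inference fleet is about three times the size of the training cluster, and it keeps growing with traffic while the training cluster does not.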

The most important issue here is software support. Nvidia's success is due in part to the software ecosystem that the company has nurtured over the past 10 years. The leading AI frameworks and formats have already emerged: TensorFlow, Caffe2, and ONNX. Qualcomm promises to support all of them, and this support is crucial for a successful start.

Ron Amadeo

12/04.2019