Huawei OceanStor 2200 V3 review - entry-level block virtualization storage

Huawei storage systems are relatively new to the market, yet today the Chinese manufacturer competes successfully with brands such as Dell EMC, NetApp and HPE. Following the principle of "more features for less money", Huawei brings technologies to its entry-level storage systems that competitors offer only in top-end models. An official Huawei partner provided us with the OceanStor 2200 V3 storage system for rigorous and comprehensive testing.

The OceanStor series is designed to store large amounts of enterprise data, and even the smallest model, the OceanStor 2200 V3, scales up to 300 disks with a total capacity of 2.4 PB. It offers file and block access, FC and FCoE interfaces, Ethernet with iSCSI support, 12 Gbps SAS for head-to-shelf connectivity, a dual-controller design without a single point of failure, and block-level virtualization of hard disks, a technology Huawei proudly calls RAID 2.0+.

RAID 2.0+ - HDD block virtualization

Traditional RAID can be compared to a wall in which every brick is a hard drive or SSD. The bricks should be of the same type and size; pull out one or two and the wall will sway, pull out three and even RAID 6 will collapse, taking all the stored data with it. It makes no sense to combine 7200 and 10,000 RPM disks in a traditional RAID array, and rebuilding an array after replacing a "brick" can take up to several days. Inside the array all disks are equal, so if you sort data into "cold", "warm" and "hot", you have to allocate SSDs for the hot data and create a separate RAID array of 10K/15K SAS HDDs for the warm data. On top of that, you still need to reserve 2 or 3 disks of different types as hot spares. This is the reality of traditional RAID, and you have to put up with it even in very expensive storage systems.

It is a completely different matter when you take dissimilar hard drives and/or SSDs and divide their space into equal blocks of, say, 128 kilobytes each. From these blocks you then assemble a RAID array according to any of the traditional schemes, from a simple RAID 1 mirror to RAID 60. In every case the controller operates not on physical media but on the space inside them, and this opens up far more possibilities. The simplest is the ability to create several RAID arrays of different types at once on the same pool of hard drives. This could be useful for creating a small, fast disk group, but the technology goes further: the Huawei OceanStor 2200 V3 controller can itself separate data into "hot" and "cold" and move the hot data within one RAID array to faster media, such as 15K HDDs or SSDs. In the developer's terminology, RAID 2.0+ divides the space of a disk group into chunks, chunk groups and extents. These terms do not appear in the storage settings, so if you are interested in how this works, here are slides from the manufacturer's presentation.

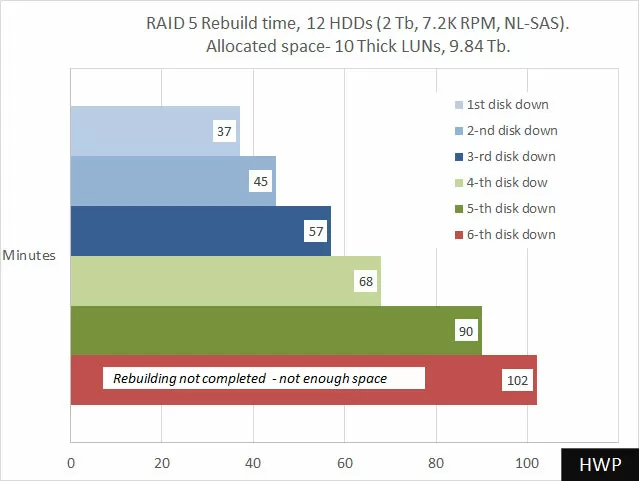
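The idea of building an array from blocks rather than from whole disks can be illustrated with a small sketch. This is not Huawei's algorithm: the function name, the pool contents and the "prefer the disk with the most free chunks" rule are assumptions made purely for illustration. The only property taken from the description above is that a chunk group is assembled from chunks that live on different physical disks.

```python
def build_chunk_group(free_chunks, width):
    """Pick one free chunk from each of `width` different disks.

    free_chunks maps a disk name to its number of unallocated chunks.
    Disks with the most free chunks are preferred (an assumed policy),
    which keeps the pool evenly filled even when disks differ in size.
    Returns the list of disks that contributed a chunk.
    """
    candidates = [disk for disk, free in free_chunks.items() if free > 0]
    if len(candidates) < width:
        raise ValueError("not enough disks with free chunks")
    # Sort by free space (descending), then by name for a stable result.
    chosen = sorted(candidates, key=lambda d: (-free_chunks[d], d))[:width]
    for disk in chosen:
        free_chunks[disk] -= 1
    return chosen


# Hypothetical pool of four disks with unequal amounts of free space.
pool = {"hdd0": 4, "hdd1": 4, "hdd2": 2, "hdd3": 3}
groups = [build_chunk_group(pool, width=3) for _ in range(2)]
print(groups)
print(pool)
```

Because each group only needs enough disks with free chunks, disks of different sizes can share one pool, and a failed disk's chunks can be re-created on whatever free space remains elsewhere.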

Huawei takes pride in the reliability of the resulting arrays. Restoring a 12-disk array takes only 30-40 minutes, and you can do without a physical Hot Spare disk altogether, because the hot spare is not a physical disk but free space in the pool. What is more, a RAID 5 array will withstand the loss of two, three, four, or even nine hard drives, one after another, as long as there is free space. With each HDD failure, the OceanStor 2200 V3 controller automatically redistributes data to unoccupied areas of the remaining drives, and after 30-40 minutes the array is healthy again and ready to survive the failure of the next hard drive. Let's test this array survivability feature!

Huawei OceanStor 2200 V3 came to our laboratory in a configuration with 12 NL-SAS hard disks of 2 TB each. For testing, all disks were combined into a common pool, inside which a RAID 5 array was assembled with one virtual Hot Spare disk. The usable capacity of the array was 20 TB, within which 10 LUNs were created with a total capacity of 9.6 TB. We then switched off the hard drives one at a time, measuring the recovery time of the array after each failure.
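The 20 TB figure is consistent with a simple back-of-the-envelope calculation. The sketch below assumes that parity and the virtual hot spare each cost one disk's worth of space; how the controller actually accounts for space internally is not documented in this review, so treat the function as an illustration only.

```python
def usable_capacity_tb(disks, disk_tb, parity_disks=1, spare_disks=1):
    """Capacity left after setting aside parity and hot-spare space.

    Assumes RAID 5 overhead equals one disk and the virtual hot spare
    reserves another disk's worth of free space in the pool.
    """
    return (disks - parity_disks - spare_disks) * disk_tb


usable = usable_capacity_tb(disks=12, disk_tb=2)
free_after_luns = usable - 9.6  # space not yet given to the 10 LUNs
print(usable, round(free_after_luns, 1))
```

With roughly half of the usable space left unallocated, the pool has room to absorb several consecutive rebuilds before free space becomes the limiting factor.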

The system survived the failure of 6 disks in a 12-disk RAID 5 array. After the failure of the fifth HDD, the array was still restored to the Online state. When the sixth hard drive was disabled, the free space ran out and the rebuild completed only partially, leaving the array in a degraded but working state.

What to do in this case? The answer suggests itself: by deleting one of the LUNs, we freed up space and the rebuild resumed. At this point we decided to stop testing, since the failure of 50% of the hard drives in a storage system practically never occurs in real life. Still, Huawei OceanStor 2200 V3 will withstand such a scenario, as long as the drives do not fail at the same time.

Automatic reduction of Thin LUNs

Another interesting technology is SmartThin Data Shrinking, which optimizes the space occupied by LUNs. In essence, this is an evolution of the Thin Provisioning principle for logical volumes, the difference being that the storage controller automatically detects zero-filled data blocks and excludes them from the logical disk. To see how it works, we created a 10 GB test LUN, wrote 9.5 GB of data to it and immediately deleted the data.

As you can see in the screenshots, after its contents are deleted the LUN occupies only 10% of its maximum size on disk. If you remember that traditional Thin Provisioning LUNs only ever grow towards their maximum specified size, even after their contents are deleted, the result of Huawei OceanStor 2200 V3 is impressive: once you have "inflated" a logical disk and then deleted unneeded information from it, you do not need to re-create the LUN to free up space on the storage system. Data Shrinking does not work "on the fly" and takes some time. In our case, space was freed at a rate of about 1 GB/min.
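The principle of excluding zero-filled blocks from a thin LUN can be sketched as follows. The class, the 4 KB block size and the method names are hypothetical and do not describe the controller's actual implementation. How deleted data turns into zero blocks on the host side is also outside this sketch.

```python
BLOCK_SIZE = 4096  # hypothetical allocation granularity


class ThinLun:
    """Toy thin-provisioned LUN that stores only the blocks written to it."""

    def __init__(self):
        self.blocks = {}  # block index -> bytes actually stored

    def write(self, index, data):
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        self.blocks[index] = data

    def read(self, index):
        # Unallocated blocks read back as zeros, as on a real thin volume.
        return self.blocks.get(index, bytes(BLOCK_SIZE))

    def shrink(self):
        """Release every block that contains only zeros; return the count."""
        zero_block = bytes(BLOCK_SIZE)
        zeroed = [i for i, data in self.blocks.items() if data == zero_block]
        for i in zeroed:
            del self.blocks[i]
        return len(zeroed)

    def allocated_bytes(self):
        return len(self.blocks) * BLOCK_SIZE


lun = ThinLun()
lun.write(0, b"\x01" * BLOCK_SIZE)  # real data
lun.write(1, bytes(BLOCK_SIZE))     # a block that has been zeroed out
released = lun.shrink()
print(released, lun.allocated_bytes())
```

Reads of a released block still return zeros, so the host sees no difference, while the pool gets the space back. At the observed rate of about 1 GB/min, reclaiming the 9.5 GB written in our test would take on the order of ten minutes.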

Mountable Snapshots

All storage vendors offer snapshot functions today, and HyperSnap technology would not deserve special attention if it weren't for one difference. In OceanStor 2200 V3, you can mount each snapshot as a full LUN without affecting the original data. This is very useful when forking a live application. Say you want to rework some software: create one or, better, two snapshots, connect one of them as a LUN and work inside it as if you were working with the original data on a logical disk. Any changes you make within a snapshot will not affect other snapshots or the original LUN.

When the task is complete, simply delete the unnecessary snapshot or roll it back to the original LUN to make your fork the main data source for the updated application.
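The behaviour described here, where writes to a mounted snapshot stay isolated until you roll back, can be modelled with a small overlay structure. This is a conceptual sketch with hypothetical class names; a real array shares unchanged blocks between the LUN and its snapshots instead of copying them, and nothing here reflects HyperSnap internals.

```python
class Lun:
    """Toy LUN: a mapping of block index to block contents."""

    def __init__(self, blocks=None):
        self.blocks = dict(blocks or {})


class WritableSnapshot:
    """Point-in-time view of a LUN that accepts its own writes."""

    def __init__(self, source):
        # Copying keeps the sketch simple; real systems share blocks.
        self._frozen = dict(source.blocks)
        self._delta = {}  # blocks changed inside this snapshot only

    def read(self, index):
        if index in self._delta:
            return self._delta[index]
        return self._frozen.get(index)

    def write(self, index, data):
        self._delta[index] = data

    def rollback_into(self, target):
        """Make the snapshot's current contents the contents of `target`."""
        target.blocks = {**self._frozen, **self._delta}


lun = Lun({0: b"v1"})
snap_a = WritableSnapshot(lun)
snap_b = WritableSnapshot(lun)

snap_a.write(0, b"v2")           # work inside the fork
isolated = (lun.blocks[0], snap_b.read(0))
snap_a.rollback_into(lun)        # promote the fork to the main data source
print(isolated, lun.blocks[0])
```

The second snapshot, `snap_b`, still holds the original state after the rollback, which is why creating two snapshots before experimenting is the safer habit.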

The three functions listed above are an example of how software alone changes our understanding of what a storage system can do. I confess they impressed me so much that I broke the usual order of the review and put the results of some tests ahead of the description of the hardware. Now it is time to make amends and see what the OceanStor 2200 V3 is "in hardware".

OceanStor 2200 V3 Construction

Structurally, Huawei OceanStor 2200 V3 follows the active-active dual-controller scheme traditional for SAN devices, with full duplication of components. The chassis comes with either 12 bays for 3.5" media or 25 bays for 2.5" drives or SSDs. The storage system works only with Huawei-branded drives, and the vendor currently offers only 12 Gbps SAS media, including high-capacity 7200 RPM NL-SAS drives and SSDs.

At the configuration stage, keep in mind that there are no 3.5" SAS hard drives with spindle speeds of 10,000 or 15,000 RPM for the OceanStor 2200 V3. These drives are available only in the 2.5" format, so if the head unit has 3.5" bays, you will have to buy an SFF expansion shelf for high-speed SAS drives. As for SSDs, they are available in both formats with the same capacity.

By default, the OceanStor 2200 V3 ships with two controllers, each with 1 active slot for PCI Express 3.0 x4 host adapter modules. Each controller also has four onboard 1 Gbps Ethernet ports with support for link aggregation, which already gives a decent external link speed and lets you do without additional interfaces altogether if performance is not critical.

The controllers use 16-core processors, but the manufacturer discloses neither the CPU type nor its characteristics. To protect the contents of RAM, CBU modules containing powerful supercapacitors are installed. In the event of a power outage, the contents of the cache are written to the SSD in the controller and can be stored there indefinitely until the system is turned on again. CBUs are designed to last the lifetime of the device and do not require maintenance. Memory size also matters: the OceanStor 2200 V3 can act as a NAS, providing file access via NFS, CIFS, HTTP and FTP, but this functionality requires a total memory capacity of 32 gigabytes. So if you need file access, order Huawei OceanStor 2200 V3 with the maximum memory size.

To manage the storage system, each controller has as many as 3 RJ45 ports. The first two provide access to the web interface and to the command-line console. They differ in that the IP address of the first can be changed, while the address of the second is hard-coded so that an engineer does not have to hunt for it during maintenance. The third is actually a serial (COM) port for direct connection to a terminal (an RJ45-DB9 cable is included in the kit).

If the project assumes a high-speed connection, then Huawei has 8 types of host adapter modules for optical and copper media for you:

- 4 Port 1Gb ETH I/O module (BASE-T)

- 4 Port Smart I/O module (SFP+, 8Gb FC)

- 4 Port Smart I/O module (SFP+, 10Gb ETH/FCoE (VN2VF) FC/Scale-out)

- 4 Port Smart I/O module (SFP+, 16Gb FC)

- 2 Port Smart I/O module (SFP+, 10Gb ETH/FCoE (VN2VF) FC/Scale-out)

- 2 Port Smart I/O module (SFP+, 16Gb FC)

- 4 Port 10Gb ETH I/O module (RJ45)

- 8 Port 8Gb FC I/O module (with built-in transceivers)

Our test storage system had 2 host adapters with part number V3L-SMARTIO8FC installed. All Smart I/O adapters support 8 Gb FC, 16 Gb FC and 10G Ethernet, and the speed is limited only by the bundled transceivers. That is, having once purchased an 8-Gigabit Smart I/O host adapter, you can later swap the SFP modules for 16-Gigabit ones and get higher interface performance. Keep in mind, however, that when connecting via Fibre Channel, all ports of the host adapter must work at the same speed. When connecting via iSCSI, remote direct memory access (iWARP/RDMA) is supported, which the VMware ESXi hypervisor happily reports when the storage is attached.

All components are cooled by two twin fans installed in the power supplies. The absence of additional fans made it possible to reduce the depth of the case to 488 mm. This is good for the environment and for energy bills, but more importantly, the Huawei OceanStor 2200 V3 is so quiet that it can be installed in the same room as staff, in a closed telecommunication cabinet.

The faceplate is unremarkable, and the best thing to do is to cover it with the decorative bezel, leaving in sight the status indicators, the power button and the illuminated device ID, which is very useful when you are standing in front of several identical devices and cannot tell them apart. And indeed, Huawei head units and disk shelves look like twin brothers, and since we have mentioned them, let's talk about scaling.

Vertical scaling

Up to 13 disk shelves can be connected to the OceanStor 2200 V3 head unit via the 12 Gbps SAS interface, for which two MiniSAS HD ports are installed on each controller. Both 24-drive LFF 4U expansion modules and 25-drive SFF 2U enclosures are supported. As already mentioned, the total number of disks per head unit can reach 300, and the total capacity 2.4 PB.

Scale-out is not available in the OceanStor 2200 V3 series, and the most you can count on is the installation of redundant storage systems, between which logical volume mirroring or data replication will be configured, including in real time.

Web interface

The web interface is built on Adobe Flash, so everything here is beautiful and modern, and many menu items are conveniently available via the right mouse button. We will connect to the storage system via iSCSI, but the configuration procedure for FC is the same.

We start by creating a disk pool, inside which it will be possible to define RAID arrays in order to cut them into LUNs. We give all 12 disks to the common pool and set up RAID 5 on it, allocating 1 virtual disk for Hot Spare. Next, you need to decide on the binding of LUNs to clients, and here you have to understand the tricky hierarchy of which client is given which LUN.

Logical disks and their snapshots are combined into groups, and it is these groups that are presented to hosts. A host, in storage terminology, is a logical grouping of different iSCSI initiators that can belong to different physical or virtual servers. It is the iSCSI initiator's membership in a host that determines whether the client sees the LUN or not. The hierarchy could have ended at the "host" level, but Huawei decided that hosts should also be organized into groups, and that these groups of hosts and groups of LUNs should be linked together.

Each physical port of the SmartIO controller is a separate iSCSI target with a specific IP address. To bind a LUN to an iSCSI target, you need to create a host, a host group, add active iSCSI client initiators to the host, create a group of LUNs and configure such routing in the Mapping tab. By the way, a given route can be rigidly tied to a physical port, more precisely, again to a group of ports. If you have worked with simpler storage systems before, you will have to do a little brain work, but taking this hierarchy for granted, you can batch create hundreds of LUNs in a matter of seconds and bind them to your client machines with almost no restrictions.
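The hierarchy is easier to grasp as a data model. The sketch below is only a mental model of the relationships described above: the class names, the IQNs and the port names are hypothetical, and the real storage system is configured through its web interface rather than through code like this.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A logical host: a named set of iSCSI initiator IQNs."""
    name: str
    initiators: set = field(default_factory=set)


@dataclass
class MappingView:
    """Links a host group to a LUN group, optionally via a port group."""
    host_group: list                      # list of Host objects
    lun_group: set                        # LUN names
    port_group: set = field(default_factory=set)  # empty means any port


def visible_luns(views, initiator_iqn, port):
    """Return the LUNs an initiator can see when logging in through `port`."""
    luns = set()
    for view in views:
        if view.port_group and port not in view.port_group:
            continue
        if any(initiator_iqn in host.initiators for host in view.host_group):
            luns |= view.lun_group
    return luns


# Hypothetical configuration: two hosts, two mapping views.
vm1 = Host("vm1", {"iqn.example:vm1"})
vm2 = Host("vm2", {"iqn.example:vm2"})
views = [
    MappingView([vm1], {"LUN_1"}, {"P0"}),  # LUN_1 only for vm1 via port P0
    MappingView([vm1, vm2], {"LUN_2"}),     # LUN_2 for both, any port
]
print(sorted(visible_luns(views, "iqn.example:vm1", "P0")))
```

Once the groups exist, adding a LUN to a LUN group exposes it to every host in the linked host group at once, which is what makes batch operations on hundreds of LUNs quick.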

I am very pleased with the built-in performance monitoring, which displays the intensity of requests to a physical port, disk pool, RAID array and each LUN separately. What's more, a standalone storage utilization forecasting tool will show you trends in disk usage and machine utilization. This allows you to plan ahead for the necessary upgrades to avoid sudden bottlenecks in your IT infrastructure.

When you activate licenses for advanced software functions, you will be presented with new tabs with programs for configuring mirroring and LUN migration, configuring fault-tolerant configurations, and managing tiering. Let's take a look at some of these paid features.

LUNs can be mirrored within one storage system, but on different disk pools. This feature is meant to improve the reliability of critical applications, but given how well the OceanStor 2200 V3 resists RAID failure, it's hard for me to say why it might be needed in real life. LUN migration, on the other hand, makes it possible, when installing a new storage system into an existing heterogeneous infrastructure, to transfer logical disks from Dell, IBM or HP storage without interrupting the work of clients, while preserving the ID number of the logical disk. We tested this feature by trying to transfer LUNs from a Synology DS1511 over iSCSI, but to no avail: the storage systems could not see each other, and we had no Dell or HP products at hand.

Things like "LUN cloning" or remote replication surprise no one today, and apart from minor details Huawei has no special achievements here. What you should pay attention to is SmartMotion and SmartQoS. The SmartMotion algorithm lets you dynamically add space to LUNs from pools and, conversely, reclaim it.

SmartQoS is a traffic prioritization system configured per LUN. The principle of operation is the same as in network switches: when there are many concurrent requests, operations on a higher-priority LUN are processed first, and they are allocated more CPU resources, cache, network bandwidth and, of course, disk performance. That is, you can use SmartQoS to limit the performance of a LUN so that it does not interfere with others or, conversely, to ensure the highest performance for an important LUN.
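The "higher priority is served first" principle can be shown with a strict-priority queue. This is a generic scheduling sketch, not the SmartQoS algorithm: the class name, the numeric priority scale (lower number means more important) and the request strings are all assumptions.

```python
import heapq
from itertools import count


class QosQueue:
    """Strict-priority request queue: lower priority number is served first."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, lun, priority, request):
        heapq.heappush(self._heap, (priority, next(self._seq), lun, request))

    def next_request(self):
        _priority, _seq, lun, request = heapq.heappop(self._heap)
        return lun, request

    def __len__(self):
        return len(self._heap)


queue = QosQueue()
queue.submit("backup_lun", priority=2, request="read A")
queue.submit("db_lun", priority=0, request="write B")
queue.submit("backup_lun", priority=2, request="read C")

served = [queue.next_request() for _ in range(len(queue))]
print(served)
```

Strict priority is the simplest possible policy and can starve low-priority LUNs under sustained load, which is why practical QoS systems usually also offer limits or guaranteed shares.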

One of the most sought-after features in disk systems is Tiering, or multi-level data storage. A special algorithm collects statistics on access to chunks and moves the most frequently requested data to SSDs or high-speed HDDs. Many vendors have this today, but not every storage system lets you choose the time window during which the statistics are collected. Huawei understands that at night or on weekends your infrastructure may carry heavy backup traffic, which belongs on NL-SAS disks, so using a calendar you can specify the days of the week and the hours when Tiering collects statistics and when it does not.
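The effect of a monitoring schedule on tiering decisions can be illustrated with a small counter that ignores accesses outside the configured window. The class, its parameters and the chunk names are hypothetical; the sketch shows the idea only and says nothing about how SmartTier measures or moves data.

```python
from collections import Counter
from datetime import datetime


class TieringMonitor:
    """Counts chunk accesses only inside a weekly monitoring window."""

    def __init__(self, active_weekdays, start_hour, end_hour):
        self.active_weekdays = set(active_weekdays)  # 0 = Monday
        self.start_hour = start_hour
        self.end_hour = end_hour
        self.hits = Counter()

    def in_window(self, when):
        return (when.weekday() in self.active_weekdays
                and self.start_hour <= when.hour < self.end_hour)

    def record_access(self, chunk_id, when):
        if self.in_window(when):
            self.hits[chunk_id] += 1

    def hottest(self, n):
        """Chunks that would be candidates for promotion to faster media."""
        return [chunk for chunk, _ in self.hits.most_common(n)]


# Monitor working hours on weekdays only.
monitor = TieringMonitor(active_weekdays=range(5), start_hour=9, end_hour=18)

monday_morning = datetime(2024, 1, 8, 10, 0)   # a Monday
saturday_night = datetime(2024, 1, 6, 23, 0)   # a Saturday

for _ in range(3):
    monitor.record_access("db_chunk", monday_morning)
for _ in range(10):
    monitor.record_access("backup_chunk", saturday_night)

print(monitor.hottest(1))
```

Although the backup chunk was accessed more often, it never counts as hot because all of its accesses fall outside the window, so it stays on the slow tier.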

But what is missing in the Web interface is information about the load of processors and RAM, as well as the temperatures of storage components. When looking for performance bottlenecks, CPU and RAM utilization is the first place to start, especially when you have so many software functions that deal with data blocks. Well, since we're talking about speed, it's time to move on to testing.

Testing

In our configuration with NL-SAS disks, Huawei OceanStor 2200 V3 does not claim to be a performance champion, so we make a correction in advance for the lack of SSD cache and more or less fast magnetic media.

For testing, we used a test bench with the following configuration:

- Server 1
  - IBM System x3550
  - 2 x Xeon X5355
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Intel X520-DA2

- Server 2
  - IBM System x3550
  - 2 x Xeon X5450
  - 20 GB RAM
  - VMware ESXi 6.0
  - RAID 1, 2 x SAS 146 GB 15K RPM HDD
  - Mellanox ConnectX-2

- Storage System: Huawei OceanStor 2200 V3
  - 16 GB RAM
  - 2 x SmartIO 4 SFP+ FC 16 Gbps / ETH 10 Gbps
  - 12 x HDD NL-SAS 7200 RPM 2 TB
  - RAID 5

- Software
  - Debian 9 Stretch
  - Without Intel Meltdown / Spectre patches
  - VDBench 5.04.6 benchmark

An Inelt Monolith III 2000 RT uninterruptible power supply, which showed excellent results in our comparative test of small business UPS units, provided safe and stable power.

The servers were connected directly to the two storage host adapters using Intel XDACBL3M direct-attach cables. On the test servers running VMware ESXi, 8 virtual machines were deployed with Debian 9 x64 guests, for which 8 identical LUNs were allocated on the storage system. Each virtual machine was connected via iSCSI to its own LUN, using a 10-gigabit network port as the uplink of the VMkernel virtual switch. Each virtual machine ran the VDBench benchmark, which was controlled from a dedicated machine.

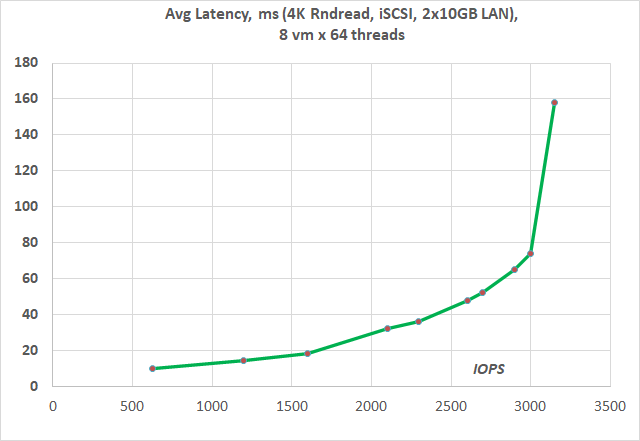

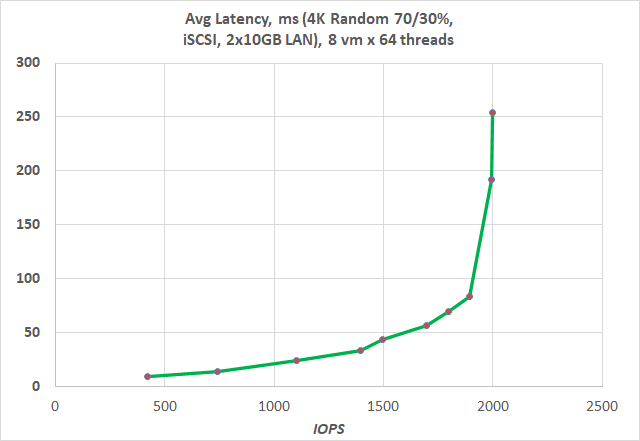

Before starting the main test, the LUN space is pre-filled for 120 minutes to exclude the impact of new empty HDDs and data fragmentation on speed. We start with a 4K Random Access benchmark with a different number of threads to determine how many threads we will be doing the main testing on.

The results show that with a load of 8 clients with 64 threads (total 512 threads), the speed starts to decrease slightly and the queue depth grows strongly. This means that we will test in 8 virtual machines mode with 64 threads each.

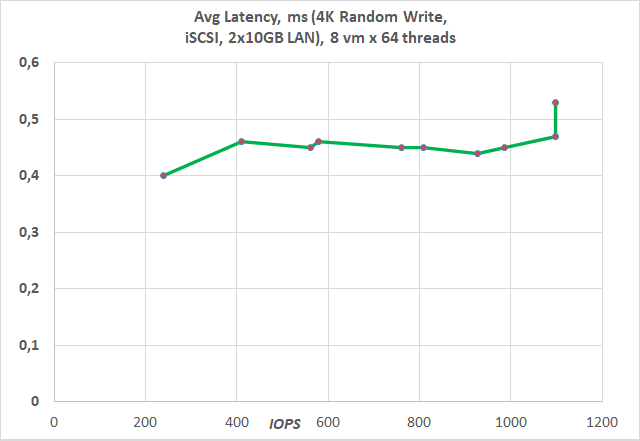

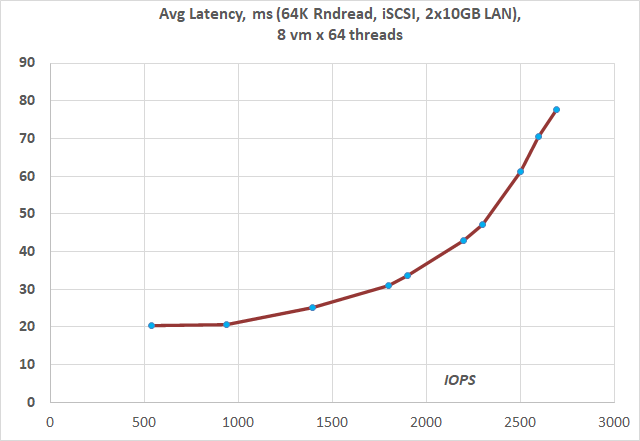

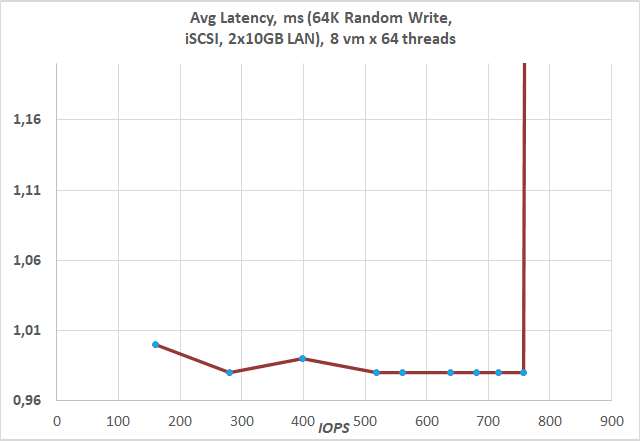

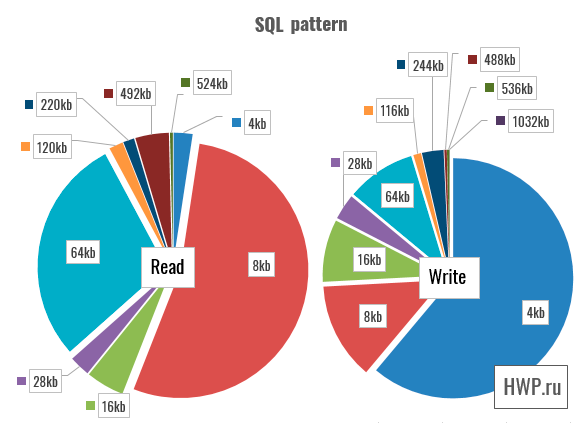

The speed of random access is quite predictable, while all write operations clearly land in the controller cache and practically do not depend on the load. Let's look at random access with a transaction size of 64 KB, a test that is recommended for Microsoft SQL Server.

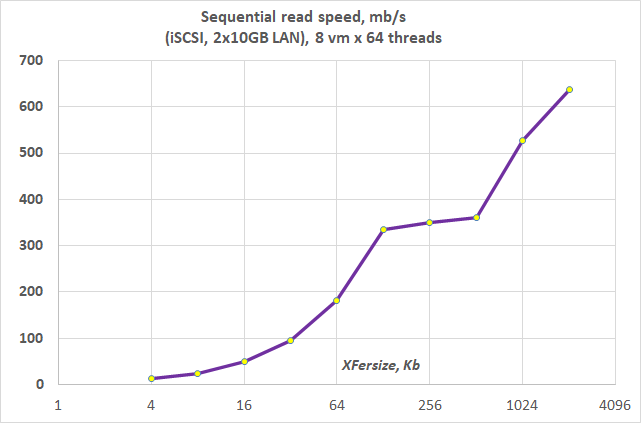

We were pleasantly surprised by the read test, which showed only a 30% decrease in speed compared to the random read test with 4 KB blocks. Let's see what happens with sequential reading.

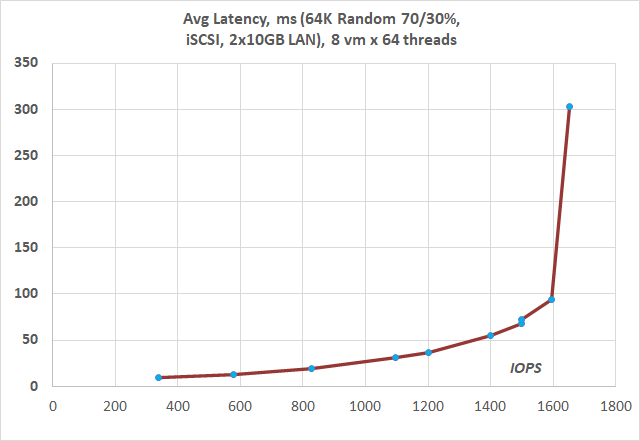

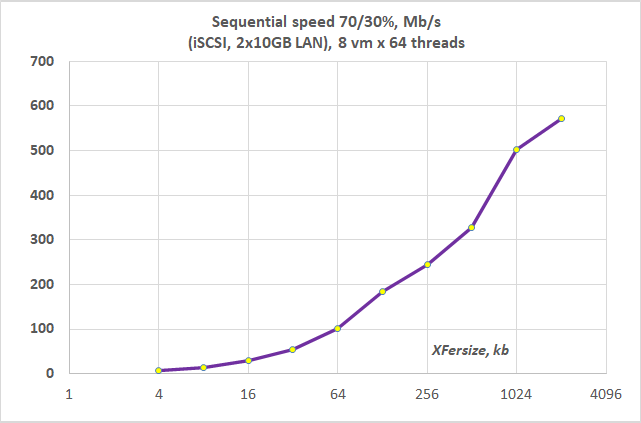

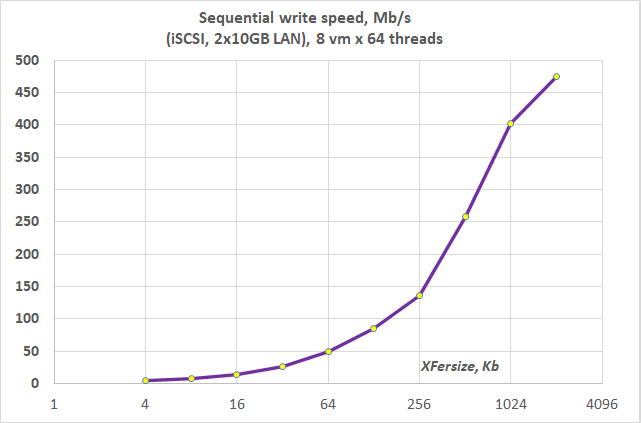

Sequential access is a bit disappointing: 12 NL-SAS drives can and should deliver over 1 gigabyte per second, but neither reads nor writes come close to that figure. I assume that this is due precisely to block virtualization, or rather, to the distribution of chunks across physical disks.

Real-World Task Patterns

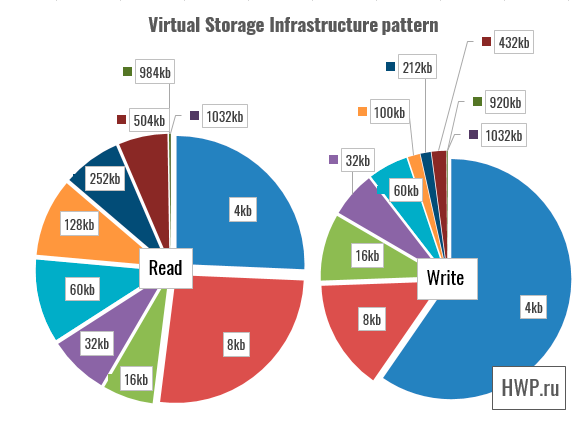

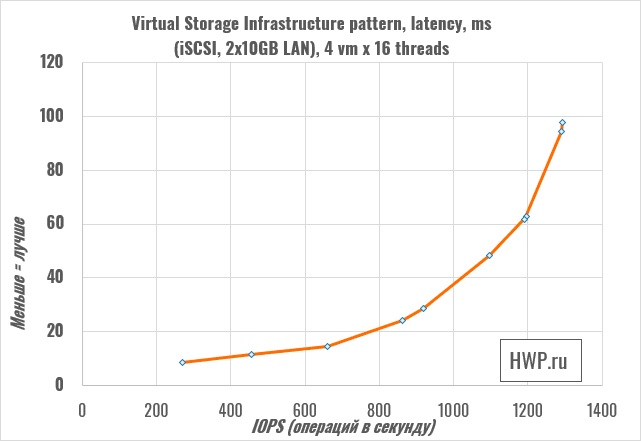

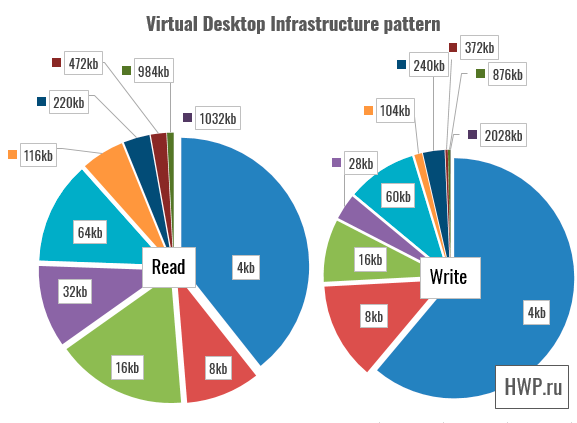

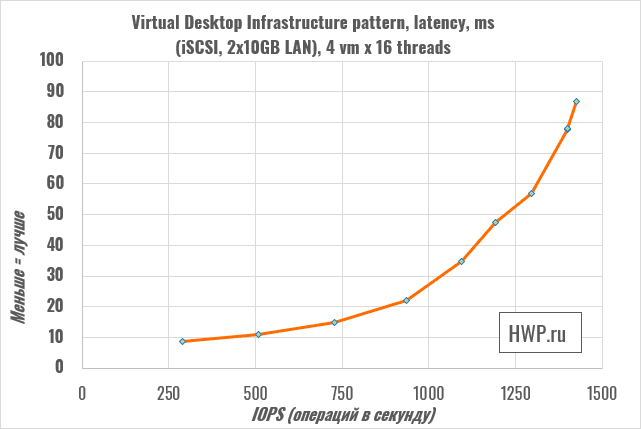

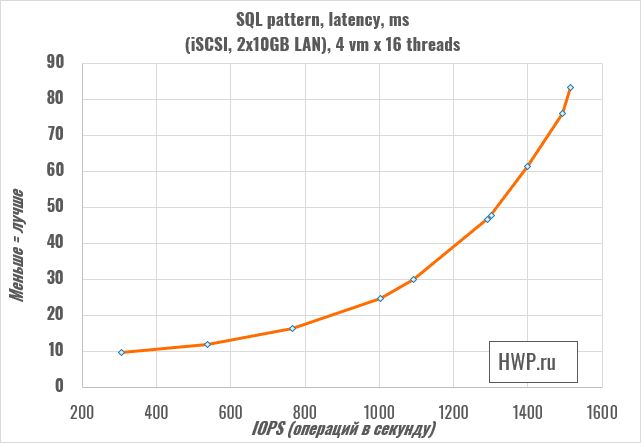

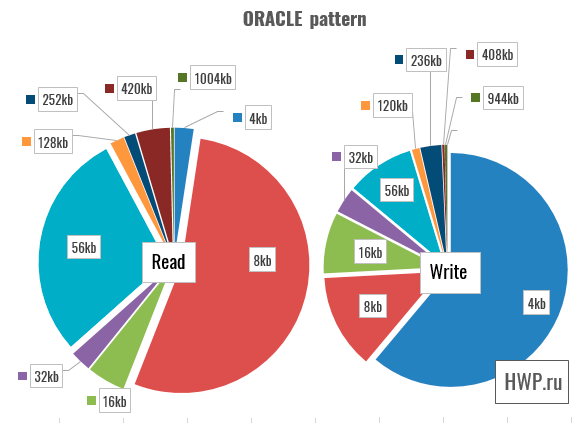

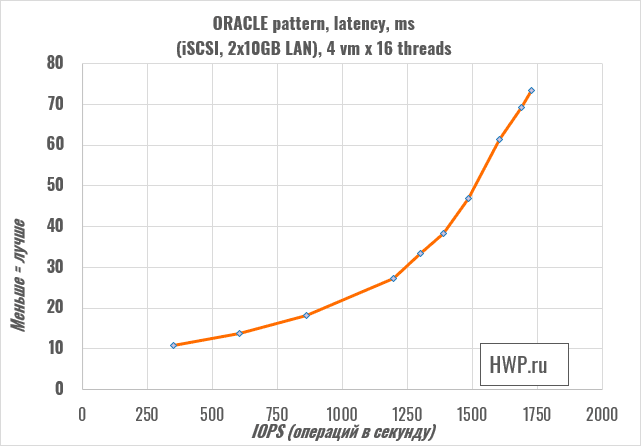

From synthetic tests, let's move on to emulating real tasks. The VDBench package can run patterns captured from real workloads by I/O tracing programs. Roughly speaking, special software records how an application, whether a database or something else, works with the file system: the ratio of writes to reads, the mix of random and sequential operations, and the block sizes used for writing and reading. We used patterns captured by Pure Storage for four cases: VSI (Virtual Server Infrastructure), VDI (Virtual Desktop Infrastructure), SQL, and Oracle Database. The test was run with 16 threads per virtual machine, which created a queue depth of approximately 64.

Moving from virtual applications to databases

NL-SAS hard drives are, of course, not designed for databases and virtual applications, but Huawei OceanStor 2200 V3 in our configuration can be used for databases if the load on the disk system is about 800 IOPS. At higher loads the latency grows further.

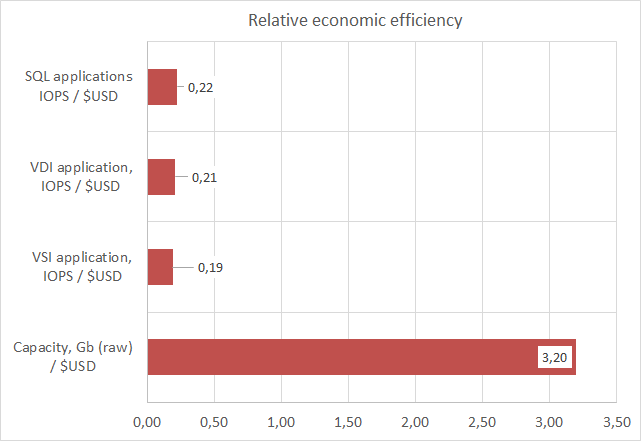

Cost Effectiveness

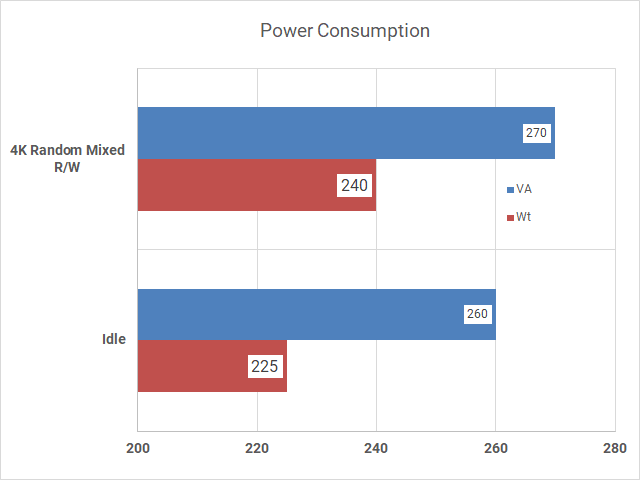

We start counting money by measuring the energy consumption of the assembled storage system in idle mode and under load.

Power consumption varies very little, which is typical for a machine with a large number of hard drives. Nor does Huawei OceanStor 2200 V3 heat up to any perceptible degree: the cooling system ran at minimum speed throughout the entire test. Excellent!

Under Huawei's licensing policy, licenses are installed for the entire life of the device, but support and updates for advanced features cease after 3 years. Our configuration used a single base license, Basic Software for Block, which includes the following features:

- Device Management

- HyperSnap

- HyperCopy

- SmartThin

- SmartMotion

- SmartErase, SmartConfig, SystemReporter, UltraPath

You do not need to purchase this license - the specified functionality is available immediately upon purchasing the device.

List of licensed features that are not included in the cost calculation:

- Performance Speedup Solution Suite (about 458 thousand rubles)
  - SmartTier
  - SmartQoS
  - SmartCache

- Integration Migration Solution Suite (about 390 thousand rubles)
  - SmartVirtualization
  - SmartMigration
  - HyperMirror

- Data Protection Software Suite (about 400 thousand rubles)
  - HyperClone
  - HyperReplication

These features are included when you purchase a separate feature license or bundle of licenses.

The cost of the storage system, not counting electricity, was 390,750 rubles, which is very cheap for such a device fitted with hard drives.

Thanks to its low price, Huawei OceanStor 2200 V3 shows a very favorable cost per gigabyte for the head unit.
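The cost per gigabyte can be estimated from the figures given in this review. The sketch assumes decimal units (1 TB = 1000 GB) and that the quoted price covers the head unit together with the 12 installed drives; the function name is illustrative.

```python
PRICE_RUB = 390_750   # quoted system price
RAW_TB = 12 * 2       # twelve 2 TB drives
USABLE_TB = 20        # RAID 5 with one virtual hot spare, as tested


def cost_per_gb(price_rub, capacity_tb):
    """Price in rubles per gigabyte, assuming 1 TB = 1000 GB."""
    return price_rub / (capacity_tb * 1000)


raw_cost = cost_per_gb(PRICE_RUB, RAW_TB)
usable_cost = cost_per_gb(PRICE_RUB, USABLE_TB)
print(f"raw: {raw_cost:.2f} RUB/GB, usable: {usable_cost:.2f} RUB/GB")
```

That works out to roughly 16.3 rubles per raw gigabyte and 19.5 rubles per usable gigabyte, before any paid licenses are added.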

Extended Warranty Packages

The standard device warranty is 3 years. This period can be extended by purchasing the appropriate service packages until June 30, 2025 (the End of Support date for this model). Extended warranty service packages are also available:

- 9x5 Next Business Day with a visit to the installation site

- 24x7x4H onsite

- 24x7x2H with on-site visit

Service is carried out by the authorized service centers and branches of the company.

Conclusions

Huawei OceanStor 2200 V3 is an example of how a very functional storage system for SAN applications can be purchased for relatively little money. Perhaps the most important advantage of this model is the RAID 2.0+ technology, thanks to which you can sleep soundly when one hard disk drops out of a RAID 5 array: in 40 minutes the array will be back in the Healthy state, and if free space allows, you can calmly wait for a replacement disk to arrive under warranty without fearing for the safety of your data. Such a fault-tolerant solution, which duplicates all components at the hardware level and solves the long-standing problems of RAID arrays at the software level, fits perfectly into the role of a file archive or a storage system for software developers. Since this is still the entry level, where price matters a great deal, you can use the OceanStor 2200 V3 to store backups even without host adapters: eight Gigabit Ethernet ports are enough for iSCSI backup of an entire small office.

Of course, the entry-level price segment is not without drawbacks. Among them I count the lack of horizontal scaling, the lack of file access support in the 16 GB version, and an unreasonably expensive license for LUN replication and cloning, features that are generally free even on cheaper devices.

Fortunately, you can do without LUN replication and cloning, or, if necessary, assign these tasks to some virtual server with Rsync installed. And for the money saved, it is better to purchase an extended warranty package, because not every data storage system worth 400 thousand rubles can be provided with an official service with a round-the-clock visit of a specialist to the data center. For many state-owned enterprises, the extended warranty is not only the personal peace of mind of the head of the IT department, but also an excellent way to limit the supply of analogues when purchasing equipment at auction, and apparently Huawei is well aware of this.

Mikhail Degtyarev (aka LIKE OFF)