Huawei Ascend is a unified platform for AI and ML

The field of artificial intelligence looks so promising today that every major chip manufacturer has either already released its own solution or will do so in the near future. The real players in the AI market, on whose solutions you can actually build infrastructure, are few: Nvidia with the CUDA platform, its eternal competitor AMD, and Huawei itself. Of course, you could also mention Google TPU, but it is not available for sale.

As a rule, the introduction of artificial intelligence in an enterprise goes through two stages. The first is machine learning (training), in which processors (GPU, TPU) work through huge amounts of data and form a model. For training, developers typically use cloud services or equipment installed in the company's data center. If you associate artificial intelligence with servers packed to capacity with video cards, you are thinking of this training stage.

The second stage is the application of the trained model (inference) on a specific device, for example in a surveillance camera or on a server that processes data from a sensor farm. This calls for a processor with a different architecture than the one used for training, with lower power consumption and cost, because it is at this stage that AI goes to the masses.

Of course, the question of compatibility arises: will a model designed on a GPU take advantage of all the optimizations of the processor it will eventually run on? If you retrain it dynamically with new data, will you have to convert it every time between the frameworks in which you are developing? In general, while this industry is still in turmoil and every vendor contributes something of its own, it is better to play it safe.

Why Huawei?

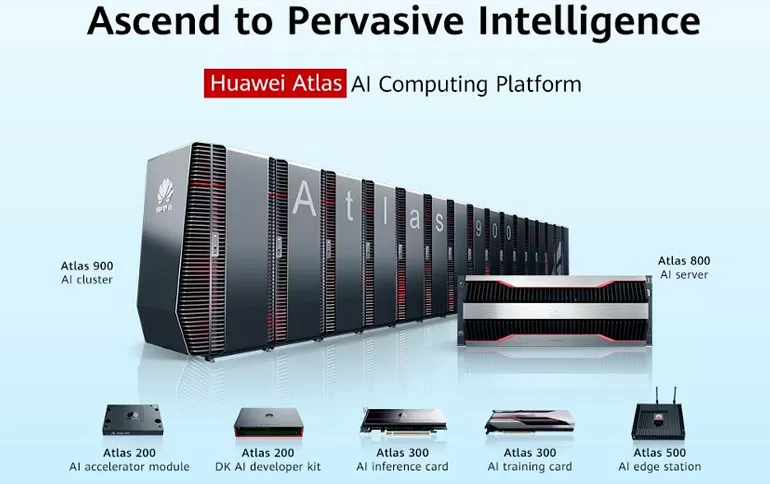

First, Huawei is the only company that has single-brand solutions across the entire AI cycle, from machine learning systems to edge servers and end devices such as surveillance cameras. From top to bottom, you have a single vendor, a single hardware architecture, and a single software framework.

Secondly, Huawei is a way to diversify away from American IT technologies, which is critically important for state-owned companies. China does not impose sanctions and is not in the habit of prohibiting, restricting or meddling in other technologies.

Thirdly, traditionally Huawei is cheaper than similar solutions from American brands (HP, Nvidia, Cisco, Dell), and as a rule, by a notable 30% or more.

Fourth, Huawei has been at the forefront of technology for a long time, and its Ascend 910 AI platform is able to rival companies that have been in the AI market for over 10 years. Let's take a look at the Ascend processor family.

Huawei Ascend 910

Of course, our Chinese comrades have real trouble with imagination: smartphones, smartphone processors and artificial intelligence chips are all produced under the Ascend name, and as a whole line, from small to large. Naturally, we are interested in the Ascend 910 itself - "what Nvidia is afraid of."

Firstly, the Ascend 910 is a "chiplet" design that combines computing dies and Samsung's fastest HBM2 Aquabolt memory in one package. This is a very important step because memory bandwidth is a key factor in the performance of machine learning systems. In the Ascend 910 chip, it is 1.2 TB/s. The computing dies contain matrix multiplication blocks and ARM cores, as well as FP16 and INT8 blocks to speed up computations that do not require high precision.
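To show why INT8 blocks suit computations that do not require high precision, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is a generic textbook scheme, not Huawei's implementation; the function names and the sample weights are assumptions made for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    # The scale maps the largest magnitude onto 127; use 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Map 8-bit integers back to approximate floats."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to illustrate the rounding error.
weights = [0.6, -1.0, 0.25, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize_int8(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each value is stored in one byte instead of two (FP16) or four (FP32), and the rounding error stays within half a quantization step, which many inference workloads tolerate.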

| | Ascend 310 | Ascend 910 |
|---|---|---|
| Codename | Ascend Mini | Ascend Max |
| Architecture | DaVinci | DaVinci |
| FP16 | 8 TeraFLOPS | 256 TeraFLOPS |
| INT8 | 16 TeraOPS | 512 TeraOPS |
| Number of video channels for decoding H.264/H.265 1080p, 30 FPS | 16 | 128 |
| Power consumption, W | 8 | 350 |
| Process technology, nm | 12 | 7 |

The Ascend 910 has a computational capacity of 256 TFLOPS FP16 and 512 TOPS INT8, and it can decode 128 Full HD video streams. Since the chiplet structure allows power and performance to be varied at the production stage, the same architecture is available in a more economical package for less resource-intensive tasks: the Ascend 310.
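The link between the 1.2 TB/s memory bandwidth and the 256 TFLOPS FP16 peak can be put into numbers with a simple roofline estimate. The sketch below uses only the two figures quoted in this article; the roofline model and the function names are assumptions added for illustration, not a Huawei tool.

```python
PEAK_FP16_FLOPS = 256e12       # 256 TFLOPS FP16, as quoted for the Ascend 910
MEMORY_BANDWIDTH_BPS = 1.2e12  # 1.2 TB/s HBM2 bandwidth, as quoted above


def break_even_intensity(peak_flops, bandwidth):
    """FLOPs per byte a kernel needs before compute, not memory, is the limit."""
    return peak_flops / bandwidth


def attainable_flops(intensity, peak_flops, bandwidth):
    """Roofline model: the lower of the compute roof and the bandwidth roof."""
    return min(peak_flops, intensity * bandwidth)


ridge = break_even_intensity(PEAK_FP16_FLOPS, MEMORY_BANDWIDTH_BPS)
memory_bound = attainable_flops(10, PEAK_FP16_FLOPS, MEMORY_BANDWIDTH_BPS)
compute_bound = attainable_flops(1000, PEAK_FP16_FLOPS, MEMORY_BANDWIDTH_BPS)
```

Under these assumptions a kernel must perform roughly 213 FP16 operations per byte fetched from memory to reach the peak, which is why fast HBM2 in the same package matters so much.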

For example, if the Ascend 910 is intended for machine learning, then for inference there is the Ascend 310, a processor that consumes only 8 W and delivers 16 TOPS @ INT8 and 8 TFLOPS @ FP16.
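A quick way to compare the two chips is INT8 throughput per watt. The sketch below takes its numbers from the comparison table in this article, including the 350 W power figure listed there for the Ascend 910; the dictionary layout and function name are illustrative assumptions.

```python
# Figures copied from the comparison table; power for the 910 is the table value.
SPECS = {
    "Ascend 310": {"int8_tops": 16, "fp16_tflops": 8, "power_w": 8},
    "Ascend 910": {"int8_tops": 512, "fp16_tflops": 256, "power_w": 350},
}


def tops_per_watt(chip):
    """INT8 tera-operations per second delivered for each watt consumed."""
    spec = SPECS[chip]
    return spec["int8_tops"] / spec["power_w"]


efficiency = {chip: tops_per_watt(chip) for chip in SPECS}
```

By this rough measure the small inference chip comes out ahead per watt, which fits its role in edge devices.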

Since AI systems can scale horizontally, Huawei offers both small “building blocks” and large systems in the Atlas series for building AI infrastructure.

Huawei Atlas 800 Family

For large-scale deployments, Huawei offers Atlas 800 servers for installation in the company's data center. Please note: the machine learning solution is delivered exclusively as a ready-made Atlas 800 server (Model 9000) with Huawei Ascend 910 neural accelerators, or as a complete high-performance Atlas 900 cluster.

The high power consumption of the Ascend 910, which is 310 W, limits the form factor of these processors: they come only as "mezzanine" type boards fitted with massive heat sinks. Ascend 910 processors are not yet available as expansion cards, so they cannot be installed in a regular server or workstation. The Huawei Atlas 800 Model 9000 machine learning servers are configured to order and accommodate 8 Ascend 910 processors in a 4U chassis. The host platform consists of 4 Huawei Kunpeng 920 processors with ARM64 architecture (covered in detail in our Review of Kunpeng 920).

The Atlas 800 Model 9000 server delivers 2 PFLOPS of FP16 performance, has 8 100-Gigabit network interfaces, can be air or liquid cooled, and has an efficiency of 2 PFLOPS/5.5 kW. This model is intended for HPC environments, to build AI models in exploration, oil production, healthcare and smart city applications.
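The server-level figures follow directly from the per-chip numbers, as this small check shows. All inputs come from this article; the variable names and the use of decimal prefixes (1 PFLOPS = 1000 TFLOPS) are assumptions.

```python
ASCEND_910_FP16_TFLOPS = 256  # per-chip FP16 peak quoted in the article
CHIPS_PER_SERVER = 8          # Ascend 910 processors in one Atlas 800 Model 9000
QUOTED_PFLOPS = 2.0           # server performance as quoted
QUOTED_POWER_KW = 5.5         # server power as quoted

# Sum of the per-chip peaks, using decimal prefixes.
aggregate_tflops = ASCEND_910_FP16_TFLOPS * CHIPS_PER_SERVER
aggregate_pflops = aggregate_tflops / 1000

# Efficiency from the quoted figures: 2 PFLOPS = 2e6 GFLOPS, 5.5 kW = 5500 W.
gflops_per_watt = (QUOTED_PFLOPS * 1e6) / (QUOTED_POWER_KW * 1e3)
```

Eight chips add up to 2048 TFLOPS, which rounds to the quoted 2 PFLOPS, and the quoted efficiency works out to roughly 364 GFLOPS per watt for the whole server.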

Again, I will reproach our Chinese comrades for the lack of elementary imagination, because they gave the same Atlas 800 name to completely different servers for different needs: the Huawei Atlas 800 Model 3000 and 3010. These two servers are intended for the next phase of using AI, inference with existing models in video surveillance analysis, text recognition and "smart city" systems. Physically, these machines are 2-processor 2U servers fitted with Atlas 300 expansion cards that perform the AI calculations.

Huawei Atlas 800 Model 3010 is based on two Xeon Scalable processors and supports up to 7 Atlas 300 boards, while Model 3000 is based on two Kunpeng 920 processors and takes one board more, 8 in total. Another argument in favor of Huawei's ARM64 architecture. With integer INT8 operations, the performance of the Atlas 800 is 512 TOPS for Model 3000 and 448 TOPS for Model 3010, which allows analyzing 512 and 448 HD streams respectively.
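These totals can be reproduced from the per-chip figures: each Atlas 300 card carries 4 Ascend 310 processors (as described further on in this article), and each processor delivers 16 TOPS INT8 and decodes 16 streams. The class and field names below are illustrative assumptions.

```python
from dataclasses import dataclass

ASCEND_310_INT8_TOPS = 16   # per chip, from the comparison table
ASCEND_310_STREAMS = 16     # 1080p @ 30 FPS streams per chip, from the table
CHIPS_PER_ATLAS_300 = 4     # Ascend 310 processors on one Atlas 300 card


@dataclass(frozen=True)
class InferenceServer:
    name: str
    atlas_300_cards: int

    @property
    def chips(self):
        return self.atlas_300_cards * CHIPS_PER_ATLAS_300

    @property
    def int8_tops(self):
        return self.chips * ASCEND_310_INT8_TOPS

    @property
    def video_streams(self):
        return self.chips * ASCEND_310_STREAMS


servers = [
    InferenceServer("Atlas 800 Model 3000", atlas_300_cards=8),
    InferenceServer("Atlas 800 Model 3010", atlas_300_cards=7),
]
summary = {s.name: (s.int8_tops, s.video_streams) for s in servers}
```

The single extra card in the Kunpeng-based Model 3000 accounts for the whole 64 TOPS and 64-stream difference between the two servers.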

Huawei Atlas 500 Model 3000 is something unique in the modern world of artificial intelligence - an edge AI server. Designed to be installed at an end site, be it a store, a drilling rig or a plant workshop, it has a fanless design with passive cooling. At the same time, the server has an amazing operating temperature range, from -40 to +70 degrees Celsius, so no special heated cabinets are required for its installation.

The main purpose of Huawei Atlas 500 is to analyze streams from video surveillance cameras. The server is powered by an Ascend 310 processor, which can simultaneously decode 16 video streams at 1080p @ 30 FPS.

As befits an edge server, it has high-speed Wi-Fi and LTE for upstream links and two Gigabit RJ45 ports for downstream connections to a video surveillance network. That is, you can install the Huawei Atlas 500 at completely self-contained facilities and connect a loudspeaker and microphone to it directly, for example to play warnings or provide communication between staff and a virtual operator.

The server has a built-in 5 TB hard drive for storing fragments of digital video archives. Typical power consumption of the Huawei Atlas 500 ranges from 25 to 40 W, depending on whether the hard drive is installed.

By default, the Huawei Atlas 500 is powered by 24 V DC, and a power supply is not even included in the package. The server seems to have been designed specifically for cabinets on telecommunication masts and poles, which are now used to house cellular repeaters and smart city components.

If your enterprise infrastructure is already based on solutions from another vendor but you need Huawei's capabilities as an AI platform, choose an Atlas 300 card. Each board has 4 Ascend 310 processors with a total performance of 64 TOPS INT8, which allows decoding 64 video streams simultaneously. Yes, you are not mistaken, these are the PCIe cards installed in the Atlas 800 (Model 3000) servers discussed above, and interestingly, they are 1-slot adapters with passive cooling and no additional power connector. What does this mean in practice?

It means that the Huawei Atlas 300 can be installed in almost any computer case, even in a NAS (if needed) or a 1U server: you only need a PCI Express 3.0 x16 slot. Again, the Ascend 310 chip is very undemanding in terms of cooling, and the Atlas 300 board can operate at ambient temperatures up to 55 degrees Celsius.

In total, 32 GB of RAM is installed on the Atlas 300 board, but the memory is distributed across the four processors, so no more than 8 GB can be allocated to one task. On the other hand, the system detects the card as 4 AI accelerators, which means that 4 independent tasks can be run on each card.
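The memory layout described above can be expressed as a simple placement check: one task per accelerator, at most 8 GB each, at most 4 tasks per card. This is a hypothetical helper written for illustration, not part of any Huawei tool, and the task sizes are made up.

```python
def assign_tasks(task_memory_gb, num_devices=4, device_memory_gb=8):
    """Place one task on each accelerator of an Atlas 300 card.

    Returns a mapping of device index to the memory the task needs (GB).
    Raises ValueError if a task does not fit or there are too many tasks.
    """
    if len(task_memory_gb) > num_devices:
        raise ValueError("more tasks than accelerators on the card")
    placement = {}
    for device_id, needed in enumerate(task_memory_gb):
        if needed > device_memory_gb:
            raise ValueError(
                f"task needs {needed} GB, but one accelerator has {device_memory_gb} GB"
            )
        placement[device_id] = needed
    return placement


# Hypothetical workload: three models of 6, 8 and 2 GB.
placement = assign_tasks([6, 8, 2])
```

A 12 GB model would be rejected here even though the card has 32 GB in total, which is exactly the limitation the per-processor memory split imposes.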

Interestingly, not only an existing server but any other "smart" device can be made even smarter by installing an Atlas 200 module with an Ascend 310 processor. This small box can be installed in surveillance cameras for face recognition, in robots or drones ... It has a serial output interface for connection to contactors and a power consumption of only 9.5 W. At the same time, it can decode the same 16 channels of H.264 video at 1080p resolution.

For software developers, Huawei offers the Atlas 200 DK developer kit with the Ascend 310 processor. It is a small box that consumes only 20 W and has a gentleman's set of I/O ports (USB, RJ45, I/O). With its help, you can validate how an algorithm works on site before purchasing and installing equipment, and in general you can "feel" how AI works on the same processor that will be installed in production. Well, since we have come to software, we should talk about Huawei's AI platform.

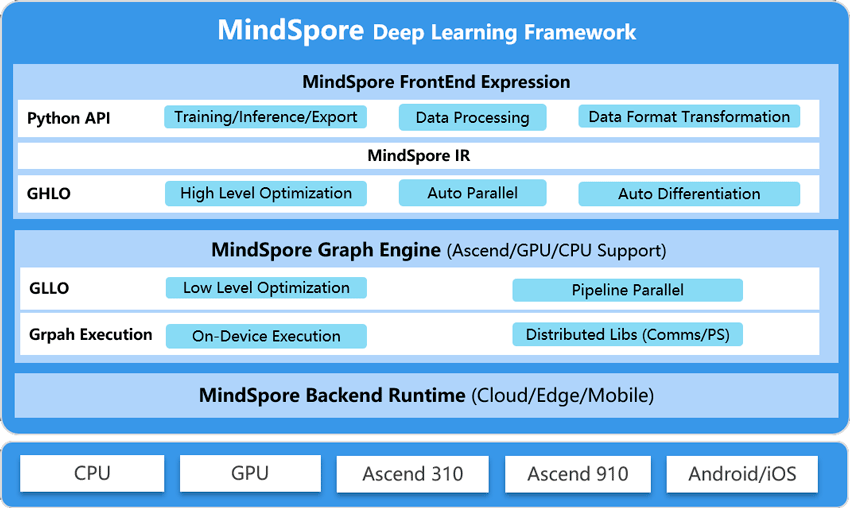

MindSpore

Huawei has not limited itself to releasing drivers for its Ascend series products; such a modest step is not for a giant of this size. Instead, Huawei launched its own AI platform, MindSpore, putting itself alongside Google (TensorFlow) and Facebook (PyTorch). This is a free framework that you program in Python 3.7.5, and just think: in fact, Huawei has its own "TensorFlow". At the time of writing, it was available in two versions: 0.1.0-alpha and 0.2.0-alpha.

And the best part is that Huawei understands that in today's open world, building a community of developers requires support for all the standards, so MindSpore supports x86-series CPUs, Nvidia GPUs with CUDA 9.2/10.1 libraries, and of course the Ascend 910. You can install MindSpore via pip or in an Anaconda virtual environment. Packages are currently available for Ubuntu x86, Windows x64 (CPU only) and EulerOS (aarch64 and x86).
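Because the same framework targets CPU, GPU and Ascend, code can pick a backend at start-up. The sketch below is a minimal example under stated assumptions: `pick_device_target` and `configure_mindspore` are hypothetical helpers, and the `context.set_context` call follows the API documented for early MindSpore releases, so newer versions may differ. The import is guarded so the script also runs where MindSpore is not installed.

```python
# Backends in order of preference; the strings are MindSpore device_target names.
PREFERENCE = ("Ascend", "GPU", "CPU")


def pick_device_target(available):
    """Return the most capable backend present in `available`."""
    for target in PREFERENCE:
        if target in available:
            return target
    raise ValueError("no supported backend available")


def configure_mindspore(target):
    """Apply the choice if MindSpore is installed; return True on success."""
    try:
        from mindspore import context  # early-release API; an assumption here
    except ImportError:
        return False
    context.set_context(mode=context.GRAPH_MODE, device_target=target)
    return True


# Hypothetical machine: a CPU-only Windows x64 workstation.
target = pick_device_target({"CPU"})
configured = configure_mindspore(target)
```

On a server with Ascend 910 accelerators the same script would be called with `{"Ascend", "CPU"}` and select the Ascend backend without other changes to the model code.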

Of course, it is clear that Huawei is only at the beginning of MindSpore's development, and much will depend on the adoption of the framework by the programming community. However, in terms of prospects, the plans of the Chinese are downright Napoleonic:

MindSpore Development Directions:

- Support for a wider variety of models (classic models, generative adversarial network GAN, recurrent neural network, transformers, reinforcement learning, probabilistic programming, AutoML and others)

- Extending APIs and Libraries to Improve Programming Capabilities

- Optimizing Huawei Ascend Processor Performance

- Evolution of the software stack and performing computational graph optimizations (adding additional optimization capabilities, etc.).

- Support for more programming languages (not just Python)

- Improving distributed learning by optimizing automated scheduling, data distribution, and more

- Improvements to the MindInsight tool to make it easier for the programmer

- Improved functionality and security for Edge devices

Note the expansion of programming languages: today it is only Python, but it is quite logical to expect support for R, LISP, Smalltalk and Prolog.

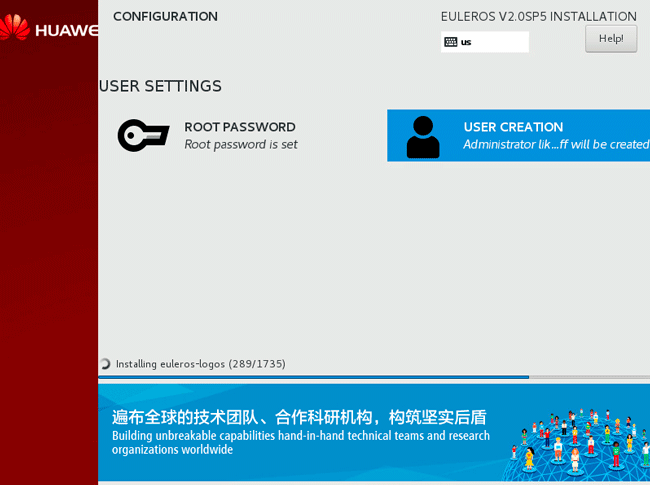

EulerOS

If you have not heard of EulerOS, that is not surprising, because this operating system was announced quite recently, in April 2020. Huawei needed its own Linux distribution that it could offer as a platform for the ARM64 architecture and for AI applications. They took CentOS as a basis, solved its main problem (delayed security updates) by organizing a round-the-clock support team, and obtained CC EAL4+ and CC EAL2+ security certificates. The result is a product with the same architecture as Red Hat or Oracle Linux, which makes it easy to migrate to.

Huawei's repositories contain a whole bunch of open source enterprise software, including for network security, working with big data, building storage networks, migration, cloud management, databases, etc.

EulerOS is available as a container image in the official Docker repository and as an ISO image for download on the official site.

MindSpore: outlook

Today, Huawei has everything it needs to gain a confident foothold in the artificial intelligence market: an open platform that can compete with the all-too-familiar TensorFlow, and a full cycle of hardware solutions from machine learning systems to inference. Plus its own operating system and its own server platforms, with the maximum level of localization, on which you can build mono-brand solutions protected from political troubles.

I would also like to note that in 2019 Huawei opened its own center of competence in artificial intelligence at the Moscow Institute of Physics and Technology (MIPT), so in the future the number of domestic developers for the Huawei platform will grow.

Recommendations when ordering

Huawei is an example of a company that breaks into the lead in any business it enters and competes with those who set the de facto standards. Many of us should learn from its persistence in expanding its presence in all technological areas of the modern IT world. Today, the company is starting to build its own chip production facilities so as not to depend on Taiwan's TSMC, which will make its products even more independent of US trade policy. At the same time, the company is investing in the next generation of IT specialists and in its own software platforms, development environment and hardware. No other company on the market offers a similarly integrated platform yet, so I have no doubt that Huawei MindSpore will become as much of a standard as TensorFlow/CUDA. Yes, today it also pays to do projects on a platform that gives a price advantage when ordering a ready-made solution. Still, before ordering, even if you plan to use AI solutions exclusively from Huawei, with MindSpore or another framework, I recommend checking how well code from other platforms converts for your needs: TensorFlow and Caffe ... PyTorch is on the way, and others.

Mikhail Degtyarev (aka LIKE OFF)

06/11/2020