Smart chips will soften the decline of Moore's law in data centers

So if Moore's law is slowing down and we can no longer count on faster processors arriving every year, what does that mean for data center technologists and architects?

One obvious direction for chip manufacturing is multi-chip packaging, in which several silicon dies are assembled in a common package that looks like a single chip from the outside. For example, lift the lid on AMD's new EPYC processor and you'll see eight 7nm processor dies plus a ninth 14nm I/O die. Another example is TSMC's demonstration of a giant interposer, whose very existence shows that Moore's law is still in effect.

Of course, packaging processor cores as chiplets is something of a cheat: this innovation will not deliver all the cost reductions and performance gains that Moore's law and Dennard scaling once did. Whatever one says, multi-chip packaging will be a bright but short-lived direction in processor development. Much more interesting are developments in two areas:

- Fundamental technological innovations such as carbon nanotubes, optical computing, and quantum computing

- Architectural innovations beyond the CPU and the rise of domain-specific processing

The number of computers versus the performance of each

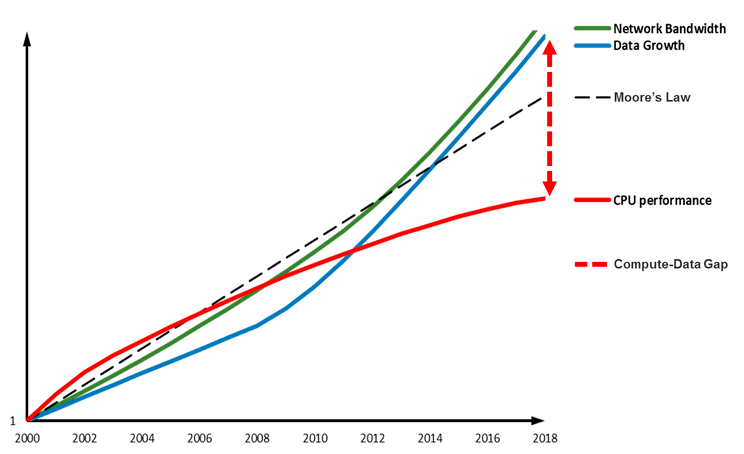

First of all, we must recognize that gains in processor speed are slowing down, while data volumes keep growing unabated. The result is a gap between compute and data that will keep widening over time.

If individual servers are not getting faster, the solution is to use more of them, connected by high-performance networks. Microsoft Azure cloud data centers are a good example of cluster computing at massive global scale.

Innovation at the data center level matters to cloud hyperscalers because the data sets and computations they handle simply cannot be processed on a single computer, or even on a single rack of servers. The scale of these modern workloads demands massive parallelism, and once a computation is spread across many machines, the response time of the slowest machine matters more than the average latency. This, in turn, makes deterministic latency vital, which calls for hardware implementations.
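
The effect of the slowest machine can be made concrete with a back-of-the-envelope sketch. Assume, hypothetically, that each server independently has a 1% chance of responding slowly. A request that fans out to N servers finishes only when the slowest one answers, so the probability that the whole request is slow is 1 - 0.99^N, which already exceeds 60% at N = 100. The 1% figure and the independence assumption are illustrative, not measurements, and the function names are made up for this example.

```python
from typing import Sequence

# Back-of-the-envelope model of tail latency in a fan-out request.
# Assumptions (illustrative only): each node is slow with a fixed, independent
# probability, and the request completes when the slowest node responds.


def job_latency(node_latencies_ms: Sequence[float]) -> float:
    """A parallel job finishes only when its slowest node has responded."""
    return max(node_latencies_ms)


def prob_request_is_slow(num_nodes: int, per_node_slow_prob: float = 0.01) -> float:
    """Probability that at least one of num_nodes independent nodes is slow."""
    return 1.0 - (1.0 - per_node_slow_prob) ** num_nodes


if __name__ == "__main__":
    # One straggler dominates: the average here is about 72 ms, but the job takes 250 ms.
    print(job_latency([12.0, 11.5, 250.0, 13.2]))
    for n in (1, 10, 100, 1000):
        print(f"{n:>5} nodes: P(request is slow) = {prob_request_is_slow(n):.3f}")
```

Under these assumptions, a 1-in-100 event on each node becomes the common case for the request as a whole, which is why per-node latency has to be predictable rather than merely good on average.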

As microservices and distributed computing become the norm, optimizing network latency is becoming a critical element of data center architecture. Scaling out works very well for workloads that are easy to parallelize, but inevitably some parts of a task are serialized, whether that means periodically synchronizing data between nodes or simply fetching additional data to continue processing. And here another law comes into play, one that imposes a fundamental limit on how quickly a task can be completed.

The end of Moore's law meets Amdahl's law

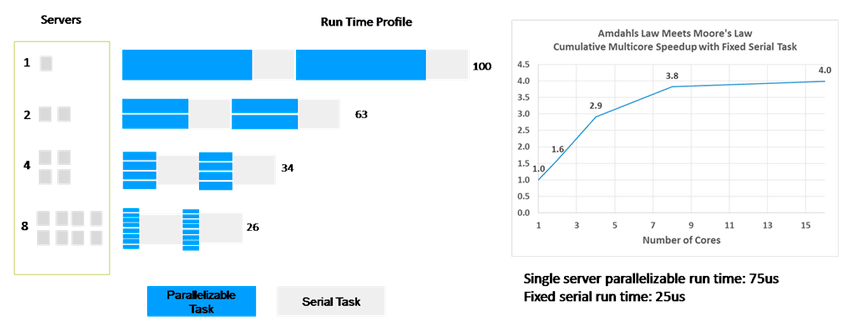

Around the same time that Gordon Moore first formulated his law, another computing pioneer noticed that parallel computing is limited by the parts of a task that must be performed sequentially. In 1967, Gene Amdahl presented the law that bears his name, according to which execution can be accelerated only up to a certain point. Amdahl's law describes the speedup of a computational problem that consists of a part that can be accelerated by parallel execution on multiple nodes or a cluster, and a remaining part that must run sequentially. His conclusion was that the benefit of parallel computing is limited by the sequential part of the problem, as follows:

- Execution time = T(1 - P) + T(P/S), where:

- T is the time required to complete the task on a single node

- P is the fraction of the task that can be accelerated

- S is the factor by which that fraction is accelerated

- P = 75% means that 3/4 of the task can be accelerated by running it in parallel on multiple nodes

- S = 2 with two nodes, which means that the accelerated part of the task runs twice as fast

The more compute nodes are added, the faster the parallelized part of the task runs, but the sequential part becomes an ever larger share of the total execution time. As more nodes are added, the sequential part comes to dominate. In the example above, the sequential part, which was initially only 25% of the single-node runtime, grows to 73% of the total execution time with 8 nodes (0.25 / (0.25 + 0.75/8) ≈ 0.73), limiting any further benefit from scaling.
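
The arithmetic behind the 73% figure can be reproduced with a short script. It assumes, as the S = 2 example does, that the accelerated part speeds up in proportion to the number of nodes (S = node count), which is an idealization; the function names are illustrative.

```python
# Amdahl's law as defined above: execution time = T(1 - P) + T(P/S).
# Idealization: the parallel part scales perfectly, so S equals the node count.


def execution_time(t: float, p: float, s: float) -> float:
    """Total runtime when a fraction p of a task of length t is sped up by s."""
    return t * (1.0 - p) + t * (p / s)


def serial_share(p: float, s: float) -> float:
    """Fraction of the total runtime spent in the sequential part."""
    return (1.0 - p) / execution_time(1.0, p, s)


def speedup(p: float, s: float) -> float:
    """Speedup relative to running the whole task on one node."""
    return 1.0 / execution_time(1.0, p, s)


if __name__ == "__main__":
    P = 0.75  # 3/4 of the task can be parallelized, as in the example
    for nodes in (1, 2, 4, 8, 16, 1024):
        print(
            f"{nodes:>5} nodes: speedup {speedup(P, nodes):.2f}x, "
            f"sequential share {serial_share(P, nodes):.0%}"
        )
```

With P = 0.75 the speedup creeps toward 4x no matter how many nodes are added, while the sequential share rises from 25% on one node to about 73% on eight.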

Still, to achieve scalability and reliability, modern workloads (including storage, databases, big data, artificial intelligence, and machine learning) are designed to run as distributed applications.

Smart networks and domain-specific processors come to the rescue

Amdahl's law sets a practical limit on the benefits of parallelization, but unlike the limits that the laws of physics impose on semiconductors, which are what is slowing Moore's law, it is not a fundamental one. Further acceleration is possible by redefining the overall task so that previously serialized operations become parallelizable.
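
Why redefining the task matters follows from Amdahl's law itself: as S grows without bound, the speedup approaches 1/(1 - P), so the only way to raise that ceiling is to shrink the serialized fraction. The sketch below compares a few hypothetical values of P; they are not taken from any real workload.

```python
# Upper bound on speedup from Amdahl's law: as S -> infinity,
# T(1 - P) + T(P/S) -> T(1 - P), so the speedup tends to 1 / (1 - P).


def max_speedup(p: float) -> float:
    """Asymptotic speedup limit when a fraction p of the task is parallelizable."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    return 1.0 / (1.0 - p)


def speedup(p: float, s: float) -> float:
    """Speedup with a finite acceleration factor s for the parallel part."""
    return 1.0 / ((1.0 - p) + p / s)


if __name__ == "__main__":
    # Hypothetical fractions: making more of the task parallelizable raises the ceiling.
    for p in (0.75, 0.95, 0.99):
        print(f"P = {p:.2f}: 8 nodes -> {speedup(p, 8):.2f}x, ceiling {max_speedup(p):.0f}x")
```

Moving P from 0.75 to 0.95 raises the ceiling from 4x to 20x, which is a far larger gain than adding nodes to a task whose serial part stays fixed.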

This requires a deep understanding of distributed applications and a complete rethinking of complex algorithms and data flows. When the workload is examined in depth and holistically, there are huge opportunities for innovation and acceleration across the compute, storage, and network dimensions of the system. For example, operations that were previously performed sequentially on the CPU can be parallelized on domain-specific processors such as GPUs, which are very well suited to the parallel execution of graphics and machine learning tasks.

In addition, many serialized tasks involve periodic data exchange between nodes at a synchronization point, using collective operations so that parallel computation can continue. These serialized tasks can readily be accelerated, but doing so requires an intelligent network that processes data as it moves, which heralds the emergence of a new class of domain-specific processors called IPUs, or I/O processors. A good example of an IPU is the Mellanox BlueField-2 SoC (System-on-a-Chip), which the company announced at the VMworld 2019 conference.
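
To make "collective operation" concrete, here is a minimal sketch of an allreduce (every node contributes a value and every node receives the global sum) using the textbook recursive-doubling algorithm, simulated in a single process. It finishes in log2(N) exchange rounds, whereas a single root node that receives and adds contributions one at a time needs N - 1 steps just to gather them. This is an illustration of the general technique, not how BlueField-2 or any particular product implements it, and the sketch assumes a power-of-two node count.

```python
from typing import List, Tuple


def allreduce_sum(values: List[float]) -> Tuple[List[float], int]:
    """Simulate a recursive-doubling allreduce (sum) across len(values) nodes.

    values[i] is node i's local contribution at the synchronization point.
    Returns every node's final buffer and the number of exchange rounds.
    """
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two number of nodes")
    buf = list(values)
    rounds = 0
    distance = 1
    while distance < n:
        # In each round node i swaps partial sums with partner i XOR distance,
        # and both add what they received to what they already had.
        buf = [buf[i] + buf[i ^ distance] for i in range(n)]
        distance *= 2
        rounds += 1
    return buf, rounds


if __name__ == "__main__":
    result, rounds = allreduce_sum([1, 2, 3, 4, 5, 6, 7, 8])
    print(result, rounds)  # every node holds 36 after 3 rounds
```

Combining the partial sums inside the network as the data flows through, instead of on the hosts, is the kind of in-flight processing the paragraph above attributes to smart networks; this sketch only models the algorithm on one machine.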

As Moore's law becomes less relevant, researchers are looking for ways to speed up things that have never been accelerated before. New approaches will appear not only at the chip and server level, but also at the data center level, including smart networks. This is why the innovations of the next decade will come from those who understand the problems to be solved holistically, across computing, storage, networking, and software.

Ron Amadeo

30.09.2019