OAM format for OCP and Intel Nervana NNP L-1000 platforms

Facebook does not just buy hardware. It also develops an open data center standard, the Open Compute Project (OCP), aimed at high density and efficient heat dissipation at the scale of thousands of servers. Last week, Facebook introduced a new form factor, the Open Accelerator Module (OAM), designed to house the ASIC processors that are expected to eventually replace GPUs in machine learning. Today, such processors are being developed not only by Nvidia, but also by Intel, Google and many other companies. AI processors are widely seen as the future, but the main obstacle to deploying them is their enormous heat output, for which modern data centers are simply not ready.

Facebook OCP OAM Module

The Emerald Pool family server systems support up to 8 OAM modules. This is how each module looks in the schematic diagram:

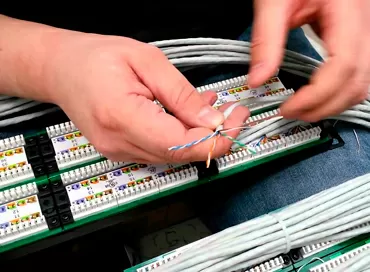

This is what the OAM module looks like with the cooling system installed:

You have to admit, it looks a bit like Nvidia's SXM2 modules. Different topologies can be used when scaling OAMs.
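To make the topology question concrete, here is a minimal Python sketch that builds two generic interconnect graphs for 8 modules and checks how many links each module would need. The two topologies (fully connected and ring) are chosen purely for illustration and are not taken from the OAM material; the limit of 7 inter-module links comes from the key features listed for the module, and all function names are hypothetical.

```python
from itertools import combinations


def fully_connected(n_modules: int) -> set:
    """All-to-all topology: one direct link between every pair of modules."""
    return set(combinations(range(n_modules), 2))


def ring(n_modules: int) -> set:
    """Ring topology: each module links only to its two neighbours."""
    return {tuple(sorted((i, (i + 1) % n_modules))) for i in range(n_modules)}


def links_per_module(edges: set, n_modules: int) -> list:
    """Count how many links terminate on each module."""
    degree = [0] * n_modules
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return degree


# Assumption: the per-module limit of 7 interconnect links from the feature list.
MAX_INTERCONNECT_LINKS = 7
N_MODULES = 8

for name, topo in (("fully connected", fully_connected(N_MODULES)),
                   ("ring", ring(N_MODULES))):
    worst = max(links_per_module(topo, N_MODULES))
    fits = worst <= MAX_INTERCONNECT_LINKS
    print(f"{name}: {len(topo)} links total, {worst} per module, fits={fits}")
```

With 8 modules, an all-to-all layout needs exactly 7 links per module, which is why a 7-link budget is enough to connect every accelerator directly to every other one in a single system.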

Key features of OAM modules:

- Supports 12V and 48V power supply

- Up to 350W (12V) and up to 700W (48V) TDP

- Dimensions 102mm x 165mm

- Support for one or more ASICs per module

- Up to 8 PCI-E x16 connections

- Supports one or two high-speed x16 links to the host

- Up to 7 high-speed x16 interconnect links between modules

- Up to 8 accelerator modules in one system

- Compatible with standard 19" racks
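The two power options in the list are easier to compare as supply current. The sketch below applies I = P / V to the listed TDP limits. It assumes the module draws its full TDP from a single rail and ignores conversion losses; the function and variable names are made up for the example.

```python
def rail_current_amps(tdp_watts: float, rail_volts: float) -> float:
    """Current drawn from the supply rail at full TDP (I = P / V)."""
    return tdp_watts / rail_volts


# Voltage -> maximum TDP, as given in the feature list above.
OAM_POWER_OPTIONS = {12.0: 350.0, 48.0: 700.0}

for volts, tdp in OAM_POWER_OPTIONS.items():
    amps = rail_current_amps(tdp, volts)
    print(f"{volts:.0f} V rail, {tdp:.0f} W TDP: about {amps:.1f} A")
```

Under these assumptions, the 48 V option delivers twice the power at roughly half the current (about 14.6 A versus about 29.2 A), which helps explain why the higher TDP ceiling is tied to the higher voltage.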

The OCP Accelerator Module project has received the support of leading IT companies, including Intel, AMD, Nvidia, Baidu, Microsoft, Google, Huawei and others.

Facebook Zion Accelerator Platform

Facebook also introduced a platform for OAM accelerators that combines up to 8 CPUs and 8 OAM modules, connected by high-speed links.

Each 2-socket server pod plugs into a shared cage like server blades, and up to 8 OAM pods can be used on the same platform.

Note that each processor has access to a network connection and an accelerator.

Each node of the Zion server platform is a 2-processor Xeon LGA3647 machine. Pay attention to the size of the heatsinks on the processors. Each CPU has its own OCP 3.0 network module.
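The layout described so far can be written down as a small data structure. This is only a sketch: the figure of 4 nodes is derived from 8 CPUs at 2 per node, the one-to-one pairing of CPU index to accelerator index is an assumption made for illustration, and the device labels such as `ocp-nic-0` and `oam-0` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuSlot:
    node: int          # which 2-socket node the CPU sits in
    socket: int        # socket index inside that node
    nic: str           # the CPU's own OCP 3.0 network module (label is made up)
    accelerator: str   # the OAM module paired with this CPU (assumed 1:1)


def build_zion(n_nodes: int = 4, sockets_per_node: int = 2) -> list:
    """Enumerate every CPU with its network module and accelerator."""
    slots = []
    for node in range(n_nodes):
        for socket in range(sockets_per_node):
            idx = node * sockets_per_node + socket
            slots.append(CpuSlot(node, socket, f"ocp-nic-{idx}", f"oam-{idx}"))
    return slots


for slot in build_zion():
    print(slot)
```

The default arguments give 8 CPUs, 8 network modules and 8 accelerators, matching the counts quoted for the platform.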

The accelerator platform is essentially a board with landing sites for 8 OAM modules. The gaps between the heatsinks are minimal, and no chips or batteries are visible on the motherboard.

Each OAM has two connectors that carry data and power. These are unified connectors that allow accelerators from different manufacturers to be used. Again, the idea behind this connection is very similar to Nvidia's SXM2.

Each module weighs at least a kilogram, and installation does not require any special tool, unlike Nvidia accelerators.

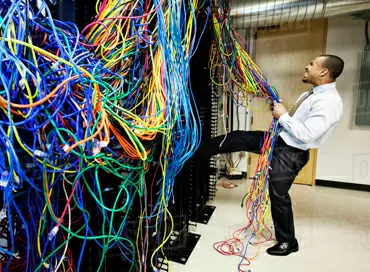

Huge PCI Express cables are used to connect the processors and accelerators. Yes, the developers came up with nothing better than routing PCI-E over external cables.

Each of the PCI Express slots provides a connection over 8 PCI-E x16 buses (128 PCI-E lanes in total). That is more than a 2-processor Xeon Scalable server has at its disposal, so the interconnect still has headroom.
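The lane arithmetic can be checked in a few lines. Two figures in the sketch below are assumptions that do not come from the article: 48 PCI-E lanes per LGA3647 Xeon Scalable CPU, and PCI-E 3.0 signalling (8 GT/s per lane with 128b/130b encoding) for the bandwidth estimate. All names are illustrative.

```python
LANES_PER_LINK = 16


def total_lanes(n_links: int, lanes_per_link: int = LANES_PER_LINK) -> int:
    """Total lane count for a number of equal-width links."""
    return n_links * lanes_per_link


def gen3_bandwidth_gbytes(lanes: int) -> float:
    """One-direction bandwidth in GB/s, ASSUMING PCI-E 3.0 signalling."""
    gigatransfers_per_s = 8.0   # per lane
    encoding = 128 / 130        # 128b/130b line coding
    return lanes * gigatransfers_per_s * encoding / 8  # bits -> bytes


cabled_lanes = total_lanes(8)   # 8 x16 buses, as stated in the text

# Assumption: 48 lanes per CPU, two CPUs per server.
XEON_LANES_PER_CPU = 48
host_lanes = 2 * XEON_LANES_PER_CPU

print(f"cabled: {cabled_lanes} lanes, dual-socket host: {host_lanes} lanes")
print(f"headroom: {cabled_lanes - host_lanes} lanes")
print(f"x16 link (PCI-E 3.0 assumed): {gen3_bandwidth_gbytes(16):.2f} GB/s per direction")
```

Under these assumptions the cabling offers 128 lanes against 96 on the host side, which is the margin the text refers to.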

Intel Nervana NNP L-1000

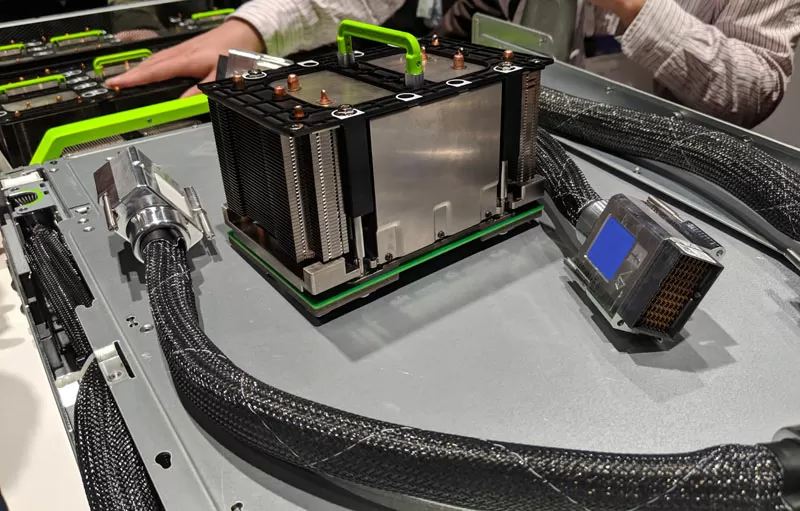

Intel is using the Open Compute Project Accelerator Module (OAM) form factor for its new NNP platform, which is clearly aimed at the GPU market, where Nvidia Tesla leads. At the OCP Summit 2019, we got an introduction to the Intel Nervana NNP L-1000 module, as well as the accelerator system topology. For Nvidia, OAM could be a clear threat to its DGX-1 and DGX-2(H) servers.

The Intel Nervana chip uses HBM2 memory, as the photo above clearly shows. Capacity is expected to be 32 GB, like the Nvidia Tesla V100.

The Intel Nervana example shows what the "processor socket" for OAM modules looks like.

The Spring Crest processor family lets you scale a compute node up to 32 processors (that is, OAM modules) in a single system. Naturally, that many modules will not fit in one chassis, but PCI Express cables make it possible to spread one compute node across several physical chassis.
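As a rough illustration of how such a node spreads out, the sketch below assigns 32 modules to chassis that hold 8 modules each, the per-system limit quoted earlier for OAM. The actual physical packaging of Spring Crest systems is not described here, so treat the 8-per-chassis figure and the helper names as assumptions.

```python
import math


def chassis_needed(n_modules: int, modules_per_chassis: int = 8) -> int:
    """Smallest number of chassis that can hold all modules."""
    return math.ceil(n_modules / modules_per_chassis)


def place_modules(n_modules: int, modules_per_chassis: int = 8) -> list:
    """Assign each module a (chassis, slot) position, filling chassis in order."""
    return [divmod(i, modules_per_chassis) for i in range(n_modules)]


print(chassis_needed(32))        # chassis count for a 32-module node
print(place_modules(32)[-1])     # position of the last module
```

With 8 modules per chassis, a 32-module node occupies 4 chassis, and every link between modules in different chassis has to leave the enclosure over a cable.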

Similar systems rated at up to 13.9 kW will go on sale before the end of 2019. Keep in mind that these are machine learning acceleration modules, which still need host servers built on conventional x86 processors.

Conclusion

In practice, the OAM architecture allows up to 32 accelerators to be connected to a single host without expensive NVSwitches or InfiniBand interconnects. The developers' main goal was clearly to undermine Nvidia's leading position in the market for machine learning and artificial intelligence systems. No doubt Nvidia will have an answer, especially given the news of its purchase of Mellanox.

Ron Amadeo

19/03.2019