NVMe-oF: how does the fastest data protocol work?

In recent years, the performance of data storage technologies has grown steadily, until it ran into physical limits imposed by outdated data exchange protocols in data centers. Despite 100GbE and newer networking technologies such as InfiniBand, these legacy protocols continue to hold back flash drives, because they keep storage isolated inside individual devices. In this article, we will talk about the Non-Volatile Memory Express (NVMe) specification. Next, we will focus on NVMe over Fabrics (NVMe-oF) and NVMe over RDMA over Converged Ethernet (NVMe over RoCE), newer protocol specifications designed to address the bottlenecks of modern storage networks.

Low latency and high bandwidth have always mattered for clouds and data centers, so NVMe over Fabrics comes up often. The specification appeared less than 10 years ago, and because it is relatively new, people often misunderstand what it is and what benefits it brings to the business. NVMe-oF keeps evolving and gaining popularity in the IT industry, and many vendors have started releasing NVMe-oF-enabled solutions for the enterprise sector. To keep pace with data center technology today, it is important to understand correctly what NVMe-oF is, what the specification can do, and what performance it can offer, as well as how it can be adopted and combined with other new solutions.

NVMe and NVMe-oF

All-flash arrays (AFAs), built entirely on flash memory, appeared in response to the demand for higher performance in data centers. Able to deliver 1 million IOPS without much effort, they are significantly faster than earlier storage technologies on the market. However, many of these arrays still used older SATA SSD technology, based on the Advanced Host Controller Interface (AHCI) command protocol, which retains compatibility with IDE. AHCI was originally designed for hard drives, not for much faster solid-state drives. This legacy stack became a bottleneck for modern solid-state drives and array controllers, because the SATA III bus with AHCI cannot exchange data faster than about 600 MB/s.

Typical throughput

| Protocol | Theoretical throughput | Practical throughput |
|---|---|---|
| SCSI | 2.56 Gbps | 320 MB/s |
| SATA III | 6 Gbps | 600 MB/s |
| InfiniBand | 10 Gbps | 0.98 GB/s |
| 10G Fibre Channel | 10.52 Gbps | 1.195 GB/s |
| PCIe 4.0 | 16.0 GT/s per lane | 31.5 GB/s (x16 slot) |
| PCIe 5.0 | 32.0 GT/s per lane | 63 GB/s (x16 slot) |
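The practical figures for SATA III and PCIe in the table follow from the raw line rate minus line-encoding overhead. The sketch below is a back-of-the-envelope calculation, assuming 8b/10b encoding for SATA III and 128b/130b encoding for PCIe 4.0 and 5.0, and ignoring protocol overheads such as packet headers; the helper name `effective_gbytes_per_s` is hypothetical. The same simple formula does not exactly reproduce the InfiniBand and Fibre Channel rows, so they are left out.

```python
def effective_gbytes_per_s(line_rate_gt_s: float, payload_bits: int,
                           coded_bits: int, lanes: int = 1) -> float:
    """Usable bandwidth in GB/s after line-encoding overhead.

    line_rate_gt_s: raw transfer rate per lane (GT/s, one bit per transfer)
    payload_bits / coded_bits: encoding efficiency, e.g. 8/10 or 128/130
    """
    usable_gbit_s = line_rate_gt_s * payload_bits / coded_bits * lanes
    return usable_gbit_s / 8  # 8 bits per byte


links = {
    "SATA III (8b/10b, 1 lane)": effective_gbytes_per_s(6.0, 8, 10),
    "PCIe 4.0 (128b/130b, x16)": effective_gbytes_per_s(16.0, 128, 130, 16),
    "PCIe 5.0 (128b/130b, x16)": effective_gbytes_per_s(32.0, 128, 130, 16),
}

for name, gb_s in links.items():
    print(f"{name}: {gb_s:.2f} GB/s")
```

This prints 0.60, 31.51, and 63.02 GB/s, in line with the 600 MB/s, 31.5 GB/s, and 63 GB/s in the table.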

To fully realize the potential of solid-state drives, we need technology that can exchange data at a higher speed. NVMe is the specification that lets solid-state drives realize the potential of flash memory. The first version of the specification was published in 2011, and its main goals were to speed up applications (reduce response time) and to introduce new and improved features. NVMe SSDs come in many form factors, the best known being AIC (add-in card), U.2, U.3, and M.2. An NVMe solid-state drive connects directly to a computer or server via the high-speed Peripheral Component Interconnect Express (PCIe) bus. NVMe reduces CPU overhead and the time between operations, increasing both I/O operations per second (IOPS) and throughput. For example, an NVMe SSD can write at over 3,000 MB/s, about 5 times faster than a SATA SSD and about 30 times faster than a hard disk drive.

NVMe supports many I/O operations executing simultaneously, which means that large tasks can be broken down into smaller ones that are processed independently of each other. This is similar to a multi-core processor handling multiple command streams at once: each core works independently of the others and solves its own tasks.
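At the application level, the same idea can be sketched as follows: one large read is split into fixed-size chunks that are issued as independent, concurrent requests, so a device with many queues can service them in parallel. This is a generic Python illustration, not an NVMe API; `parallel_read`, the chunk size, and the worker count are assumptions, and `os.pread` is only available on Unix-like systems.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def parallel_read(path: str, chunk_size: int = 1 << 20, workers: int = 8) -> bytes:
    """Read a file as independent chunk requests issued concurrently."""
    size = os.path.getsize(path)
    fd = os.open(path, os.O_RDONLY)
    try:
        offsets = range(0, size, chunk_size)

        def read_chunk(offset: int) -> bytes:
            # Each request carries its own offset, so requests do not depend
            # on each other and may complete in any order.
            return os.pread(fd, min(chunk_size, size - offset), offset)

        with ThreadPoolExecutor(max_workers=workers) as pool:
            # map() yields results in submission order, so reassembly is a join.
            return b"".join(pool.map(read_chunk, offsets))
    finally:
        os.close(fd)


if __name__ == "__main__":
    data = os.urandom(5 * 1024 * 1024 + 123)
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.write(data)
    try:
        assert parallel_read(f.name) == data
    finally:
        os.remove(f.name)
```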

Another advantage of NVMe over AHCI is queue depth (QD). AHCI and SATA support a single queue of 32 commands, while NVMe supports up to about 65,000 queues, each holding up to about 65,000 commands. This not only reduces latency but also increases the performance of servers that process a large number of requests in parallel.

Queue depth

| Protocol | Number of queues | Commands per queue |
|---|---|---|
| SAS | 1 | 254 |
| SATA (AHCI) | 1 | 32 |
| NVMe | 65,000 | 65,000 |
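NVMe commands travel through paired submission and completion queues, each of which is a circular buffer with a head and a tail index. The Python sketch below models a single submission queue to show why depth matters: the host keeps adding commands until the ring is full, without waiting for earlier ones to finish. It is a simplified model under stated assumptions: the `SubmissionQueue` class is hypothetical, the controller side is reduced to a `fetch` method, one slot is left empty to tell a full ring from an empty one (as circular NVMe queues do), and the in-flight totals simply multiply the figures from the table.

```python
from collections import namedtuple

Command = namedtuple("Command", "cid opcode")


class SubmissionQueue:
    """Simplified circular submission queue with head/tail indices."""

    def __init__(self, size: int):
        self.slots = [None] * size
        self.head = 0  # next entry the controller will fetch
        self.tail = 0  # next free slot the host will write

    def is_full(self) -> bool:
        # Full when advancing the tail would reach the head,
        # so a ring of `size` slots holds at most size - 1 commands.
        return (self.tail + 1) % len(self.slots) == self.head

    def is_empty(self) -> bool:
        return self.head == self.tail

    def submit(self, cmd: Command) -> bool:
        """Host side: store a command and advance the tail.

        In real NVMe the host then writes the new tail to a doorbell register.
        """
        if self.is_full():
            return False
        self.slots[self.tail] = cmd
        self.tail = (self.tail + 1) % len(self.slots)
        return True

    def fetch(self) -> Command:
        """Controller side: consume the command at the head."""
        if self.is_empty():
            raise IndexError("submission queue is empty")
        cmd = self.slots[self.head]
        self.head = (self.head + 1) % len(self.slots)
        return cmd


# Commands a host can keep in flight, using the figures from the table.
limits = {"SATA (AHCI)": (1, 32), "SAS": (1, 254), "NVMe": (65_000, 65_000)}
for proto, (queues, depth) in limits.items():
    print(f"{proto}: {queues * depth:,} commands in flight")

sq = SubmissionQueue(size=4)
accepted = [sq.submit(Command(cid=i, opcode=0x02)) for i in range(5)]
print(accepted)  # [True, True, True, False, False]: 3 fit, then the ring is full
```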

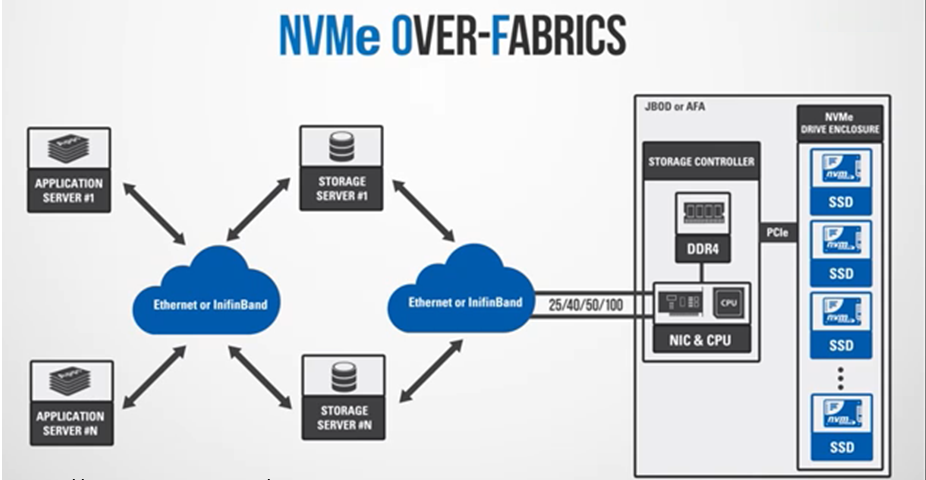

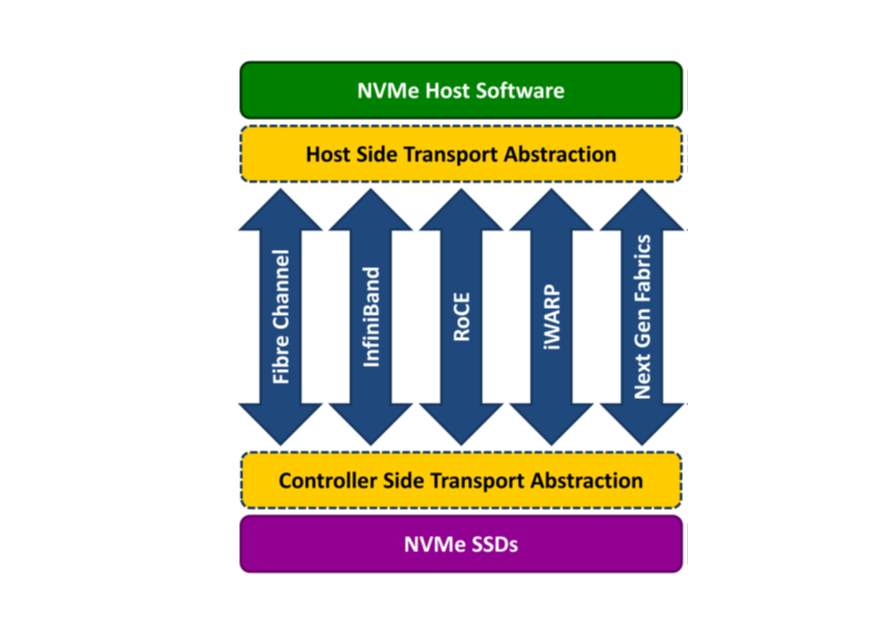

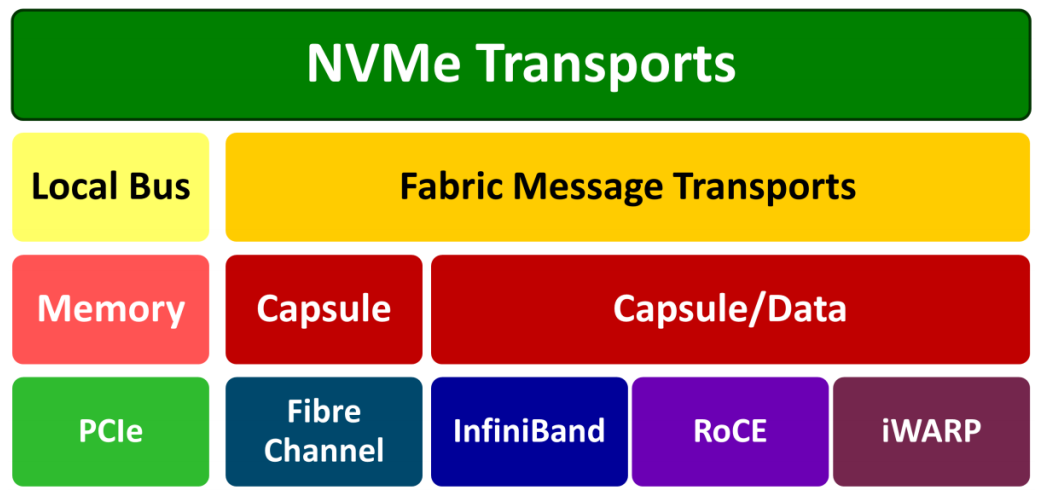

In 2016, a new specification, NVMe-oF, was released. It brings the speed of the NVMe protocol to arrays connected over a network fabric, using Ethernet, Fibre Channel, RoCE, or InfiniBand as the transport. In other words, NVMe-oF does not use the PCIe bus as its transport, as local NVMe does, but an alternative transport protocol. A fabric is message-based: endpoints send and receive messages and do not share memory. To carry NVMe traffic across the fabric, NVMe commands and responses are wrapped in so-called capsules, which carry commands or responses and can optionally include data.
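To make the capsule idea concrete, the sketch below packs a 64-byte NVMe submission queue entry (SQE) for a Read command (opcode 0x02) with Python's `struct` module and appends optional in-capsule data. The field offsets follow the standard NVMe command layout (command dword 0, namespace ID, metadata and data pointers, command dwords 10 to 15); the data pointer is left zeroed, and `make_capsule` is a hypothetical helper: real transports such as RDMA, FC, or TCP add their own headers around the capsule.

```python
import struct

# Little-endian layout of a 64-byte NVMe submission queue entry (SQE):
# opcode(1) flags(1) command ID(2) namespace ID(4) reserved CDW2-3(8)
# metadata pointer(8) data pointer(16) CDW10-11 = starting LBA(8)
# CDW12(4) CDW13(4) CDW14(4) CDW15(4)
SQE_FORMAT = "<BBHI8xQ16xQIIII"
NVM_READ = 0x02  # NVM command set opcode for Read


def build_read_sqe(cid: int, nsid: int, slba: int, nblocks: int) -> bytes:
    """Build a Read SQE; the block count is stored 0-based in CDW12 bits 15:0."""
    if not 1 <= nblocks <= 0x10000:
        raise ValueError("a Read transfers 1 to 65536 logical blocks")
    cdw12 = nblocks - 1
    return struct.pack(SQE_FORMAT, NVM_READ, 0, cid, nsid,
                       0,               # metadata pointer: unused in this sketch
                       slba,            # CDW10-11: starting LBA
                       cdw12, 0, 0, 0)  # CDW12-15


def make_capsule(sqe: bytes, in_capsule_data: bytes = b"") -> bytes:
    """Hypothetical capsule: the SQE followed by optional in-capsule data."""
    if len(sqe) != struct.calcsize(SQE_FORMAT):
        raise ValueError("an SQE is exactly 64 bytes")
    return sqe + in_capsule_data


capsule = make_capsule(build_read_sqe(cid=7, nsid=1, slba=2048, nblocks=8))
print(len(capsule), capsule[0])  # prints "64 2": 64-byte SQE, Read opcode
```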

In NVMe-oF, storage targets are exposed as namespaces, the equivalent of a LUN in SCSI. NVMe-oF lets servers communicate with these drives over much longer distances while keeping latency in the microsecond range. In short, we get faster data exchange between systems and flash drives without any appreciable increase in fabric latency. The short response time is partly due to the NVMe queue depth mentioned above: NVMe-oF supports queues of the same depth as NVMe, about 65,000 commands. Thanks to this, NVMe-oF is well suited to building highly parallel architectures between servers and storage devices, in which each device has its own queue.
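One way to see why deep queues matter is Little's law, which relates the number of commands in flight (L), throughput (λ, in IOPS), and average latency (W): L = λ·W. The numbers below are illustrative assumptions, not measurements: sustaining 1,000,000 IOPS at 100 µs per command needs about 100 commands in flight, more than AHCI's single 32-command queue allows. The function names are hypothetical.

```python
def required_queue_depth(iops: float, latency_s: float) -> float:
    """Little's law: commands in flight L = throughput (IOPS) x latency W."""
    return iops * latency_s


def max_iops(queue_depth: int, latency_s: float) -> float:
    """Rearranged: throughput ceiling for a fixed number of commands in flight."""
    return queue_depth / latency_s


latency = 100e-6  # assumed 100 microseconds per command
print(required_queue_depth(1_000_000, latency))  # about 100 commands in flight
print(max_iops(32, latency))  # about 320,000 IOPS with one 32-deep AHCI queue
```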

Implementing NVMe over Fabrics

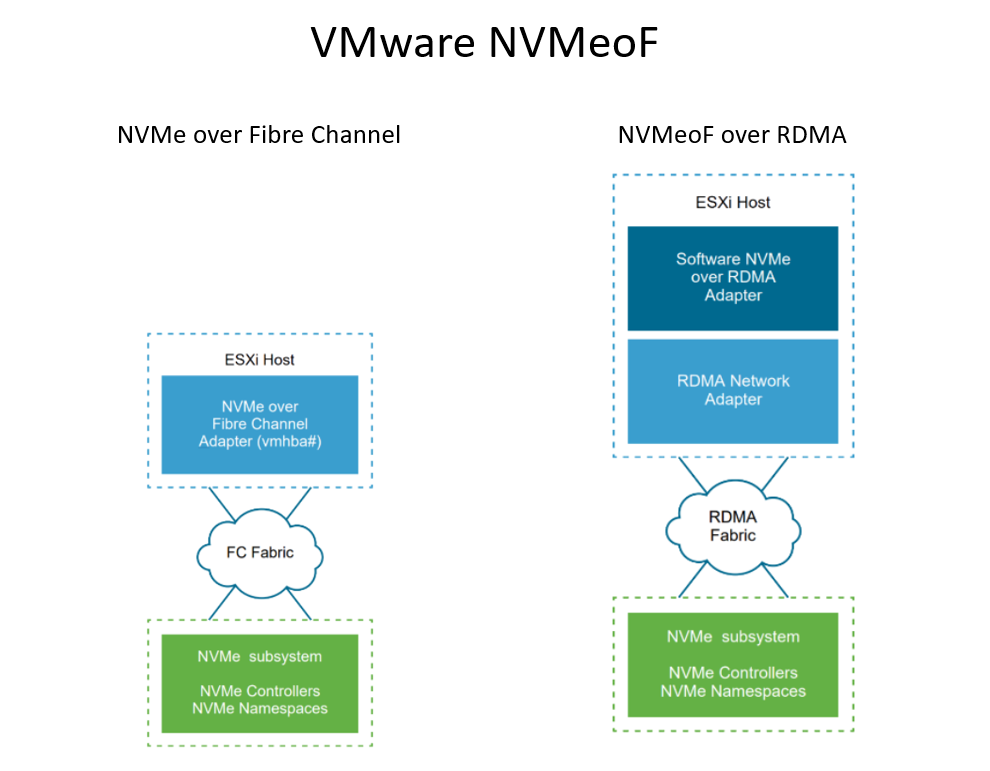

There are three ways to implement NVMe over a fabric: with RDMA, Fibre Channel, or TCP.

NVMe-oF over RDMA

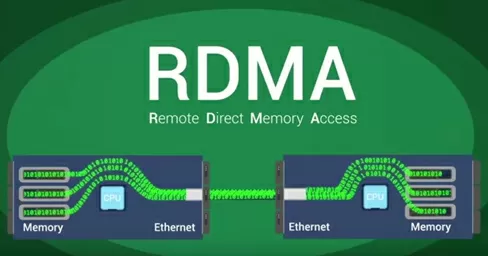

This variant is based on Remote Direct Memory Access (RDMA), which lets data move directly between the memory of computers and storage devices over a network. RDMA exchanges data between the RAM of two computers without involving the processors, caches, or operating systems of either machine. Because RDMA bypasses the operating system on the data path, it is usually the fastest way to transfer data over a network and places the least load on the system.

RDMA is usually implemented following the Virtual Interface Architecture model and runs over InfiniBand, Omni-Path, Converged Ethernet (RDMA over Converged Ethernet, RoCE), or iWARP, which carries RDMA over TCP in IP networks. The most popular today are RoCE, InfiniBand, and iWARP.

NVMe over Fibre Channel

The NVMe over Fibre Channel (FC) variant is often referred to as FC-NVMe, NVMe over FC, or sometimes NVMe/FC. Fibre Channel is a reliable protocol for transferring data between arrays and servers and is used in most enterprise storage networks. Here, NVMe commands are encapsulated inside FC frames. FC-NVMe is built on standard FC rules and the standard FC protocol, and it provides access to shared NVMe flash storage. By contrast, when SCSI commands are encapsulated inside FC frames, interpreting them and translating them into NVMe commands consumes resources and hurts performance.

NVMe over TCP/IP

This is one of the newest ways to implement NVMe-oF: data is carried by the TCP protocol over IP (Ethernet) networks, inside TCP segments on physical Ethernet links. Compared with RDMA and Fibre Channel, TCP is a cheaper and more flexible option. And although RoCE is also designed to carry data over Ethernet, NVMe over TCP/IP behaves more like FC-NVMe, since both use message semantics for I/O.
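Because TCP delivers a byte stream rather than discrete messages, NVMe/TCP frames each capsule as a PDU whose common header records its own length. The Python sketch below assumes the 8-byte common header used by NVMe/TCP (PDU type, flags, header length, data offset, and a little-endian total length) and builds a command-capsule PDU (type 0x04) with no digests and no in-capsule data; the SQE content is a placeholder and the function names are hypothetical. The reader shows the essential point: the receiver uses the length field to cut PDUs out of the stream, however TCP happened to split the bytes.

```python
import struct

CH_FORMAT = "<BBBBI"  # PDU type, flags, header length, data offset, total length
PDU_CAPSULE_CMD = 0x04


def capsule_cmd_pdu(sqe: bytes) -> bytes:
    """Frame a 64-byte SQE as a command-capsule PDU (no digests, no data)."""
    if len(sqe) != 64:
        raise ValueError("an SQE is 64 bytes")
    hlen = struct.calcsize(CH_FORMAT) + len(sqe)  # 72-byte PDU header
    plen = hlen  # total PDU length: header only, nothing follows it
    return struct.pack(CH_FORMAT, PDU_CAPSULE_CMD, 0, hlen, 0, plen) + sqe


def split_pdus(stream: bytes) -> list[bytes]:
    """Cut complete PDUs out of a received byte stream using the length field."""
    pdus, pos = [], 0
    while len(stream) - pos >= 8:
        plen = struct.unpack_from("<I", stream, pos + 4)[0]
        if plen < 8:
            raise ValueError("malformed PDU length")
        if len(stream) - pos < plen:
            break  # incomplete PDU: wait for more bytes from the socket
        pdus.append(stream[pos:pos + plen])
        pos += plen
    return pdus


sqe = bytes([0x02]) + bytes(63)  # placeholder Read SQE
stream = capsule_cmd_pdu(sqe) * 2 + capsule_cmd_pdu(sqe)[:10]
print([p[0] for p in split_pdus(stream)])  # two complete command PDUs: [4, 4]
```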

Whether you use NVMe-oF over RDMA, Fibre Channel, or TCP, you get an end-to-end NVMe solution. Every implementation provides the high performance and low latency inherent in NVMe.

NVMe over RDMA over Converged Ethernet (RoCE)

Among the RDMA-based protocols, RoCE stands out. We already know what RDMA and NVMe-oF are; the remaining piece is Converged Ethernet (CE), which provides the Ethernet that RDMA runs over. CE is an enhanced version of Ethernet, also known as Data Center Bridging (DCB) or Data Center Ethernet. RoCE encapsulates an InfiniBand transport packet in Ethernet and relies on a link-level flow control mechanism to avoid packet loss even when the network is congested. RoCE delivers lower latency than its predecessor, iWARP.

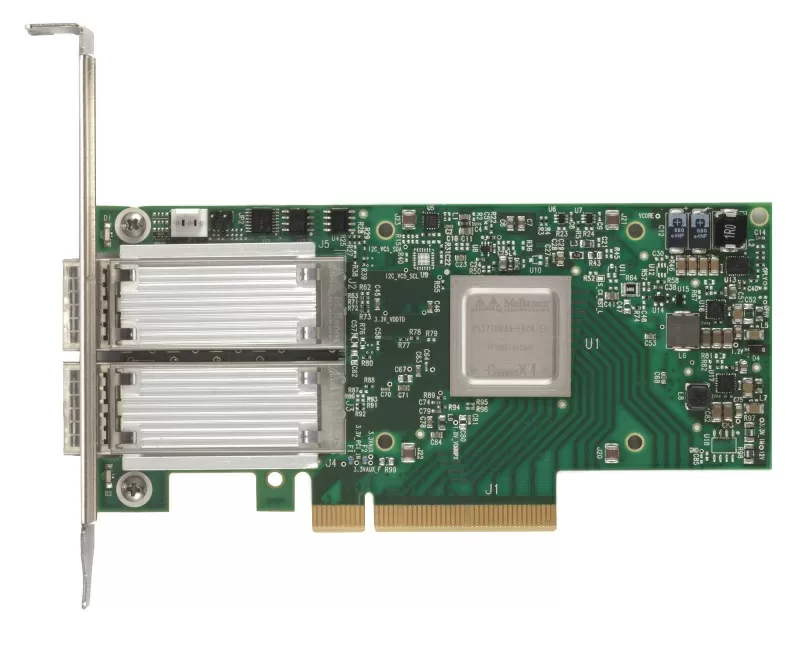

There are two versions of RoCE: RoCE v1 and RoCE v2. RoCE v1 is an Ethernet link-layer (Layer 2) protocol. It can connect two hosts only within the same Ethernet broadcast domain, so it cannot be routed between subnets. RoCE v2 runs on top of UDP/IPv4 or UDP/IPv6, which places it at Layer 3 (the network layer), so its packets can be routed. Software support for RoCE v2 is still limited, but the number of supporting products is growing. For example, it was added to Mellanox OFED starting with version 2.3 and to Linux kernel 4.5.
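The routing difference between the two versions comes down to how the InfiniBand transport packet is encapsulated. The Python sketch below describes both header stacks as data and derives routability from whether an IP header is present; it assumes the well-known identifiers EtherType 0x8915 for RoCE v1 and UDP destination port 4791 for RoCE v2, and the `Encapsulation` class is a hypothetical illustration, not a driver API.

```python
from dataclasses import dataclass

ROCE_V1_ETHERTYPE = 0x8915
ROCE_V2_UDP_PORT = 4791


@dataclass(frozen=True)
class Encapsulation:
    name: str
    headers: tuple  # outermost header first

    def routable(self) -> bool:
        # Routers forward on the IP header; without one, traffic cannot
        # leave the Ethernet broadcast domain.
        return any(h.startswith("IPv") for h in self.headers)


ROCE_V1 = Encapsulation(
    "RoCE v1",
    (f"Ethernet (EtherType 0x{ROCE_V1_ETHERTYPE:04X})", "IB GRH", "IB BTH",
     "payload", "ICRC"),
)
ROCE_V2 = Encapsulation(
    "RoCE v2",
    ("Ethernet", "IPv4 or IPv6", f"UDP (dst port {ROCE_V2_UDP_PORT})",
     "IB BTH", "payload", "ICRC"),
)

for enc in (ROCE_V1, ROCE_V2):
    print(f"{enc.name}: {' | '.join(enc.headers)} -> routable={enc.routable()}")
```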

NVMe over RoCE introduces a new type of storage network. It offers the performance expected of enterprise data services together with the broad range of hardware and software typical of storage networks. Although RoCE takes advantage of Converged Ethernet, it can also work in non-converged networks. To use NVMe over RoCE in a fabric, the network card, the switch, and the flash array must all support Converged Ethernet. In addition, the network card and the flash array must support RoCE (such network cards are called RDMA-capable NICs, or R-NICs). Servers with R-NICs and flash arrays with NVMe over RoCE support will connect to existing CE switches automatically.

NVMe-oF over RoCE support in VMware products

VMware has added support for NVMe-based shared storage. vSphere 7.0 introduced support for NVMe over Fibre Channel and NVMe over RDMA for external storage connections. ESXi hosts can use RDMA over Converged Ethernet v2 (RoCE v2). The ESXi host accesses NVMe storage over RDMA through an R-NIC adapter on the host and a software NVMe over RDMA storage adapter. Both adapters must be configured to discover NVMe storage.

NVMe over RDMA requirements:

- an NVMe array that supports the RDMA protocol (RoCE v2);

- a compatible ESXi host;

- Ethernet switches that support a lossless network;

- a network adapter that supports RoCE v2;

- a software NVMe over RDMA adapter;

- an NVMe controller.

When configuring NVMe-oF on an ESXi host, there are several recommendations to follow:

- do not use different protocols to access the same namespace;

- make sure that all active paths are presented to the host;

- use HPP (High-Performance Plugin) instead of NMP for NVMe targets;

- use dedicated links, VMkernel adapters, and RDMA adapters for your NVMe targets;

- use a dedicated Layer 3 VLAN or Layer 2 connectivity;

- observe the limits:

  - namespaces: 32;

  - paths: 128 (a maximum of 4 paths per namespace on a host).
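The rules and limits above are easy to check before rolling out a configuration. The sketch below validates a planned host layout in plain Python; the input format (a mapping from namespace to its list of paths, each tagged with a protocol), the path names, and the function name are hypothetical and unrelated to any VMware tool, and the limits are the ones listed above.

```python
MAX_NAMESPACES = 32
MAX_PATHS = 128
MAX_PATHS_PER_NAMESPACE = 4


def check_host_plan(plan: dict[str, list[tuple[str, str]]]) -> list[str]:
    """Return rule violations for {namespace: [(path_name, protocol), ...]}."""
    problems = []
    if len(plan) > MAX_NAMESPACES:
        problems.append(f"{len(plan)} namespaces exceed the limit of {MAX_NAMESPACES}")
    total_paths = sum(len(paths) for paths in plan.values())
    if total_paths > MAX_PATHS:
        problems.append(f"{total_paths} paths exceed the limit of {MAX_PATHS}")
    for ns, paths in plan.items():
        if len(paths) > MAX_PATHS_PER_NAMESPACE:
            problems.append(f"{ns}: {len(paths)} paths, maximum is {MAX_PATHS_PER_NAMESPACE}")
        protocols = {proto for _, proto in paths}
        if len(protocols) > 1:
            # Accessing one namespace over different protocols is discouraged.
            problems.append(f"{ns}: mixed protocols {sorted(protocols)}")
    return problems


plan = {
    "ns1": [("vmhba64:C0:T0:L1", "RDMA"), ("vmhba65:C0:T0:L1", "RDMA")],
    "ns2": [("vmhba64:C0:T1:L2", "RDMA"), ("vmhba3:C0:T1:L2", "FC")],
}
print(check_host_plan(plan))  # flags ns2 for mixing RDMA and FC
```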

Conclusions

More and more users store their data in the cloud, which drives demand for data center storage with faster data exchange. The NVMe specification offered a more modern way to connect flash drives, and together NVMe and NVMe-oF let us take full advantage of flash memory. Today, NVMe-oF and its variants are seen as the future of data storage. These drives and systems are considered the heart of the data center, because every millisecond counts in a fabric. NVMe reduces the number of memory-mapped I/O operations and adapts operating system device drivers for higher performance and lower latency.

NVMe is becoming increasingly popular thanks to its ability to work with many queues simultaneously at low latency and high throughput. Although NVMe is also used in personal computers, where it speeds up video processing and games, and in other solutions, its real advantages are most noticeable in the enterprise environment, in systems that use NVMe-oF. The information technology, artificial intelligence, and machine learning markets keep evolving, and demand for higher-performance technology is steadily increasing. Companies such as VMware and Mellanox now routinely release NVMe-oF products and solutions for enterprise customers. With modern, powerful parallel computing clusters, the faster we can process and access our data, the more valuable it becomes for our business.

Sergey Popsulin

23.08.2020