A guide to migrating from Intel Xeon to AMD EPYC: debunking myths, avoiding pitfalls

Consider a typical situation: your company needs to expand its data center or replace outdated equipment, and your supplier suggests a server based on an AMD EPYC (Rome) processor. Whether you have already made a decision or are only thinking about it, this article is for you.

A friend of mine, a stock trader, likes to repeat that the biggest money is made when the trend changes, and that a successful trader must be able not only to see the change (which is actually simple) but also to accept it and adapt their way of working to the new reality (which is actually difficult).

AMD fever in full swing

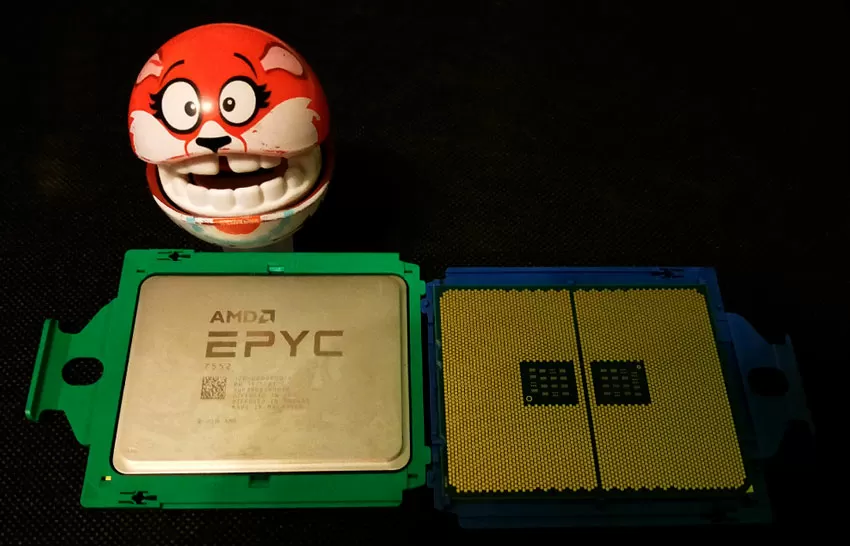

Today it is safe to say that, as the joke goes, the astrologers have declared 2019 the year of AMD. The company celebrates one success after another: Ryzen desktop processors have become sales leaders among enthusiasts; the chiplet layout makes a 1-socket server with 64 cores possible; and EPYC server processors based on the Rome core keep setting world performance records, most recently in the Cinebench rendering benchmark. More than a hundred such records have accumulated already (which, by the way, is a topic for a separate article). Here are some curious comparisons from AMD's marketing materials:

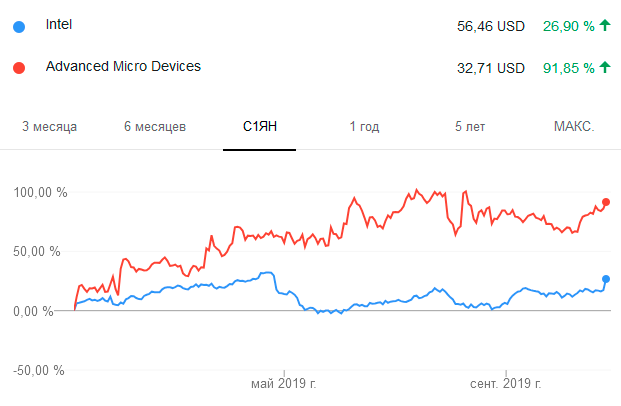

Even VMware confirms in its blog that 1-socket configurations have become powerful enough for certain tasks. AMD shares have doubled since the beginning of the year, and leafing through the headlines you feel as if you are in another reality: the news about AMD is all good, but what about Intel?

Yesterday's IT trendsetter is now forced to fend off security concerns, slash prices on its top-end processors and invest a crazy $3 billion in dumping and marketing to counter AMD. Intel shares are not growing; the market is gripped by AMD fever.

What does AMD offer?

First of all, AMD entered the market with a serious proposal: “a 1-socket server instead of a 2-socket one”. For an ordinary customer this is a promise of sorts: AMD processors have so many cores and cost so much less that, where you previously bought a 2-processor machine for 64 threads, today a 1-processor machine gives you 128 threads (64 physical cores with SMT) along with plenty of memory modules, drives and expansion cards. Even with the first generation of EPYC this meant tens of thousands of dollars saved on each server. Let’s take last year’s HPE ProLiant server configurations as an example.

Pricing comparison for a 32-core server

| Component/Server | AMD EPYC | Xeon Platinum |
|---|---|---|
| Platform | HPE ProLiant DL325 Gen10 4LFF | HPE ProLiant DL360 Gen10 4LFF |
| Processor | 1 x HPE DL325 Gen10 AMD EPYC 7551P (2.0 GHz/32-core/180W) FIO Processor Kit | 2 x HPE DL360 Gen10 Intel Xeon-Platinum 8153 (2.0GHz/16-core/125W) FIO Processor Kit |
| Memory | 16 x HPE 32GB (1x32GB) Dual Rank x4 DDR4-2666 CAS-19-19-19 Registered Smart Memory Kit | 16 x HPE 32GB (1x32GB) Dual Rank x4 DDR4-2666 CAS-19-19-19 Registered Smart Memory Kit |
| SSD | 4 x HPE 1.92TB SATA 6G Mixed Use LFF (3.5in) LPC 3yr Wty Digitally Signed Firmware SSD | 4 x HPE 1.92TB SATA 6G Mixed Use LFF (3.5in) LPC 3yr Wty Digitally Signed Firmware SSD |
| Power supply | 2 x HPE 500W Flex Slot Platinum Hot Plug Low Halogen Power Supply Kit | 2 x HPE 500W Flex Slot Platinum Hot Plug Low Halogen Power Supply Kit |
| Price, $ | 34,027 | 44,525 |

At the server configuration stage, the difference between the 1-processor and 2-processor configurations is about 30%, and the simpler your server, the greater it will be. For example, if you do not install 2 TB SSDs in each server, the price gap between Intel and AMD grows to 1.5 times. Now for the most interesting part: the cost of software licenses for the servers we configured. Most server software platforms today are licensed per processor socket.

Comparison of software licensing costs for selected servers

| Software | 1 x AMD EPYC | 2 x Xeon Platinum |
|---|---|---|
| Citrix Hypervisor Standard Edition | $763 | $1,526 |
| Citrix XenServer Enterprise Edition | $1,525 | $3,050 |
| Red Hat Virtualization with Standard Support | $2,997 | $2,997 |
| Red Hat Virtualization with extended support | $4,467 | $4,467 |
| SUSE Linux Enterprise Virtual Machine Driver, Unlimited | $1,890 | $3,780 |
| VMware vSphere Standard with Basic Support | $1,847 | $3,694 |
| VMware vSphere Standard with Extended Support | $5,968 | $11,937 |
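To make the per-socket arithmetic concrete, here is a minimal sketch that adds hardware and licence costs for the two servers. The prices are copied from the tables above; pairing exactly one licensed product with each server, and the function names, are my own simplifying assumptions, so treat the output as an illustration rather than a quote.

```python
# Hardware prices (USD) copied from the server configuration table above.
HARDWARE_PRICE = {"1 x AMD EPYC": 34_027, "2 x Xeon Platinum": 44_525}
SOCKETS = {"1 x AMD EPYC": 1, "2 x Xeon Platinum": 2}

# Per-socket prices, taken from the 1-socket column of the licensing table.
LICENSE_PER_SOCKET = {
    "Citrix Hypervisor Standard Edition": 763,
    "VMware vSphere Standard with Basic Support": 1_847,
}


def total_cost(server: str, product: str) -> int:
    """Hardware price plus one licence per populated socket."""
    return HARDWARE_PRICE[server] + SOCKETS[server] * LICENSE_PER_SOCKET[product]


def saving_percent(product: str) -> float:
    """How much cheaper the 1-socket AMD server is, relative to the Intel one."""
    amd = total_cost("1 x AMD EPYC", product)
    intel = total_cost("2 x Xeon Platinum", product)
    return round(100 * (intel - amd) / intel, 1)


if __name__ == "__main__":
    for product in LICENSE_PER_SOCKET:
        print(
            product,
            total_cost("1 x AMD EPYC", product),
            total_cost("2 x Xeon Platinum", product),
            f"{saving_percent(product)}%",
        )
```

Products whose price does not depend on the socket count in the table (Red Hat Virtualization, for instance) would need a different formula, which is why they are left out of this sketch.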

And there is more. With 64-core EPYC Rome processors, one two-CPU AMD server gives you 256 threads, while an Intel server with Xeon Platinum 8276-8280 processors gives you 112. We do not consider the Xeon Platinum 9282 processors with 56 physical cores, for reasons we have analyzed elsewhere.

Looking at the presentation slides, keep in mind that in your case the performance of both Intel and AMD may differ, and the picture for EPYC (Rome) will be less rosy in single-threaded applications that depend on high clock speeds. The data security criteria, however, are exactly the same for both platforms. AMD not only has not discredited itself in this area but, on the contrary, offers SEV technology for isolating virtual machines and containers within a single host, with no need to recompile applications. We have covered this technology in detail, and we recommend reading that article. Yet despite all of the above, a feeling remains that something has been left unsaid. When it comes to AMD, ordinary system administrators and IT directors of large companies sometimes ask downright naive questions, and here we answer them.

Question # 1: Compatibility of the existing stack with AMD

Needless to say, all modern operating systems, including Windows Server, VMware ESXi, Red Hat Enterprise Linux and even FreeBSD, support AMD EPYC processors both for running applications and for virtualization. Still, some IT specialists ask: will virtual machines created on an Intel Xeon server work on AMD EPYC? Let's put it this way: if everything were simple and smooth, this article would not exist, and even though a virtual machine talks to the processor and I/O components through the hypervisor layer, plenty of questions remain. At the time of writing, compatibility with the major operating systems installed on bare metal looks like this:

| Operating system: type/version | AMD EPYC 1 (Naples) compatible | AMD EPYC 2 (Rome) compatible |
|---|---|---|
| Red Hat Enterprise Linux | 7.4.6 (Kernel 3.10) | 7.6.6 |
| Ubuntu Linux | 16.04 (Kernel 4.5) | 18.04 (Kernel 4.21) |
| Microsoft Windows Server | 2012 R2 (2013-11) | 2012 R2 * |
| VMware vSphere | 6.7 | 6.5 U3 |
| FreeBSD | - | - |

* With Microsoft the answer is straightforward: the latest Windows Server 2019 (builds released after October 2019) supports the new processors out of the box, as they say. The first-generation AMD EPYC is supported by all three versions of Windows Server without restrictions. To install Windows Server 2016 on the 64-core AMD EPYC 77x2 you must first disable SMT in the BIOS, and for Windows Server 2012 R2 you also need to disable X2APIC in the BIOS. With EPYC processors of 48 cores or fewer everything is much simpler: nothing needs to be disabled in the BIOS. But if you suddenly decide to put Windows Server 2012 on a machine with two 64-core EPYCs, remember that back in 2012 nobody imagined that a single server could hold 256 logical cores; this operating system supports only 255 logical cores, so one core is left out.
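The thread counts quoted in this article are simple products of sockets, cores and SMT, and a few lines of Python make the 255-core ceiling easy to check before you order hardware. This is only a sketch: the limit of 255 is the figure given in the text above for Windows Server 2012, and the function names are mine.

```python
def logical_processors(sockets: int, cores_per_socket: int, smt: bool = True) -> int:
    """Number of logical processors the operating system will see."""
    return sockets * cores_per_socket * (2 if smt else 1)


def fits(os_limit: int, sockets: int, cores_per_socket: int, smt: bool = True) -> bool:
    """True if the configuration stays within the OS limit on logical processors."""
    return logical_processors(sockets, cores_per_socket, smt) <= os_limit


# Limit taken from the text above for Windows Server 2012.
WS2012_LIMIT = 255

if __name__ == "__main__":
    print(logical_processors(2, 64))                # two 64-core EPYC Rome CPUs with SMT
    print(logical_processors(2, 28))                # two 28-core Xeon Platinum CPUs
    print(fits(WS2012_LIMIT, 2, 64))                # over the limit with SMT enabled
    print(fits(WS2012_LIMIT, 2, 64, smt=False))     # within the limit once SMT is off
```

The same check explains why the 48-core models cause no trouble: two of them with SMT give 192 logical processors.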

Only FreeBSD is a sad story: the latest stable release, 11.3 from July 2019, says not a word about AMD EPYC, not even the first generation. I admit I am very biased against FreeBSD: an operating system that does not support many network cards, that is updated every six months, and for which the publishers cannot produce a normal bootable .ISO image that can be written with Rufus or Balena Etcher tells me that you must have very good reasons to run FreeBSD on bare metal in 2019. I would have written this operating system off, but popular security gateways such as pfSense and OPNsense run on FreeBSD, as do storage systems with ZFS (Nexenta, FreeNAS). Does this mean you will not be able to use these distributions on bare metal? Let's check.

Testing AMD EPYC compatibility with FreeBSD-based distributions

| Operating system: type/version | AMD EPYC 7551P | AMD EPYC 7552 |
|---|---|---|
| pfSense 2.4.4 P3 | Starts up and runs | Starts up and runs |
| OPNsense 19.7 | Starts up and runs | Starts up and runs |
| FreeBSD 11.3 | Failed to install OS | Failed to install OS |
| FreeNAS 11.2 U6 | Starts up and runs | Starts up and runs |

What does "starts up" actually mean when it comes to FreeBSD? Only FreeNAS detected the Intel X550 network card integrated into the motherboard and configured it via DHCP on the fly, whereas the network gateways took a good deal of manual fiddling.

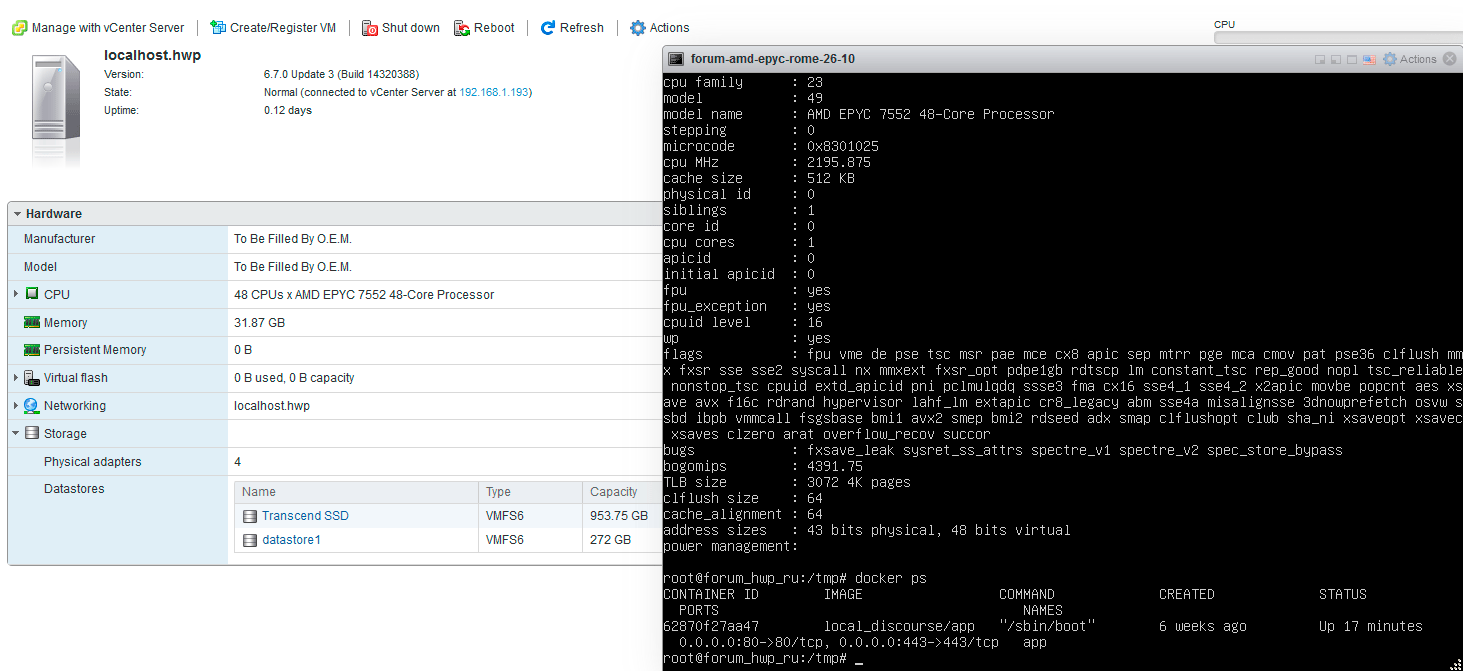

A Nexenta representative advised us in a letter not to bother with such nonsense and instead to run their virtual product, Nexenta VSA, in a virtual machine under ESXi, especially since they even have a vCenter plug-in that makes storage monitoring easier. In general, starting with version 11, FreeBSD works great under ESXi, KVM and Hyper-V. Before we move on to virtualization, let me sum up an interim result that will matter for the rest of our research: when installing an operating system on AMD EPYC 2 (Rome), use the latest builds of your operating system's distribution, compiled after September 2019.

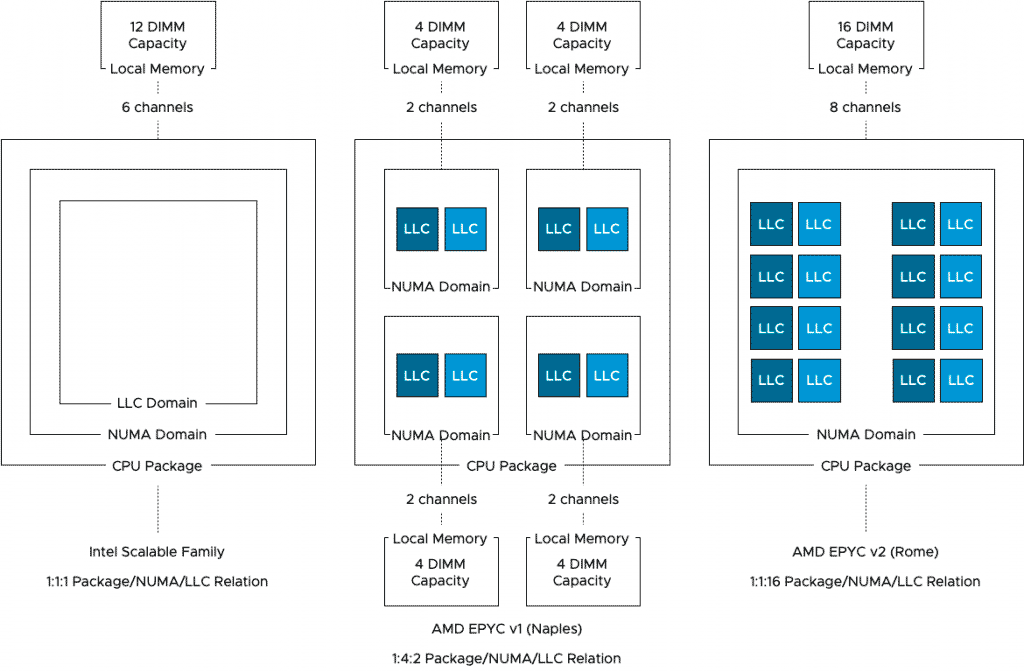

Question # 2: What about compatibility with VMware vSphere?

Since AMD EPYC is at home in the cloud, all cloud operating systems support these processors flawlessly and without limitations. But here it is not enough just to say that it “starts up and runs.” Unlike Xeons, EPYC processors use a chiplet layout. In the first generation (7001 series), the common CPU package holds four separate dies, each with its own cores and memory controller, so a virtual machine may end up using compute cores that belong to one NUMA domain while its data sits in memory modules attached to the NUMA domain of another die, which puts extra load on the interconnect inside the CPU. Software vendors therefore have to optimize their code for these architectural features. VMware, in particular, has learned to avoid such imbalances when allocating resources to virtual machines; if you are interested in the details, I recommend reading this article. Fortunately, in EPYC 2 on the Rome core these subtleties no longer apply thanks to the new layout, and each physical processor can be initialized as a single NUMA domain.
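If you want to see how many NUMA domains a given EPYC machine actually exposes, a Linux host (or guest) publishes the topology under `/sys/devices/system/node`. The sketch below reads it and checks whether a set of CPUs straddles more than one domain. The helper names and the idea of passing in a list of pinned CPUs are my own illustration; on ESXi itself the hypervisor's scheduler makes this decision for you.

```python
from pathlib import Path


def parse_cpulist(text: str) -> set:
    """Turn a kernel cpulist string such as '0-3,8-11' into a set of CPU numbers."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def numa_nodes(sys_root: str = "/sys/devices/system/node") -> dict:
    """Map each NUMA node name to its CPUs; empty if the path does not exist."""
    nodes = {}
    for node_dir in sorted(Path(sys_root).glob("node[0-9]*")):
        nodes[node_dir.name] = parse_cpulist((node_dir / "cpulist").read_text())
    return nodes


def spans_multiple_nodes(pinned_cpus, nodes: dict) -> bool:
    """True if the given CPUs belong to more than one NUMA node."""
    pinned = set(pinned_cpus)
    touched = [name for name, cpus in nodes.items() if cpus & pinned]
    return len(touched) > 1


if __name__ == "__main__":
    topology = numa_nodes()
    for name, cpus in topology.items():
        print(name, len(cpus), "CPUs")
```

On a first-generation EPYC you would expect several nodes per socket here, while a Rome system configured as described above should show one node per socket.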

Those who take an interest in AMD processors often ask: how will EPYC get along with competitors' products in a virtualized environment? After all, Nvidia still reigns supreme in machine learning, and network communications are dominated by Intel and Mellanox, which is now part of Nvidia. I want to show one screenshot with the devices available for passthrough to a virtual machine, bypassing the hypervisor. Given that AMD EPYC Rome has 128 PCI Express 4.0 lanes, you can install 8 video cards in a server and pass them through to 8 virtual machines to accelerate TensorFlow or other machine learning packages.

Let's make a short lyrical digression and set up our mini data center for machine learning with Nvidia P106-090 video cards, which have no video outputs and are designed specifically for GPU computing. Let evil tongues call it a “mining stub”; for me it is a “mini-Tesla” that copes perfectly with small models in TensorFlow. When you build a small machine learning workstation with desktop video cards, you may notice that a virtual machine with one video card starts up fine, but for the whole setup to work with two or more GPUs that are not intended for data center use, you need to change the initialization method for PCIe devices in the VMware ESXi configuration file. Enable SSH access to the host, connect as root and run

vi /etc/vmware/passthru.map

In the opened file, near the end, find

# NVIDIA

and write the following (with your device ID in place of ffff)

10de ffff d3d0 false

10de ffff d3d0 false

Then reboot the host, add the video cards to the guest operating system and power it on. Install and run Jupyter for remote access “à la Google Colab”, and make sure that training of the new model runs on two GPUs.
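If you manage several hosts, typing such lines by hand invites typos, so here is a small sketch that builds a passthru.map entry and validates its fields. The column meaning (vendor ID, device ID, reset method, shareable flag) and the list of reset methods are my recollection of the comments in the stock ESXi file, so treat them as assumptions and check the header of passthru.map on your own host; the function name is hypothetical.

```python
import re

# Reset methods as I remember them from the comments in the stock file;
# verify against the header of /etc/vmware/passthru.map on your host.
RESET_METHODS = ("flr", "d3d0", "link", "bridge", "default")
_HEX4 = re.compile(r"[0-9a-f]{4}")


def passthru_entry(vendor_id: str, device_id: str,
                   reset_method: str = "d3d0",
                   fpt_shareable: bool = False) -> str:
    """Build one passthru.map line: vendor, device, reset method, shareable flag."""
    vendor_id, device_id = vendor_id.lower(), device_id.lower()
    if not (_HEX4.fullmatch(vendor_id) and _HEX4.fullmatch(device_id)):
        raise ValueError("vendor and device IDs must be four hex digits")
    if reset_method not in RESET_METHODS:
        raise ValueError(f"unknown reset method: {reset_method}")
    return f"{vendor_id} {device_id} {reset_method} {'true' if fpt_shareable else 'false'}"


if __name__ == "__main__":
    # 10de is the Nvidia vendor ID used in the text; ffff stands in for the device ID.
    print(passthru_entry("10de", "ffff"))
```

The script only produces text; copying the line into the file and rebooting the host remain manual steps, exactly as described above.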

Once I needed to train 3 models quickly, so I launched 3 Ubuntu virtual machines and passed one GPU through to each; as a result, a single physical server trained three models simultaneously, which you cannot do with desktop video cards without virtualization. Just imagine: for one task you can use a virtual machine with 8 GPUs, and for another, 8 virtual machines with 1 GPU each. Still, you should not choose gaming video cards over professional ones: after we changed the initialization method to bridge, as soon as you shut down the Ubuntu guest OS with the passed-through video cards, it will not start again until the hypervisor is rebooted. For home or office use such a solution is tolerable, but for cloud data centers with high uptime requirements it is not.

And the pleasant surprises do not end there: since AMD EPYC is an SoC, it needs no south bridge, and the manufacturer hands over to the processor such pleasant functions as passing a SATA controller through to a virtual machine. Here you go: there are two of them, so you can leave one to the hypervisor and give the other to a virtual software-defined storage system.

Unfortunately, I can't show SR-IOV at work with a live example, and there is a reason for that. I'll save this pain for later and pour out my soul further down. This function lets you physically pass one device, such as a network card, through to several virtual machines at once: for example, the Intel X520-DA2 lets you split one network port into 16 devices, and the Intel X550 into 64. You can even pass one adapter through to the same virtual machine several times in order to work with several VLANs... Somehow, though, this feature is not very useful even in cloud environments.

Question # 3: What about the Intel-based virtual machines?

I don't like simple answers to difficult questions, so instead of just writing “it will work”, I'll make the task as hard as possible. First, the Intel host will run the U1 update, not the most recent version of the hypervisor, while the AMD machine will have ESXi 6.7 U3 installed. Second, I will use Windows Server 2016 as the guest operating system, and I am even more biased against it than against FreeBSD (remember, I voiced my disapproval above). Third, under Windows Server 2016 I will run Hyper-V using nested virtualization and install another Windows Server 2016 inside it. In effect, I will emulate a multitenant architecture in which a cloud provider rents out part of its server for a hypervisor, which can in turn be rented out or used for a VDI environment.

The difficulty here is that VMware ESXi passes the processor's virtualization functions through to Windows Server. This is somewhat like passing a GPU through to a virtual machine, except that instead of a PCI board you can use any number of CPU cores, and you don't need to reserve memory: a beauty, and nothing more to say. Of course, somewhere behind the scenes I have already moved a pfSense gateway (FreeBSD) and several Linux machines from the Intel host to EPYC, but I want to be able to tell myself: “if this construction works, then everything will work.”

Now the most important part: I install all updates on Windows Server 2016 and shut down the virtual machine from VMware VCSA. It is very important that the nested virtual machine is in the Off state: if the inner VM in Hyper-V is left in the Saved state when Windows Server shuts down, it will not start on the AMD server, and you will have to discard it with the “Delete state” button, which can lead to data loss.

Shut down the virtual machine and go to VCSA for the migration. I prefer cloning to migrating, so I copy Windows Server 2016 to the EPYC host. Once the compatibility validation has passed, you can add some processor cores to the virtual machine and make sure the nested virtualization checkboxes are enabled. The process takes a few minutes; we power on the virtual machine on the AMD server, wait for Windows to boot slowly, and start the guest operating system in Hyper-V Manager. Everything works (for more detail on why everything works, see the HPE document on the transition from Intel to AMD, available here).

And unless you consider some borderline states of container virtualization, everything is the same with Docker and Kubernetes. For illustration, I will move an Ubuntu 18.04 virtual machine that runs our forum in Docker containers. As soon as the transfer is complete, I disconnect the network on the new virtual machine and start it, waiting for everything to boot. After the Redis caches have loaded on the forum clone, I quickly turn off the network on the old virtual machine and turn it on on the new one. The downtime of the forum is therefore about 10-15 seconds, but there is a time gap between the moment copying starts and the moment the VM starts on the new host, which even between two SSDs amounts to about 5 minutes. If someone created a topic or wrote a reply during those 5 minutes, it will remain in the past, and it is useless to explain to the user that we moved from an 8-core Xeon to a 48-core EPYC: our forum, like everything else in Docker, runs on a single core, and users will not forgive us a five-minute gap.

Question # 4: What about live migration?

Both VMware and Red Hat have technologies for live migration of virtual machines between hosts. In this process the virtual machine does not stop for a second: during the transfer the hosts synchronize data until they are satisfied that there is no time gap between the state of the original and that of the clone and that the data is consistent. This technology lets you balance load between nodes within a cluster, but no matter how hard you try, virtual machines cannot be moved “live” between Intel and AMD.

VMware vSphere includes functionality for masking extended instruction sets (in effect, making them inaccessible to virtual machines), which brings all servers in a vSphere cluster to a common denominator and makes it possible to vMotion workloads between servers with processors of different generations. In theory, it is possible to mask all instructions that are not part of the AMD64 specification, which would allow virtual machines to be moved live between any servers with AMD64-compatible processors. The result of such a scenario is processors with their capabilities reduced to the minimum: all additional instruction sets (AES-NI, SSE3 and higher, AVX in any form, FMA, INVPCID, RDRAND, etc.) become inaccessible to virtual machines. This leads to a very noticeable drop in performance, or even to applications in the virtual machines on such servers failing to work. For this reason VMware vSphere does not support vMotion between servers with processors from different manufacturers. While this can technically be done in certain configurations, vMotion is officially supported only between servers with processors from the same vendor (although processors of different generations are allowed).

Roughly speaking, the point is that the processor driver passes the extended CPU instructions through to the virtual machine, and Intel and AMD have different sets of them. A running virtual machine, one might say, relies on these instructions even if it does not use them, and once started with, say, AES-NI support (Intel), it cannot have that function swapped for AES (AMD). For the same reason, live migration from newer processors to older ones may not be supported, even between Intel and Intel or AMD and AMD.

For vMotion to work between servers with different generations of processors from the same manufacturer, the EVC (Enhanced vMotion Compatibility) function is used. EVC is enabled at the server cluster level. All servers in the cluster are then automatically configured to present to virtual machines only those instruction sets that correspond to the processor type selected when EVC was turned on.

It is possible to disable the extended instructions, limiting the virtual machine's access to the CPU's capabilities, but first, this can greatly reduce the virtual machine's performance, and second, the virtual machine has to be stopped for this, its configuration file changed, and then started again. And if we can stop it anyway, why bother with this dance around configs: move it while it is powered off, and that's it.
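The "common denominator" idea is easy to picture as a set intersection. The sketch below parses the `flags` line in the format Linux prints in `/proc/cpuinfo` and shows which instruction sets each host would have to hide in order to share a baseline. The two flag strings are shortened, made-up samples for illustration only, not the real feature lists of any Xeon or EPYC, and this is not how EVC is implemented internally.

```python
def parse_flags(cpuinfo_text: str) -> set:
    """Extract the feature flags from text in /proc/cpuinfo format."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def common_baseline(*hosts: set) -> set:
    """Features present on every host: the only ones a shared baseline may expose."""
    return set.intersection(*hosts) if hosts else set()


def masked_on(host: set, baseline: set) -> set:
    """Features this host has but would have to hide from its virtual machines."""
    return host - baseline


# Shortened, illustrative samples; real flag lists are far longer and differ.
INTEL_SAMPLE = "flags\t\t: fpu sse2 aes avx avx2 avx512f invpcid rdrand"
AMD_SAMPLE = "flags\t\t: fpu sse2 aes avx avx2 rdrand sse4a clzero"

if __name__ == "__main__":
    intel, amd = parse_flags(INTEL_SAMPLE), parse_flags(AMD_SAMPLE)
    baseline = common_baseline(intel, amd)
    print("baseline:", sorted(baseline))
    print("hidden on Intel host:", sorted(masked_on(intel, baseline)))
    print("hidden on AMD host:", sorted(masked_on(amd, baseline)))
```

To try it on real machines, feed `parse_flags` the contents of `/proc/cpuinfo` from each host instead of the sample strings.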

If you dig into the KVM documentation, you might be surprised to find that it claims, although not in so many words, that migration between Intel and AMD is possible, and this question periodically pops up on the net. For example, a Reddit user claims that under Proxmox (a Debian-based hypervisor) his virtual machines were migrated “hot”. Red Hat, however, is categorical: no live migration, and that's that.

On the Red Hat Virtualization (RHV) platform this operation is technically impossible, since the extended instruction sets of Intel and AMD differ, and Intel and AMD servers cannot be added to the same RHV cluster. This limitation was introduced to maintain high performance: a virtual machine is fast precisely because it uses features of a particular processor, and these do not overlap between Intel and AMD. On other virtualization platforms migration within a cluster is theoretically possible, but it is usually not officially supported, as it deals a serious blow to performance and stability.

Of course, there are workarounds such as Carbonite. Judging by the description, the program uses virtual machine snapshots with subsequent synchronization, which lets it move VMs not only between hosts with different processors but even between clouds. The documentation says, to put it mildly, that migration achieves “almost zero downtime”, meaning the machine is still shut down. And do you know what surprises me personally? There is a complete information vacuum around the lack of live migration: nobody shouts or demands of VMware, “Give us vMotion compatibility between Intel and AMD, and don't forget ARM!” The reason is that only one category of customers needs uptime at the “five nines” level.

Question # 5: Why doesn't anyone care about question # 4?

Let's say we have a bank or an airline that has seriously decided to cut OPEX by saving on licenses and moving from Xeon E5 to EPYC 2. In such companies fault tolerance is critical, and it is achieved not only through redundancy by means of clustering but also at the application level. In the simplest case, this means some MySQL instance runs on two hosts in Master/Slave mode, while a distributed NoSQL database generally lets you drop one of the nodes without stopping its work. Here there is no problem at all in stopping the standby service, moving it wherever required and starting it again. And the larger the company and the more important fault tolerance is to it, the more flexibility its IT resources allow. That is, where IT is treated as a service, redundancy is built into the service software itself, no matter where or on what platform it runs: in Moscow on VMware or in Nicaragua on Windows.

Cloud providers are another matter entirely. For them the product is a running virtual machine with an uptime of 99.99999%, and shutting down a client's VM for the sake of moving to EPYC is nonsense. Yet they are not tearing their hair out over the lack of live migration between Intel and AMD, and the reason lies in the philosophy of building a data center.
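To get a feel for what such uptime figures mean in practice, it helps to convert them into a yearly downtime budget. The sketch below does only that arithmetic; the function name is mine and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # a non-leap year is assumed


def downtime_budget_minutes(availability_percent: float) -> float:
    """Minutes per year a service may be down and still meet the target."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999, 99.99999):
        minutes = downtime_budget_minutes(target)
        print(f"{target}%: {minutes:.4f} min/year ({minutes * 60:.1f} s)")
```

"Five nines" leaves roughly 5.26 minutes per year, so a single cold migration with the five-minute gap described earlier would eat almost the whole annual budget, and the figure quoted for cloud providers leaves only a few seconds.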

Data centers are built on the principle of “cubes” or “islands”. Say one “cube” is a compute cluster of absolutely identical servers plus the storage that serves it. Inside this cube virtual machines migrate dynamically, but the cluster never expands and VMs do not migrate from it to another cluster. Naturally, a cluster built on Intel will always stay that way; at some point it will either be upgraded (all servers replaced with new ones) or retired, with all VMs moving to another cluster. But configurations inside a “cube” are not upgraded or replaced piecemeal.

- I consider sizing based on defining a “cube” for the compute node and for the data store (storage node) to be the competent approach. A hardware platform with a certain number of CPU cores and a given amount of memory is chosen as the compute cube. Based on the characteristics of the virtualization platform, an estimate is made of how many “standard” VMs can run on one “cube”. Such “cubes” are then assembled into clusters/pools in which VMs with particular performance requirements can be placed.

- The basic principle of sizing is that all servers within a resource pool have the same capacity and, accordingly, the same cost. Virtual machines do not migrate out of the cluster, at least not while running in automatic mode.
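The estimate of how many “standard” VMs fit on one “cube” can be sketched in a few lines. Everything numeric here is an assumption for illustration: the vCPU overcommit ratio, the memory reserved for the hypervisor and the size of the standard VM are hypothetical, and only the 32 cores and 512 GB come from the EPYC 7551P configuration shown earlier in the article.

```python
from dataclasses import dataclass


@dataclass
class Cube:
    cores: int          # physical cores in the server
    memory_gb: int      # installed memory
    smt: bool = True    # two threads per core when enabled


@dataclass
class StandardVM:
    vcpus: int
    memory_gb: int


def vms_per_cube(cube: Cube, vm: StandardVM,
                 vcpu_overcommit: float = 4.0,
                 host_reserved_gb: int = 16) -> int:
    """Number of standard VMs one cube can host, limited by CPU or by memory."""
    threads = cube.cores * (2 if cube.smt else 1)
    by_cpu = int(threads * vcpu_overcommit // vm.vcpus)
    by_memory = (cube.memory_gb - host_reserved_gb) // vm.memory_gb
    return max(0, min(by_cpu, by_memory))


if __name__ == "__main__":
    cube = Cube(cores=32, memory_gb=512)        # the 1-socket EPYC 7551P server above
    vm = StandardVM(vcpus=4, memory_gb=16)      # hypothetical standard VM
    print(vms_per_cube(cube, vm))
```

With these assumed numbers memory, not CPU, is the limiting factor, which is a common outcome and one reason why a single high-core-count socket with many memory slots is attractive for such cubes.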

And if a cloud provider wants to save on licenses and simply on hardware in terms of cost per CPU core, it will just set up a separate “cube” of a dozen or two servers on AMD EPYC, configure the storage and put it into operation. So even for cloud providers it does not matter that a client's virtual machine cannot be moved live from Intel to AMD: they simply have no such task.

Bonus question: is there a fly in the ointment? We have a whole bucket

Let's be honest: all the time AMD was absent from the server market, Intel was earning the status of a bulletproof supplier whose solutions work even if you stand them on their heads. AMD was not resting on its laurels or sitting on the defensive; it simply skipped all the lessons the market went through. When managers in the smoking rooms were throwing mud at the old Opterons, AMD had nothing to answer, and the fears in our heads push us toward the idea that any problem on an AMD server is an AMD problem: even if an SSD simply died, the power went out, or a new update hung the host, you still “should have bought Intel.” We would need a Moses to lead people through the wilderness for 40 years to get rid of these prejudices, but it is too early for him to come.

Let's start with the good news. AMD always strives to give its processor sockets a long life, so unlike Intel it does not force you to throw away motherboards with every new processor generation. And although processor upgrades are not customary in the enterprise segment, this makes it possible to standardize the server fleet across planned purchases spread over time. While Dell EMC and Lenovo launched new servers for EPYC Rome without support for the first-generation EPYC 7001 (and these will apparently support the next generation, EPYC Milan), HPE, albeit with restrictions, already allows both of the first two EPYC generations to be installed in its DL325 and DL385 Gen10 servers.

From a circuit design point of view, a motherboard for AMD EPYC is just the socket(s) and slots with wiring: no south bridge, no PCI Express splitters, and the only additional chips are peripheral controllers for Ethernet, USB 3.x and the BMC. There is nothing on the motherboard to break, it is as simple as a drum, and this makes AMD's concept of a powerful single-processor server especially impressive. But if you look at the market for self-built solutions, it seems AMD just could not walk past one particular puddle. The fact is that first-generation EPYC boards for self-assembly are not always compatible with EPYC 2, and it is not a matter of DDR4-3200 memory support or the new PCIe standard. Supporting EPYC Rome requires a BIOS chip of at least 32 MB, while platforms for the first generation of EPYC quite often have 16 MB. Fortunately, replacing a flash chip is not like re-soldering a socket, and it is easy to do, but the situation itself is still unpleasant. So if you look closely, besides offering procedures for replacing BIOS chips, Supermicro and second-tier manufacturers are quietly either launching new boards and platforms or updating revisions of previously released boards.

In addition, if we open the Known Issues section of the vSphere 6.7 U3 release notes, we see two issues concerning EPYC 2 (Rome). Of course, Intel is not infallible either: the SR-IOV function with the ixgben driver (our test network card, the X520-DA2) can be buggy and cause the host to reboot. Bravo! This is not a palm-sized processor that has barely reached the market; it is a card that is 10 years old and is found in 4 out of 5 servers with 10 Gigabit networking.

For me, all of the above means that if we look at the trinity of “Intel, AMD, VMware”, there is no good boy here, and nobody can guarantee with 100% confidence that a stack working today will still work after an update, whether on Intel, AMD or Arm. And if we live in a world where every reliability issue is solved by redundancy at the application level, what difference does it make whether a company twiddled its thumbs in the server market for 10 years, or built the image of a mega-supplier that collapsed with the very first announcement of Meltdown/Spectre and keeps falling into the abyss with the wind whistling in its ears?

Conclusion: Recommendations for IT directors

For a very long time yet, experienced specialists with certificates and polished profiles will keep saying on forums, blogs and social media groups that it is too early to switch to AMD, and that whatever failures pursue Intel, it has behind it two decades of dominance in data centers, an overwhelming market share and perfect compatibility. It is good that most people follow the imposed model of behavior and do not see that the market has turned and the strategy needs to change. My friend the stock trader used to say that the more people bet on a fall, the higher the asset will shoot up, so all a competent specialist has to do is pick up a calculator and do the math.

AMD is interesting even in a simple comparison of server cost: its performance in modern multi-threaded applications lets you consolidate servers significantly and even move to single-socket machines, reducing the overall power consumption of your racks. OPEX is also worth analyzing, given the annual license fees of many applications.

AMD's marketing is ineffective, and at least in the Russian cloud market one card remains completely unplayed: the increased security of virtual machines through VM memory isolation, which we wrote about earlier. In other words, EPYC now offers benefits in both price and functionality, whether you are consolidating a data center or building a cloud from scratch.

After you have run the numbers, you of course need to test hands-on. You do not need to borrow a server for that: you can rent a physical machine from a cloud provider and deploy your applications on bare metal. When testing non-compute applications, note that in boost mode all of the AMD processor's cores run at frequencies above 3 GHz. And if you do have hardware at hand, try raising or lowering the CPU power consumption thresholds in the BIOS; this is a function that comes in handy in large data centers.

Mikhail Degtyarev (aka LIKE OFF)

11.11.2019

Artem Geniev, Business Solutions Architect

Vladimir Karagios, head of the group of architects for solutions