Configuring HP StorageWorks MSA 2000sa disk systems

How a storage system is configured largely determines its fault tolerance and performance. Administrators of the popular HP StorageWorks MSA2000sa storage systems should know some of the intricacies of building a consolidated storage environment on these disk arrays.

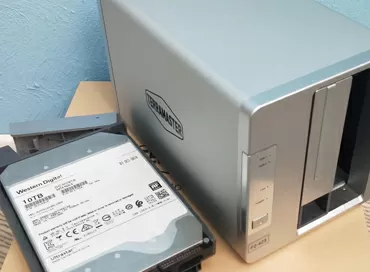

MSA2000sa disk arrays in a 2U enclosure are designed for building a consolidated storage environment in small and medium-sized businesses. They also suit second- or third-tier disk storage in remote offices and branches of large companies. As with other entry-level and midrange systems, you can buy a basic configuration with one controller and add a second controller later, or buy a dual-controller system from the start. The 12-bay main enclosure holds up to 5.4 TB with SAS drives or 12 TB with SATA drives. Adding three 12-bay expansion shelves raises capacity to 21.6 TB with SAS drives and 48 TB with SATA drives. Up to four host servers can be connected directly to one MSA2000sa system over SAS.
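The capacity figures above follow from simple arithmetic. The sketch below assumes 450 GB SAS drives and 1 TB SATA drives, which are the per-drive sizes implied by 5.4 TB and 12 TB across 12 bays. It also uses decimal terabytes. It computes raw capacity only, before any RAID overhead.

```python
# Sketch: raw (pre-RAID) capacity of an MSA2000sa system.
# Assumption: 450 GB SAS and 1 TB SATA drives (the per-drive sizes implied by
# the 5.4 TB and 12 TB figures for 12 bays) and decimal units, 1 TB = 1000 GB.
BAYS_PER_ENCLOSURE = 12
MAX_EXPANSION_SHELVES = 3
DRIVE_SIZE_GB = {"SAS": 450, "SATA": 1000}


def raw_capacity_tb(drive_type: str, expansion_shelves: int = 0) -> float:
    """Raw capacity in TB of the main enclosure plus expansion shelves."""
    if not 0 <= expansion_shelves <= MAX_EXPANSION_SHELVES:
        raise ValueError("expected 0 to 3 expansion shelves")
    drives = BAYS_PER_ENCLOSURE * (1 + expansion_shelves)
    return drives * DRIVE_SIZE_GB[drive_type] / 1000


for kind in ("SAS", "SATA"):
    print(kind, raw_capacity_tb(kind), raw_capacity_tb(kind, MAX_EXPANSION_SHELVES))
```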

How many controllers do you need?

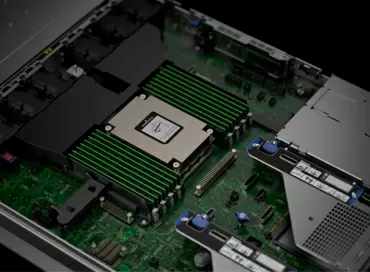

For high data availability and performance, a dual-controller configuration is preferable. In some cases, however, a single controller is entirely justified.

A dual-controller configuration provides higher application availability. If one controller fails (an extremely unlikely event), the redundant controller takes over its functions: managing RAID arrays and disk cache memory, restarting data protection services, and assigning host ports. The failed unit can then be replaced without shutting down the storage system. This configuration has an additional advantage: balancing the I/O processing load between the two controllers can increase performance.

Dual-controller configurations use cache mirroring. Write data is automatically "broadcast" to the partner controller's backup cache, which reduces both the load on the primary controller's cache and its latency. If power is lost, cached data is immediately written to the flash memory of both controllers, eliminating the risk of data loss. "Broadcast" writing thus protects data without sacrificing performance or response time.

A single-controller configuration can become a single point of failure (SPOF). One controller can serve up to two directly connected host servers at 3 Gb/s, and if it fails, those hosts lose access to the storage system.

Of course, one controller is cheaper than two. This configuration is justified where high data availability is not required and short downtime to restore access is acceptable. Such configurations are also used when redundancy is provided at the server level. One example is a two-node cluster in which each server connects to its own controller, so a controller failure takes down only the corresponding node. Another way to build a highly available storage system uses a volume manager, which maintains a mirrored copy of the data on two independent storage systems, each with one controller. If one system fails, the data is restored from the copy on the other.

Direct connection

In a Direct Attach Storage (DAS) scheme, the storage system is connected directly to the host servers. No storage switches are needed, so this approach is economical. As with the Fibre Channel MSA2000fc array, shared storage is possible, but its scale is limited by the number of external controller ports. A significant advantage of the MSA2000sa is that it supports direct connection without a switch in two layouts: four host servers with one port each, or two host servers with two ports each.

For a DAS solution, it is recommended to connect each host server through two ports.
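A direct-attach plan can be checked against the host counts above with a little arithmetic. This is a sketch under stated assumptions. It assumes two SAS host ports per controller, which is what the figures in the text imply (two hosts per controller, four per dual-controller system). It also assumes that a dual-path host uses one port on each controller, so that the second path survives a controller failure. The function name is hypothetical.

```python
# Sketch: how many hosts can be attached directly (no SAS switch)?
# Assumption: 2 host ports per controller, consistent with "up to two hosts per
# controller" and "up to four hosts per system" in the text above.
PORTS_PER_CONTROLLER = 2


def max_direct_hosts(controllers: int, paths_per_host: int) -> int:
    if controllers not in (1, 2):
        raise ValueError("MSA2000sa has one or two controllers")
    if paths_per_host not in (1, 2):
        raise ValueError("expected single-path or dual-path hosts")
    if paths_per_host > controllers:
        # assumption: a redundant second path must land on the other controller
        raise ValueError("dual-path hosts need two controllers")
    return controllers * PORTS_PER_CONTROLLER // paths_per_host


print(max_direct_hosts(1, 1))  # single controller, single-path hosts
print(max_direct_hosts(2, 1))  # dual controller, one path per host
print(max_direct_hosts(2, 2))  # dual controller, two paths per host (recommended)
```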

Virtual disks

A virtual disk (vdisk) is a group of disks combined into a RAID group, and different virtual disks can use different RAID levels. A virtual disk can contain either SATA or SAS drives, but it cannot mix the two. The HP StorageWorks MSA2000 supports up to 16 virtual disks per controller, or a maximum of 32 in a dual-controller configuration.
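The two rules above can be checked mechanically: no mixing of SAS and SATA within a virtual disk, and at most 16 virtual disks per controller. The `VDisk` record and `check_vdisks` function below are hypothetical illustrations, not part of any HP tool.

```python
from dataclasses import dataclass

MAX_VDISKS_PER_CONTROLLER = 16  # 32 in a dual-controller system


@dataclass
class VDisk:
    name: str
    drive_types: list  # e.g. ["SAS", "SAS", "SAS"]
    owner: str         # owning controller, "A" or "B"


def check_vdisks(vdisks):
    """Return a list of rule violations (empty if the layout is valid)."""
    problems = []
    for vd in vdisks:
        kinds = sorted(set(vd.drive_types))
        if len(kinds) > 1:
            problems.append(f"{vd.name}: mixes {' and '.join(kinds)} drives")
    for controller in ("A", "B"):
        owned = sum(1 for vd in vdisks if vd.owner == controller)
        if owned > MAX_VDISKS_PER_CONTROLLER:
            problems.append(
                f"controller {controller}: {owned} vdisks (max {MAX_VDISKS_PER_CONTROLLER})")
    return problems


layout = [VDisk("vd01", ["SAS"] * 4, "A"), VDisk("vd02", ["SAS", "SATA"], "B")]
print(check_vdisks(layout))
```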

When creating disk groups, it is usually better to build fewer, larger virtual disks than many small ones. In RAID 3 configurations, for example, many "small" virtual disks use space inefficiently. A single twelve-disk group has one service disk (which stores the parity) and eleven data disks. Four groups of three disks each have four service disks and only eight data disks.
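The space comparison above reduces to counting parity disks per group. The sketch below assumes one parity disk per group, as in RAID 3 or RAID 5; passing `parity_per_group=2` gives the RAID 6 case. The function name is illustrative.

```python
def data_and_parity_disks(disks_per_group: int, groups: int, parity_per_group: int = 1):
    """Return (data disks, parity disks) for `groups` parity-RAID groups.

    parity_per_group=1 models RAID 3/5; use 2 for RAID 6.
    """
    if disks_per_group <= parity_per_group:
        raise ValueError("a group needs at least one data disk")
    parity = parity_per_group * groups
    return disks_per_group * groups - parity, parity


print(data_and_parity_disks(12, 1))  # one 12-disk group
print(data_and_parity_disks(3, 4))   # four 3-disk groups
```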

A virtual disk can be larger than 2 TB, so building large parity-RAID virtual disks reduces the number of service disks. However, logical volumes larger than 2 TB may require specific operating system versions, host bus adapters (HBAs), and application support.

The MSA2000sa supports large virtual disks. RAID 0, 3, 5, 6, and 10 virtual disks can contain up to 16 drives, which gives up to 16 TB with 1 TB SATA drives. RAID 50 virtual disks can contain up to 32 drives, which gives up to 32 TB with 1 TB SATA drives.

When planning large amounts of storage, weigh carefully the advantages and disadvantages of a few large virtual disks against many smaller virtual disks with fewer drives. To improve capacity efficiency (though not performance), you can create virtual disks larger than 2 TB and divide them into multiple logical volumes of up to 2 TB each. The maximum supported virtual disk size equals the number of drives allowed for the given RAID level multiplied by the capacity of the largest drive.
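The size rule and the "carve into volumes of up to 2 TB" advice can be sketched together. This sketch makes several assumptions. The member-count limits are taken from the text. The 2 TB host limit is treated as 2000 decimal GB for simplicity. The volume split is a simple greedy one, and the function names are hypothetical.

```python
import math

# Assumptions: member-count limits from the text (16 drives for RAID 0/3/5/6/10,
# 32 for RAID 50) and a host-side limit of 2 TB per volume, in decimal GB.
MAX_MEMBERS = {0: 16, 3: 16, 5: 16, 6: 16, 10: 16, 50: 32}
HOST_VOLUME_LIMIT_GB = 2000


def max_vdisk_gb(raid_level: int, largest_drive_gb: int) -> int:
    """Maximum vdisk size as the text states the rule: member count x largest drive."""
    return MAX_MEMBERS[raid_level] * largest_drive_gb


def split_into_volumes(vdisk_gb: int, limit_gb: int = HOST_VOLUME_LIMIT_GB) -> list:
    """Carve a vdisk into as few volumes as possible, none larger than limit_gb."""
    if vdisk_gb <= 0:
        raise ValueError("vdisk size must be positive")
    count = math.ceil(vdisk_gb / limit_gb)
    sizes = [limit_gb] * (count - 1)
    sizes.append(vdisk_gb - limit_gb * (count - 1))  # the remainder goes last
    return sizes


print(max_vdisk_gb(5, 1000), max_vdisk_gb(50, 1000))
print(split_into_volumes(5000))
```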

It is best to distribute virtual disks evenly across both controllers. When each controller owns at least one virtual disk, the controllers operate in active-active mode, which makes efficient use of both controllers in an MSA2000sa. In addition, virtual disks should be striped across several disk enclosures (shelves) to prevent data loss if one enclosure fails. Depending on the number of enclosures involved, RAID 1, 10, 3, 5, and 50 virtual disks configured this way can survive the failure of an entire enclosure without data loss.
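Both recommendations above can be sketched as a small planner. It alternates virtual disk ownership between controllers A and B, and it places each virtual disk's members round-robin across enclosures. When a RAID 3 or RAID 5 virtual disk has at most one member per enclosure, losing a whole shelf costs it only one member, which it can tolerate. The planner, its slot numbering, and the round-robin policy are hypothetical illustrations, not the controller's own allocation logic.

```python
from itertools import cycle


def plan_vdisks(num_vdisks, disks_per_vdisk, enclosures, bays=12):
    """Hypothetical planner: alternate ownership between controllers A and B and
    spread each vdisk's member disks round-robin across enclosures."""
    free = {e: list(range(bays)) for e in range(enclosures)}
    plan = []
    for i in range(num_vdisks):
        if sum(len(slots) for slots in free.values()) < disks_per_vdisk:
            raise ValueError("not enough free disks")
        owner = "AB"[i % 2]  # even-numbered vdisks to A, odd to B
        members = []
        # start each vdisk on a different enclosure so no shelf fills up first
        order = cycle([(i + k) % enclosures for k in range(enclosures)])
        while len(members) < disks_per_vdisk:
            e = next(order)
            if free[e]:
                members.append((e, free[e].pop(0)))  # (enclosure, slot)
        plan.append((f"vd{i + 1:02d}", owner, members))
    return plan


def max_members_per_enclosure(members):
    counts = {}
    for enclosure, _slot in members:
        counts[enclosure] = counts.get(enclosure, 0) + 1
    return max(counts.values())


for name, owner, members in plan_vdisks(2, 4, enclosures=4):
    print(name, owner, members, max_members_per_enclosure(members))
```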

When creating a virtual disk, you can accept the default chunk size or choose the one that best suits your application. The chunk (stripe unit) size is the amount of contiguous data written to one member disk before moving on to the next. It cannot be changed after the virtual disk is created. A stripe is the set of chunks written to the same logical locations on each disk of the virtual disk. The stripe size is determined by the number of disks in the virtual disk and can change if disks are added. Valid chunk sizes are 16, 32, and 64 KB (the default is 64 KB).

For example, suppose the host server transfers data in 16 KB pieces. A 16 KB chunk is then a good choice for random access, because each host read touches exactly one disk. Random requests are therefore spread evenly across all disks, which benefits performance. With 16 KB host requests and a 64 KB chunk, some requests will hit the same disk, because each chunk contains four 16 KB pieces the host might read. This is not optimal. Conversely, with 128 KB host requests and a 64 KB chunk, every host read has to access two disks of the virtual disk.

It is recommended to set the chunk size equal to the size of the data blocks the application works with.
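The chunk-size examples above can be checked with a simple address-to-disk mapping. This is a simplified sketch. It models a plain striped (RAID 0-style) layout and ignores parity placement and rotation, so it shows only which member disks a host request touches. The function name is illustrative.

```python
def disks_touched(offset_kb: int, length_kb: int, chunk_kb: int, num_disks: int) -> set:
    """Member disks a host request touches, for a simple striped (RAID 0-style) layout.

    Simplification: parity placement and rotation are ignored.
    """
    first_chunk = offset_kb // chunk_kb
    last_chunk = (offset_kb + length_kb - 1) // chunk_kb
    return {chunk % num_disks for chunk in range(first_chunk, last_chunk + 1)}


# 16 KB requests on a 4-disk vdisk
print([disks_touched(i * 16, 16, 16, 4) for i in range(4)])  # 16 KB chunks
print([disks_touched(i * 16, 16, 64, 4) for i in range(4)])  # 64 KB chunks
print(disks_touched(0, 128, 64, 4))                          # one 128 KB request
```

With 16 KB chunks, neighbouring 16 KB regions land on different disks. With 64 KB chunks, four neighbouring 16 KB regions share one disk. A single 128 KB read over 64 KB chunks ties up two disks.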

The choice of RAID level depends on whether the configuration is optimized for fault tolerance or for performance.

If you need neither the fault tolerance nor the performance benefits of RAID, a non-redundant configuration is a reasonable choice.

Hot spare drives

When configuring virtual disks, you can assign up to four available disks as spares to a redundant virtual disk (RAID 1, 3, 5, 6, or 50). If a disk in the virtual disk fails, the controller automatically uses a spare to rebuild the virtual disk. A spare must be the same type (SAS or SATA) as the other disks in the virtual disk. It must also be large enough to replace the smallest disk in the virtual disk. If two disks in a RAID 6 virtual disk fail, two suitable spares must be available before the rebuild can start. If more than one disk fails in a RAID 50 virtual disk, the disks are rebuilt in the order of their numbering. A spare can be "global", available to any virtual disk, or "local" (dedicated), used only to rebuild a specific virtual disk.

A third option is dynamic sparing. To rebuild a failed disk in any virtual disk, the system uses any available disk that does not belong to a virtual disk.

Protection is greatest when each virtual disk has its own spare, but that is expensive. As an alternative, you can use the dynamic sparing supported by the system, or assign one or more available disks as global spares.
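The spare rules above (same drive type, large enough for the smallest member, dedicated, global, or dynamic) can be expressed as a selection function. This is a sketch under stated assumptions. The tier order is assumed to be dedicated spare, then global spare, then dynamic sparing if it is enabled. Choosing the smallest adequate disk within a tier is an invented tie-break, not documented controller behavior. The `Disk` record, slot names, and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Disk:
    slot: str
    kind: str                        # "SAS" or "SATA"
    size_gb: int
    role: str                        # "vdisk-spare", "global-spare", "available" or "member"
    spare_for: Optional[str] = None  # vdisk name, for dedicated spares only


def choose_spare(vdisk: str, member_kind: str, smallest_member_gb: int,
                 disks: List[Disk], dynamic_sparing: bool = False) -> Optional[Disk]:
    """Pick a replacement disk: dedicated spare first, then global, then dynamic."""

    def suitable(d: Disk) -> bool:
        # same drive type, and big enough to replace the smallest member disk
        return d.kind == member_kind and d.size_gb >= smallest_member_gb

    tiers = [
        [d for d in disks if d.role == "vdisk-spare" and d.spare_for == vdisk],
        [d for d in disks if d.role == "global-spare"],
        [d for d in disks if d.role == "available"] if dynamic_sparing else [],
    ]
    for tier in tiers:
        candidates = [d for d in tier if suitable(d)]
        if candidates:
            # hypothetical tie-break: use the smallest adequate disk
            return min(candidates, key=lambda d: d.size_gb)
    return None


pool = [
    Disk("1.5", "SAS", 146, "vdisk-spare", spare_for="vd01"),
    Disk("1.11", "SAS", 300, "global-spare"),
    Disk("1.12", "SATA", 1000, "global-spare"),
    Disk("2.1", "SAS", 450, "available"),
]
print(choose_spare("vd01", "SAS", 146, pool).slot)
print(choose_spare("vd02", "SAS", 300, pool).slot)
print(choose_spare("vd02", "SAS", 450, pool))
print(choose_spare("vd02", "SAS", 450, pool, dynamic_sparing=True).slot)
```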

Cache Management

Controller cache parameters affect the system's fault tolerance and performance and can be set individually for each volume. They are set with the HP StorageWorks 2000 Command Line Interface (CLI). The user must have Diagnostic rather than Standard access, which can be granted with Manage > General Config > User Configuration > Modify Users in the HP Storage Management Utility (SMU).

Setting the read-ahead buffer

The Read-ahead cache parameter determines how much data is prefetched into the buffer once two consecutive sequential reads have been detected. Read-ahead can run forward (ascending LBAs) or backward (descending LBAs). A larger read-ahead buffer can significantly improve bulk sequential (streaming) reads, but it may reduce random read performance.
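The trigger described above can be modeled as a small state machine that watches read addresses. This is a toy model, not the controller's actual detector. It assumes that "sequential" means each read starts exactly where the previous one ended (forward) or ends exactly where the previous one started (reverse). The class name is hypothetical.

```python
class SequentialDetector:
    """Toy model: report the read-ahead direction once two consecutive reads
    are contiguous (forward = ascending LBA, reverse = descending LBA)."""

    def __init__(self, threshold: int = 2):
        self.threshold = threshold  # reads in a row needed to trigger
        self.prev = None            # (start LBA, length) of the previous read
        self.direction = 0          # +1 forward, -1 reverse, 0 none
        self.run = 0                # length of the current sequential run

    def observe(self, start: int, length: int) -> int:
        """Record a read; return +1/-1 if read-ahead should fire, else 0."""
        direction = 0
        if self.prev is not None:
            prev_start, prev_len = self.prev
            if start == prev_start + prev_len:
                direction = 1   # continues upward
            elif start + length == prev_start:
                direction = -1  # continues downward
        if direction == 0:
            self.run = 1        # this read starts a new run
        elif direction == self.direction:
            self.run += 1       # the same run continues
        else:
            self.run = 2        # the previous read and this one form a new run
        self.direction = direction
        self.prev = (start, length)
        return direction if self.run >= self.threshold else 0


det = SequentialDetector()
print([det.observe(s, 8) for s in (0, 8, 16, 100, 92)])
```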

By default, the read-ahead size for the first sequential read equals one chunk, and for subsequent reads it equals one stripe. This suits most users and applications. From the controller's point of view, mirrored (RAID 1) virtual disks and volumes have a stripe size of 64 KB even though they are not striped.

Change the read-ahead settings only if you clearly understand how data moves between the operating system, the application, the adapter, and the network. Fine-tuning may require monitoring system performance with tools that collect statistics on host access to virtual disks.

The size of the Read-ahead buffer can be set as follows.

- Default. For the first sequential read, the read-ahead size equals one chunk, and for subsequent reads it equals one stripe. The chunk size is the one specified when the virtual disk was created (64 KB by default). For non-RAID and RAID 1 virtual disks, the stripe size is taken as 64 KB.

- Disabled. Turning read-ahead off is useful when the host itself triggers read-ahead for accesses that are actually random. For example, the host may split a random I/O into two smaller reads that look sequential to the controller. Statistical histograms show the nature of the host's access pattern.

- 64, 128, 256, or 512 KB; 1, 2, 4, 8, 16, or 32 MB. A fixed value sets the amount of data read ahead on the first trigger, and the same amount is read on each subsequent read-ahead.

- Maximum. The controller sets the read-ahead size for the volume dynamically. For example, if there is only one volume, about half of the cache memory is reserved for read-ahead.
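The Default, Disabled, and fixed-size rules in the list above can be expressed as one function. This is a sketch under stated assumptions. Stripe size is taken as the chunk size multiplied by the number of data disks, so parity or mirror disks are excluded. RAID 50 is not modeled. Maximum is left to the controller because it is sized dynamically. The function names are hypothetical.

```python
# Assumption: stripe = chunk x data disks; RAID 1 and non-RAID use 64 KB (per the text).
PARITY_DISKS = {0: 0, 3: 1, 5: 1, 6: 2}
FIXED_SIZES_KB = {64, 128, 256, 512} | {mb * 1024 for mb in (1, 2, 4, 8, 16, 32)}


def stripe_kb(raid_level, chunk_kb: int, disks: int) -> int:
    if raid_level in ("NRAID", 1):
        return 64                           # value the controller assumes, per the text
    if raid_level == 10:
        return chunk_kb * (disks // 2)      # half the disks hold mirror copies
    return chunk_kb * (disks - PARITY_DISKS[raid_level])  # KeyError for RAID 50 (not modeled)


def read_ahead_sizes_kb(setting, raid_level, chunk_kb: int, disks: int, count: int) -> list:
    """Read-ahead amounts (KB) for the first `count` read-ahead operations."""
    if setting == "disabled":
        return [0] * count
    if setting == "default":
        # first read-ahead: one chunk; every later one: one stripe
        stripe = stripe_kb(raid_level, chunk_kb, disks)
        return ([chunk_kb] + [stripe] * (count - 1))[:count]
    if setting == "maximum":
        raise NotImplementedError("Maximum is sized dynamically by the controller")
    if setting in FIXED_SIZES_KB:
        return [setting] * count            # same amount on every read-ahead
    raise ValueError(f"unsupported read-ahead setting: {setting!r}")


print(read_ahead_sizes_kb("default", 5, 64, 5, 3))  # 5-disk RAID 5, 64 KB chunks
print(read_ahead_sizes_kb(1024, 5, 64, 5, 3))        # fixed 1 MB
```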

Use Maximum only when host performance is critical and disk access latency must be offset by caching. Read-intensive applications, for example, benefit from keeping frequently accessed data in cache. Otherwise the controller must first locate the data on disk, move it into cache, and only then transfer it to the host.

If two or more volumes share the cache, contention can arise, and resolving it consumes controller resources. In that case, do not set the read-ahead size to Maximum.

Cache optimization can be set using the Cache Optimization parameter.

- Standard. This is the default, intended for typical applications that mix sequential and random access.

- Super Sequential. This setting slightly modifies the standard read-ahead algorithm: once cached data has been transferred to the host, its space is freed for the next read-ahead cycle. It is not optimal for random access and should be used only to speed up applications with strictly sequential access.
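The difference between the two settings can be illustrated with a toy read cache. Under Standard, a block that has been read stays cached in case it is read again. Under Super Sequential, the block's space is freed as soon as the host has it. This sketch is not the controller's algorithm. The LRU eviction policy and the class name are assumptions made for illustration.

```python
from collections import OrderedDict


class ReadAheadCache:
    """Toy read cache contrasting the two Cache Optimization settings."""

    def __init__(self, capacity_blocks: int, mode: str = "standard"):
        self.capacity = capacity_blocks
        self.mode = mode               # "standard" or "super-sequential"
        self.blocks = OrderedDict()    # block id -> None, oldest first

    def prefetch(self, block_ids):
        for block in block_ids:
            if block in self.blocks:
                self.blocks.move_to_end(block)
                continue
            if len(self.blocks) >= self.capacity:
                self.blocks.popitem(last=False)  # evict the least recently used block
            self.blocks[block] = None

    def read(self, block) -> bool:
        """Return True on a cache hit."""
        if block not in self.blocks:
            return False
        if self.mode == "super-sequential":
            del self.blocks[block]          # free space as soon as the host has the data
        else:
            self.blocks.move_to_end(block)  # keep it; it may be read again
        return True


for mode in ("standard", "super-sequential"):
    cache = ReadAheadCache(capacity_blocks=4, mode=mode)
    cache.prefetch(range(4))
    first, again = cache.read(0), cache.read(0)
    print(mode, first, again, len(cache.blocks))
```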

Setting write-back caching

With write-back caching, the controller stores data destined for disk in its cache. It then immediately reports to the host operating system that the write is complete, without waiting for the physical write. The data is held in cache until it is written to disk. Write-back caching increases write speed and controller throughput.

When write-back is disabled, the controller works in write-through mode: it first writes data to disk and only then notifies the host operating system. Write speed and controller throughput are lower, but reliability improves because the risk of data loss on power failure is reduced. Write-through does not mirror the write data in the partner controller's cache, since the data is already on disk when the write is acknowledged.
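The two policies above differ mainly in when the host receives its acknowledgement. The toy model below lists the events for one host write in order. Setting `mirror_cache=False` corresponds to the independent cache mode discussed below, in which no copy goes to the partner controller. The step names and the function are illustrative, not controller log messages.

```python
def write_steps(policy: str, mirror_cache: bool = True) -> list:
    """Order of events for one host write under each cache policy (toy model)."""
    if policy == "write-back":
        steps = ["store in local cache"]
        if mirror_cache:
            steps.append("copy to partner controller cache")
        steps += ["acknowledge host", "flush to disk later"]
        return steps
    if policy == "write-through":
        # data is on disk before the acknowledgement, so no cache mirror is needed
        return ["write to disk", "acknowledge host"]
    raise ValueError(f"unknown policy: {policy!r}")


for policy in ("write-back", "write-through"):
    print(policy, write_steps(policy))
```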

Both cache modes support automatic active-active failover if a controller fails. Write-back is enabled by default for each volume but can be disabled. Data is not lost on power failure, thanks to backup power from high-capacity capacitors. This mode suits most applications. However, the speed of cache mirroring depends on the bandwidth of the bus that connects the controllers (backend bandwidth). When writing large contiguous blocks (for example video processing, telemetry, or logging), write-through can therefore improve performance significantly, in some cases by up to 70%. If a volume is not expected to be accessed randomly, you can try disabling write-back caching on it.

Disable write-back caching only if you fully understand how data moves between the operating system, the application, and the SAS adapter. Otherwise, storage performance may suffer.

Automatic write mode selection

You can set conditions (triggers) under which the controller automatically switches from write-back to write-through mode; remember that performance may be lower in write-through mode. By default, the system should operate in write-back mode when no trigger condition is present. You can verify this with HP SMU or the command-line interface. The following options are available.

- Revert when Trigger Condition Clears. When the trigger condition clears, the system returns to write-back mode. This option is enabled by default and suits most cases.

- Notify Other Controller. In a dual-controller system, the partner controller is notified that a trigger condition has occurred. This option is disabled by default.
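The two options above can be modeled as a small state machine per controller. This is a sketch under stated assumptions. The text does not say whether a notified partner also reverts when the condition clears, so this model propagates both the trigger and the clear. Specific trigger conditions are not modeled. The class and method names are hypothetical.

```python
class WritePolicy:
    """Toy model of automatic write-policy switching for one controller."""

    def __init__(self, revert_when_clear: bool = True, notify_other: bool = False):
        self.mode = "write-back"                 # default when no trigger is active
        self.revert_when_clear = revert_when_clear
        self.notify_other = notify_other
        self.partner = None                      # the other controller, if any

    def trigger(self, notified: bool = False):
        self.mode = "write-through"              # safer while the condition persists
        if self.notify_other and self.partner and not notified:
            self.partner.trigger(notified=True)

    def clear(self, notified: bool = False):
        if self.revert_when_clear:
            self.mode = "write-back"
        # assumption: a partner that was notified of the trigger is also notified of the clear
        if self.notify_other and self.partner and not notified:
            self.partner.clear(notified=True)


a, b = WritePolicy(), WritePolicy()
a.partner, b.partner = b, a
a.trigger()
print(a.mode, b.mode)    # b unchanged: Notify Other Controller is off by default
a.notify_other = True
a.trigger()
print(a.mode, b.mode)
a.clear()
print(a.mode, b.mode)
```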

Cache data mirroring

In the default active-active mode, the caches of both controllers hold mirrored copies of data written to volumes in write-back mode. Mirroring slightly reduces performance but provides fault tolerance. If mirroring is disabled, each controller manages its cache independently in Independent Cache Performance Mode (ICPM).

The advantage of ICPM is that each controller can use write-back caching with high throughput. The data to be written is held in non-volatile memory, with high-capacity capacitors providing backup power. This mode is especially productive in high-performance computing, where processing speed matters more than the risk of losing data written to disk. Its drawback appears when one controller suffers a hardware failure: the other cannot take over (fail over) its I/O processing. Replacing the faulty controller then loses the delayed-write data in its cache.

After a software failure, or if the controller is removed from the enclosure, data should not be lost. It remains in the cache and is written to disk when the controller restarts. However, if the error cannot be cleared, ICPM data may be lost.

Data integrity can be compromised if the RAID controller fails after receiving data but before writing it to disk, so ICPM should not be used in fault-tolerant systems.

In conclusion, the choice of what to optimize for, fault tolerance or storage performance, rests with the administrator.

SAS switch

The HP StorageWorks 3Gb SAS BL Switch opens up entirely new possibilities for scalable external storage shared between HP c-Class server blades. It connects servers that have Smart Array P700m controllers to HP StorageWorks MSA2000sa external disk arrays with SAS or SATA drives. This not only reduces equipment costs but also extends functionality and delivers the required performance, fault tolerance, and reliability. It also saves time and resources during deployment, which is especially important while recovering from the international financial crisis.

External storage capacity reaches 192 TB, and the cost per unit of storage is lower than for Fibre Channel SANs (less than $3/GB). Deployment and management are also simpler, including device connection, booting from shared external drives, and target-based management. The solution provides powerful yet affordable resiliency features such as path redundancy, active/active controllers, and RAID data protection including RAID 6.

At an affordable price, this solution lets you deploy a shared, high-performance, fault-tolerant pool of external disk storage managed from the server blades. It is aimed at large organizations, at small and medium-sized businesses, and at remote offices and branches of large companies.

Configuring the MSA 2000sa

Controller cache management. In fault-tolerant systems, write-back mode is preferable. For workloads that mix sequential and random access, such as transaction processing and database updates, use the Standard optimization setting, which sets the cache block size to 32 KB. If access is strictly sequential and latency must be minimized, use the Super Sequential setting, which sets the cache block size to 128 KB. Playing back or post-processing video or audio are typical examples. The table shows settings for optimizing storage performance for different tasks.

Optimizing storage performance for different tasks

| Task type | RAID level | Read-ahead buffer size | Cache optimization |
|---|---|---|---|
| Standard | 5, 6 | Default | Standard |
| High-performance computing | 5, 6 | Maximum | Standard |
| E-mail spooling | 1 | Default | Standard |
| NFS mirroring | 1 | Default | Standard |
| Oracle DBMS, decision support | 5, 6 | Maximum | Standard |
| Oracle DBMS, transaction processing | 5, 6 | Maximum | Standard |
| Oracle DBMS, transaction processing, high availability | 10 | Maximum | Standard |
| Random access (Random 1) | 1 | Default | Standard |
| Random access (Random 5) | 5, 6 | Default | Standard |
| Sequential | 5, 6 | Maximum | Super Sequential |
| Sybase, decision support | 5, 6 | Maximum | Standard |
| Sybase, transaction processing | 5, 6 | Maximum | Standard |
| Sybase, transaction processing, high availability | 10 | Maximum | Standard |
| Streaming video | 1, 5, 6 | Maximum | Super Sequential |
| MS Exchange database | 10 | Default | Standard |
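To apply the table consistently, for example from a provisioning script, its rows can be kept as data. The sketch below does this and attaches the cache block sizes quoted above: 32 KB for Standard and 128 KB for Super Sequential. The dictionary keys and the `recommend` function are illustrative names only. Nothing here calls the MSA2000 CLI.

```python
# Rows of the table as data: task -> (RAID levels, read-ahead size, cache optimization).
PROFILES = {
    "Standard": ((5, 6), "Default", "Standard"),
    "High-performance computing": ((5, 6), "Maximum", "Standard"),
    "E-mail spooling": ((1,), "Default", "Standard"),
    "NFS mirroring": ((1,), "Default", "Standard"),
    "Oracle DBMS, decision support": ((5, 6), "Maximum", "Standard"),
    "Oracle DBMS, transaction processing": ((5, 6), "Maximum", "Standard"),
    "Oracle DBMS, transaction processing, high availability": ((10,), "Maximum", "Standard"),
    "Random access (Random 1)": ((1,), "Default", "Standard"),
    "Random access (Random 5)": ((5, 6), "Default", "Standard"),
    "Sequential": ((5, 6), "Maximum", "Super Sequential"),
    "Sybase, decision support": ((5, 6), "Maximum", "Standard"),
    "Sybase, transaction processing": ((5, 6), "Maximum", "Standard"),
    "Sybase, transaction processing, high availability": ((10,), "Maximum", "Standard"),
    "Streaming video": ((1, 5, 6), "Maximum", "Super Sequential"),
    "MS Exchange database": ((10,), "Default", "Standard"),
}
# Cache block sizes quoted in the text for each Cache Optimization setting.
CACHE_BLOCK_KB = {"Standard": 32, "Super Sequential": 128}


def recommend(task: str) -> dict:
    raid_levels, read_ahead, optimization = PROFILES[task]
    return {
        "raid_levels": raid_levels,
        "read_ahead": read_ahead,
        "cache_optimization": optimization,
        "cache_block_kb": CACHE_BLOCK_KB[optimization],
    }


print(recommend("Streaming video"))
```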

Increasing throughput. Distribute virtual disks evenly between the two controllers, and distribute the physical disks evenly between them as well. Cheaper SATA drives are best used for streaming data and e-mail systems, and SAS drives for everything else. When setting cache parameters, follow the table or the specific requirements of the application.

Increasing fault tolerance. Buy a system with two controllers and connect each host server over two paths. Use multipath I/O (MPIO) between the servers and the storage system.

Dmitry Nechaev

13.04.2009