2U high-density server review: 4 nodes, 8 AMD processors, 512 GB of memory in one case

Today, the main trend in server hardware is higher computational density, and the reason is mundane: customers want to save on infrastructure maintenance. On the one hand, the processor manufacturers Intel and AMD long ago switched from a gigahertz race to a race for the number of cores in a single physical processor package. On the other hand, server hardware manufacturers are ready to offer their customers ultra-dense solutions, using both the tried-and-tested Blade server technology and a relatively new approach: placing several server nodes in one chassis.

For those who cannot afford Blade servers or simply do not need the capabilities of a Blade infrastructure, the industry offers two physical servers in a 1U chassis. Accordingly, four physical servers fit in a 2U chassis, and they are quite powerful: dual-processor, with 16 memory slots and even one expansion slot. In other words, compared with a traditional server of the same height, the customer doubles the density (for 1U) or quadruples it (for 2U) and loses almost nothing.

Today we will look at a server assembled by Server Unit for mathematical calculations and physical modeling software. The machine is built on AMD Opteron 6174 processors, each with 12 physical cores, installed in a 4-node Supermicro 2022TG-HIBQR 2U platform. That gives a computational density of 48 cores per 1U! This 56-kilogram machine, worth $30K, visited our test bench, and I can tell you there is plenty to be surprised at. But before we continue, a short technical digression.

High Density Server Applications

Such servers let you fit more computing cores and memory into a rack, and therefore into each 1U of rack space. At the same time, their data storage capabilities are the same as or worse than those of traditional servers. While a 2U case can hold 12 3.5-inch hard disks, in high-density servers these disks are rigidly divided between the modules. And if each physical node has access to only 3 disks, RAID 6 cannot be built on them, since it requires at least four disks. Still, RAID 5 on large hard drives is quite enough for the operating system and some data, even small databases. The problem is partially solved by using SFF hard drives and external storage systems.
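The RAID limitation above can be checked with a few lines of Python. This is a minimal sketch, not a storage tool: the minimum disk counts and parity overheads are the standard ones for RAID 5 and RAID 6, while the function name and the 2 TB disk size are hypothetical and chosen only for illustration.

```python
# Standard minimum disk counts and parity overhead per RAID level.
# Format: level -> (minimum number of disks, disks' worth of parity)
RAID_RULES = {
    "RAID 5": (3, 1),
    "RAID 6": (4, 2),
}


def usable_capacity_tb(level: str, disk_count: int, disk_size_tb: float) -> float:
    """Return usable capacity, or raise ValueError if the array cannot be built."""
    min_disks, parity_disks = RAID_RULES[level]
    if disk_count < min_disks:
        raise ValueError(
            f"{level} needs at least {min_disks} disks, got {disk_count}"
        )
    return (disk_count - parity_disks) * disk_size_tb


DISKS_PER_NODE = 3      # from the review: three bays per node
DISK_SIZE_TB = 2.0      # hypothetical disk size, not from the review

for level in RAID_RULES:
    try:
        capacity = usable_capacity_tb(level, DISKS_PER_NODE, DISK_SIZE_TB)
        print(f"{level}: {capacity} TB usable on {DISKS_PER_NODE} disks")
    except ValueError as err:
        print(f"{level}: not possible ({err})")
```

With three disks per node, RAID 5 leaves two disks' worth of usable space, while the RAID 6 branch reports that the array cannot be assembled.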

By the way, the hard disks can most likely be connected only to the module's motherboard; using RAID controllers for the internal disks is impossible. Some models may support Zero Channel controllers.

Another problem with high-density servers is expansion cards. At best, you will have one half-length slot of full or half height. Manufacturers therefore try to integrate what the user needs into the board itself, for example the InfiniBand controllers used for MPI in compute clusters and supercomputers.

Compared to the traditional design, the high-density server is at an inherent disadvantage in disk subsystem performance and in expandability. The latter can become a serious obstacle to adoption in HPC, where GPU boards such as NVIDIA Tesla are increasingly used. With such boards, modular servers lose their advantages.

Compared to Blade servers, the multi-node server has not moved far from its traditional counterpart. It has no built-in switches or KVM consoles, and it is unlikely to offer advanced module management, since it lacks the very notion of a smart chassis that Blade servers have. And in computational density, Blade servers have no rivals.

High-density servers let the buyer save substantially both on the purchase (a 4-module server costs less than 4 traditional servers with the same configuration from the same manufacturer) and on operation. Even power costs are lower because, as with Blade servers, the nodes share power supplies and cooling fans. Compare: 4 traditional servers have 4 to 8 power supplies and 16 system fans, while one 2U high-density server with 4 modules has only 2 power supplies and 4 system fans.

Naturally, if you rent rack space in a data center or are simply short of space, the savings are obvious.

High-density servers are not suitable for storage systems, and a database server will require external storage. There are now models that support GPU boards like Tesla, but this is more of a tribute to fashion: only two modules with GPU boards fit in a 2U case, whereas there are already 1U cases that take two Tesla-class boards. For virtualization, web hosting or CPU-bound mathematical calculations, however, such servers are ideal. They provide significant savings both at purchase and over the life of the equipment, while not being inferior in performance to traditional servers.

4-module server platform

The server we are reviewing is built on the SuperMicro 2022TG-HIBQR platform, which was created for the new generation of AMD Opteron 6000-series processors. These are the only x86 processors to date with 12 physical cores, which makes the platform the best choice for parallel computing: you can achieve a density of 48 cores per 1U using relatively inexpensive 2-processor configurations. The SuperMicro 2022TG-HIBQR platform has four dual-processor modules, 12 3.5-inch SATA drive bays, and a 1400 W fault-tolerant power supply. Each module has an integrated 40 Gb/s QDR InfiniBand controller, which will be very useful for connecting the nodes of a future HPC cluster. In addition, each node has a PCI Express x16 expansion slot for cards up to 15 centimeters long and can hold up to 256 GB of RAM. In other words, practically nothing can stop this server :)
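The density figure follows directly from the platform layout. The short Python sketch below reproduces the arithmetic using only the numbers quoted in this review; the constant and function names are illustrative.

```python
# Figures quoted in the review; the names are illustrative only.
NODES_PER_CHASSIS = 4        # dual-processor modules in the 2022TG-HIBQR
CPUS_PER_NODE = 2
CORES_PER_CPU = 12           # AMD Opteron 6174
CHASSIS_HEIGHT_U = 2
MAX_RAM_PER_NODE_GB = 256


def cores_per_chassis(nodes: int, cpus_per_node: int, cores_per_cpu: int) -> int:
    """Total physical cores in one chassis."""
    return nodes * cpus_per_node * cores_per_cpu


def per_rack_unit(total: float, height_u: int) -> float:
    """Spread a chassis-wide total over the rack units it occupies."""
    return total / height_u


total_cores = cores_per_chassis(NODES_PER_CHASSIS, CPUS_PER_NODE, CORES_PER_CPU)
core_density = per_rack_unit(total_cores, CHASSIS_HEIGHT_U)
max_ram_gb = NODES_PER_CHASSIS * MAX_RAM_PER_NODE_GB

print(f"{total_cores} cores per chassis, {core_density:.0f} cores per 1U")
print(f"up to {max_ram_gb} GB of RAM per chassis")
```

The same two functions can be reused for other node counts or core counts when comparing platforms.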

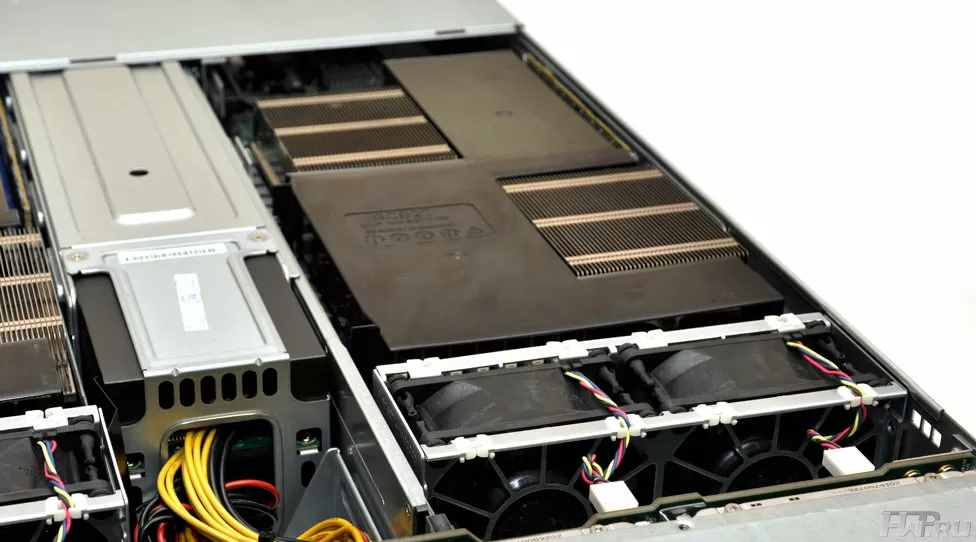

To understand what problems the manufacturer had to solve, just look at the server closely. First of all, this is a rare type of 2U case with room for 12 disks: one column of three disks for each node.

As a result, there is no space left on the front panel, not only for USB ports but even for buttons: the power switches and identification buttons are placed on the rack-mount “ears”. Admittedly, the labels on the buttons and indicators cannot be read without a microscope.

Since the entire front panel is occupied by HDD trays, we simply have to look at them more closely.

The design of the trays resembles that of Hewlett-Packard servers, except that the tray has no grille to protect the drive electronics, and the front part is a grille with low resistance to air flow. Although the latch is plastic, it is very comfortable and feels reliable.

Let's look at the server from the rear, where the power supply units and modules are pulled out. A node must be powered off before it is removed, but this does not affect the operation of the other nodes.

Each node is generously equipped with ways to communicate with the world: two USB 2.0 ports, a VGA port, a funny-looking RS232 port, three RJ45 ports and one InfiniBand port. Of the three network ports, two are Gigabit Ethernet, and the third, located above the USB ports, is for remote monitoring. If this is not enough for you, you can install an expansion card with the missing interfaces, although it is hard to imagine what could be installed here.

The machine is powered by a 1400 W power supply with 80 Plus Gold certification. Server power supplies are typically very efficient even without certification. According to the manufacturer's measurements, the efficiency of this unit ranges from 84.4% to 92.31%, an excellent result.
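To put the efficiency range in perspective, the sketch below converts a DC load into power drawn from the wall and the heat lost in the power supply. The two efficiency figures are the manufacturer's numbers quoted above; the 1000 W load is a hypothetical value, and since real efficiency varies with load, applying both figures to the same load only illustrates the bounds.

```python
def wall_power_w(dc_load_w: float, efficiency: float) -> float:
    """AC power drawn from the wall for a given DC load and PSU efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return dc_load_w / efficiency


def heat_loss_w(dc_load_w: float, efficiency: float) -> float:
    """Power dissipated as heat inside the power supply."""
    return wall_power_w(dc_load_w, efficiency) - dc_load_w


EFFICIENCY_MIN = 0.844     # lowest value reported by the manufacturer
EFFICIENCY_MAX = 0.9231    # highest value reported by the manufacturer
EXAMPLE_LOAD_W = 1000.0    # hypothetical DC load, not a measured figure

for eff in (EFFICIENCY_MIN, EFFICIENCY_MAX):
    print(
        f"efficiency {eff:.2%}: "
        f"{wall_power_w(EXAMPLE_LOAD_W, eff):.0f} W from the wall, "
        f"{heat_loss_w(EXAMPLE_LOAD_W, eff):.0f} W lost as heat"
    )
```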

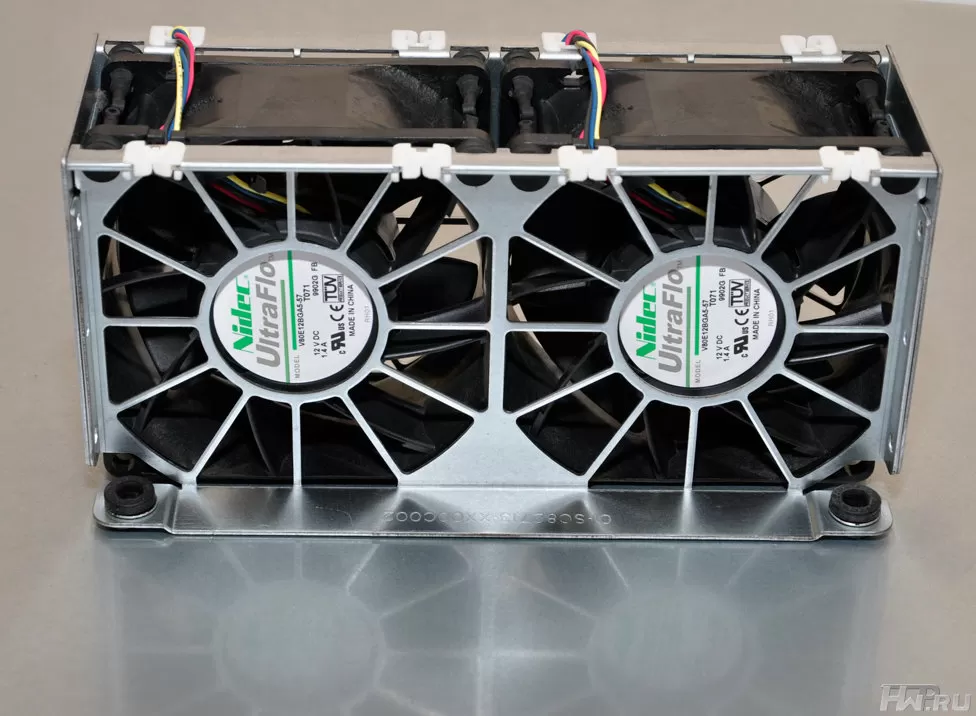

Cooling air enters the server not only through the front panel with the disks, but also through the side walls and the top cover. Each pair of modules is cooled by two 80 mm fans rated at 17 W each. Moreover, if only the two right-hand modules are turned on, the pair of fans on the left stays off to save energy.

The fans are replaced in pairs, and doing so is very inconvenient.
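The fan arrangement described above can be modeled in a few lines. This is only a sketch under stated assumptions: the node labels A to D and their mapping to the left and right fan pairs are hypothetical, and each active fan is assumed to draw its full rated 17 W, which is a worst-case figure.

```python
FAN_POWER_W = 17       # rated power per fan, from the review
FANS_PER_PAIR = 2      # two fans cool each pair of modules

# Hypothetical node labels: which fan pair cools which node.
NODE_TO_FAN_PAIR = {"A": "left", "B": "left", "C": "right", "D": "right"}


def fan_power_w(powered_nodes: set) -> int:
    """Worst-case fan power: a fan pair runs only if one of its nodes is on."""
    active_pairs = {NODE_TO_FAN_PAIR[node] for node in powered_nodes}
    return len(active_pairs) * FANS_PER_PAIR * FAN_POWER_W


print(fan_power_w({"C", "D"}))            # only the right-hand modules are on
print(fan_power_w({"A", "B", "C", "D"}))  # all four modules are on
```

Under these assumptions the fan budget halves when only one side of the chassis is populated or powered.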

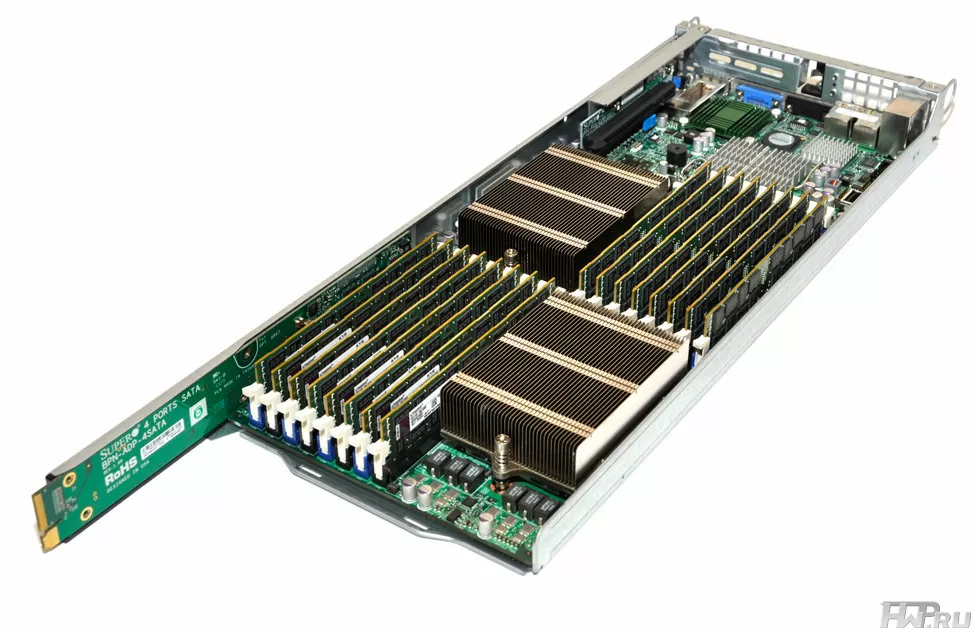

Let's see what a single node looks like. It has a strange shape, with a long "tail", which is the board that connects to the backplane. The contact group on this "tail" is interesting: interface contacts on top, large power contacts on the bottom. Judging by the marking, this is a board for connecting to a SATA backplane, which means a SAS version also exists. More surprising is that all the electronics of the module are powered through just two power contacts.

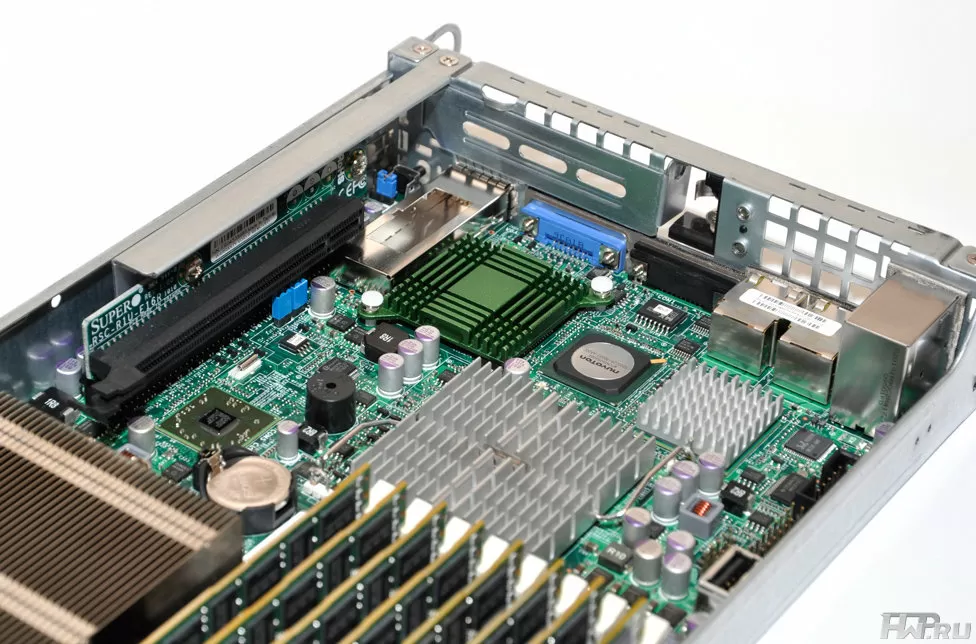

The processor sockets and memory modules are staggered, with 8 DIMM slots per CPU socket. At the rear of the module are the motherboard chipset, the InfiniBand controller, and a single expansion slot. As you can see, there is not much room for an expansion card: it fits a half-height card up to 15 cm long. For an additional network card, this may be enough. There is no room for SD cards here, but there is a USB port that can take a flash drive with an operating system or access keys. The motherboard also has headers for a bracket with USB 2.0 and RS232 ports.
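The slot count ties the memory figures in this review together. The sketch below derives the DIMM size implied by the 256 GB per-node maximum and by the 128 GB installed in each tested node. It assumes all slots are populated with identical modules, which the review does not state, and the function name is illustrative.

```python
DIMM_SLOTS_PER_SOCKET = 8    # from the review
SOCKETS_PER_NODE = 2
NODES_PER_CHASSIS = 4


def dimm_size_gb(node_ram_gb: int, slots: int) -> int:
    """DIMM size needed to reach node_ram_gb with every slot filled equally."""
    if node_ram_gb % slots != 0:
        raise ValueError("memory does not divide evenly across the slots")
    return node_ram_gb // slots


slots_per_node = DIMM_SLOTS_PER_SOCKET * SOCKETS_PER_NODE

# Assumption: all slots hold identical modules.
max_config_dimm = dimm_size_gb(256, slots_per_node)     # platform maximum
tested_config_dimm = dimm_size_gb(128, slots_per_node)  # node as tested
chassis_ram_gb = NODES_PER_CHASSIS * 128                # four tested nodes

print(f"{slots_per_node} DIMM slots per node")
print(f"{max_config_dimm} GB DIMMs for the 256 GB maximum")
print(f"{tested_config_dimm} GB DIMMs for the tested 128 GB nodes")
print(f"{chassis_ram_gb} GB in the whole chassis as tested")
```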

In general, despite its compactness, the motherboard retains a typical server layout, and even offers additional interface ports and one expansion slot. Now it remains to see how this node performs.

Remote monitoring

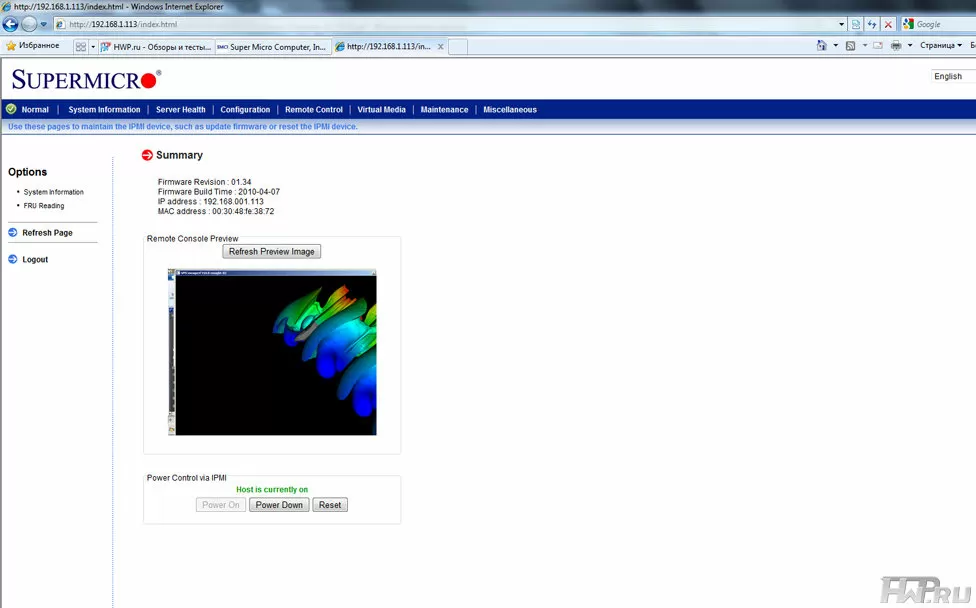

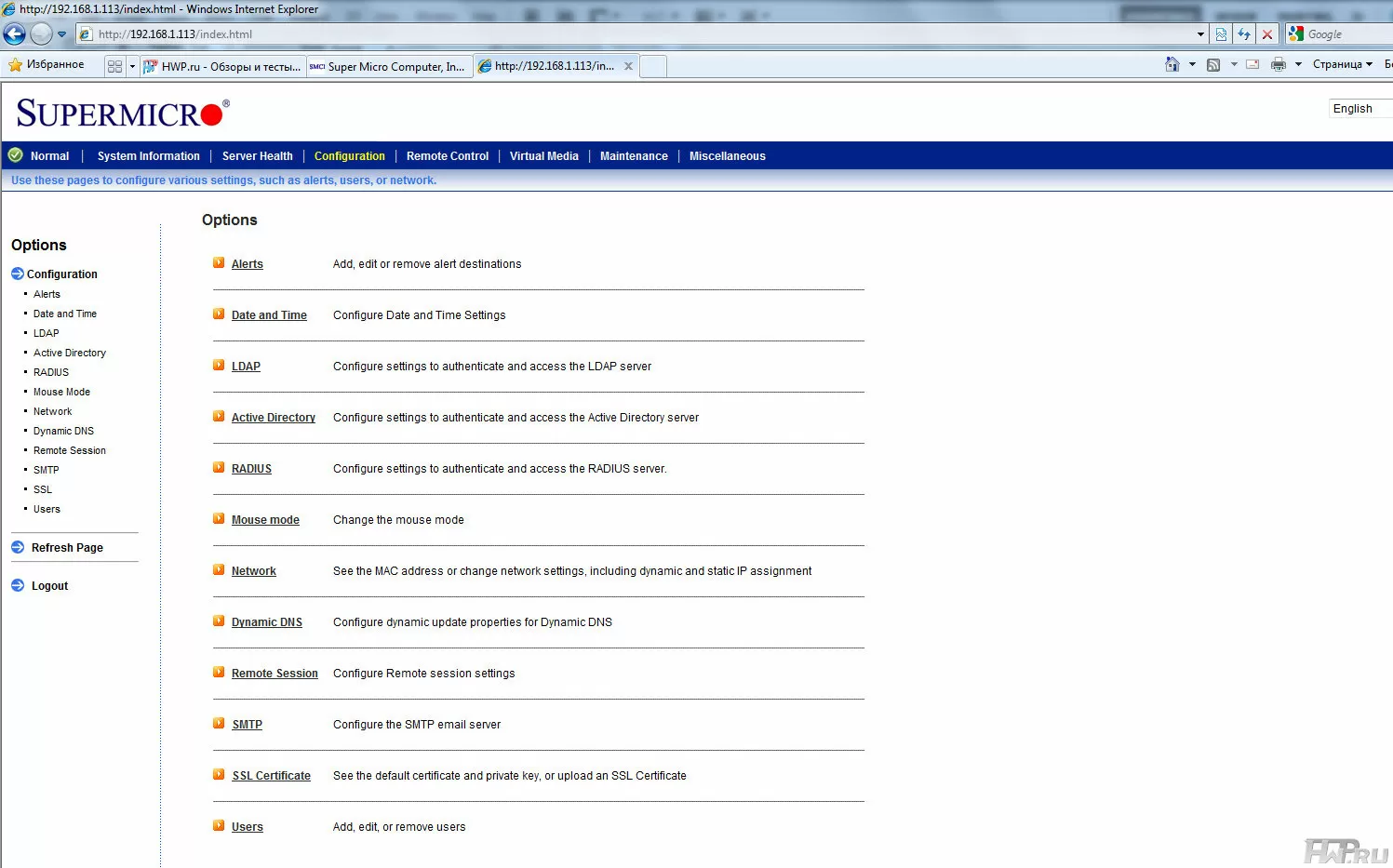

Any modern server of this class must have a functional monitoring system, and SuperMicro platforms are no exception. The user has several options for hardware monitoring, including SNMP, but monitoring via the Internet is more interesting.

To do this, install the SuperODoctor utility under Windows, after which you can access the server through a Web interface. You get a KVM console, a virtual disk, and real-time hardware monitoring data.

Unlike Dell or HP servers, however, there is no video recording of the boot process. The software is fully available only for Windows; Linux gets only a heavily stripped-down version. If you do not want to depend on the operating system, you can connect directly to the node's dedicated network port and work over HTTP, without additional software. Centralized node management is not available, though: to manage all four nodes, you connect four cables and open four browser windows. And what if there are 256 such nodes? Still, such a solution is better than nothing.

Testing

Let's test one node of the high-density server to find out what performance it can deliver in computational workloads. We start with the SiSoft Sandra desktop benchmark. We have not tested AMD servers before, so we will compare the results with Dell servers based on Nehalem and Harpertown series Xeon processors. Those servers were tested with 12 GB of memory, so the comparison is not entirely fair, because one node of our test subject has 128 GB of memory. Nevertheless, the results will be interesting.

| Model | Supermicro Node | PowerEdge 2950 III | PowerEdge R710 |
| --- | --- | --- | --- |
| Processor | 2x Opteron 6174, 12 core, 2.2 GHz, 6 MB L2 | 2x Xeon X5450 QC, 3.0 GHz, 1333 MHz FSB, 12 MB L2 | 2x Xeon X5550 QC, HT, 2.6 GHz, 8 MB L3 |
| Memory | 128 GB PC3-10600 | 12 GB PC2-5300 Fully Buffered DIMM | 12 GB PC3-10600 ECC Unbuffered |
| Disk subsystem | 1x Seagate Barracuda 7200.10, 500 GB | 4x Seagate Barracuda ES2 7200 rpm SAS 250 GB RAID 10, DELL PERC 6/i | 4x DELL 146 GB SAS 15000 rpm RAID 10, DELL PERC 6/i |
| Operating system | MS Windows Server 2008 | MS Windows Server 2008 | MS Windows Server 2008 |

Due to the difference in disk systems, we will not perform file speed tests.

There is food for thought here: more cores give a huge advantage in CPU performance tests. The AMD architecture provides a breakthrough in memory bandwidth, although it loses in latency. Given these synthetic results, we can expect a huge advantage for the AMD platform in the following tests.

MCS Benchmark

This synthetic benchmark also shows multi-core performance.

And the very first test brings disappointment: the performance is too low, comparable to previous-generation Intel processors. But maybe it's the test code itself... Let's move on to the SPEC tests.

SPEC ViewPerf x64

It would be strange to assume that anyone uses single-threaded rendering applications on such systems. Still, you never know, so we run our traditional SPEC ViewPerf test in both multi-threaded and single-threaded modes.

In this test, the AMD platform simply has no chance even against a rather old generation of Intel processors. Of course, the clock frequency and pipeline architecture of the Xeons give them an advantage. Now we turn on multithreading...

Make of it what you will, but more physical cores per node play in favor of the AMD architecture. I don't think the amount of memory matters, since even 12 GB was more than enough for this test. Note that this test uses only 4 threads. In the next SPEC test we will see how Java performs.

SpecJVM2008

This test can run in as many threads as there are cores in the system.

Of course, the AMD platform wins in most tests, but the advantage is not clear-cut. In the complex Derby and Crypto tests, for example, the situation is completely different. Looking at Scimark.small and Scimark.large, it becomes clear that speed is determined less by the number of cores and cache size than by how well the application is optimized, but in general the potential of the Magny-Cours processors is huge.

Conclusions

Our article has explained why a high-density server is more cost-effective than a regular 1U server, especially when you are building a cluster for mathematical calculations. For this purpose it is also preferable to use processors with the maximum number of physical cores, as the AMD Magny-Cours architecture clearly demonstrates.

The disadvantages of the platform include the fact that 3 SATA hard disks are rigidly assigned to each node, and the user cannot change the storage configuration of the server in any way. For cases where data must be stored on the servers' own disks, you can look at the versions with 2.5-inch hard drives. And so far, there are no options for upgrading the compute nodes.

The Supermicro 2022TG-HIBQR server platform proves more cost-effective than four comparable 1U platforms with InfiniBand, both to purchase and to operate. High power efficiency and a compute density of 48 cores per 1U make it ideal for building an HPC (High-Performance Computing) or other compute cluster. And compared to blade servers, you are not tied to a hardware manufacturer here: you can replace one server with another over time, which also increases the flexibility of your infrastructure.

Mikhail Degtyarev (aka LIKE OFF)

21.03.2011